Range Encoding

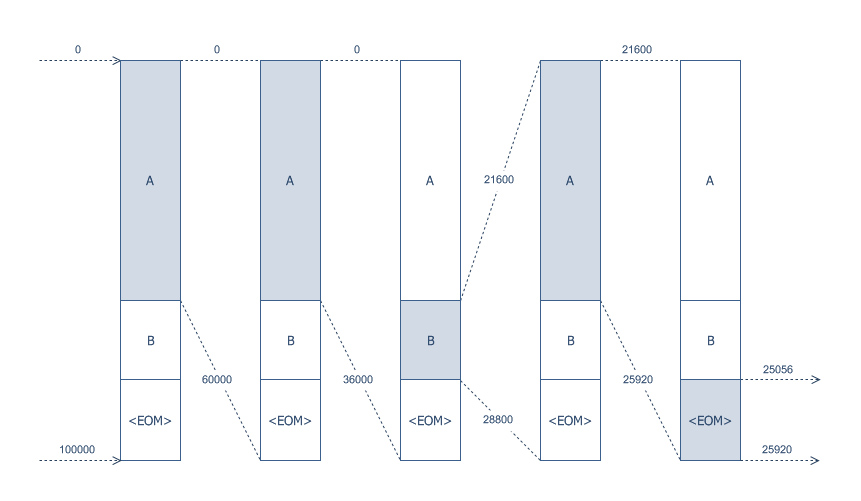

Range coding (or range encoding) is an entropy coding method defined by G. Nigel N. Martin in a 1979 paper (G. Nigel N. Martin, ''Range encoding: An algorithm for removing redundancy from a digitized message'', Video & Data Recording Conference, UK, July 24–27, 1979), which effectively rediscovered the FIFO arithmetic code first introduced by Richard Clark Pasco in 1976. Given a stream of symbols and their probabilities, a range coder produces a space-efficient stream of bits to represent these symbols and, given the stream and the probabilities, a range decoder re ...
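As a rough illustration of the idea, the sketch below narrows an integer range once per symbol and then recovers the symbols from any number inside the final range. The symbol table (`TABLE`, `TOTAL`), the `<EOM>` end marker, and the decimal starting range of 100000 are assumptions chosen for this example, not values from Martin's paper. The sketch also skips renormalization, which a practical range coder needs in order to emit digits incrementally, so it only works while the range stays larger than zero.

```python
# Hypothetical frequency table: symbol -> (cumulative start, count), out of TOTAL.
TABLE = {"A": (0, 6), "B": (6, 2), "<EOM>": (8, 2)}
TOTAL = 10


def encode(message, table, total, initial_range):
    """Narrow [low, low + rng) once per symbol; return the final (low, rng)."""
    low, rng = 0, initial_range
    for sym in message:
        start, size = table[sym]
        rng //= total          # width of one frequency slot
        low += start * rng     # skip the slots of the preceding symbols
        rng *= size            # keep only this symbol's slots
    return low, rng


def decode(code, table, total, initial_range, end_symbol):
    """Replay the narrowing, picking the symbol whose slots contain `code`."""
    low, rng, out = 0, initial_range, []
    while True:
        rng //= total
        slot = (code - low) // rng
        for sym, (start, size) in table.items():
            if start <= slot < start + size:
                break
        else:
            raise ValueError("code does not lie in any symbol's sub-range")
        low += start * rng
        rng *= size
        out.append(sym)
        if sym == end_symbol:
            return out


message = ["A", "A", "B", "A", "<EOM>"]
low, rng = encode(message, TABLE, TOTAL, 100000)
print(low, low + rng)                              # 25056 25920
print(decode(25100, TABLE, TOTAL, 100000, "<EOM>"))
```

Any integer in the final range, such as 25100, decodes to the same message, which is why an encoder only needs to transmit enough leading digits to pin down one such value.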

Entropy Coding

In information theory, an entropy coding (or entropy encoding) is any lossless data compression method that attempts to approach the lower bound declared by Shannon's source coding theorem, which states that any lossless data compression method must have an expected code length greater than or equal to the entropy of the source. More precisely, the source coding theorem states that for any source distribution, the expected code length satisfies \operatorname{E}_{x\sim P}[\ell(d(x))] \geq \operatorname{E}_{x\sim P}[-\log_b(P(x))], where \ell is the function specifying the number of symbols in a code word, d is the coding function, b is the number of symbols used to make output codes and P is the probability of the source symbol. An entropy coding attempts to approach this lower bound. Two of the most common entropy coding techniques are Huffman coding and arithmetic coding. If the approximate entropy characteristics of a data stream are known in advance (especially for signal compression), a simple ...
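The bound can be checked numerically. The following sketch compares the entropy of a distribution with the expected length of a prefix code; the two example distributions and their code word lengths are made up for illustration, and `entropy` and `expected_length` are hypothetical helper names.

```python
import math


def entropy(probs, base=2):
    """Expected value of -log_b P(x): the lower bound on expected code length."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)


def expected_length(probs, lengths):
    """Expected number of output symbols per source symbol for a given code."""
    return sum(p * l for p, l in zip(probs, lengths))


# Dyadic probabilities: the prefix code 0, 10, 110, 111 meets the bound.
dyadic = [0.5, 0.25, 0.125, 0.125]
print(entropy(dyadic), expected_length(dyadic, [1, 2, 3, 3]))

# Non-dyadic probabilities: the code 0, 10, 11 stays above the bound.
skewed = [0.4, 0.3, 0.3]
print(entropy(skewed), expected_length(skewed, [1, 2, 2]))
```

In the first case both values come out at 1.75 bits (up to floating-point rounding), while in the second the expected length of 1.6 bits exceeds the entropy of roughly 1.571 bits.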

Binary Numeral System

A binary number is a number expressed in the base-2 numeral system or binary numeral system, a method for representing numbers that uses only two symbols for the natural numbers: typically "0" (zero) and "1" (one). A ''binary number'' may also refer to a rational number that has a finite representation in the binary numeral system, that is, the quotient of an integer by a power of two. The base-2 numeral system is a positional notation with a radix of 2. Each digit is referred to as a bit, or binary digit. Because of its straightforward implementation in digital electronic circuitry using logic gates, the binary system is used by almost all modern computers and computer-based devices, which prefer it to other numeral systems for its simplicity and its noise immunity in physical implementation.

History: The modern binary number system was studied in Europe in the 16th and 17th centuries by Thomas Harrio ...
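Positional notation with radix 2 can be made concrete with a short conversion sketch. The helper names `to_binary` and `from_binary` are hypothetical; Python's built-ins `bin` and `int(s, 2)` do the same job and are used here only as a cross-check.

```python
def to_binary(n):
    """Return the base-2 digits of a non-negative integer, most significant first."""
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # lowest remaining bit
        n //= 2
    return "".join(reversed(digits)) or "0"


def from_binary(bits):
    """Evaluate a string of bits as a positional base-2 number."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)  # shift left by one place, add the new bit
    return value


print(to_binary(13))          # 1101, i.e. 8 + 4 + 0 + 1
print(from_binary("1101"))    # 13
print(to_binary(13) == bin(13)[2:], from_binary("1101") == int("1101", 2))
```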

Shannon–Fano Coding

In the field of data compression, Shannon–Fano coding, named after Claude Shannon and Robert Fano, is one of two related techniques for constructing a prefix code based on a set of symbols and their probabilities (estimated or measured).

* Shannon's method chooses a prefix code where a source symbol i is given the codeword length l_i = \lceil - \log_2 p_i\rceil. One common way of choosing the codewords uses the binary expansion of the cumulative probabilities. This method was proposed in Shannon's "A Mathematical Theory of Communication" (1948), his article introducing the field of information theory.
* Fano's method divides the source symbols into two sets ("0" and "1") with probabilities as close to 1/2 as possible. Then those sets are themselves divided in two, and so on, until each set contains only one symbol. The codeword for that symbol is the string of "0"s and "1"s that records which half of the divides it fell on. This method was proposed in a later (in print) technica ...
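Shannon's method, the first of the two, can be sketched as follows. The example assumes symbols are sorted by decreasing probability before the cumulative sums are taken, which is the usual presentation, and it uses exact fractions so that the length l_i = \lceil - \log_2 p_i\rceil is not affected by floating-point rounding. The four-symbol distribution and the function names are made up for illustration.

```python
from fractions import Fraction


def shannon_length(p):
    """Smallest l with 2**-l <= p, which equals ceil(-log2(p))."""
    l = 0
    while Fraction(1, 2 ** l) > p:
        l += 1
    return l


def shannon_code(probs):
    """Map each symbol to the first l_i bits of its cumulative probability."""
    items = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    code, cumulative = {}, Fraction(0)
    for sym, p in items:
        bits, frac = [], cumulative
        for _ in range(shannon_length(p)):
            frac *= 2
            bit = int(frac >= 1)   # next digit of the binary expansion
            bits.append(str(bit))
            frac -= bit
        code[sym] = "".join(bits)
        cumulative += p
    return code


probs = {
    "a": Fraction(2, 5),
    "b": Fraction(3, 10),
    "c": Fraction(1, 5),
    "d": Fraction(1, 10),
}
print(shannon_code(probs))
# {'a': '00', 'b': '01', 'c': '101', 'd': '1110'}
```

No codeword in the result is a prefix of another, so the output is a valid prefix code with lengths 2, 2, 3 and 4.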

Multiscale Electrophysiology Format

Multiscale Electrophysiology Format (MEF) was developed to handle the large amounts of data produced by large-scale electrophysiology in human and animal subjects. MEF can store any time series data up to 24 bits in length, and employs lossless range encoded difference compression. Subject identifying information in the file header can be encrypted using 128-bit AES encryption in order to comply with HIPAA requirements for patient privacy when transmitting data across an open network. Compressed data is stored in independent blocks to allow direct access to the data, facilitate parallel processing and limit the effects of potential damage to files. Data fidelity is ensured by a 32-bit cyclic redundancy check in each compressed data block using the Koopman polynomial (0xEB31D82E), which has a Hamming distance of 4 for blocks of up to 114 kbits. A formal specification and source code are available online. MEF_import is an EEGLAB plugin to import MEF data into EEGLAB. See also: Range encodi ...

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction, or line coding, the means for mapping data onto a sig ...

Asymmetric Numeral Systems

Asymmetric numeral systems (ANS) (J. Duda, K. Tahboub, N. J. Gadil, E. J. Delp, ''The use of asymmetric numeral systems as an accurate replacement for Huffman coding'', Picture Coding Symposium, 2015; J. Duda, ''Asymmetric numeral systems: entropy coding combining speed of Huffman coding with compression rate of arithmetic coding'', arXiv:1311.2540, 2013) is a family of entropy encoding methods introduced by Jarosław (Jarek) Duda from Jagiellonian University, used in data compression since 2014 due to improved performance compared to previous methods. ANS combines the compression ratio of arithmetic coding (which uses a nearly accurate probability distribution) with a processing cost similar to that of Huffman coding. In the tabled ANS (tANS) variant, this is achieved by constructing a finite-state machine to operate on a large alphabet without using multiplication. Among others, ANS is used in the Facebook Zstandard compressor ...

Fraction (mathematics)

A fraction (from Latin ''fractus'', "broken") represents a part of a whole or, more generally, any number of equal parts. When spoken in everyday English, a fraction describes how many parts of a certain size there are, for example, one-half, eight-fifths, three-quarters. A ''common'', ''vulgar'', or ''simple'' fraction consists of an integer numerator, displayed above a line (or before a slash), and a non-zero integer denominator, displayed below (or after) that line. If these integers are positive, then the numerator represents a number of equal parts, and the denominator indicates how many of those parts make up a unit or a whole. For example, in the fraction 3/4, the numerator 3 indicates that the fraction represents 3 equal parts, and the denominator 4 indicates that 4 parts make up a whole. Fractions can be used to represent ratios and division. Thus the fraction 3/4 can be used to ...

Arithmetic Coding

Arithmetic coding (AC) is a form of entropy encoding used in lossless data compression. Normally, a string of characters is represented using a fixed number of bits per character, as in the ASCII code. When a string is converted to arithmetic encoding, frequently used characters will be stored with fewer bits and not-so-frequently occurring characters will be stored with more bits, resulting in fewer bits used in total. Arithmetic coding differs from other forms of entropy encoding, such as Huffman coding, in that rather than separating the input into component symbols and replacing each with a code, arithmetic coding encodes the entire message into a single number, an arbitrary-precision fraction ''q'', where 0.0 ≤ ''q'' < 1.0. It represents the current information as a range, defined by two numbers. A recent family of entropy coders called asymmetric numeral systems allows for faster imp ...
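The way a whole message collapses into one fraction can be sketched with exact rational arithmetic. This is a minimal illustration, assuming a fixed three-symbol model (`PROBS`) and a decoder that is told the message length; practical coders instead use finite-precision integers and an end-of-message convention. All names are hypothetical.

```python
from fractions import Fraction

# Assumed static model: each symbol owns a slice of [0, 1) sized by its probability.
PROBS = {"a": Fraction(1, 2), "b": Fraction(1, 4), "c": Fraction(1, 4)}


def build_intervals(probs):
    """Assign each symbol the half-open interval [low, high) inside [0, 1)."""
    intervals, low = {}, Fraction(0)
    for sym, p in probs.items():
        intervals[sym] = (low, low + p)
        low += p
    return intervals


def encode(message, probs):
    """Shrink the range once per symbol; any q in [low, high) encodes the message."""
    intervals = build_intervals(probs)
    low, width = Fraction(0), Fraction(1)
    for sym in message:
        s_low, s_high = intervals[sym]
        low, width = low + width * s_low, width * (s_high - s_low)
    return low, low + width


def decode(q, length, probs):
    """Find the slice containing q, emit its symbol, rescale q, and repeat."""
    intervals = build_intervals(probs)
    out = []
    for _ in range(length):
        for sym, (s_low, s_high) in intervals.items():
            if s_low <= q < s_high:
                out.append(sym)
                q = (q - s_low) / (s_high - s_low)
                break
    return "".join(out)


low, high = encode("abac", PROBS)
print(low, high)              # 19/64 5/16
print(decode(low, 4, PROBS))  # abac
```

The final range has width 1/64, so 6 bits are enough to name a value inside it, compared with 8 bits for a fixed 2-bit-per-symbol encoding of the same four symbols.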

Probability Distribution

In probability theory and statistics, a probability distribution is a function that gives the probabilities of occurrence of possible events for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if ''X'' is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of ''X'' would take the value 0.5 (1 in 2 or 1/2) for ''X'' = heads, and 0.5 for ''X'' = tails (assuming that the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names.

Introduction: A prob ...

Southampton

Southampton is a port city and unitary authority in Hampshire, England. It is located southwest of London, west of Portsmouth, and southeast of Salisbury. Southampton had a population of 253,651 at the 2011 census, making it one of the most populous cities in southern England. Southampton forms part of the larger South Hampshire conurbation which includes the city of Portsmouth and the boroughs of Havant, Eastleigh, Fareham and Gosport. A major port, and close to the New Forest, Southampton lies at the northernmost point of Southampton Water, at the confluence of the River Test and River Itchen, with the River Hamble joining to the south. Southampton is classified as a Medium-Port City. Southampton was the departure point for the RMS ''Titanic'' and home to 500 of the people who perished on board. The Supermarine Spitfire was built in the city and Sout ...