
Randomized Trial

In science, randomized experiments are the experiments that allow the greatest reliability and validity of statistical estimates of treatment effects. Randomization-based inference is especially important in experimental design and in survey sampling.

Overview: In the statistical theory of design of experiments, randomization involves randomly allocating the experimental units across the treatment groups. For example, if an experiment compares a new drug against a standard drug, then the patients should be allocated to either the new drug or the standard drug control using randomization. Randomized experimentation is ''not'' haphazard. Randomization reduces bias by equalising other factors that have not been explicitly accounted for in the experimental design (according to the law of large numbers). Randomization also produces ignorable designs, which are valuable in model-based statistical inference, especially Bayesian or likelihood-based. In the design of experiments, th ...
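The allocation step described above can be sketched in a few lines. This is a minimal illustration of complete randomization, assuming ten hypothetical patients and two arms; the function name `randomize`, the arm labels, and the seed are illustrative choices and do not come from the source.

```python
import random


def randomize(units, arms=("new drug", "standard drug"), seed=None):
    """Shuffle the units, then deal them to the arms in turn.

    Dealing after a shuffle keeps the arm sizes balanced (they differ by
    at most one) while leaving each unit's arm to chance.
    """
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    shuffled = list(units)
    rng.shuffle(shuffled)
    allocation = {arm: [] for arm in arms}
    for i, unit in enumerate(shuffled):
        allocation[arms[i % len(arms)]].append(unit)
    return allocation


# Hypothetical patient identifiers, for illustration only.
patients = [f"patient-{i:02d}" for i in range(1, 11)]
for arm, members in randomize(patients, seed=2024).items():
    print(arm, sorted(members))
```

Recording the seed is one way to make an allocation auditable afterwards; real trials typically use dedicated allocation procedures rather than an ad hoc script like this one.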

Flowchart Of Phases Of Parallel Randomized Trial - Modified From CONSORT 2010

A flowchart is a type of diagram that represents a workflow or process. A flowchart can also be defined as a diagrammatic representation of an algorithm, a step-by-step approach to solving a task. The flowchart shows the steps as boxes of various kinds, and their order by connecting the boxes with arrows. This diagrammatic representation illustrates a solution model to a given problem. Flowcharts are used in analyzing, designing, documenting or managing a process or program in various fields.

* ''Document flowcharts'', showing controls over a document-flow through a system
* ''Data flowcharts'', showing controls over a data-flow in a system
* ''System flowcharts'', showing controls at a physical or resource level
* ''Program flowchart'', showing the controls in a program within a system

Notice that every type of flowchart focuses on some kind of control, rather than on the particular flow itself. However, there are some different classifications. For example, Andrew Veronis ...

Clinical Equipoise

Clinical equipoise, also known as the principle of equipoise, provides the ethical basis for medical research that involves assigning patients to different treatment arms of a clinical trial. The term was proposed by Benjamin Freedman in 1987 in response to "controversy in the clinical community" to define an ethical situation of “genuine uncertainty within the expert medical community… about the preferred treatment.” This also applies to off-label treatments performed before or during their required clinical trials. An ethical dilemma arises in a clinical trial when the investigators begin to believe that the treatment or intervention administered in one arm of the trial is significantly outperforming the other arms. A trial should begin with a null hypothesis, and there should exist no decisive empirical evidence that the intervention or drug being tested will be superior to existing treatments, or that it will be completely ineffective. As the trial progresses, ...

Statistical Hypothesis Testing

A statistical hypothesis test is a method of statistical inference used to decide whether the data provide sufficient evidence to reject a particular hypothesis. A statistical hypothesis test typically involves a calculation of a test statistic. Then a decision is made, either by comparing the test statistic to a critical value or, equivalently, by evaluating a ''p''-value computed from the test statistic. Roughly 100 specialized statistical tests are in use and noteworthy.

History: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

Choice of null hypothesis: Paul Meehl has argued that the epistemological importance of the choice of null hypothesis has gone largely unacknowledged. When the null hypothesis is predicted by the ...
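The test-statistic-then-decision procedure described above can be made concrete with an exact binomial test, in the spirit of the sex-ratio analyses mentioned in the history. The sketch below assumes made-up data (16 of 20 years with more male than female births) and a conventional significance level of 0.05; the function names are illustrative, and none of the numbers come from the source.

```python
from math import comb


def binomial_p_value(successes, trials, p0=0.5):
    """One-sided exact p-value: P(X >= successes) for X ~ Binomial(trials, p0)."""
    return sum(
        comb(trials, k) * p0**k * (1 - p0) ** (trials - k)
        for k in range(successes, trials + 1)
    )


def decide(p_value, alpha=0.05):
    """Compare the p-value with the chosen significance level alpha."""
    return "reject H0" if p_value < alpha else "fail to reject H0"


# Hypothetical data: male births exceeded female births in 16 of 20 years.
# H0: each year is equally likely to go either way (p0 = 0.5).
p = binomial_p_value(16, 20)
print(f"p-value = {p:.4f} -> {decide(p)}")
```

Here the test statistic is simply the count of years, and the p-value is the probability of a count at least that large if the null hypothesis were true.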

Student's T-test

Student's ''t''-test is a statistical test used to test whether the difference between the responses of two groups is statistically significant. It is any statistical hypothesis test in which the test statistic follows a Student's ''t''-distribution under the null hypothesis. It is most commonly applied when the test statistic would follow a normal distribution if the value of a scaling term in the test statistic were known (typically, the scaling term is unknown and is therefore a nuisance parameter). When the scaling term is estimated from the data, the test statistic, under certain conditions, follows a Student's ''t''-distribution. The ''t''-test's most common application is to test whether the means of two populations are significantly different. In many cases, a ''Z''-test will yield very similar results to a ''t''-test because the latter converges to the former as the size of the dataset increases.

History: The term "''t''-statistic" is abbreviated from " ...
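As an illustration of the two-sample case, the sketch below computes the pooled-variance ''t'' statistic, where the estimated scaling term is the pooled standard error of the difference in means. It assumes equal population variances and uses made-up measurements; the function name `pooled_t_statistic` is illustrative. Turning the statistic into a ''p''-value needs the ''t''-distribution, which the Python standard library does not provide, so that step is left out.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(a, b):
    """Two-sample t statistic with pooled variance, plus its degrees of freedom."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    # Pooled estimate of the common variance (the unknown scaling term).
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    standard_error = sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / standard_error, df


# Made-up responses for two groups, for illustration only.
new_drug = [5.1, 4.9, 6.2, 5.8, 6.0]
standard = [4.2, 4.8, 5.0, 4.4, 4.9]
t, df = pooled_t_statistic(new_drug, standard)
print(f"t = {t:.3f} with {df} degrees of freedom")
```

If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=True)` performs the same test and also returns the ''p''-value.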

ANOVA

Analysis of variance (ANOVA) is a family of statistical methods used to compare the means of two or more groups by analyzing variance. Specifically, ANOVA compares the amount of variation ''between'' the group means to the amount of variation ''within'' each group. If the between-group variation is substantially larger than the within-group variation, it suggests that the group means are likely different. This comparison is done using an F-test. The underlying principle of ANOVA is based on the law of total variance, which states that the total variance in a dataset can be broken down into components attributable to different sources. In the case of ANOVA, these sources are the variation between groups and the variation within groups. ANOVA was developed by the statistician Ronald Fisher. In its simplest form, it provides a statistical test of whether two or more population means are equal, and therefore generalizes the ''t''-test beyond two means.

History: While the analysis ...
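The between-versus-within comparison can be written out directly. The following is a minimal sketch of the one-way ANOVA F statistic on made-up data; the function name `one_way_anova_f` is illustrative, and the ''p''-value lookup against the F-distribution is omitted because the Python standard library does not include it.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of numbers."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Made-up data for three groups, for illustration only.
f, df_b, df_w = one_way_anova_f([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
print(f"F = {f:.2f} on ({df_b}, {df_w}) degrees of freedom")
```

With exactly two groups, F equals the square of the pooled-variance ''t'' statistic, which is the sense in which ANOVA generalizes the ''t''-test.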

Rubin Causal Model

The Rubin causal model (RCM), also known as the Neyman–Rubin causal model, is an approach to the statistical analysis of cause and effect based on the framework of potential outcomes, named after Donald Rubin. The name "Rubin causal model" was first coined by Paul W. Holland. The potential outcomes framework was first proposed by Jerzy Neyman in his 1923 Master's thesis (Neyman, Jerzy. ''Sur les applications de la theorie des probabilites aux experiences agricoles: Essai des principes.'' Master's Thesis (1923). Excerpts reprinted in English, Statistical Science, Vol. 5, pp. 463–472; D. M. Dabrowska and T. P. Speed, translators), though he discussed it only in the context of completely randomized experiments. Rubin extended it into a general framework for thinking about causation in both observational and experimental studies.

Introduction: The Rubin causal model is based on the idea of potential outcomes. For example, a person would have a particular income at age 4 ...
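A small simulation can illustrate the potential-outcomes idea in a completely randomized experiment. The sketch below is entirely hypothetical: it invents both potential outcomes for every unit (something never observable in practice), assumes a constant treatment effect of 5, and then shows that the difference in observed group means approximates the average treatment effect. The function name `simulate_trial` and all numbers are assumptions made for illustration.

```python
import random
from statistics import mean


def simulate_trial(n_units=10_000, effect=5.0, seed=0):
    """Return (true average effect, difference-in-means estimate)."""
    rng = random.Random(seed)
    # Each unit has two potential outcomes: y0 (untreated) and y1 (treated).
    units = []
    for _ in range(n_units):
        y0 = rng.gauss(50, 10)
        units.append((y0, y0 + effect))  # assumed constant unit-level effect
    true_ate = mean(y1 - y0 for y0, y1 in units)

    # Random assignment reveals exactly one potential outcome per unit.
    treated, control = [], []
    for y0, y1 in units:
        if rng.random() < 0.5:
            treated.append(y1)
        else:
            control.append(y0)
    return true_ate, mean(treated) - mean(control)


true_ate, estimate = simulate_trial()
print(f"true effect = {true_ate:.2f}, estimate = {estimate:.2f}")
```

Because assignment is random, the treated and control groups are comparable on average, so the estimate differs from the true effect only by sampling noise.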

Statistical Methods For Research Workers

''Statistical Methods for Research Workers'' is a classic book on statistics, written by the statistician R. A. Fisher. It is considered by some to be one of the 20th century's most influential books on statistical methods, together with his ''The Design of Experiments'' (1935). It was originally published in 1925, by Oliver & Boyd (Edinburgh); the final and posthumous 14th edition was published in 1970. The impulse to write a book on the statistical methodology he had developed came not from Fisher himself but from D. Ward Cutler, one of the two editors of a series of "Biological Monographs and Manuals" being published by Oliver & Boyd.

Reviews: According to Denis Conniffe: Ronald A. Fisher was "interested in application and in the popularization of statistical methods and his early book ''Statistical Methods for Research Workers'', published in 1925, went through many editions and motivated and influenced the practical use of statistics in many fields of study. His ''Design ...

Isis (journal)

''Isis'' is a quarterly peer-reviewed academic journal published by the University of Chicago Press for the History of Science Society. It covers the history of science, history of medicine, and the history of technology, as well as their cultural influences. It contains original research articles and extensive book reviews and review essays. Furthermore, sections devoted to one particular topic are published in each issue in open access. These sections consist of the Focus section, the Viewpoint section and the Second Look section.

History: The journal was established by George Sarton and the first issue appeared in March 1913. Contributions were originally in any of four European languages (English, French, German, and Italian), but since the 1920s, only English has been used. Publication is partly supported by an endowment from the Dibner Fund. Two associated publications are ''Osiris'' (established 1936 by Sarton) and the ''Isis Current Bibliography''. The publication o ...

Joseph Jastrow

Joseph Jastrow (January 30, 1863 – January 8, 1944) was a Polish-born American psychologist renowned for his contributions to experimental psychology, design of experiments, and psychophysics. He also worked on the phenomena of optical illusions, and a number of well-known optical illusions (notably the Jastrow illusion) were either first reported in or popularized by his work. Jastrow believed that everyone had their own, often incorrect, preconceptions about psychology. One of his ultimate goals was to use the scientific method to separate truth from error and to educate the general public, which Jastrow accomplished through speaking tours, popular print media, and the radio.

Biography: Jastrow was born in Warsaw, Congress Poland. A son of Talmud scholar Marcus Jastrow, Joseph Jastrow was the younger brother of the orientalist Morris Jastrow, Jr. Joseph Jastrow came to Philadelphia in 1866 and received his bachelor ...

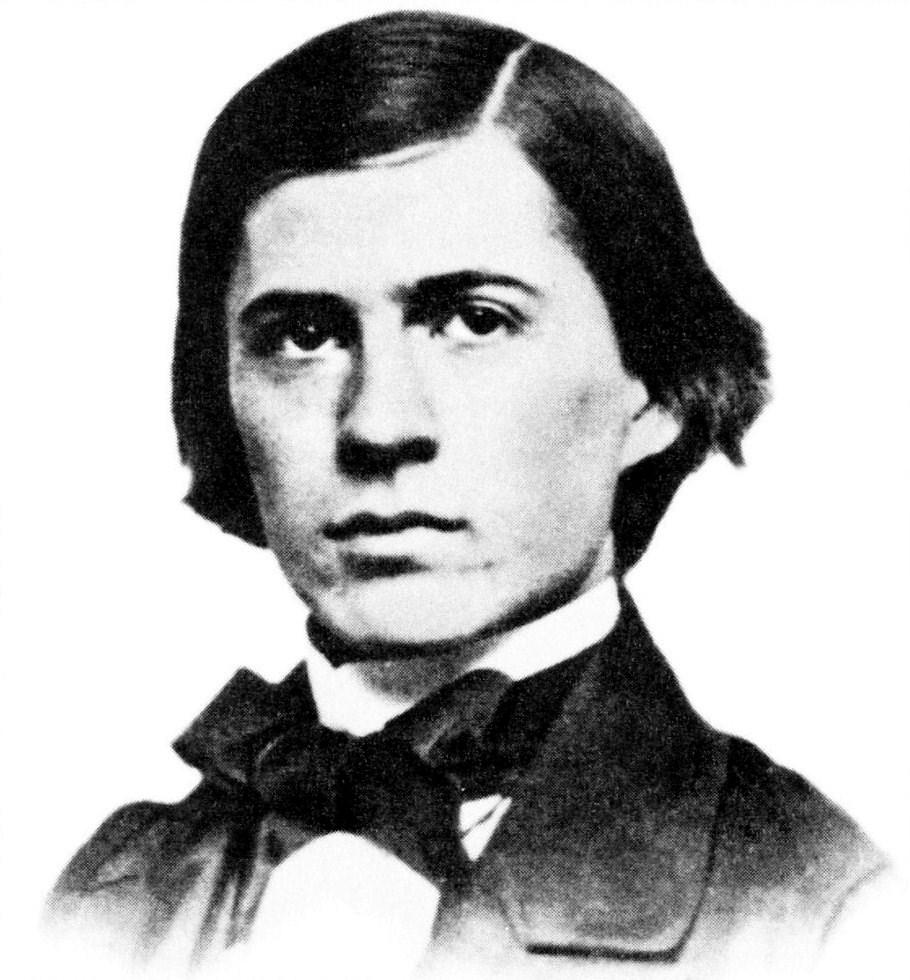

Charles Sanders Peirce

Charles Sanders Peirce (September 10, 1839 – April 19, 1914) was an American scientist, mathematician, logician, and philosopher who is sometimes known as "the father of pragmatism". According to philosopher Paul Weiss, Peirce was "the most original and versatile of America's philosophers and America's greatest logician". Bertrand Russell wrote "he was one of the most original minds of the later nineteenth century and certainly the greatest American thinker ever". Educated as a chemist and employed as a scientist for thirty years, Peirce meanwhile made major contributions to logic, such as theories of relations and quantification. C. I. Lewis wrote, "The contributions of C. S. Peirce to symbolic logic are more numerous and varied than those of any other writer—at least in the nineteenth century." For Peirce, logic also encompassed much of what is now called epistemology and the philoso ...

Vegetarian Cuisine

Vegetarian cuisine is based on food that meets vegetarian standards by not including meat and animal tissue products (such as gelatin or animal-derived rennet).

Common vegetarian foods: Vegetarian cuisine includes consumption of foods containing vegetable protein, vitamin B12, and other nutrients. Food regarded as suitable for all vegetarians (including vegans) typically includes:

* Cereals/grains: barley, buckwheat, corn, fonio, hempseed, maize, millet, oats, quinoa, rice, rye, sorghum, triticale, wheat; derived products such as flour (dough, bread, baked goods, cornflakes, dumplings, granola, muesli, pasta etc.).
* Vegetables (fresh, canned, frozen, pureed, dried or pickled); derived products such as vegetable sauces like chili sauce and vegetable oils.
* Edible fungi (fresh, canned, dried or pickled). Edible fungi include some mushrooms and cultured microfungi which can be involved in fermentation of food (yeasts and moulds) such as ''Aspergillus oryzae'' and ''Fusarium ...