
Partial Identification

In statistics and econometrics, set identification (or partial identification) extends the concept of identifiability (or "point identification") in statistical models to environments where the model and the distribution of observable variables are not sufficient to determine a unique value for the model parameters, but instead constrain the parameters to lie in a strict subset of the parameter space. Statistical models that are set (or partially) identified arise in a variety of settings in economics, including game theory and the Rubin causal model. Unlike approaches that deliver point identification of the model parameters, methods from the literature on partial identification are used to obtain set estimates that are valid under weaker modelling assumptions.

Early works containing the main ideas of set identification included [...] and [...]. However, the methods were significantly developed and promoted by Charles Manski, beginning with [...] and [...]. Partial identification contin ...
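A standard illustration of a partially identified parameter is the mean of a bounded outcome when some outcomes are missing and nothing is assumed about why they are missing. The sketch below computes the worst-case bounds commonly associated with Manski's work. The function name `manski_bounds`, the use of `None` for a missing value, and the assumption that every outcome lies in a known interval `[y_min, y_max]` are illustrative choices, not taken from the text above. The data then identify only an interval for E[Y], not a single value.

```python
def manski_bounds(values, y_min=0.0, y_max=1.0):
    """Worst-case bounds on E[Y] when some outcomes are missing.

    Hypothetical helper: `values` is a list where None marks a missing
    outcome, and every outcome (observed or not) is ASSUMED to lie in
    [y_min, y_max]. Nothing is assumed about why values are missing.
    """
    n = len(values)
    if n == 0:
        raise ValueError("need at least one unit")
    observed = [v for v in values if v is not None]
    n_missing = n - len(observed)
    # Law of total expectation:
    #   E[Y] = E[Y | observed] P(observed) + E[Y | missing] P(missing).
    # The first term is estimable from the data and equals sum(observed) / n.
    known_part = sum(observed) / n
    p_missing = n_missing / n
    # E[Y | missing] may be anything in [y_min, y_max], so E[Y] is only
    # set identified: it lies in an interval rather than at a point.
    lower = known_part + y_min * p_missing
    upper = known_part + y_max * p_missing
    return lower, upper


# Binary outcome with 3 of 10 values missing.
data = [1, 0, 1, 1, None, None, 0, 1, None, 1]
print(manski_bounds(data))  # approximately (0.5, 0.8)
```

The width of the interval is (y_max − y_min) times the share of missing values, so it collapses to a single point, and the mean becomes point identified, only when nothing is missing.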

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Law Of Total Probability

In probability theory, the law (or formula) of total probability is a fundamental rule relating marginal probabilities to conditional probabilities. It expresses the total probability of an outcome which can be realized via several distinct events, hence the name.

The law of total probability is a theorem (Zwillinger, D., Kokoska, S. (2000) *CRC Standard Probability and Statistics Tables and Formulae*, CRC Press, p. 31) that states, in its discrete case, that if $\{B_n : n = 1, 2, 3, \ldots\}$ is a finite or countably infinite set of mutually exclusive and collectively exhaustive events, then for any event $A$

$$P(A) = \sum_n P(A \cap B_n)$$

or, alternatively,

$$P(A) = \sum_n P(A \mid B_n)\,P(B_n),$$

where any term with $P(B_n) = 0$ is simply omitted from the second summation: $P(A \mid B_n)$ is then undefined, but the corresponding term $P(A \cap B_n) \le P(B_n) = 0$ contributes nothing. The summation can be interpreted as a weighted average, and consequently the marginal probability, $P(A)$, is sometimes called "average probability"; "overall probability" is sometimes used i ...
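The second form of the law is a weighted average of conditional probabilities, which is easy to compute directly. The sketch below uses Python's `fractions.Fraction` for exact arithmetic; the function name `total_probability` and the three-machine defect example are hypothetical, chosen only to illustrate the formula for a finite partition.

```python
import math
from fractions import Fraction


def total_probability(p_a_given_b, p_b):
    """P(A) = sum_n P(A | B_n) P(B_n) for a finite partition {B_n}.

    p_a_given_b[n] is P(A | B_n) and p_b[n] is P(B_n). Fractions keep the
    arithmetic exact; plain floats also work.
    """
    if len(p_a_given_b) != len(p_b):
        raise ValueError("need one conditional probability per event B_n")
    if not math.isclose(float(sum(p_b)), 1.0):
        raise ValueError("P(B_n) must sum to 1 for a partition")
    total = Fraction(0)
    for pa, pb in zip(p_a_given_b, p_b):
        if pb == 0:
            continue  # P(A | B_n) is undefined here, and the term is zero anyway
        total += pa * pb
    return total


# Hypothetical example: three machines make 50%, 30% and 20% of all items,
# with defect rates of 1%, 2% and 3%. A = "a randomly chosen item is defective".
defect_rates = [Fraction(1, 100), Fraction(2, 100), Fraction(3, 100)]
shares = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]
print(total_probability(defect_rates, shares))  # 17/1000
```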

Econometrica

*Econometrica* is a peer-reviewed academic journal of economics, publishing articles in many areas of economics, especially econometrics. It is published by Wiley-Blackwell on behalf of the Econometric Society. The current editor-in-chief is Guido Imbens.

*Econometrica* was established in 1933. Its first editor was Ragnar Frisch, recipient of the first Nobel Memorial Prize in Economic Sciences in 1969, who served as an editor from 1933 to 1954. Although *Econometrica* is currently published entirely in English, the first few issues also contained scientific articles written in French.

*Econometrica* is abstracted and indexed in:

* Scopus
* EconLit
* Social Sciences Citation Index

According to the *Journal Citation Reports*, the journal has a 2020 impact factor ...

Annual Review Of Economics

The *Annual Review of Economics* is a peer-reviewed academic journal that publishes an annual volume of review articles relevant to economics. It was established in 2009 and is published by Annual Reviews. The co-editors are Philippe Aghion and Hélène Rey. As of 2023, it is being published as open access, under the Subscribe to Open model.

The *Annual Review of Economics* was first published in 2009 by the nonprofit publisher Annual Reviews. Its founding editors were Timothy Bresnahan and Nobel laureate Kenneth J. Arrow. As of 2021, it is published both in print and online.

The *Annual Review of Economics* defines its scope as covering significant developments in economics; specific subdisciplines included are macroeconomics; microeconomics; international, social, behavioral, cultural, institutional, education, and network economics; public finance; economic growth, economic development; political economy; game theory; and social choice ...

Journal Of Economic Literature

The *Journal of Economic Literature* is a peer-reviewed academic journal, published by the American Economic Association, that surveys the academic literature in economics. It was established by Arthur Smithies in 1963 as the *Journal of Economic Abstracts* (Journal of Economic Literature: About JEL, retrieved 6 May 2011), and is currently one of the highest ranked journals in economics. As a review journal, it mainly features essays and reviews of recent economic theories (as opposed to the latest research). The ...

Confidence Region

In statistics, a confidence region is a multi-dimensional generalization of a confidence interval. For a bivariate normal distribution, it is an ellipse, also known as the error ellipse. More generally, it is a set of points in an *n*-dimensional space, often represented as a hyperellipsoid around a point which is an estimated solution to a problem, although other shapes can occur.

The confidence region is calculated in such a way that if a set of measurements were repeated many times and a confidence region calculated in the same way on each set of measurements, then a certain percentage of the time (e.g. 95%) the confidence region would include the point representing the "true" values of the set of variables being estimated. However, unless certain assumptions about prior probabilities are made, it does not mean, when one confidence region has been calculated, that there is a 95% probability that the "true" values lie inside the region, since we do not ass ...
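For the bivariate normal case the error ellipse can be written down explicitly. Assuming n independent bivariate normal observations with a KNOWN covariance matrix Σ and sample mean x̄, the confidence region for the mean is the set of points μ with n (x̄ − μ)ᵀ Σ⁻¹ (x̄ − μ) ≤ χ²₂(level). The sketch below tests whether a candidate μ lies inside that ellipse; the function names are hypothetical, and with an estimated covariance matrix a Hotelling T² (F-based) cutoff would replace the chi-square one.

```python
import math


def chi2_2_quantile(level):
    # For 2 degrees of freedom the chi-square CDF is 1 - exp(-x / 2),
    # so the quantile has the closed form -2 ln(1 - level).
    return -2.0 * math.log(1.0 - level)


def in_confidence_ellipse(xbar, mu, sigma, n, level=0.95):
    """Return True if mu lies in the confidence ellipse for a bivariate normal mean.

    Assumes i.i.d. bivariate normal data with KNOWN 2x2 covariance `sigma`
    (nested lists); `xbar` is the sample mean of `n` observations.
    """
    (a, b), (c, d) = sigma
    det = a * d - b * c
    if a <= 0 or det <= 0:
        raise ValueError("covariance matrix must be positive definite")
    inv = [[d / det, -b / det], [-c / det, a / det]]  # inverse of a 2x2 matrix
    dx = [xbar[0] - mu[0], xbar[1] - mu[1]]
    # n * dx^T Sigma^{-1} dx follows a chi-square(2) law when mu is the true mean.
    q = n * sum(dx[i] * inv[i][j] * dx[j] for i in range(2) for j in range(2))
    return q <= chi2_2_quantile(level)


identity = [[1.0, 0.0], [0.0, 1.0]]
print(in_confidence_ellipse((0.0, 0.0), (1.0, 1.0), identity, n=1))  # True
print(in_confidence_ellipse((0.0, 0.0), (1.0, 1.0), identity, n=4))  # False
```

Increasing n shrinks the ellipse around x̄, which is why the same candidate point falls outside it in the second call.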

Statistical Inference

Statistical inference is the process of using data analysis to infer properties of an underlying probability distribution (Upton, G., Cook, I. (2008) *Oxford Dictionary of Statistics*, OUP). Inferential statistical analysis infers properties of a population, for example by testing hypotheses and deriving estimates. It is assumed that the observed data set is sampled from a larger population. Inferential statistics can be contrasted with descriptive statistics. Descriptive statistics is solely concerned with properties of the observed data, and it does not rest on the assumption that the data come from a larger population. In machine learning, the term *inference* is sometimes used instead to mean "make a prediction, by evaluating an already trained model"; in this context inferring properties of the model is referred to as *training* or *learning* (rather than *inference*), and using a model for prediction is referred to as *inference* (instead of *prediction*); se ...
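The contrast between descriptive and inferential statistics can be made concrete. The sample mean and standard deviation describe only the data at hand, while an interval for the population mean relies on the assumption that the data were sampled from a larger population. The sketch below assumes independent draws and uses a large-sample normal approximation via Python's `statistics.NormalDist`; the function name `describe_and_infer` is hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def describe_and_infer(sample, level=0.95):
    """Return a descriptive summary and an approximate interval for the population mean."""
    # Descriptive: properties of the observed data only.
    m = mean(sample)
    s = stdev(sample)  # sample standard deviation (n - 1 denominator)
    # Inferential: treats the sample as drawn from a larger population.
    # Large-sample normal approximation; for small samples a t quantile
    # would be more appropriate.
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * s / math.sqrt(len(sample))
    return m, (m - half_width, m + half_width)


m, (lo, hi) = describe_and_infer([2, 4, 4, 4, 5, 5, 7, 9])
print(m, lo, hi)  # the interval is centred on the sample mean, 5
```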

Point Estimation

In statistics, point estimation involves the use of sample data to calculate a single value (known as a point estimate since it identifies a point in some parameter space) which is to serve as a "best guess" or "best estimate" of an unknown population parameter (for example, the population mean). More formally, it is the application of a point estimator to the data to obtain a point estimate. Point estimation can be contrasted with interval estimation: such interval estimates are typically either confidence intervals, in the case of frequentist inference, or credible intervals, in the case of Bayesian inference. More generally, a point estimator can be contrasted with a set estimator; examples are confidence sets and credible sets. A point estimator can also be contrasted with a distribution estimator; examples are confidence distributions, randomized estimators, and Bayesian posteriors.

Among the properties of point estimates is bias. "Bias" is defined as ...
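Since the definition above is cut off, the sketch below takes bias to be E[θ̂] − θ, the usual definition. It computes the bias of two variance estimators exactly by enumerating every equally likely sample of size n drawn with replacement from a tiny population, so no simulation noise is involved. The helper names and the population {1, 2, 3} are hypothetical; the example shows the familiar result that dividing by n underestimates the variance while dividing by n − 1 does not.

```python
from fractions import Fraction
from itertools import product


def variance(sample, ddof):
    """Variance with divisor len(sample) - ddof (ddof=0: plug-in, ddof=1: unbiased)."""
    n = len(sample)
    m = Fraction(sum(sample), n)
    return sum((x - m) ** 2 for x in sample) / (n - ddof)


def expected_value(estimator, population, n):
    """Exact E[estimator] for n i.i.d. draws from a uniform distribution on `population`.

    Enumerates every equally likely ordered sample, so it is only practical
    for tiny populations and sample sizes.
    """
    samples = list(product(population, repeat=n))
    return sum(estimator(s) for s in samples) / len(samples)


population = [1, 2, 3]
true_var = variance(population, ddof=0)  # population variance: 2/3
for ddof in (0, 1):
    est = expected_value(lambda s: variance(s, ddof), population, n=2)
    print(ddof, est, est - true_var)  # last column is the bias E[estimate] - true value
# prints "0 1/3 -1/3" and then "1 2/3 0"
```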

Set Estimation

In statistics, a random vector $x$ is classically represented by a probability density function. In a set-membership approach or set estimation, $x$ is represented by a set $X$ to which $x$ is assumed to belong. This means that the support of the probability distribution function of $x$ is included inside $X$. On the one hand, representing random vectors by sets makes it possible to make fewer assumptions about the random variables (such as independence), and dealing with nonlinearities is easier. On the other hand, a probability distribution function provides more accurate information than a set enclosing its support.

Set-membership estimation (or *set estimation* for short) is an estimation approach which considers that measurements are represented by a set (most of the time a box of $\mathbb{R}^m$, where $m$ is the number of measurements) of the measurement space. If $p$ is the parameter vector a ...
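A minimal sketch of set-membership estimation, assuming a hypothetical one-parameter model y_i = p · t_i with t_i > 0 and a known error bound e. Each measurement is represented by the interval [y_i − e, y_i + e] rather than by a probability distribution; the feasible parameter values are those whose model output falls inside every measurement interval, intersected with a prior interval. The model, the function name and the numbers are illustrative assumptions, not taken from the text.

```python
def feasible_parameter_interval(t, y, e, prior=(float("-inf"), float("inf"))):
    """Set-membership estimate of p in the hypothetical model y_i = p * t_i.

    Each measurement is the interval [y_i - e, y_i + e] (bounded error,
    no probabilistic assumption). With t_i > 0, each interval maps back to
    an interval of p; the feasible set is the intersection of the prior
    interval with all of these. Returns (low, high), or None if empty.
    """
    low, high = prior
    for ti, yi in zip(t, y):
        if ti <= 0:
            raise ValueError("this sketch assumes t_i > 0")
        # Preimage of the measurement interval under p -> p * t_i.
        low = max(low, (yi - e) / ti)
        high = min(high, (yi + e) / ti)
    if low > high:
        return None  # no parameter value is consistent with all measurements
    return low, high


print(feasible_parameter_interval([1, 2, 4], [2.1, 3.9, 8.2], e=0.3, prior=(0.0, 10.0)))
# approximately (1.975, 2.1)
```

The result is a set (here an interval) of parameter values rather than a single estimate, and an empty result signals that the assumed error bound is inconsistent with the data.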

Missing Data

In statistics, missing data, or missing values, occur when no data value is stored for the variable in an observation. Missing data are a common occurrence and can have a significant effect on the conclusions that can be drawn from the data. Missing data can occur because of nonresponse: no information is provided for one or more items or for a whole unit ("subject"). Some items are more likely to generate a nonresponse than others: for example, items about private subjects such as income. Attrition is a type of missingness that can occur in longitudinal studies, for instance when studying development where a measurement is repeated after a certain period of time. Missingness occurs when participants drop out before the test ends and one or more measurements are missing. Data often are missing in research in economics, sociology, and political science because governments or private entities choose not to, or fail to, report critical statistics, or because the information is not avai ...
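The effect of nonresponse on conclusions can be seen in a tiny constructed example. Assuming, hypothetically, that only the highest earners decline to report their income, the mean over the observed (complete) cases understates the true mean. The incomes below are invented for illustration, and the helper names are hypothetical; in real data the true values of the missing entries are of course unknown.

```python
from statistics import mean


def missing_rate(values):
    """Share of entries that are missing (None)."""
    return sum(v is None for v in values) / len(values)


def complete_case_mean(values):
    """Mean over observed entries only (complete-case analysis for one variable)."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values")
    return mean(observed)


# Hypothetical incomes (thousands); the two highest earners do not respond.
true_income = [20, 30, 40, 50, 60, 120, 150]
reported = [v if v < 100 else None for v in true_income]

print(missing_rate(reported))        # 2 of 7 values are missing
print(complete_case_mean(reported))  # 40
print(mean(true_income))             # about 67.1, unknown in practice
```

Because missingness here depends on the income value itself, dropping the missing entries biases the estimate downward; if the same two values had been missing at random, the complete-case mean would not be systematically off.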

Econometrics

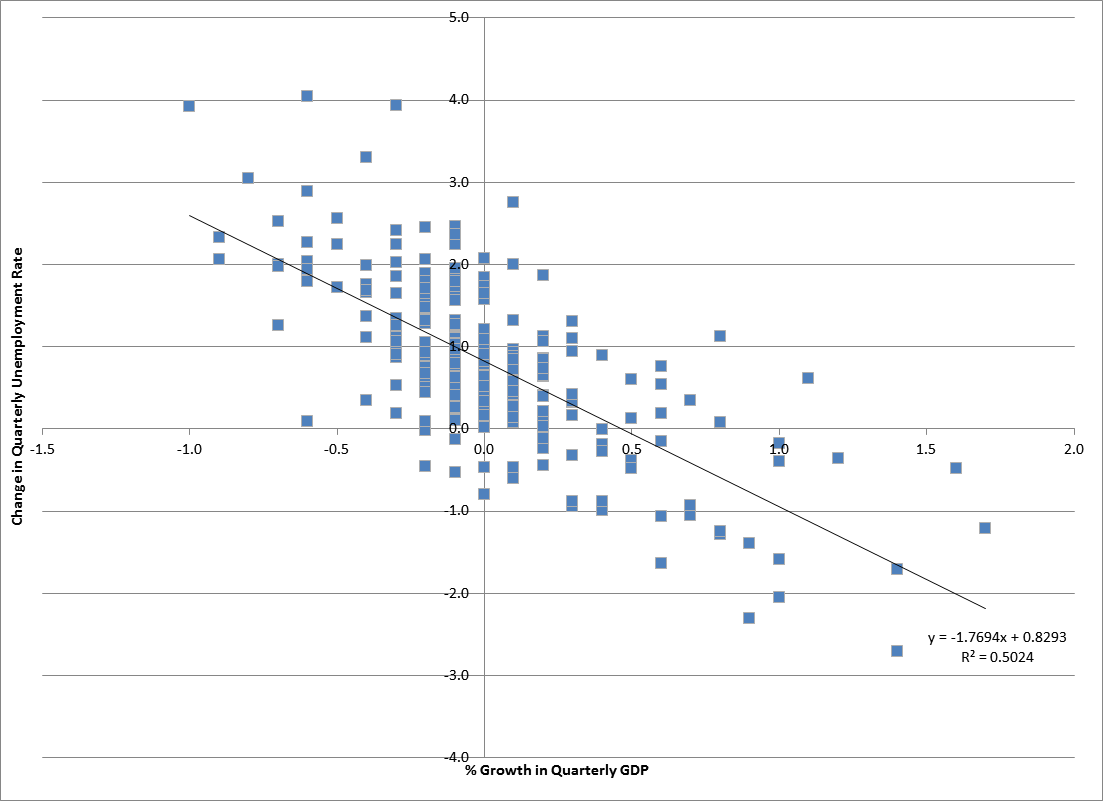

Econometrics is an application of statistical methods to economic data in order to give empirical content to economic relationships (M. Hashem Pesaran (1987), "Econometrics", *The New Palgrave: A Dictionary of Economics*, v. 2, pp. 8–22; reprinted in J. Eatwell *et al.*, eds. (1990), *Econometrics: The New Palgrave*, pp. 1–34; 2008 revision by J. Geweke, J. Horowitz, and H. P. Pesaran). More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference." An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships." Jan Tinbergen is one of the two founding fathers of econometrics. The other, Ragnar Frisch, also coined the term in the sense in which it is used today. A basic tool for econometrics is the multiple linear regression model. Econome ...
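The multiple linear regression model is usually estimated by ordinary least squares (OLS), whose coefficients solve the normal equations (XᵀX) β = Xᵀy. The sketch below solves them in pure Python with Gaussian elimination so that it runs without external libraries; the function names and the noise-free example data are hypothetical. In practice a QR- or SVD-based solver such as `numpy.linalg.lstsq` is numerically preferable to forming XᵀX explicitly.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b] as floats.
    m = [[float(v) for v in row] + [float(bi)] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular (perfect multicollinearity?)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def ols(X, y):
    """Ordinary least squares: solve the normal equations (X^T X) beta = X^T y.

    X is a list of rows; include a leading 1 in each row for an intercept.
    """
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve_linear(xtx, xty)


# Noise-free data generated from y = 1 + 2*x1 + 3*x2, so OLS should recover (1, 2, 3).
X = [[1, 0, 1], [1, 1, 0], [1, 2, 2], [1, 3, 1], [1, 4, 3]]
y = [4, 3, 11, 10, 18]
print([round(b, 6) for b in ols(X, y)])  # [1.0, 2.0, 3.0]
```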