|

Lossy

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size for storing, handling, and transmitting content. Higher degrees of approximation create coarser images as more details are removed. This is opposed to lossless data compression (reversible data compression) which does not degrade the data. The amount of data reduction possible using lossy compression is much higher than using lossless techniques. Well-designed lossy compression technology often reduces file sizes significantly before degradation is noticed by the end-user. Even when noticeable by the user, further data reduction may be desirable (e.g., for real-time communication or to reduce transmission times or storage needs). The most widely used lossy compression algorithm is the discrete cosine transform (DCT), first published by N ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Audio Compression (data)

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating Redundancy (information theory), statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

JPEG

JPEG ( , short for Joint Photographic Experts Group and sometimes retroactively referred to as JPEG 1) is a commonly used method of lossy compression for digital images, particularly for those images produced by digital photography. The degree of compression can be adjusted, allowing a selectable trade off between storage size and image quality. JPEG typically achieves 10:1 compression with noticeable, but widely agreed to be acceptable perceptible loss in image quality. Since its introduction in 1992, JPEG has been the most widely used image compression standard in the world, and the most widely used digital image format, with several billion JPEG images produced every day as of 2015. The Joint Photographic Experts Group created the standard in 1992, based on the discrete cosine transform (DCT) algorithm. JPEG was largely responsible for the proliferation of digital images and digital photos across the Internet and later social media. JPEG compression is used in a number of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Image Compression

Image compression is a type of data compression applied to digital images, to reduce their cost for computer data storage, storage or data transmission, transmission. Algorithms may take advantage of visual perception and the statistical properties of image data to provide superior results compared with generic data compression methods which are used for other digital data. Lossy and lossless image compression Image compression may be lossy compression, lossy or lossless compression, lossless. Lossless compression is preferred for archival purposes and often for medical imaging, technical drawings, clip art, or comics. Lossy compression methods, especially when used at low bit rates, introduce compression artifacts. Lossy methods are especially suitable for natural images such as photographs in applications where minor (sometimes imperceptible) loss of fidelity is acceptable to achieve a substantial reduction in bit rate. Lossy compression that produces negligible differences ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Discrete Cosine Transform

A discrete cosine transform (DCT) expresses a finite sequence of data points in terms of a sum of cosine functions oscillating at different frequency, frequencies. The DCT, first proposed by Nasir Ahmed (engineer), Nasir Ahmed in 1972, is a widely used transformation technique in signal processing and data compression. It is used in most digital media, including digital images (such as JPEG and HEIF), digital video (such as MPEG and ), digital audio (such as Dolby Digital, MP3 and Advanced Audio Coding, AAC), digital television (such as SDTV, HDTV and Video on demand, VOD), digital radio (such as AAC+ and DAB+), and speech coding (such as AAC-LD, Siren (codec), Siren and Opus (audio format), Opus). DCTs are also important to numerous other applications in science and engineering, such as digital signal processing, telecommunication devices, reducing network bandwidth usage, and spectral methods for the numerical solution of partial differential equations. A DCT is a List of Fourier ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lossless Compression

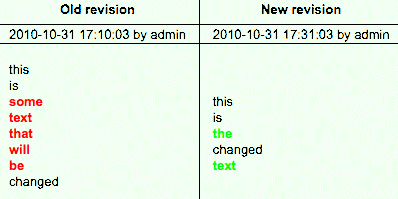

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates (and therefore reduced media sizes). By operation of the pigeonhole principle, no lossless compression algorithm can shrink the size of all possible data: Some data will get longer by at least one symbol or bit. Compression algorithms are usually effective for human- and machine-readable documents and cannot shrink the size of random data that contain no redundancy. Different algorithms exist that are designed either with a specific type of input data in mind or with specific assumptions about what kinds of redundancy the uncompressed data are likely to contain. Lo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Video Coding Standards

A video coding format (or sometimes video compression format) is a content representation format of digital video content, such as in a data file or bitstream. It typically uses a standardized video compression algorithm, most commonly based on discrete cosine transform (DCT) coding and motion compensation. A computer software or hardware component that compresses or decompresses a specific video coding format is a video codec. Some video coding formats are documented by a detailed technical specification document known as a video coding specification. Some such specifications are written and approved by standardization organizations as technical standards, and are thus known as a video coding standard. There are ''de facto'' standards and formal standards. Video content encoded using a particular video coding format is normally bundled with an audio stream (encoded using an audio coding format) inside a multimedia container format such as AVI, MP4, FLV, RealMedia, or M ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Transform Coding

Transform coding is a type of data compression for "natural" data like audio signals or photographic images. The transformation is typically lossless (perfectly reversible) on its own but is used to enable better (more targeted) quantization, which then results in a lower quality copy of the original input (lossy compression). In transform coding, knowledge of the application is used to choose information to discard, thereby lowering its bandwidth. The remaining information can then be compressed via a variety of methods. When the output is decoded, the result may not be identical to the original input, but is expected to be close enough for the purpose of the application. Colour television NTSC One of the most successful transform encoding system is typically not referred to as such—the example being NTSC color television. After an extensive series of studies in the 1950s, Alda Bedford showed that the human eye has high resolution only for black and white, somewhat less f ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Quantization (signal Processing)

Quantization, in mathematics and digital signal processing, is the process of mapping input values from a large set (often a continuous set) to output values in a (countable) smaller set, often with a finite number of elements. Rounding and truncation are typical examples of quantization processes. Quantization is involved to some degree in nearly all digital signal processing, as the process of representing a signal in digital form ordinarily involves rounding. Quantization also forms the core of essentially all lossy compression algorithms. The difference between an input value and its quantized value (such as round-off error) is referred to as quantization error, noise or distortion. A device or algorithm function, algorithmic function that performs quantization is called a quantizer. An analog-to-digital converter is an example of a quantizer. Example For example, Rounding#Round half up, rounding a real number x to the nearest integer value forms a very basic type of q ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Information Theory

Information theory is the mathematical study of the quantification (science), quantification, Data storage, storage, and telecommunications, communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, Neuroscience, neurobiology, physics, and electrical engineering. A key measure in information theory is information entropy, entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a Fair coin, fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a dice, die (which has six equally likely outcomes). Some other important measu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Visually Lossless

In data compression and psychoacoustics, transparency is the result of lossy data compression accurate enough that the compressed result is perceptually indistinguishable from the uncompressed input, i.e. perceptually lossless. A transparency threshold is a given value at which transparency is reached. It is commonly used to describe compressed data bitrates. For example, the transparency threshold for MP3 to linear PCM audio is said to be between 175 and 245 kbit/s, at 44.1 kHz, when encoded as VBR MP3 (corresponding to the -V3 and -V0 settings of the highly popular LAME MP3 encoder). This means that when an MP3 that was encoded at those bitrates is being played back, it is indistinguishable from the original PCM, and the compression is transparent to the listener. The term ''transparent compression'' can also refer to a filesystem feature that allows compressed files to be read and written just like regular ones. In this case, the compressor is typically a general ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |