
Granularity (Parallel Computing)

In parallel computing, the granularity (or grain size) of a task is a measure of the amount of work (or computation) performed by that task. Another definition of granularity takes into account the communication overhead between multiple processors or processing elements. It defines granularity as the ratio of computation time to communication time, where computation time is the time required to perform the computation of a task and communication time is the time required to exchange data between processors. If $T_\mathrm{comp}$ is the computation time and $T_\mathrm{comm}$ denotes the communication time, then the granularity $G$ of a task can be calculated as $G = \frac{T_\mathrm{comp}}{T_\mathrm{comm}}$. Granularity is usually measured in terms of the number of instructions executed in a particular task. Alternatively, granularity can also be specified in terms of the execution time of a program, combining the computation time and communication time. Types of parallelism: Depending on the amount of work which is performed by a p ...
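The ratio definition above can be illustrated with a minimal sketch. The function name `granularity`, its parameter names, and the timings are assumptions made for illustration only; they do not come from the article.

```python
def granularity(t_comp: float, t_comm: float) -> float:
    """Return the ratio of computation time to communication time for one task.

    Both times must be expressed in the same unit (for example, milliseconds).
    """
    if t_comm <= 0:
        raise ValueError("communication time must be positive")
    return t_comp / t_comm


# Hypothetical timings in milliseconds, chosen only to show the arithmetic.
small_task = granularity(t_comp=2.0, t_comm=8.0)    # communication dominates
large_task = granularity(t_comp=800.0, t_comm=8.0)  # computation dominates
print(small_task, large_task)
```

A ratio below 1 means the task spends more time exchanging data than computing, while a large ratio means communication is a small share of the total.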

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. S.V. Adve et al. (November 2008), "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF), Parallel@Illinois, University of Illinois at Urbana-Champaign: "The main techniques for these performance benefits, increased clock frequency and smarter but increasingly complex architectures, are now hitting the so-called power wall. The computer industry has accepted that future performance inc ...

Brain

The brain is an organ that serves as the center of the nervous system in all vertebrate and most invertebrate animals. It consists of nervous tissue and is typically located in the head (cephalization), usually near organs for special senses such as vision, hearing, and olfaction. Being the most specialized organ, it is responsible for receiving information from the sensory nervous system, processing that information (thought, cognition, and intelligence) and the coordination of motor control (muscle activity and endocrine system). While invertebrate brains arise from paired segmental ganglia (each of which is only responsible for the respective body segment) of the ventral nerve cord, vertebrate brains develop axially from the midline dorsal nerve cord as a vesicular enlargement at the rostral end of the neural tube, with centralized control over all body segments. All vertebr ...

Instruction-level Parallelism

Instruction-level parallelism (ILP) is the parallel or simultaneous execution of a sequence of instructions in a computer program. More specifically, ILP refers to the average number of instructions run per step of this parallel execution. Discussion: ILP must not be confused with concurrency. In ILP, there is a single specific thread of execution of a process. On the other hand, concurrency involves the assignment of multiple threads to a CPU's core in a strict alternation, or in true parallelism if there are enough CPU cores, ideally one core for each runnable thread. There are two approaches to instruction-level parallelism: hardware and software. Hardware-level ILP works upon dynamic parallelism, whereas software-level ILP works on static parallelism. Dynamic parallelism means that the processor decides at run time whic ...

Scheduling

A schedule or a timetable, as a basic time-management tool, consists of a list of times at which possible tasks, events, or actions are intended to take place, or of a sequence of events in the chronological order in which such things are intended to take place. The process of creating a schedule (deciding how to order these tasks and how to commit resources between the variety of possible tasks) is called scheduling (Ofer Zwikael, John Smyrk, Project Management for the Creation of Organisational Value (2011), p. 196: "The process is called scheduling, the output from which is a timetable of some form"), and a person responsible for making a particular schedule may be called a scheduler. Making and following schedules is an ancient human activity. Some scenarios associate this kind of planning with learning life skills. Schedules are necessary, or at least useful, in situations where individuals need to know what time they must be at a specific location to rece ...

Speedup

In computer architecture, speedup is a number that measures the relative performance of two systems processing the same problem. More technically, it is the improvement in speed of execution of a task executed on two similar architectures with different resources. The notion of speedup was established by Amdahl's law, which was particularly focused on parallel processing. However, speedup can be used more generally to show the effect on performance after any resource enhancement. Definitions: Speedup can be defined for two different types of quantities: latency and throughput. Latency of an architecture is the reciprocal of the execution speed of a task: $L = \frac{1}{v} = \frac{T}{W}$, where v is the execution speed of the task, T is the execution time of the task, and W is the execution workload of the task. Throughput of an architecture is the execution rate of a task: $Q = \rho v A = \frac{\rho A W}{T} = \frac{\rho A}{L}$, where ρ is the execution density (e.g., the numbe ...
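As a rough sketch of the latency and throughput definitions above, the following computes both quantities and compares two systems on the same workload. All function and parameter names are hypothetical. The factor A is treated as an opaque multiplier because its definition is cut off in the excerpt, and taking latency speedup as the old latency divided by the new one is the conventional reading, assumed here rather than quoted.

```python
import math


def latency(exec_time: float, workload: float) -> float:
    """Latency: execution time per unit of workload (time divided by workload)."""
    return exec_time / workload


def throughput(density: float, a: float, exec_time: float, workload: float) -> float:
    """Throughput: density times A, divided by the latency."""
    return density * a / latency(exec_time, workload)


def latency_speedup(latency_old: float, latency_new: float) -> float:
    """Assumed convention: how many times lower the new latency is."""
    return latency_old / latency_new


# Hypothetical numbers: the same 1000-unit workload on two systems.
l_old = latency(exec_time=20.0, workload=1000.0)
l_new = latency(exec_time=5.0, workload=1000.0)
speedup = latency_speedup(l_old, l_new)
print(l_old, l_new, speedup)
```

With these made-up timings the second system finishes the same workload in a quarter of the time, so the latency speedup comes out at about 4.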

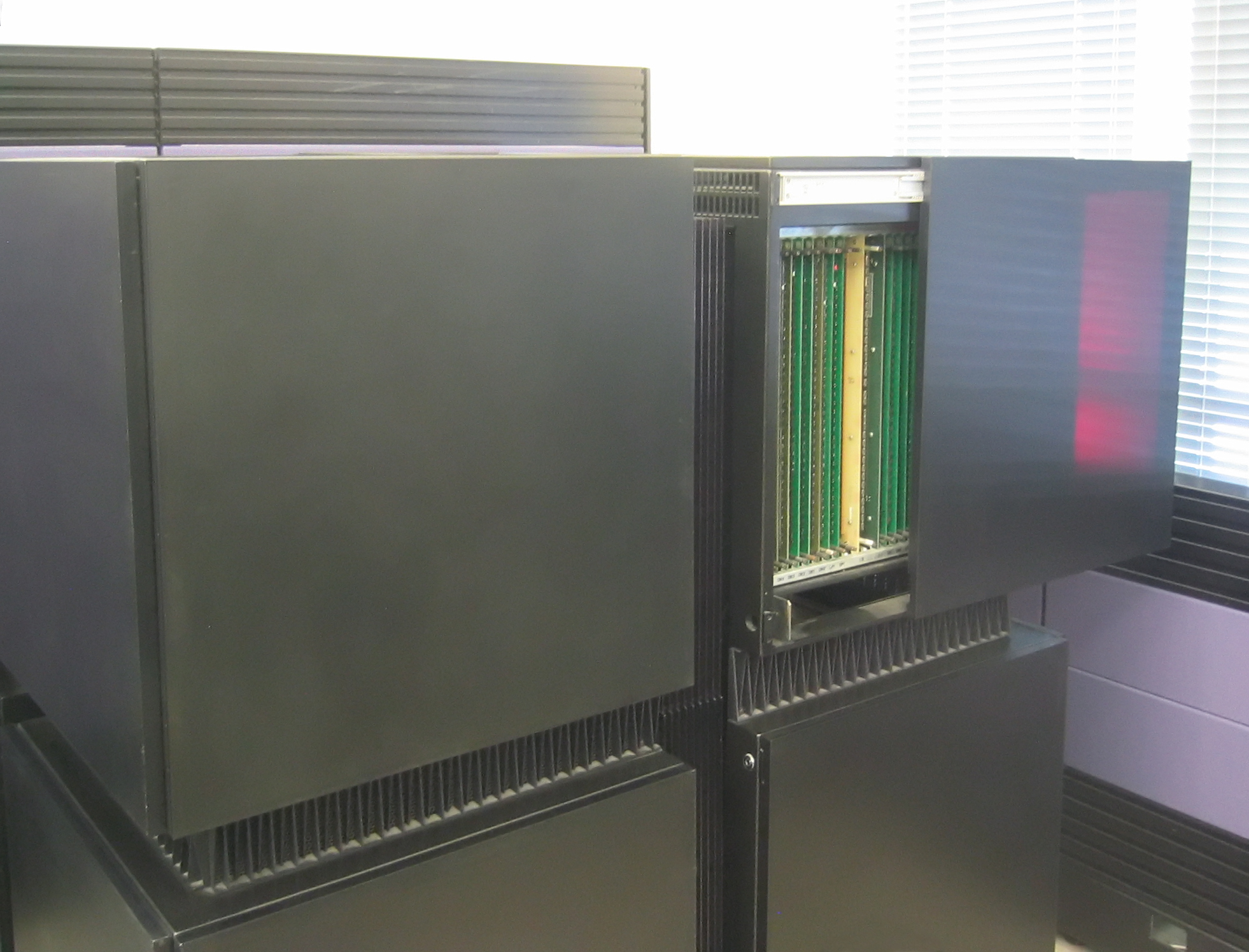

Intel iPSC

The Intel Personal SuperComputer (Intel iPSC) was a product line of parallel computers in the 1980s and 1990s. The iPSC/1 was superseded by the Intel iPSC/2, and then the Intel iPSC/860. iPSC/1: In 1984, Justin Rattner became manager of the Intel Scientific Computers group in Beaverton, Oregon. He hired a team that included mathematician Cleve Moler. The iPSC used a hypercube internetwork topology of connections between the processors internally, inspired by the Caltech Cosmic Cube research project. For that reason, it was configured with node counts that are powers of two, which correspond to the corners of hypercubes of increasing dimension. Intel announced the iPSC/1 in 1985, with 32 to 128 nodes connected with Ethernet into a hypercube. The system was managed by a personal computer of the PC/AT era running Xenix, the "cube manager". Each node had an 80286 CPU with an 80287 math coprocessor, 512K of RAM, and eight Ethernet ports (seven for the hypercube interconnect, and one to t ...
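The link between power-of-two node counts and hypercube corners can be sketched as follows. The binary node labelling and the function name `hypercube_neighbors` are illustrative assumptions, not a description of how the iPSC numbered or routed between its nodes.

```python
def hypercube_neighbors(node: int, dimension: int) -> list[int]:
    """Return the labels of the nodes directly linked to `node`.

    Assumes the 2**dimension corners are labelled 0 .. 2**dimension - 1 and
    that two corners are linked when their labels differ in exactly one bit.
    """
    if not 0 <= node < 2 ** dimension:
        raise ValueError("node label out of range for this dimension")
    return [node ^ (1 << bit) for bit in range(dimension)]


# A 128-node machine corresponds to a 7-dimensional hypercube (2**7 == 128),
# so every node has seven direct neighbours.
neighbors_of_zero = hypercube_neighbors(0, 7)
print(len(neighbors_of_zero), neighbors_of_zero)
```

Under this labelling the largest configuration gives each node seven links, which matches the seven interconnect ports per node mentioned above.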

Cray Y-MP

The Cray Y-MP was a supercomputer sold by Cray Research from 1988, and the successor to the company's X-MP. The Y-MP retained software compatibility with the X-MP, but extended the address registers from 24 to 32 bits. High-density VLSI emitter-coupled logic (ECL) technology was used and a new liquid-cooling system was devised. The Y-MP ran the Cray UNICOS operating system. The Y-MP could be equipped with two, four or eight vector processors, with two functional units each and a clock cycle time of 6 ns (167 MHz). Peak performance was thus 333 megaflops per processor. Main memory comprised 256, 512, or 1024 MB of static RAM (SRAM). (Memory was measured and allocated in 64-bit words, and offered in 32, 64, or 128 MWords.) The original Y-MP (otherwise known as the Y-MP Model D) was housed in a chassis similar to the horseshoe-shaped X-MP, but with an extra rectangular cabinet added in the middle (containing the CPU boards), thus forming a "Y" shape in plan vie ...
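The figures quoted above can be checked with a short calculation. It assumes that each functional unit delivers one floating-point result per clock cycle, which is the reading implied by "thus" but is not stated outright; the constant and function names are hypothetical.

```python
CYCLE_TIME_NS = 6.0    # clock cycle time from the text
FUNCTIONAL_UNITS = 2   # functional units per processor from the text
BYTES_PER_WORD = 8     # one 64-bit word

# A period of 1 ns corresponds to 1000 MHz, so MHz = 1000 / period in ns.
clock_mhz = 1000.0 / CYCLE_TIME_NS

# Assumption: one floating-point result per functional unit per cycle.
peak_mflops = clock_mhz * FUNCTIONAL_UNITS


def memory_mb(mwords: int) -> int:
    """Convert a memory size in MWords (64-bit words) to MB."""
    return mwords * BYTES_PER_WORD


print(round(clock_mhz), int(peak_mflops), [memory_mb(w) for w in (32, 64, 128)])
```

Under that assumption the clock works out to roughly 167 MHz and the peak to just over 333 megaflops per processor, and the three MWord options map onto the 256, 512, and 1024 MB sizes.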

Message-passing

In computer science, message passing is a technique for invoking behavior (i.e., running a program) on a computer. The invoking program sends a message to a process (which may be an actor or object) and relies on that process and its supporting infrastructure to then select and run some appropriate code. Message passing differs from conventional programming where a process, subroutine, or function is directly invoked by name. Message passing is key to some models of concurrency and object-oriented programming. Message passing is ubiquitous in modern computer software. It is used as a way for the objects that make up a program to work with each other and as a means for objects and systems running on different computers (e.g., the Internet) to interact. Message passing may be implemented by various mechanisms, including channels. Overview: In contrast to the traditional technique of call ...
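The idea of sending a message and letting the receiver choose the code to run can be sketched with two queues standing in for channels. This is one possible illustration using Python's standard `queue` and `threading` modules; the message names, the handler table, and the `None` stop sentinel are assumptions for the example.

```python
import queue
import threading


def receiver(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Receive (name, payload) messages and run the handler the receiver selects."""
    handlers = {
        "square": lambda x: x * x,
        "negate": lambda x: -x,
    }
    while True:
        message = inbox.get()
        if message is None:  # hypothetical sentinel meaning "stop"
            break
        name, payload = message
        # The sender never calls a handler by name directly; the receiver
        # looks up and runs the appropriate code for each message.
        outbox.put(handlers[name](payload))


inbox, outbox = queue.Queue(), queue.Queue()
worker = threading.Thread(target=receiver, args=(inbox, outbox))
worker.start()

inbox.put(("square", 7))
inbox.put(("negate", 3))
inbox.put(None)
worker.join()

results = [outbox.get(), outbox.get()]
print(results)
```

Because a single receiver handles messages in arrival order, the replies come back in the order the requests were sent.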

Connection Machine

The Connection Machine (CM) is a member of a series of massively parallel supercomputers sold by Thinking Machines Corporation. The idea for the Connection Machine grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science. Origin of idea: Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electric ...

Neurons

A neuron (American English), neurone (British English), or nerve cell, is an excitable cell that fires electric signals called action potentials across a neural network in the nervous system. Neurons are located in the nervous system and help to receive and conduct impulses. Neurons communicate with other cells via synapses, which are specialized connections that commonly use minute amounts of chemical neurotransmitters to pass the electric signal from the presynaptic neuron to the target cell through the synaptic gap. Neurons are the main components of nervous tissue in all animals except sponges and placozoans. Plants and fungi do not have nerve cells. Molecular evidence suggests that the ability to generate electric signals first appeared in evolution some 700 to 800 million years ago, during the Tonian period. Predecessors of neurons were the peptidergic secretory cells. They eventually ga ...

Granulation

Granulation is the process of forming grains or granules from a powdery or solid substance, producing a granular material. It is applied in several technological processes in the chemical and pharmaceutical industries. Typically, granulation involves agglomeration of fine particles into larger granules, usually in the size range between 0.2 and 4.0 mm depending on their subsequent use. Less commonly, it involves shredding or grinding solid material into finer granules or pellets. From powder: The granulation process combines one or more powder particles and forms a granule that will allow tableting to be within required limits. It is the process of collecting particles together by creating bonds between them. Bonds are formed by compression or by using a binding agent. Granulation is extensively used in the pharmaceutical industry for the manufacturing of tablets and pellets. This way a predictable and repeatable process is possible and granules of consistent quality can be produce ...