|

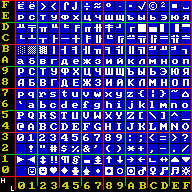

Bit Manipulation

Bit manipulation is the act of algorithmically manipulating bits or other pieces of data shorter than a word. Computer programming tasks that require bit manipulation include low-level device control, error detection and error correction, correction algorithms, data compression, encryption algorithms, and Optimization (computer science), optimization. For most other tasks, modern programming languages allow the programmer to work directly with Abstraction (computer science), abstractions instead of bits that represent those abstractions. Source code that does bit manipulation makes use of the bitwise operations: AND, OR, XOR, NOT, and possibly other operations analogous to the boolean operators; there are also bitwise operation#Bit shifts, bit shifts and operations to count ones and zeros, find high and low one or zero, set, reset and test bits, extract and insert fields, mask and zero fields, gather and scatter bits to and from specified bit positions or fields. Integer arithm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Algorithm

In mathematics and computer science, an algorithm () is a finite sequence of Rigour#Mathematics, mathematically rigorous instructions, typically used to solve a class of specific Computational problem, problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use Conditional (computer programming), conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a Heuristic (computer science), heuristic is an approach to solving problems without well-defined correct or optimal results.David A. Grossman, Ophir Frieder, ''Information Retrieval: Algorithms and Heuristics'', 2nd edition, 2004, For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bit Pattern

The bit is the most basic unit of information in computing and digital communication. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either , but other representations such as ''true''/''false'', ''yes''/''no'', ''on''/''off'', or ''+''/''−'' are also widely used. The relation between these values and the physical states of the underlying storage or device is a matter of convention, and different assignments may be used even within the same device or program. It may be physically implemented with a two-state device. A contiguous group of binary digits is commonly called a ''bit string'', a bit vector, or a single-dimensional (or multi-dimensional) ''bit array''. A group of eight bits is called one ''byte'', but historically the size of the byte is not strictly defined. Frequently, half, full, double and quadruple words consist of a number of bytes which is a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bit Array

A bit array (also known as bitmask, bit map, bit set, bit string, or bit vector) is an array data structure that compactly stores bits. It can be used to implement a simple set data structure. A bit array is effective at exploiting bit-level parallelism in hardware to perform operations quickly. A typical bit array stores ''kw'' bits, where ''w'' is the number of bits in the unit of storage, such as a byte or Word (computer architecture), word, and ''k'' is some nonnegative integer. If ''w'' does not divide the number of bits to be stored, some space is wasted due to Fragmentation (computing), internal fragmentation. Definition A bit array is a mapping from some domain (almost always a range of integers) to values in the set . The values can be interpreted as dark/light, absent/present, locked/unlocked, valid/invalid, et cetera. The point is that there are only two possible values, so they can be stored in one bit. As with other arrays, the access to a single bit can be managed ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Predication (computer Architecture)

In computer architecture, predication is a feature that provides an alternative to conditional transfer of control, as implemented by conditional branch machine instructions. Predication works by having conditional (''predicated'') non-branch instructions associated with a ''predicate'', a Boolean value used by the instruction to control whether the instruction is allowed to modify the architectural state or not. If the predicate specified in the instruction is true, the instruction modifies the architectural state; otherwise, the architectural state is unchanged. For example, a predicated move instruction (a conditional move) will only modify the destination if the predicate is true. Thus, instead of using a conditional branch to select an instruction or a sequence of instructions to execute based on the predicate that controls whether the branch occurs, the instructions to be executed are associated with that predicate, so that they will be executed, or not executed, ba ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Word (computer Architecture)

In computing, a word is any processor design's natural unit of data. A word is a fixed-sized datum handled as a unit by the instruction set or the hardware of the processor. The number of bits or digits in a word (the ''word size'', ''word width'', or ''word length'') is an important characteristic of any specific processor design or computer architecture. The size of a word is reflected in many aspects of a computer's structure and operation; the majority of the registers in a processor are usually word-sized and the largest datum that can be transferred to and from the working memory in a single operation is a word in many (not all) architectures. The largest possible address size, used to designate a location in memory, is typically a hardware word (here, "hardware word" means the full-sized natural word of the processor, as opposed to any other definition used). Documentation for older computers with fixed word size commonly states memory sizes in words rather than bytes ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Nibble

In computing, a nibble, or spelled nybble to match byte, is a unit of information that is an aggregation of four- bits; half of a byte/ octet. The unit is alternatively called nyble, nybl, half-byte or tetrade. In networking or telecommunications, the unit is often called a semi-octet, quadbit, or quartet. As a nibble can represent sixteen () possible values, a nibble value is often shown as a hexadecimal digit (hex digit). A byte is two nibbles, and therefore, a value can be shown as two hex digits. Four-bit computers use nibble-sized data for storage and operations; as the word unit. Such computers were used in early microprocessors, pocket calculators and pocket computers. They continue to be used in some microcontrollers. In this context, 4-bit groups were sometimes also called characters rather than nibbles. History The term ''nibble'' originates from its representing half a byte, with ''byte'' a homophone of the English word ''bite''. In 2014, David B. Be ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Byte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol () refer to an 8-bit byte as an octet. Those bits in an octet are usually counted with numbering from 0 to 7 or 7 to 0 depending on the bit endianness. The size of the byte has historically been hardware-dependent and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words of 12, 18, 24, 30, 36, 48, or 60 bits, corresponding t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bit Field

A bit field is a data structure that maps to one or more adjacent bits which have been allocated for specific purposes, so that any single bit or group of bits within the structure can be set or inspected. A bit field is most commonly used to represent Primitive data type, integral types of known, fixed bit-width, such as single-bit Boolean data type, Booleans. The meaning of the individual bits within the field is determined by the programmer; for example, the first bit in a bit field (located at the field's base address) is sometimes used to determine the state of a particular attribute associated with the bit field. Within CPUs and other logic devices, collections of bit fields called flags are commonly used to control or to indicate the outcome of particular operations. Processors have a status register that is composed of flags. For example, if the result of an addition cannot be represented in the destination an arithmetic overflow is set. The flags can be used to decide subs ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Find First Set

In computer software and hardware, find first set (ffs) or find first one is a bit operation that, given an unsigned Word (computer architecture), machine word, designates the index or position of the least significant bit set to one in the word counting from the least significant bit position. A nearly equivalent operation is count trailing zeros (ctz) or number of trailing zeros (ntz), which counts the number of zero bits following the least significant one bit. The complementary operation that finds the index or position of the most significant set bit is log base 2, so called because it computes the binary logarithm . This is #Properties and relations, closely related to count leading zeros (clz) or number of leading zeros (nlz), which counts the number of zero bits preceding the most significant one bit. There are two common variants of find first set, the POSIX definition which starts indexing of bits at 1, herein labelled ffs, and the variant which starts indexing of bits at ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Popcnt

SSE4 (Streaming SIMD Extensions 4) is a SIMD CPU instruction set used in the Intel Core microarchitecture and AMD K10 (K8L). It was announced on September 27, 2006, at the Fall 2006 Intel Developer Forum, with vague details in a white paper;Intel Streaming SIMD Extensions 4 (SSE4) Instruction Set Innovation , Intel. more precise details of 47 instructions became available at the Spring 2007 Intel Developer Forum in , in the presentation. SSE4 extended the instruction set which was released in early 2004. All software using ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Assembly Language

In computing, assembly language (alternatively assembler language or symbolic machine code), often referred to simply as assembly and commonly abbreviated as ASM or asm, is any low-level programming language with a very strong correspondence between the instructions in the language and the architecture's machine code instructions. Assembly language usually has one statement per machine instruction (1:1), but constants, comments, assembler directives, symbolic labels of, e.g., memory locations, registers, and macros are generally also supported. The first assembly code in which a language is used to represent machine code instructions is found in Kathleen and Andrew Donald Booth's 1947 work, ''Coding for A.R.C.''. Assembly code is converted into executable machine code by a utility program referred to as an '' assembler''. The term "assembler" is generally attributed to Wilkes, Wheeler and Gill in their 1951 book '' The Preparation of Programs for an Electronic Dig ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |