Absolute Risk Reduction

The risk difference (RD), excess risk, or attributable risk is the difference between the risk of an outcome in the exposed group and the unexposed group. It is computed as I_e - I_u, where I_e is the incidence in the exposed group, and I_u is the incidence in the unexposed group. If the risk of an outcome is increased by the exposure, the term absolute risk increase (ARI) is used, and it is computed as I_e - I_u. Conversely, if the risk of an outcome is decreased by the exposure, the term absolute risk reduction (ARR) is used, and it is computed as I_u - I_e. The inverse of the absolute risk reduction is the number needed to treat, and the inverse of the absolute risk increase is the number needed to harm.

Usage in reporting: It is recommended to use absolute measurements, such as risk difference, alongside the relative measurements, when presenting the results of randomized controlled trials. Their utility can be illustrated by the following example of a hypothetical drug which reduces t ...
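The definitions above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names and the incidences 0.20 and 0.15 are assumptions chosen for the example, not values from the source.

```python
def risk_difference(i_e: float, i_u: float) -> float:
    """Risk difference I_e - I_u; positive values mean the exposure raises risk."""
    return i_e - i_u


def absolute_risk_reduction(i_e: float, i_u: float) -> float:
    """ARR = I_u - I_e; positive values mean the exposure lowers risk."""
    return i_u - i_e


def reciprocal_of_risk_change(change: float) -> float:
    """Inverse of an absolute risk change (NNT for an ARR, NNH for an ARI)."""
    if change <= 0:
        raise ValueError("the absolute risk change must be positive")
    return 1.0 / change


# Hypothetical incidences, chosen only for illustration.
i_u = 0.20  # unexposed (control) group
i_e = 0.15  # exposed (treated) group

arr = absolute_risk_reduction(i_e, i_u)
nnt = reciprocal_of_risk_change(arr)
print(f"ARR = {arr:.3f}, NNT = {nnt:.1f}")
```

With these assumed incidences the absolute risk reduction is about 0.05, so roughly 20 people would need to be treated to prevent one additional outcome.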

Illustration Of Risk Reduction

An illustration is a decoration, interpretation, or visual explanation of a text, concept, or process, designed for integration in print and digitally published media, such as posters, flyers, magazines, books, teaching materials, animations, video games and films. An illustration is typically created by an illustrator. Digital illustrations are often used to make websites and apps more user-friendly, such as the use of emojis to accompany digital type. Illustration also means providing an example, either in writing or in picture form. The origin of the word "illustration" is late Middle English (in the sense ‘illumination; spiritual or intellectual enlightenment’): via Old French from Latin ''illustratio''(n-), from the verb ''illustrare''.

Illustration styles: Contemporary illustration uses a wide range of styles and techniques, including drawing, painting, printmaking, collage, montage, digital design, multimedia, 3 ...

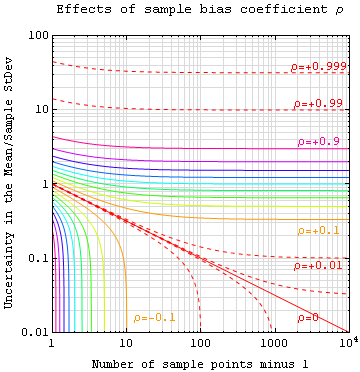

Sampling Distribution

In statistics, a sampling distribution or finite-sample distribution is the probability distribution of a given random-sample-based statistic. Suppose an arbitrarily large number of samples is drawn, each consisting of multiple observations (data points), and each sample is used separately to compute one value of a statistic (for example, the sample mean or sample variance). The sampling distribution is then the probability distribution of the values that the statistic takes on. In many contexts, only one sample (i.e., a set of observations) is observed, but the sampling distribution can be found theoretically. Sampling distributions are important in statistics because they provide a major simplification en route to statistical inference. More specifically, they allow analytical considerations to be based on the probability distribution of a statistic, rather than on the joint probability distribution of all the individual sample values.

Introduction: The sampling distribution of a statistic i ...
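A sampling distribution can also be approximated by simulation. The sketch below is illustrative only: it assumes a uniform population on [0, 1), a sample size of 30 and 5000 repeated samples, none of which come from the source, and it uses only the Python standard library.

```python
import math
import random
import statistics


def simulate_sample_means(sample_size: int, num_samples: int, seed: int = 0) -> list[float]:
    """Draw `num_samples` samples from Uniform[0, 1) and return each sample's mean."""
    rng = random.Random(seed)  # seeded so the simulation is reproducible
    means = []
    for _ in range(num_samples):
        sample = [rng.random() for _ in range(sample_size)]
        means.append(statistics.fmean(sample))
    return means


# Assumed settings for the illustration.
means = simulate_sample_means(sample_size=30, num_samples=5000)

# Spread of the simulated sampling distribution of the mean.
observed_sd = statistics.stdev(means)

# Theory for this population: variance 1/12, so the sd of the mean is sqrt(1/12) / sqrt(n).
theoretical_sd = math.sqrt(1 / 12) / math.sqrt(30)

print(f"observed sd of sample means:    {observed_sd:.4f}")
print(f"theoretical sd of sample means: {theoretical_sd:.4f}")
```

The two printed values should be close, which shows how the distribution of a statistic across repeated samples can be studied without reference to the joint distribution of the individual observations.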

Relative Risk Reduction

In epidemiology, the relative risk reduction (RRR) or efficacy is the relative decrease in the risk of an adverse event in the exposed group compared to an unexposed group. It is computed as (I_u - I_e) / I_u, where I_e is the incidence in the exposed group, and I_u is the incidence in the unexposed group. If the risk of an adverse event is increased by the exposure rather than decreased, the term relative risk increase (RRI) is used, and it is computed as (I_e - I_u) / I_u. If the direction of risk change is not assumed, the term relative effect is used, and it is computed in the same way as relative risk increase.

See also: Population Impact Measures; Vaccine efficacy.
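The two formulas above translate directly into code. The following is a small sketch under assumed inputs: the function names and the incidences are hypothetical and serve only to show the arithmetic.

```python
def relative_risk_reduction(i_e: float, i_u: float) -> float:
    """RRR = (I_u - I_e) / I_u, the relative decrease in risk in the exposed group."""
    if i_u <= 0:
        raise ValueError("incidence in the unexposed group must be positive")
    return (i_u - i_e) / i_u


def relative_risk_increase(i_e: float, i_u: float) -> float:
    """RRI = (I_e - I_u) / I_u, also used as the 'relative effect'."""
    if i_u <= 0:
        raise ValueError("incidence in the unexposed group must be positive")
    return (i_e - i_u) / i_u


# Hypothetical incidences for illustration only.
rrr = relative_risk_reduction(i_e=0.15, i_u=0.20)  # exposure lowers risk
rri = relative_risk_increase(i_e=0.25, i_u=0.20)   # exposure raises risk
print(f"RRR = {rrr:.2f}, RRI = {rri:.2f}")
```

Both examples give a relative change of about 25%, even though the absolute change in each case is only 5 percentage points, which is why relative and absolute measures are usually reported together.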

Population Impact Measures

Population impact measures (PIMs) are biostatistical measures of risk and benefit used in epidemiological and public health research. They are used to describe the impact of health risks and benefits in a population, to inform health policy. Frequently used measures of risk and benefit identified by Jekel, Katz and Elmore describe measures of risk difference (attributable risk), rate difference (often expressed as the odds ratio or relative risk), population attributable risk (PAR), and the relative risk reduction, which can be recalculated into a measure of ''absolute benefit'', called the number needed to treat. Population impact measures are an extension of these statistics, as they are measures of absolute risk at the population level: calculations of the number of people in the population who are at risk of being harmed, or who will benefit from public health interventions. They are measures of absolute risk and benefit, producing numbers of people who will benefit fr ...

Statistical Significance

In statistical hypothesis testing, a result has statistical significance when a result at least as "extreme" would be very infrequent if the null hypothesis were true. More precisely, a study's defined significance level, denoted by \alpha, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the ''p''-value of a result, ''p'', is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is said to be ''statistically significant'', by the standards of the study, when p \le \alpha. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error al ...
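The decision rule p \le \alpha can be shown with a short example. The sketch below assumes a two-sided test whose statistic is standard normal under the null hypothesis; that choice of test, the function names and the example statistic 1.96 are assumptions made for illustration and are not taken from the source.

```python
from statistics import NormalDist


def two_sided_p_value(z: float) -> float:
    """Probability, under a standard normal null, of a statistic at least as extreme as z."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def is_significant(p: float, alpha: float = 0.05) -> bool:
    """Apply the rule from the text: significant when p <= alpha."""
    return p <= alpha


# alpha is fixed before looking at the data; 0.05 is used here as a common default.
alpha = 0.05
p = two_sided_p_value(1.96)  # hypothetical observed test statistic
print(f"p = {p:.4f}, significant at alpha = {alpha}: {is_significant(p, alpha)}")
```

A statistic near 1.96 sits right at the conventional 5% boundary, so small changes in the data can move the result across the threshold.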

Standard Score

In statistics, the standard score or ''z''-score is the number of standard deviations by which the value of a raw score (i.e., an observed value or data point) is above or below the mean value of what is being observed or measured. Raw scores above the mean have positive standard scores, while those below the mean have negative standard scores. It is calculated by subtracting the population mean from an individual raw score and then dividing the difference by the population standard deviation. This process of converting a raw score into a standard score is called standardizing or normalizing (however, "normalizing" can refer to many types of ratios; see ''Normalization'' for more). Standard scores are most commonly called ''z''-scores; the two terms may be used interchangeably, as they are in this article. Other equivalent terms in use include z-value, z-statistic, normal score, standardized variable and pull in high energy ...
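The calculation described above is a one-line formula. The following sketch uses a hypothetical raw score of 85 with an assumed population mean of 70 and standard deviation of 10; the function name and all values are illustrative.

```python
def z_score(raw: float, population_mean: float, population_sd: float) -> float:
    """Number of population standard deviations by which `raw` lies above the mean."""
    if population_sd <= 0:
        raise ValueError("the population standard deviation must be positive")
    return (raw - population_mean) / population_sd


# Hypothetical values for illustration only.
above = z_score(85, population_mean=70, population_sd=10)  # raw score above the mean
below = z_score(60, population_mean=70, population_sd=10)  # raw score below the mean
print(above, below)
```

The first score lies above the mean and gets a positive standard score, while the second lies below it and gets a negative one.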

Standard Error

The standard error (SE) of a statistic (usually an estimator of a parameter, like the average or mean) is the standard deviation of its sampling distribution or an estimate of that standard deviation. In other words, it is the standard deviation of the statistic's values, where each value is computed from one sample (a set of observations) drawn from the same population. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The standard error is a key ingredient in producing confidence intervals. The sampling distribution of a mean is generated by repeated sampling from the same population and recording the sample mean per sample. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling distribution of the mean is equal to the variance of the population divided by the sample size. This is because as the sample size increases, sample means cluster more closely arou ...
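Since the variance of the sample mean is the population variance divided by the sample size, the standard error of the mean can be estimated from a single sample as s / sqrt(n). The sketch below uses made-up data and a normal-approximation 95% interval; both are assumptions for illustration, and for a sample this small a t-based interval would usually be preferred.

```python
import math
import statistics


def standard_error_of_mean(sample: list[float]) -> float:
    """Estimate the SEM as the sample standard deviation divided by sqrt(n)."""
    if len(sample) < 2:
        raise ValueError("at least two observations are needed")
    return statistics.stdev(sample) / math.sqrt(len(sample))


# Hypothetical observations, for illustration only.
sample = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]

mean = statistics.fmean(sample)
sem = standard_error_of_mean(sample)

# Rough 95% interval under a normal approximation (an assumption of this sketch).
z = statistics.NormalDist().inv_cdf(0.975)
interval = (mean - z * sem, mean + z * sem)
print(f"mean = {mean:.2f}, SEM = {sem:.3f}, approx. 95% CI = ({interval[0]:.2f}, {interval[1]:.2f})")
```

Quadrupling the sample size would halve the standard error, which is the square-root relationship implied by dividing the variance by n.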

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}. The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma^2 is the variance. The standard deviation of the distribution is \sigma (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution c ...
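The Gaussian density with mean mu and standard deviation sigma can be evaluated directly from its standard closed form. The sketch below is illustrative: the function name is hypothetical, and the result is cross-checked against `statistics.NormalDist` from the Python standard library.

```python
import math
from statistics import NormalDist


def normal_pdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Density of a normal distribution with mean `mu` and standard deviation `sigma`."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    variance = sigma ** 2
    normalizer = 1.0 / math.sqrt(2.0 * math.pi * variance)
    return normalizer * math.exp(-((x - mu) ** 2) / (2.0 * variance))


# Compare the hand-written formula with the standard-library implementation.
ours = normal_pdf(0.5, mu=1.0, sigma=2.0)
reference = NormalDist(mu=1.0, sigma=2.0).pdf(0.5)
print(ours, reference)
```

The density peaks at x = mu, where it equals 1 / sqrt(2 * pi * sigma^2), and is symmetric around that point.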

Point Estimate

In statistics, point estimation involves the use of sample data to calculate a single value (known as a point estimate since it identifies a point in some parameter space) which is to serve as a "best guess" or "best estimate" of an unknown population parameter (for example, the population mean). More formally, it is the application of a point estimator to the data to obtain a point estimate. Point estimation can be contrasted with interval estimation: such interval estimates are typically either confidence intervals, in the case of frequentist inference, or credible intervals, in the case of Bayesian inference. More generally, a point estimator can be contrasted with a set estimator. Examples are given by confidence sets or credible sets. A point estimator can also be contrasted with a distribution estimator. Examples are given by confidence distributions, randomized estimators, and Bayesian posteriors.

Properties of point estimates (biasedness): “Bias” is define ...

Risk

In simple terms, risk is the possibility of something bad happening. Risk involves uncertainty about the effects/implications of an activity with respect to something that humans value (such as health, well-being, wealth, property or the environment), often focusing on negative, undesirable consequences. Many different definitions have been proposed. One international standard definition of risk is the "effect of uncertainty on objectives". The understanding of risk, the methods of assessment and management, the descriptions of risk and even the definitions of risk differ in different practice areas (business, economics, environment, finance, information technology, health, insurance, safety, security, privacy, etc.). This article provides links to more detailed articles on these areas. The international standard for risk management, ISO 31000, provides principles and general guidelines on managing risks faced by organizations. Defi ...

Scientific Control

A scientific control is an experiment or observation designed to minimize the effects of variables other than the independent variable (i.e. confounding variables). This increases the reliability of the results, often through a comparison between control measurements and the other measurements. Scientific controls are a part of the scientific method.

Controlled experiments: Controls eliminate alternate explanations of experimental results, especially experimental errors and experimenter bias. Many controls are specific to the type of experiment being performed, as in the molecular markers used in SDS-PAGE experiments, and may simply have the purpose of ensuring that the equipment is working properly. The selection and use of proper controls to ensure that experimental results are valid (for example, absence of confounding variables) can be very difficult. Control measurements may also be used for other purposes: for example, a measurement of a microphone's background noise in ...