
Unbiased Estimation Of Standard Deviation

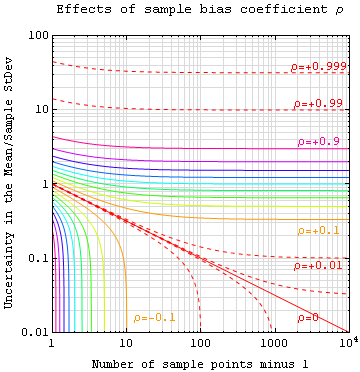

In statistics, and in particular statistical theory, unbiased estimation of a standard deviation is the calculation, from a statistical sample, of an estimated value of the standard deviation (a measure of statistical dispersion) of a population of values, in such a way that the expected value of the calculation equals the true value. Except in some important situations, outlined later, the task has little relevance to applications of statistics, since the need for it is avoided by standard procedures such as significance tests and confidence intervals, or by Bayesian analysis. For statistical theory, however, it provides an exemplar problem in estimation theory that is simple to state but for which results cannot be obtained in closed form. It also provides an example where imposing the requirement of unbiased estimation might be seen as adding inconvenience with no real benefit. Motivation: In statistics, the standard deviation of a population ...
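As a rough illustration of the bias described above, the following sketch simulates normal samples and compares the average sample standard deviation with the true value. It assumes normally distributed data and uses the standard correction factor c4(n) = sqrt(2/(n-1)) Γ(n/2)/Γ((n-1)/2), which is not stated in the excerpt; the function names and the chosen n, sigma, and seed are illustrative only.

```python
import math
import random


def c4(n: int) -> float:
    """Correction factor with E[s] = c4(n) * sigma, assuming normal samples of size n."""
    log_ratio = math.lgamma(n / 2) - math.lgamma((n - 1) / 2)
    return math.sqrt(2.0 / (n - 1)) * math.exp(log_ratio)


def sample_sd(xs):
    """Sample standard deviation with the usual n - 1 divisor."""
    m = sum(xs) / len(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / (len(xs) - 1))


def mean_sample_sd(n, sigma, trials, seed=0):
    """Average the sample SD over many simulated normal samples."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += sample_sd([rng.gauss(0.0, sigma) for _ in range(n)])
    return total / trials


n, sigma = 5, 2.0  # hypothetical sample size and true SD
avg_s = mean_sample_sd(n, sigma, trials=20000)
print("simulated E[s] / sigma:", round(avg_s / sigma, 3))
print("c4(n):", round(c4(n), 4))
print("bias-corrected average:", round(avg_s / c4(n), 3))
```

Under these assumptions the uncorrected average falls below sigma, and dividing by c4(n) brings it back close to the true value.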

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Variance

In probability theory and statistics, variance is the expected value of the squared deviation from the mean of a random variable. The standard deviation (SD) is obtained as the square root of the variance. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. It is the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by \sigma^2, s^2, \operatorname{Var}(X), V(X), or \mathbb{V}(X). An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviation; for example, the variance of a sum of uncorrelated random variables is equal to the sum of their variances. A disadvantage of the variance for practical applications is that, unlike the standard deviation, its units differ from those of the random variable, which is why the standard devi ...
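The additivity property mentioned above can be checked exactly on a small case. The sketch below uses two independent (hence uncorrelated) fair dice as an assumed example and computes variances with exact fractions; the helper name `variance` is illustrative.

```python
from fractions import Fraction
from itertools import product


def variance(outcomes):
    """Population variance of a list of equally likely outcomes."""
    n = len(outcomes)
    mean = Fraction(sum(outcomes), n)
    return sum((Fraction(x) - mean) ** 2 for x in outcomes) / n


die = list(range(1, 7))
var_one_die = variance(die)

# All 36 equally likely sums of two independent dice.
sums = [a + b for a, b in product(die, die)]
var_sum = variance(sums)

print(var_one_die, var_sum)
print(var_sum == 2 * var_one_die)
```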

Maximum Likelihood

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when the random errors are assumed to have normal distributions with the same variance. From the perspective of Bayesian inference, ML ...
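A minimal sketch of a case where the first-order conditions can be solved analytically: for an assumed normal model, the maximum likelihood estimates are the sample mean and the standard deviation computed with divisor n (not n - 1). The data values are made up for illustration, and the check simply confirms that nearby parameter values give a lower log-likelihood.

```python
import math


def normal_log_likelihood(data, mu, sigma):
    """Log-likelihood of i.i.d. normal observations with mean mu and SD sigma."""
    n = len(data)
    squared_error = sum((x - mu) ** 2 for x in data)
    return (-n * math.log(sigma)
            - 0.5 * n * math.log(2 * math.pi)
            - squared_error / (2 * sigma ** 2))


data = [2.1, 2.9, 3.4, 4.0, 5.6]  # made-up observations
n = len(data)

# Closed-form maximum likelihood estimates for the normal model.
mu_hat = sum(data) / n
sigma_hat = math.sqrt(sum((x - mu_hat) ** 2 for x in data) / n)

best = normal_log_likelihood(data, mu_hat, sigma_hat)
nearby = [
    normal_log_likelihood(data, mu_hat + d_mu, sigma_hat + d_sigma)
    for d_mu, d_sigma in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]
]
print(all(value < best for value in nearby))
```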

Statistically Independent

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. M ...
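The distinction between pairwise and mutual independence can be seen in a standard small example, sketched here under the assumption of two fair coin tosses: let A be "first toss is heads", B be "second toss is heads", and C be "the two tosses differ". The event names and helper `prob` are illustrative.

```python
from fractions import Fraction
from itertools import product

# Four equally likely outcomes of two fair coin tosses (1 = heads).
outcomes = list(product([0, 1], repeat=2))


def prob(event):
    """Probability of an event given as a predicate on an outcome."""
    return Fraction(sum(1 for w in outcomes if event(w)), len(outcomes))


def A(w):
    return w[0] == 1


def B(w):
    return w[1] == 1


def C(w):
    return w[0] != w[1]


# Pairwise independence: P(E and F) == P(E) * P(F) for every pair.
pairwise = all(
    prob(lambda w: e(w) and f(w)) == prob(e) * prob(f)
    for e, f in [(A, B), (A, C), (B, C)]
)

# Mutual independence additionally requires the triple product rule.
p_all = prob(lambda w: A(w) and B(w) and C(w))
mutual = pairwise and p_all == prob(A) * prob(B) * prob(C)

print(pairwise, mutual)
```

In this example every pair of events satisfies the product rule, but all three cannot occur together, so the triple product rule fails.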

Standard Error (statistics)

The standard error (SE) of a statistic (usually an estimator of a parameter, like the average or mean) is the standard deviation of its sampling distribution or an estimate of that standard deviation. In other words, it is the standard deviation of the values the statistic takes across repeated samples, each a set of observations drawn from the same population. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The standard error is a key ingredient in producing confidence intervals. The sampling distribution of a mean is generated by repeated sampling from the same population and recording the sample mean of each sample. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling distribution of the mean is equal to the variance of the population divided by the sample size. This is because as the sample size increases, sample means cluster more closely aro ...
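The relationship between the population variance and the variance of the sample mean can be sketched by simulation. The example below assumes a normal population with made-up parameters and compares the empirical standard deviation of many sample means with sigma / sqrt(n); the function name and seed are illustrative.

```python
import math
import random
import statistics


def sd_of_sample_means(n, sigma, trials, seed=1):
    """Draw many samples of size n and return the SD of their means."""
    rng = random.Random(seed)
    means = [
        sum(rng.gauss(10.0, sigma) for _ in range(n)) / n
        for _ in range(trials)
    ]
    return statistics.pstdev(means)


n, sigma = 25, 3.0  # hypothetical sample size and population SD
empirical = sd_of_sample_means(n, sigma, trials=5000)
theoretical = sigma / math.sqrt(n)

print("empirical SEM:", round(empirical, 3))
print("sigma / sqrt(n):", theoretical)
```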

Statistical Quality Control

Statistical process control (SPC) or statistical quality control (SQC) is the application of statistical methods to monitor and control the quality of a production process. This helps to ensure that the process operates efficiently, producing more specification-conforming products with less waste (scrap). SPC can be applied to any process where the "conforming product" (product meeting specifications) output can be measured. Key tools used in SPC include run charts, control charts, a focus on continuous improvement, and the design of experiments. Manufacturing lines are an example of a process where SPC is applied. SPC must be practiced in two phases: the first phase is the initial establishment of the process, and the second phase is the regular production use of the process. In the second phase, the period to be examined must be decided, depending upon the change in 5M&E conditions (Man, Machine, Material, Method, Movement, Environment) and wear rate of parts used in t ...

Gamma Function

In mathematics, the gamma function (represented by Γ, the capital Greek letter gamma) is the most common extension of the factorial function to complex numbers. Derived by Daniel Bernoulli, the gamma function \Gamma(z) is defined for all complex numbers z except non-positive integers, and for every positive integer z=n, \Gamma(n) = (n-1)!\,. The gamma function can be defined via a convergent improper integral for complex numbers with positive real part: \Gamma(z) = \int_0^\infty t^{z-1} e^{-t}\,\mathrm{d}t, \qquad \Re(z) > 0\,. The gamma function then is defined in the complex plane as the analytic continuation of this integral function: it is a meromorphic function which is holomorphic except at zero and the negative integers, where it has simple poles. The gamma function has no zeros, so the reciprocal gamma function is an entire function. In fact, the gamma function corresponds to the Mellin transform of the negative exponential functi ...
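The two characterizations above, the factorial identity and the integral definition, can be checked numerically. This sketch uses Python's built-in `math.gamma` and a plain midpoint rule; the helper `gamma_integral`, its truncation point, and its step count are assumptions made for illustration, and it is restricted to real z >= 1 so the integrand stays bounded near zero.

```python
import math


def gamma_integral(z, upper=60.0, steps=200_000):
    """Midpoint-rule approximation of the integral of t**(z-1) * exp(-t) over [0, upper]."""
    h = upper / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * h
        total += t ** (z - 1) * math.exp(-t)
    return h * total


# Gamma(n) = (n - 1)! for positive integers n.
for n in range(1, 8):
    print(n, math.gamma(n), math.factorial(n - 1))

# The integral definition agrees with math.gamma at a non-integer argument.
z = 3.5
print(gamma_integral(z), math.gamma(z))
```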

Chi Distribution

In probability theory and statistics, the chi distribution is a continuous probability distribution over the non-negative real line. It is the distribution of the positive square root of a sum of squared independent Gaussian random variables. Equivalently, it is the distribution of the Euclidean distance between a multivariate Gaussian random variable and the origin. The chi distribution describes the positive square roots of a variable obeying a chi-squared distribution. If Z_1, \ldots, Z_k are k independent, normally distributed random variables with mean 0 and standard deviation 1, then the statistic Y = \sqrt{\sum_{i=1}^k Z_i^2} is distributed according to the chi distribution. The chi distribution has one positive integer parameter k, which specifies the degrees of freedom (i.e. the number of random variables Z_i). The most familiar examples are the Rayleigh distribution (chi distribution with two degrees of freedom) and the Maxwell–Boltzmann distribution of the molecular speeds i ...
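The construction of Y from standard normal variables can be simulated directly. The sketch below draws values of Y for k = 2 and compares their average with the known mean of the chi distribution, sqrt(2) Γ((k+1)/2) / Γ(k/2), a formula that is not given in the excerpt; function names and the seed are illustrative.

```python
import math
import random


def chi_sample(k, rng):
    """One draw of Y = sqrt(Z_1^2 + ... + Z_k^2) with standard normal Z_i."""
    return math.sqrt(sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k)))


def chi_mean(k):
    """Mean of the chi distribution with k degrees of freedom."""
    return math.sqrt(2.0) * math.exp(math.lgamma((k + 1) / 2) - math.lgamma(k / 2))


rng = random.Random(42)
k = 2  # Rayleigh case
draws = [chi_sample(k, rng) for _ in range(20000)]
empirical_mean = sum(draws) / len(draws)

print("empirical mean:", round(empirical_mean, 3))
print("theoretical mean:", round(chi_mean(k), 4))
```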

Degrees Of Freedom (statistics)

In statistics, the number of degrees of freedom is the number of values in the final calculation of a statistic that are free to vary. Estimates of statistical parameters can be based upon different amounts of information or data. The number of independent pieces of information that go into the estimate of a parameter is called the degrees of freedom. In general, the degrees of freedom of an estimate of a parameter are equal to the number of independent scores that go into the estimate minus the number of parameters used as intermediate steps in the estimation of the parameter itself. For example, if the variance is to be estimated from a random sample of N independent scores, then the degrees of freedom is equal to the number of independent scores (N) minus the number of parameters estimated as intermediate steps (one, namely, the sample mean) and is therefore equal to N-1. Mathematically, degrees of freedom is the number of dimensions of the domain of a random vector, or e ...
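The N - 1 count in the variance example can be made concrete: once the sample mean is fixed, the deviations from it must sum to zero, so the last deviation is determined by the other N - 1. The data below are made up for illustration.

```python
data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # made-up scores
n = len(data)
mean = sum(data) / n

# Deviations from the sample mean always sum to zero.
deviations = [x - mean for x in data]

# So the last deviation is fixed by the first n - 1 of them.
last_from_rest = -sum(deviations[:-1])

sum_sq = sum(d * d for d in deviations)
var_divisor_n = sum_sq / n            # divides by the number of scores
var_divisor_n_minus_1 = sum_sq / (n - 1)  # divides by the degrees of freedom

print(sum(deviations), last_from_rest, deviations[-1])
print(var_divisor_n, var_divisor_n_minus_1)
```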

Chi-squared Distribution

In probability theory and statistics, the \chi^2-distribution with k degrees of freedom is the distribution of a sum of the squares of k independent standard normal random variables. The chi-squared distribution \chi^2_k is a special case of the gamma distribution and the univariate Wishart distribution. Specifically, if X \sim \chi^2_k then X \sim \text{Gamma}(\alpha=\frac{k}{2}, \theta=2) (where \alpha is the shape parameter and \theta the scale parameter of the gamma distribution) and X \sim \text{W}_1(1,k). The scaled chi-squared distribution s^2 \chi^2_k is a reparametrization of the gamma distribution and the univariate Wishart distribution. Specifically, if X \sim s^2 \chi^2_k then X \sim \text{Gamma}(\alpha=\frac{k}{2}, \theta=2 s^2) and X \sim \text{W}_1(s^2,k). The chi-squared distribution is one of the most widely used probability distributions in inferential statistics, notably in hypothesis testing and in constru ...
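The gamma relationship above can be sketched by simulation: sums of k squared standard normals and draws from a gamma distribution with shape k/2 and scale 2 should share the same mean (k) and variance (2k). The example relies on `random.gammavariate(alpha, beta)`, whose second argument is the scale parameter; the chosen k, seed, and helper names are illustrative.

```python
import random


def chi2_from_normals(k, rng):
    """One draw of a sum of k squared standard normal variables."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))


def mean_and_variance(xs):
    """Sample mean and population-style variance of a list of numbers."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / len(xs)


rng = random.Random(7)
k, trials = 4, 20000

from_normals = [chi2_from_normals(k, rng) for _ in range(trials)]
from_gamma = [rng.gammavariate(k / 2, 2.0) for _ in range(trials)]

print("sum of squares:", mean_and_variance(from_normals))
print("Gamma(k/2, 2):  ", mean_and_variance(from_gamma))
```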

Cochran's Theorem

In statistics, Cochran's theorem, devised by William G. Cochran, is a theorem used to justify results relating to the probability distributions of statistics that are used in the analysis of variance. Examples: Sample mean and sample variance. If X_1, ..., X_n are independent normally distributed random variables with mean \mu and standard deviation \sigma, then U_i = \frac{X_i-\mu}{\sigma} is standard normal for each i. Note that the total Q is equal to the sum of squared U's, as shown here: \sum_i Q_i = \sum_{ijk} U_j B_{jk}^{(i)} U_k = \sum_{jk} U_j U_k \sum_i B_{jk}^{(i)} = \sum_{jk} U_j U_k \delta_{jk} = \sum_j U_j^2, which stems from the original assumption that B^{(1)} + B^{(2)} + \ldots = I. So instead we will calculate this quantity and later separate it into Q_i's. It is possible to write \sum_{i=1}^n U_i^2 = \sum_{i=1}^n \left(\frac{X_i-\overline{X}}{\sigma}\right)^2 + n\left(\frac{\overline{X}-\mu}{\sigma}\right)^2 (here \overline{X} is the sample mean). To see this identity, multiply throughout by \sigma^2 and note that \sum(X_i-\mu)^2 = \sum(X_i-\overline{X}+\overlin ...
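The decomposition of the sum of squares, after multiplying through by \sigma^2, can be checked on a small numeric case. The data and the value of the mean mu below are made up for illustration; the check only confirms the algebraic identity, not the distributional claims of the theorem.

```python
data = [1.0, 2.0, 3.0, 6.0]  # made-up observations
mu = 2.5                     # hypothetical population mean
n = len(data)
xbar = sum(data) / n         # sample mean

# Left-hand side: squared deviations from the population mean.
total = sum((x - mu) ** 2 for x in data)

# Right-hand side: deviations from the sample mean, plus the
# squared offset of the sample mean from the population mean.
within = sum((x - xbar) ** 2 for x in data)
between = n * (xbar - mu) ** 2

print(total, within, between, within + between)
```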