TOP500

The TOP500 project ranks and details the 500 most powerful non-distributed computer systems in the world. The project was started in 1993 and publishes an updated list of the supercomputers twice a year. The first of these updates always coincides with the International Supercomputing Conference in June, and the second is presented at the ACM/IEEE Supercomputing Conference in November. The project aims to provide a reliable basis for tracking and detecting trends in high-performance computing and bases its rankings on HPL, a portable implementation of the high-performance LINPACK benchmark written in Fortran for distributed-memory computers. The most recent edition of TOP500 was published in June 2025 as the 65th edition, and the next will be published in November 2025 as the 66th. As of June 2025, the United States' El Capitan ...

Supercomputer

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2022, supercomputers have existed that can perform over 10^18 FLOPS, so-called exascale supercomputers. For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10^11) to tens of teraFLOPS (10^13). Since November 2017, all of the world's fastest 500 supercomputers have run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in various fields, ...

FLOPS

Floating-point operations per second (FLOPS, flops or flop/s) is a measure of computer performance, useful in fields of scientific computation that require floating-point calculations. For such cases, it is a more accurate measure than instructions per second.

Floating-point arithmetic is needed for very large or very small real numbers, or for computations that require a large dynamic range. Floating-point representation is similar to scientific notation, except that computers use base two (with rare exceptions) rather than base ten. The encoding scheme stores the sign, the exponent (in base two for Cray and VAX, base two or ten for IEEE floating-point formats, and base 16 for IBM Floating Point Architecture) and the significand (number after the radix point). While several similar formats are in use, the most common is ANSI/IEEE Std. 754-1985. This standard defines the format for 32-bit numbers called single precision, a ...
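The sign, exponent and significand fields described above can be inspected directly. The following is a minimal sketch, assuming the standard IEEE 754 single-precision layout of 1 sign bit, 8 exponent bits (bias 127) and 23 fraction bits; the excerpt itself is cut off before giving these widths, and the function names are illustrative only.

```python
import struct

def decompose_float32(x: float) -> tuple[int, int, int]:
    """Round x to IEEE 754 single precision and return (sign, exponent, fraction).

    Assumed layout (not stated in the excerpt): 1 sign bit, 8 exponent bits
    stored with a bias of 127, and 23 fraction bits.
    """
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31
    exponent = (bits >> 23) & 0xFF
    fraction = bits & 0x7FFFFF
    return sign, exponent, fraction

def rebuild_normal(sign: int, exponent: int, fraction: int) -> float:
    """Reassemble a normal (non-zero, non-subnormal, finite) value from its fields."""
    significand = 1 + fraction / 2**23      # implicit leading 1 for normal numbers
    return (-1.0) ** sign * significand * 2.0 ** (exponent - 127)

# -6.5 is -1.625 x 2^2, so the stored exponent is 2 + 127 = 129
# and the fraction field is 0.625 x 2^23 = 5242880.
fields = decompose_float32(-6.5)
print(fields)                   # (1, 129, 5242880)
print(rebuild_normal(*fields))  # -6.5
```

The sketch handles normal numbers only; zeros, subnormals, infinities and NaNs use reserved exponent values and would need separate cases.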

HPL (benchmark)

The LINPACK benchmarks are a measure of a system's floating-point computing power. Introduced by Jack Dongarra, they measure how fast a computer solves a dense n × n system of linear equations Ax = b, which is a common task in engineering. The latest version of these benchmarks is used to build the TOP500 list, ranking the world's most powerful supercomputers. The aim is to approximate how fast a computer will perform when solving real problems. It is a simplification, since no single computational task can reflect the overall performance of a computer system. Nevertheless, the LINPACK benchmark performance can provide a good correction over the peak performance provided by the manufacturer. The peak performance is the maximal theoretical performance a computer can achieve, calculated as the machine's frequency, in cycles per second, times the number of operations per cycle it can perform. The actual performance will always be lower than the ...
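The two quantities contrasted above, theoretical peak and measured LINPACK-style performance, can be sketched in a few lines. This is an illustration under stated assumptions, not the HPL code: the `cores` multiplier is an added assumption for multi-core machines, the solver is a plain Gaussian elimination in pure Python, and the operation count 2/3·n³ + 2·n² is the conventional LINPACK figure rather than something given in the excerpt.

```python
import time

def theoretical_peak_flops(clock_hz: float, flops_per_cycle: float, cores: int = 1) -> float:
    """Peak = frequency x operations per cycle (x cores, an added assumption)."""
    return clock_hz * flops_per_cycle * cores

def solve_dense(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve Ax = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]   # augmented matrix copy
    for k in range(n):
        pivot = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[pivot] = m[pivot], m[k]
        for i in range(k + 1, n):
            factor = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= factor * m[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):                        # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def linpack_flop_count(n: int) -> float:
    """Conventional operation count credited for solving a dense n x n system."""
    return (2.0 / 3.0) * n**3 + 2.0 * n**2

def measured_flops(n: int = 60) -> float:
    """Time one solve of a diagonally dominant test system and return FLOPS."""
    a = [[float(n) if i == j else 1.0 / (1 + abs(i - j)) for j in range(n)]
         for i in range(n)]
    b = [sum(row) for row in a]                         # exact solution is all ones
    start = time.perf_counter()
    solve_dense(a, b)
    elapsed = time.perf_counter() - start
    return linpack_flop_count(n) / elapsed

# Hypothetical machine: 2 GHz, 16 operations per cycle, 4 cores.
print(f"peak:     {theoretical_peak_flops(2e9, 16, 4):.3e} FLOPS")
print(f"measured: {measured_flops():.3e} FLOPS (pure Python, far below peak)")
```

The gap between the two printed numbers illustrates, in exaggerated form, why a measured benchmark is a useful correction to a manufacturer's peak figure.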

Summit (supercomputer)

Summit or OLCF-4 was a supercomputer developed by IBM for use at the Oak Ridge Leadership Computing Facility (OLCF), a facility at the Oak Ridge National Laboratory, United States of America. It held the number 1 position on the TOP500 list from June 2018 to June 2020. As of June 2024, its LINPACK benchmark was clocked at 148.6 petaFLOPS. Summit was decommissioned on November 15, 2024. As of November 2019, the supercomputer ranked as the 5th most energy efficient in the world, with a measured power efficiency of 14.668 gigaFLOPS/watt. Summit was the first supercomputer to reach exaflop (a quintillion operations per second) speed on a non-standard metric, achieving 1.88 exaflops during a genomic analysis, and was expected to reach 3.3 exaflops using mixed-precision calculations.

History: The United States Department of Energy awarded a $325 million contract in November 2014 to IBM, Nvidia and Mellanox. The effort resulted in construction of Summit ...

EMT64

x86-64 (also known as x64, x86_64, AMD64, and Intel 64) is a 64-bit extension of the x86 instruction set. It was announced in 1999 and first became available in the AMD Opteron family in 2003. It introduces two new operating modes, 64-bit mode and compatibility mode, along with a new four-level paging mechanism. In 64-bit mode, x86-64 supports significantly larger amounts of virtual memory and physical memory compared to its 32-bit predecessors, allowing programs to utilize more memory for data storage. The architecture expands the number of general-purpose registers from 8 to 16, all fully general-purpose, and extends their width to 64 bits. Floating-point arithmetic is supported through mandatory SSE2 instructions in 64-bit mode. While the older x87 FPU and MMX registers are still available, they are generally superseded by a set of sixteen 128-bit vector registers (XMM registers). Each of these vector registers can store one or two double-precision floating-point numbers, ...

X86-64

x86-64 (also known as x64, x86_64, AMD64, and Intel 64) is a 64-bit extension of the x86 instruction set. It was announced in 1999 and first became available in the AMD Opteron family in 2003. It introduces two new operating modes, 64-bit mode and compatibility mode, along with a new four-level paging mechanism. In 64-bit mode, x86-64 supports significantly larger amounts of virtual memory and physical memory compared to its 32-bit predecessors, allowing programs to utilize more memory for data storage. The architecture expands the number of general-purpose registers from 8 to 16, all fully general-purpose, and extends their width to 64 bits. Floating-point arithmetic is supported through mandatory SSE2 instructions in 64-bit mode. While the older x87 FPU and MMX registers are still available, they are generally superseded by a set of sixteen 128-bit vector registers (XMM registers). Each of these vector registers ...

Green500

The Green500 is a biannual ranking of the supercomputers on the TOP500 list in terms of energy efficiency. The list measures performance per watt, using the TOP500 measure of High-Performance LINPACK benchmark results in double-precision floating-point format.

History: The Green500 List was created in 2006 by Kirk W. Cameron and Wu-chun Feng, then both associate professors in Computer Science at Virginia Tech. The power measurement techniques that form the basis of the run rules were based on Cameron's early work in supercomputer energy efficiency, initially funded by the National Science Foundation (Awards: #0347683, #0614705). The first Green500 List was presented at the 2007 ACM/IEEE conference on Supercomputing and described in the December issue of IEEE Computer 40(12): 50-55 (2007). The list was initially met with some controversy since several key stakeholders (e.g., SGI, DOE Laboratories) failed to submit measurements by the report deadlines and were deprecated ...
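The performance-per-watt metric described above amounts to dividing a benchmark result by the power drawn while running it, then sorting on that ratio. The sketch below illustrates this with invented systems and figures; none of the names or numbers come from an actual list, and the official run rules for measuring power are not reproduced here.

```python
from typing import NamedTuple

class System(NamedTuple):
    name: str            # hypothetical system name
    rmax_flops: float    # measured LINPACK performance, in FLOPS
    power_watts: float   # average power during the run, in watts

def gflops_per_watt(system: System) -> float:
    """Energy efficiency in gigaFLOPS per watt."""
    return system.rmax_flops / system.power_watts / 1e9

def rank_by_efficiency(systems: list[System]) -> list[System]:
    """Order systems from most to least energy efficient."""
    return sorted(systems, key=gflops_per_watt, reverse=True)

# Invented figures for illustration only.
systems = [
    System("big-and-hungry", rmax_flops=100e15, power_watts=10e6),  # 10 GFLOPS/W
    System("small-and-lean", rmax_flops=2e15, power_watts=50e3),    # 40 GFLOPS/W
    System("middling", rmax_flops=20e15, power_watts=1e6),          # 20 GFLOPS/W
]

for s in rank_by_efficiency(systems):
    print(f"{s.name:15s} {gflops_per_watt(s):6.1f} GFLOPS/W")
```

Note that the fastest system in raw FLOPS ranks last here, which is the kind of contrast with a pure performance ranking that an efficiency list is meant to expose.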

El Capitan (supercomputer)

Hewlett Packard Enterprise El Capitan is an exascale supercomputer, hosted at the Lawrence Livermore National Laboratory in Livermore, California, United States, that became operational in 2024. It is based on the Cray EX Shasta architecture. El Capitan displaced Frontier as the world's fastest supercomputer in the 64th edition of the TOP500 (Nov. 2024). El Capitan is the third exascale system deployed by the United States, and its primary purpose is to support the stockpile stewardship program of the US National Nuclear Security Administration.

Design: El Capitan uses a combined 11,039,616 CPU and GPU cores, consisting of 43,808 AMD 4th Gen EPYC 24C "Genoa" 24-core 1.8 GHz CPUs (1,051,392 cores) and 43,808 AMD Instinct MI300A GPUs (9,988,224 cores). The MI300A consists of 24 Zen4-based CPU cores and a CDNA3-based GPU integrated onto a single organic package, along with 128 GB of HBM3 memory. Blades are interconnected by an HPE Slingshot 64-port switch that provide ...
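The core counts quoted in the design description can be cross-checked with simple arithmetic. In the sketch below, the per-package GPU core figure is not stated in the excerpt; it is derived by dividing the quoted GPU core total by the number of packages, so it should be read as an inference rather than a published specification.

```python
PACKAGES = 43_808               # MI300A packages, from the text
CPU_CORES_PER_PACKAGE = 24      # Zen4-based CPU cores per package, from the text
GPU_CORES_TOTAL = 9_988_224     # total GPU cores, from the text
COMBINED_TOTAL = 11_039_616     # combined CPU and GPU cores, from the text

cpu_cores_total = PACKAGES * CPU_CORES_PER_PACKAGE

# Derived, not quoted: GPU cores per package implied by the totals above.
gpu_cores_per_package, remainder = divmod(GPU_CORES_TOTAL, PACKAGES)

print(cpu_cores_total)                     # 1051392
print(gpu_cores_per_package, remainder)    # 228 0
print(cpu_cores_total + GPU_CORES_TOTAL)   # 11039616
```

The three quoted totals are mutually consistent: the CPU and GPU counts sum to the combined figure, and the GPU total divides evenly across the packages.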

National Energy Research Scientific Computing Center

The National Energy Research Scientific Computing Center (NERSC) is a high-performance computing (supercomputer) research facility that was founded in 1974. The National User Facility is operated by Lawrence Berkeley National Laboratory for the United States Department of Energy Office of Science.

Mission: The mission is to establish a computing center for the Office of Science. NERSC houses high-performance computing and data systems which can be used by 9,000 scientists at national laboratories and universities around the country. Research at NERSC focuses on fundamental and applied research in energy efficiency, storage and generation; Earth systems science; and the understanding of the fundamental forces of nature and the Universe. The largest research areas are High Energy Physics, Materials Science, Chemical Sciences, Climate and Environmental Sciences, Nuclear Physics, and Fusion Energy research.

History: NERSC was founded in 1974 as the Controlled Thermonuclear Research Com ...

Connection Machine

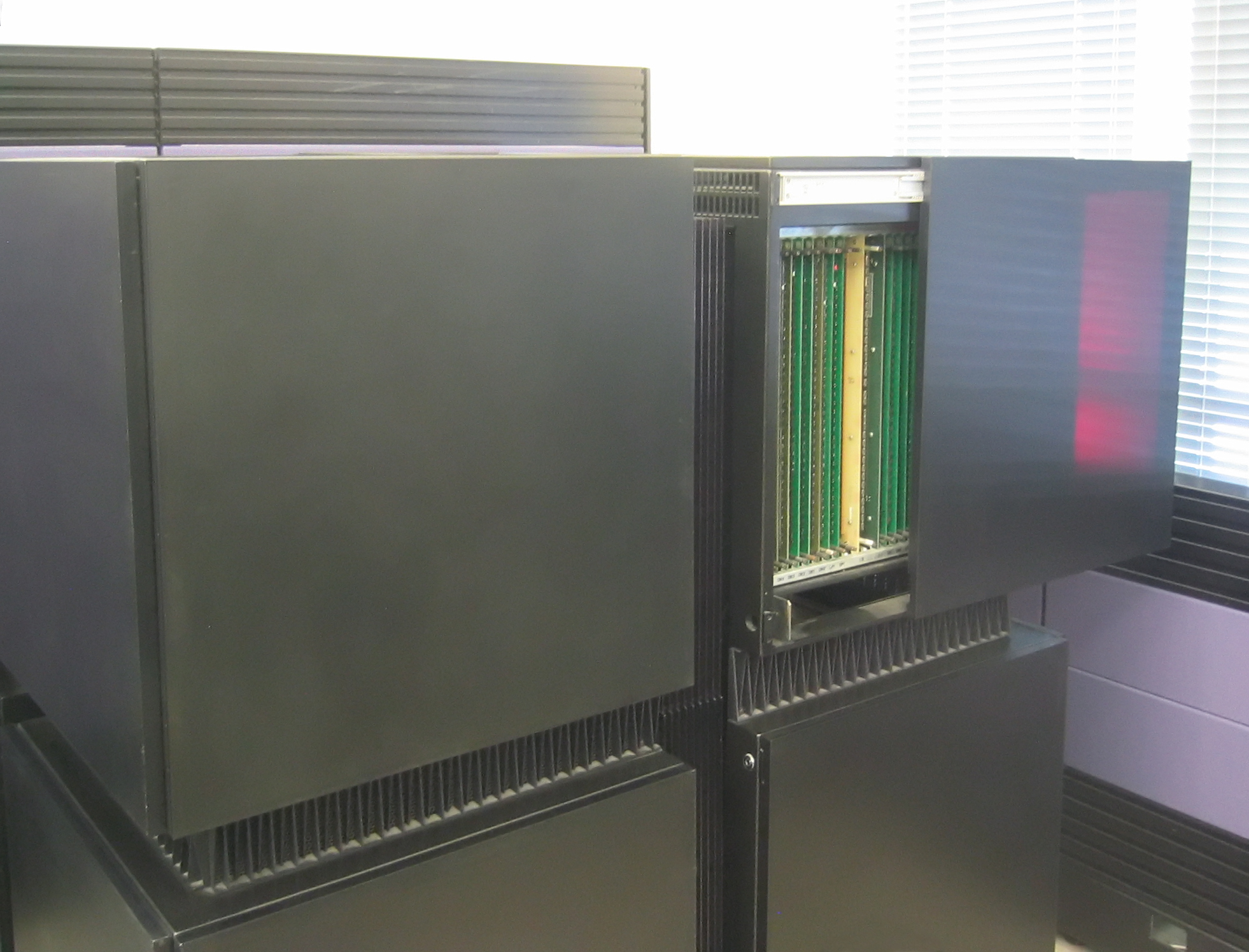

The Connection Machine (CM) is a member of a series of massively parallel supercomputers sold by Thinking Machines Corporation. The idea for the Connection Machine grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at the Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were originally intended for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science.

Origin of idea: Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electric ...