
Sample Maximum And Minimum

In statistics, the sample maximum and sample minimum, also called the largest observation and smallest observation, are the values of the greatest and least elements of a sample. They are basic summary statistics, used in descriptive statistics such as the five-number summary and Bowley's seven-figure summary and the associated box plot. The minimum and the maximum value are the first and last order statistics (often denoted X_{(1)} and X_{(n)} respectively, for a sample size of n). If the sample has outliers, they necessarily include the sample maximum or sample minimum, or both, depending on whether they are extremely high or low. However, the sample maximum and minimum need not be outliers, if they are not unusually far from other observations. Robustness The sample maximum and minimum are the least robust statistics: they are maximally sensitive to outliers. This can either be an advan ...
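As a minimal sketch of the description above (the function name `sample_extremes` and the data values are illustrative assumptions, not part of the source), the extremes can be read off as the first and last order statistics, and a single extreme observation is enough to move one of them:

```python
def sample_extremes(sample):
    """Return (minimum, maximum) as the first and last order statistics."""
    ordered = sorted(sample)          # order statistics X_(1) <= ... <= X_(n)
    return ordered[0], ordered[-1]


# Hypothetical measurements, chosen only for illustration.
data = [4.1, 3.8, 4.4, 4.0, 3.9]
low, high = sample_extremes(data)

# Appending one unusually large value changes the maximum immediately,
# while the minimum is unaffected.
low_out, high_out = sample_extremes(data + [40.0])
```

Here the added value becomes the new sample maximum, which illustrates why the extremes are described as maximally sensitive to outliers.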

Maximum Absolute Deviation

The average absolute deviation (AAD) of a data set is the average of the absolute deviations from a central point. It is a summary statistic of statistical dispersion or variability. In the general form, the central point can be a mean, median, mode, or the result of any other measure of central tendency or any reference value related to the given data set. AAD includes the mean absolute deviation and the median absolute deviation (both abbreviated as MAD). Measures of dispersion Several measures of statistical dispersion are defined in terms of the absolute deviation. The term "average absolute deviation" does not uniquely identify a measure of statistical dispersion, as there are several measures that can be used to measure absolute deviations, and there are several measures of central tendency that can be used as well. Thus, to uniquely identify the absolute deviation it is necessary to specify both the measure of deviation and the measure of central tendency. The sta ...
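The point that both the measure of deviation and the central point must be specified can be sketched as follows. The helper names and the data set are assumptions made for illustration; only the standard-library `statistics` module is used.

```python
from statistics import mean, median


def average_absolute_deviation(data, center):
    """Mean of the absolute deviations of `data` from a chosen central point."""
    return mean([abs(x - center) for x in data])


def median_absolute_deviation(data):
    """Median of the absolute deviations from the median."""
    m = median(data)
    return median([abs(x - m) for x in data])


# Illustrative data with one large value.
data = [2, 2, 3, 4, 14]

aad_about_mean = average_absolute_deviation(data, mean(data))      # center = 5
aad_about_median = average_absolute_deviation(data, median(data))  # center = 3
mad_median = median_absolute_deviation(data)
```

The three results differ for the same data, so a bare "absolute deviation" figure is ambiguous unless the averaging rule and the central point are both stated.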

German Tank Problem

German(s) may refer to: * Germany, the country of the Germans and German things **Germania (Roman era) * Germans, citizens of Germany, people of German ancestry, or native speakers of the German language ** For citizenship in Germany, see also German nationality law **Germanic peoples (Roman era) * German diaspora * German language * German cuisine, traditional foods of Germany People * German (given name) * German (surname) * Germán, a Spanish name Places * German (parish), Isle of Man * German, Albania, or Gërmej * German, Bulgaria * German, Iran * German, North Macedonia * German, New York, U.S. * Agios Germanos, Greece Other uses * German (mythology), a South Slavic mythological being * Germans (band), a Canadian rock band * "German" (song), a 2019 song by No Money Enterprise * The German, a 2008 short film * "The Germans", an episode of Fawlty Towers * The German, a nickname for Congolese rebel André Kisase Ngandu See also * Germanic (di ...

UMVU

In statistics, a minimum-variance unbiased estimator (MVUE) or uniformly minimum-variance unbiased estimator (UMVUE) is an unbiased estimator that has lower variance than any other unbiased estimator for all possible values of the parameter. For practical statistics problems, it is important to determine the MVUE if one exists, since less-than-optimal procedures would naturally be avoided, other things being equal. This has led to substantial development of statistical theory related to the problem of optimal estimation. While combining the constraint of unbiasedness with the desirability metric of least variance leads to good results in most practical settings (making MVUE a natural starting point for a broad range of analyses), a targeted specification may perform better for a given problem; thus, MVUE is not always the best stopping point. Definition Consider estimation of g(\theta) based on data X_1, X_2, \ldots, X_n i.i.d. from some member of a family of densities p_\theta, ...

Complete Statistic

In statistics, completeness is a property of a statistic computed on a sample dataset in relation to a parametric model of the dataset. It is opposed to the concept of an ancillary statistic. While an ancillary statistic contains no information about the model parameters, a complete statistic contains only information about the parameters, and no ancillary information. It is closely related to the concept of a sufficient statistic which contains all of the information that the dataset provides about the parameters. Definition Consider a random variable X whose probability distribution belongs to a parametric model P_\theta parametrized by \theta. Say T is a statistic; that is, the composition of a measurable function with a random sample X_1, \ldots, X_n. The statistic T is said to be complete for the distribution of X if, for every measurable function g: if \operatorname{E}_\theta(g(T)) = 0 for all \theta, then \mathbf{P}_\theta(g(T) = 0) = 1 for all \theta. The ...

Sufficient Statistic

In statistics, sufficiency is a property of a statistic computed on a sample dataset in relation to a parametric model of the dataset. A sufficient statistic contains all of the information that the dataset provides about the model parameters. It is closely related to the concepts of an ancillary statistic which contains no information about the model parameters, and of a complete statistic which only contains information about the parameters and no ancillary information. A related concept is that of linear sufficiency, which is weaker than sufficiency but can be applied in some cases where there is no sufficient statistic, although it is restricted to linear estimators. The Kolmogorov structure function deals with individual finite data; the related notion there is the algorithmic sufficient statistic. The concept is due to Sir Ronald Fisher in 1920. Stephen Stigler noted in 1973 that the concept of sufficiency had fallen out of favor in descriptive statistics because of ...

Uniform Distribution (discrete)

In probability theory and statistics, the discrete uniform distribution is a symmetric probability distribution wherein each of some finite whole number n of outcome values is equally likely to be observed. Thus every one of the n outcome values has equal probability 1/n. Intuitively, a discrete uniform distribution is "a known, finite number of outcomes all equally likely to happen." A simple example of the discrete uniform distribution comes from throwing a fair six-sided die. The possible values are 1, 2, 3, 4, 5, 6, and each time the die is thrown the probability of each given value is 1/6. If two dice were thrown and their values added, the possible sums would not have equal probability and so the distribution of sums of two dice rolls is not uniform. Although it is common to consider discrete uniform distributions over a contiguous range of integers, such as in this six-sided die example, one can define discrete uniform distributions over any finite set. ...
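The die example can be checked by enumeration. The sketch below (variable names are illustrative assumptions) builds the exact distribution of one fair die and of the sum of two fair dice using exact fractions:

```python
from collections import Counter
from fractions import Fraction
from itertools import product

faces = range(1, 7)

# One fair die: every face has probability 1/6.
single = {face: Fraction(1, 6) for face in faces}

# Two dice: count how many of the 36 equally likely ordered pairs give each sum.
sum_counts = Counter(a + b for a, b in product(faces, repeat=2))
sum_probs = {total: Fraction(count, 36) for total, count in sum_counts.items()}

single_is_uniform = len(set(single.values())) == 1
sum_is_uniform = len(set(sum_probs.values())) == 1
```

The single-die probabilities are all equal, whereas the sums range from probability 1/36 (for 2 and 12) up to 1/6 (for 7), so the distribution of the sum is not uniform.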

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}\,. The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma^2 is the variance. The standard deviation of the distribution is \sigma (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution c ...
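A minimal sketch of evaluating the normal density with mean mu and standard deviation sigma, using only the standard library; the function name `normal_pdf` and its defaults are assumptions made for illustration:

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean `mu` and standard deviation `sigma`."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma                      # standardized distance from the mean
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


peak_standard = normal_pdf(0.0)               # height of the standard normal at its mean
left = normal_pdf(-1.5, mu=2.0, sigma=3.0)
right = normal_pdf(5.5, mu=2.0, sigma=3.0)    # mirror image of `left` about mu = 2
```

The density is symmetric about mu, so `left` and `right` agree, and the peak height scales as 1/sigma.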

Efficiency (statistics)

In statistics, efficiency is a measure of quality of an estimator, of an experimental design, or of a hypothesis testing procedure. Essentially, a more efficient estimator needs fewer input data or observations than a less efficient one to achieve the Cramér–Rao bound. An efficient estimator is characterized by having the smallest possible variance, indicating that there is a small deviance between the estimated value and the "true" value in the L2 norm sense. The relative efficiency of two procedures is the ratio of their efficiencies, although often this concept is used where the comparison is made between a given procedure and a notional "best possible" procedure. The efficiencies and the relative efficiency of two procedures theoretically depend on the sample size available for the given procedure, but it is often possible to use the asymptotic relative efficiency (defined as the limit of the relative efficiencies as the sample size grows) as the principal comparison ...

Platykurtic

In probability theory and statistics, kurtosis (from Greek κυρτός, kyrtos or kurtos, meaning "curved, arching") refers to the degree of "tailedness" in the probability distribution of a real-valued random variable. Similar to skewness, kurtosis provides insight into specific characteristics of a distribution. Various methods exist for quantifying kurtosis in theoretical distributions, and corresponding techniques allow estimation based on sample data from a population. It's important to note that different measures of kurtosis can yield varying interpretations. The standard measure of a distribution's kurtosis, originating with Karl Pearson, is a scaled version of the fourth moment of the distribution. This number is related to the tails of the distribution, not its peak; hence, the sometimes-seen characterization of kurtosis as "peakedness" is incorrect. For this measure, higher kurtosis corresponds to greater extremity of deviations (or outliers), and not the configuratio ...

Deciles

In descriptive statistics, a decile is any of the nine values that divide the sorted data into ten equal parts, so that each part represents 1/10 of the sample or population. A decile is one possible form of a quantile; others include the quartile and percentile. A decile rank arranges the data in order from lowest to highest and is done on a scale of one to ten where each successive number corresponds to an increase of 10 percentage points. Special usage: The decile mean A moderately robust measure of central tendency, known as the decile mean, can be computed by making use of a sample's deciles D_1 to D_9 (D_1 = 10th percentile, D_2 = 20th percentile and so on). It is calculated as follows: DM = \frac{\sum_{i=1}^{9} D_i}{9} Apart from serving as an alternative for the mean and the truncated mean, it also forms the basis for robust measures of skewness and kurtosis, and even a normality test. See also * Summary statistics * Socio-economic decile In the New Zealand edu ...
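As a hedged sketch, the nine deciles and their average (the decile mean) can be computed with the standard library. Several conventions exist for interpolating quantiles; this example assumes the default "exclusive" method of `statistics.quantiles`, and the function name `decile_mean` is an illustrative choice.

```python
from statistics import mean, quantiles


def decile_mean(data):
    """Average of the nine deciles D_1..D_9 of `data`.

    Assumption: deciles are computed with the default ("exclusive")
    interpolation rule of statistics.quantiles; other conventions can
    give slightly different values on small samples.
    """
    deciles = quantiles(data, n=10)   # nine cut points splitting the data into ten parts
    return mean(deciles)


# Illustrative data: the integers 1..99.
values = list(range(1, 100))
deciles = quantiles(values, n=10)
dm = decile_mean(values)

# One extreme value moves the ordinary mean much more than the decile mean.
contaminated = values + [10_000]
mean_shift = mean(contaminated) - mean(values)
dm_shift = decile_mean(contaminated) - dm
```

For this data set the deciles fall on 10, 20, ..., 90, so the decile mean is 50, and the contamination check shows the smaller shift that motivates calling the measure moderately robust.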

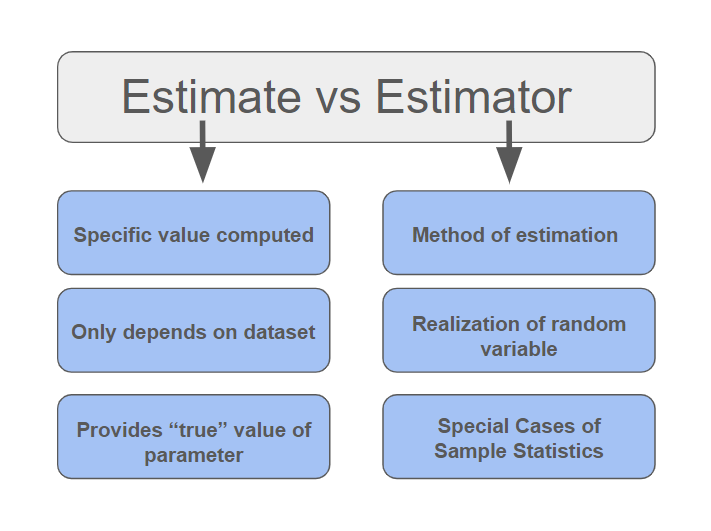

Estimators

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. The point estimators yield single-valued results. This is in contrast to an interval estimator, where the result would be a range of plausible values. "Single value" does not necessarily mean "single number", but includes vector valued or function valued estimators. Estimation theory is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. However, in robust statistics, statisti ...