
Runge's Phenomenon

In the mathematical field of numerical analysis, Runge's phenomenon is a problem of oscillation at the edges of an interval that occurs when using polynomial interpolation with polynomials of high degree over a set of equispaced interpolation points. It was discovered by Carl David Tolmé Runge (1901) when exploring the behavior of errors when using polynomial interpolation to approximate certain functions. The discovery shows that going to higher degrees does not always improve accuracy. The phenomenon is similar to the Gibbs phenomenon in Fourier series approximations. The Weierstrass approximation theorem states that for every continuous function f(x) defined on an interval [a, b], there exists a set of polynomial functions P_n(x) for n=0, 1, 2, \ldots, each of degree at most n, that approximates f(x) with uniform convergence over [a, b] as n tends to infinity. This can be expressed as: \lim_{n \to \infty} \left( \sup_{a \le x \le b} \left| f(x) - P_n(x) \right| \right) = 0. Consider the case w ...
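The growth of the error with the degree can be checked numerically. The sketch below is an illustration under stated assumptions, not part of the entry itself: it uses the test function 1/(1 + 25x^2) on [-1, 1] (the example commonly associated with Runge, assumed here because the excerpt is cut off before naming one), and the helper names `runge`, `lagrange_eval`, and `max_error` are hypothetical.

```python
def runge(x):
    # Assumed test function; the excerpt above does not name one.
    return 1.0 / (1.0 + 25.0 * x * x)


def lagrange_eval(xs, ys, x):
    """Evaluate the interpolating polynomial through (xs, ys) at x (Lagrange form)."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def max_error(n, samples=1001):
    """Largest sampled |f - P_n| on [-1, 1] for n + 1 equispaced nodes."""
    xs = [-1.0 + 2.0 * i / n for i in range(n + 1)]
    ys = [runge(x) for x in xs]
    grid = [-1.0 + 2.0 * k / (samples - 1) for k in range(samples)]
    return max(abs(runge(x) - lagrange_eval(xs, ys, x)) for x in grid)


errors = {n: max_error(n) for n in (5, 10, 20)}
for n, err in errors.items():
    print(f"degree {n:2d}: max sampled error = {err:.3f}")
```

Under these assumptions the sampled maximum error increases as the degree goes from 5 to 10 to 20, even though the Weierstrass theorem guarantees that some sequence of polynomials converges uniformly to the same function.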

Fake Nodes

Fake or fakes may refer to: Arts and entertainment Film and television * ''The Fake'' (1927 film), a silent British drama film * ''The Fake'' (1953 film), a British film * ''Fake'' (2003 film), a Thai movie * ''Fake'', a 2010 film featuring Fisher Stevens * ''The Fake'' (2013 film), a South Korean animated film * ''Fake'' (TV series), a 2024 Australian drama thriller television series * ''Fakes'' (TV series), a 2022 American-Canadian comedy television series Music Groups * Fake (Swedish band), a Swedish synthpop band active in the 1980s * Fake, an American electro band remixed by Imperative Reaction * Fake?, a Japanese rock musical project Recordings * ''Fake'' (album), by Adorable * "Fake" (Ai song) (2010) * "Fake" (Alexander O'Neal song) (1987) * "Fake" (Simply Red song) (2003) * "Fake", a song by Brand New Heavies from ''Brother Sister'' * "Fake", a song by Brockhampton from ''Saturation'' * "Fake", a 1994 song by Korn from ''Korn'' * "Fake", a song by Mötley Cr ...

Wilkinson's Polynomial

In numerical analysis, Wilkinson's polynomial is a specific polynomial which was used by James H. Wilkinson in 1963 to illustrate a difficulty when finding the roots of a polynomial: the location of the roots can be very sensitive to perturbations in the coefficients of the polynomial. The polynomial is w(x) = \prod_{i=1}^{20} (x - i) = (x-1) (x-2) \cdots (x-20). Sometimes, the term ''Wilkinson's polynomial'' is also used to refer to some other polynomials appearing in Wilkinson's discussion. Wilkinson's polynomial arose in the study of algorithms for finding the roots of a polynomial p(x) = \sum_{i=0}^n c_i x^i. It is a natural question in numerical analysis to ask whether the problem of finding the roots of p from the coefficients c_i is well-conditioned. That is, we hope that a small change in the coefficients will lead to a small change in the roots. Unfortunately, this is not the case here. The problem is ill-conditioned when the polynomial has a multiple root. For instance ...
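The sensitivity can be made concrete with a first-order estimate. If the coefficient of x^19 in w is perturbed by a small amount t, differentiating w(r(t)) + t r(t)^19 = 0 at t = 0 gives dr/dt = -r^19 / w'(r) for a simple root r, where w'(r) is the product of (r - j) over the other roots j. The sketch below evaluates the magnitude of that factor for each root; the choice of the x^19 coefficient and the name `root_sensitivity` are assumptions made for illustration, not taken from the excerpt.

```python
from math import prod


def root_sensitivity(r, degree=20):
    """|dr/dt| at t = 0 for the root r of w(x) + t * x**(degree - 1),
    where w(x) = (x - 1)(x - 2)...(x - degree)."""
    # w'(r) equals the product of (r - j) over all other roots j.
    w_prime = prod(r - j for j in range(1, degree + 1) if j != r)
    return r ** (degree - 1) / abs(w_prime)


sensitivities = {r: root_sensitivity(r) for r in range(1, 21)}
for r in (1, 10, 20):
    print(f"root {r:2d}: |dr/dt| is about {sensitivities[r]:.3e}")
```

In this estimate the factor for the root at 20 is larger than the factor for the root at 1 by more than twenty orders of magnitude, which is one way to see why a tiny coefficient change can move the large roots substantially.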

Taylor Series

In mathematics, the Taylor series or Taylor expansion of a function is an infinite sum of terms that are expressed in terms of the function's derivatives at a single point. For most common functions, the function and the sum of its Taylor series are equal near this point. Taylor series are named after Brook Taylor, who introduced them in 1715. A Taylor series is also called a Maclaurin series when 0 is the point where the derivatives are considered, after Colin Maclaurin, who made extensive use of this special case of Taylor series in the 18th century. The partial sum formed by the first n + 1 terms of a Taylor series is a polynomial of degree n that is called the nth Taylor polynomial of the function. Taylor polynomials are approximations of a function, which become generally more accurate as n increases. Taylor's theorem gives quantitative estimates on the error introduced by the use of such approximations. If the Taylor series of a function is convergent, its sum is the limit ...
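As a small illustration of Taylor polynomials becoming more accurate as n increases, the sketch below sums the Maclaurin series of the exponential function, whose kth term is x^k / k!. The function name `taylor_exp` and the choice of the exponential as the example are assumptions made here, not part of the entry.

```python
import math


def taylor_exp(x, n):
    """nth Taylor polynomial of exp about 0, evaluated at x."""
    term = 1.0   # x**0 / 0!
    total = 1.0
    for k in range(1, n + 1):
        term *= x / k   # turns x**(k-1)/(k-1)! into x**k/k!
        total += term
    return total


approx_errors = [abs(taylor_exp(1.0, n) - math.e) for n in (2, 5, 10)]
print(approx_errors)
```

For x = 1 the error shrinks at each of the three degrees shown, consistent with the remainder estimates given by Taylor's theorem.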

Stone–Weierstrass Theorem

In mathematical analysis, the Weierstrass approximation theorem states that every continuous function defined on a closed interval [a, b] can be uniformly approximated as closely as desired by a polynomial function. Because polynomials are among the simplest functions, and because computers can directly evaluate polynomials, this theorem has both practical and theoretical relevance, especially in polynomial interpolation. The original version of this result was established by Karl Weierstrass in 1885 using the Weierstrass transform. Marshall H. Stone considerably generalized the theorem and simplified the proof. His result is known as the Stone–Weierstrass theorem. The Stone–Weierstrass theorem generalizes the Weierstrass approximation theorem in two directions: instead of the real interval [a, b], an arbitrary compact Hausdorff space is considered, and instead of the algebra of polynomial ...

Schwarz Lantern

In mathematics, the Schwarz lantern is a polyhedral approximation to a cylinder, used as a pathological example of the difficulty of defining the area of a smooth (curved) surface as the limit of the areas of polyhedra. It is formed by stacked rings of isosceles triangles, arranged within each ring in the same pattern as an antiprism. The resulting shape can be folded from paper, and is named after mathematician Hermann Schwarz and for its resemblance to a cylindrical paper lantern. It is also known as Schwarz's boot, Schwarz's polyhedron, or the Chinese lantern. As Schwarz showed, for the surface area of a polyhedron to converge to the surface area of a curved surface, it is not sufficient to simply increase the number of rings and the number of isosceles triangles per ring. Depending on the relation of the number of rings to the number of triangles per ring, the area of the lantern can converge to the area of the cylinder, to a limit arbitrarily larger than the area of the ...

Overfitting

In mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitted model is a mathematical model that contains more parameters than can be justified by the data. In the special case where the model consists of a polynomial function, these parameters represent the degree of a polynomial. The essence of overfitting is to have unknowingly extracted some of the residual variation (i.e., the noise) as if that variation represented underlying model structure. Underfitting occurs when a mathematical model cannot adequately capture the underlying structure of the data. An under-fitted model is a model where some parameters or terms that would appear in a correctly specified model are missing. Underfitting would occur, for example, when fitting a linear model to nonlinear data. Such a model ...

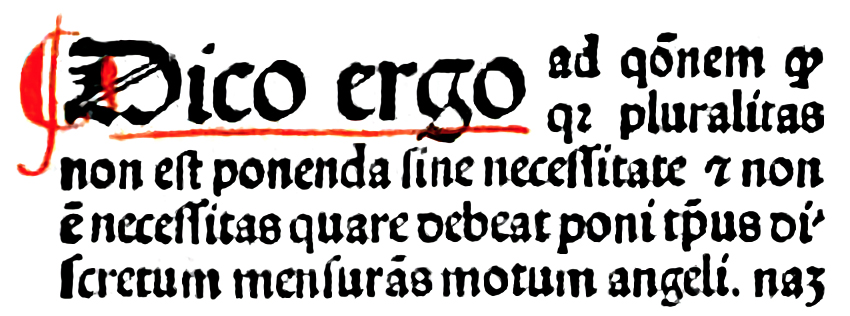

Occam's Razor

In philosophy, Occam's razor (also spelled Ockham's razor or Ocham's razor) is the problem-solving principle that recommends searching for explanations constructed with the smallest possible set of elements. It is also known as the principle of parsimony or the law of parsimony. Attributed to William of Ockham, a 14th-century English philosopher and theologian, it is frequently cited as ''Entia non sunt multiplicanda praeter necessitatem'', which translates as "Entities must not be multiplied beyond necessity", although Occam never used these exact words. Popularly, the principle is sometimes paraphrased as "of two competing theories, the simpler explanation of an entity is to be preferred." This philosophical razor advocates that when presented with competing hypotheses about the same prediction and both hypotheses have equal explanatory power, one should prefer the hypothesis that requires the fewest assumptions, and that this is not meant to be a way of choosing between hypotheses that make different predictions. Similarl ...

Approximation Theory

In mathematics, approximation theory is concerned with how functions can best be approximated with simpler functions, and with quantitatively characterizing the errors introduced thereby. What is meant by ''best'' and ''simpler'' will depend on the application. A closely related topic is the approximation of functions by generalized Fourier series, that is, approximations based upon summation of a series of terms based upon orthogonal polynomials. One problem of particular interest is that of approximating a function in a computer mathematical library, using operations that can be performed on the computer or calculator (e.g. addition and multiplication), such that the result is as close to the actual function as possible. This is typically done with polynomial or rational (ratio of polynomials) approximations. The objective is to make the approxi ...

Least Squares

The method of least squares is a mathematical optimization technique that aims to determine the best fit function by minimizing the sum of the squares of the differences between the observed values and the predicted values of the model. The method is widely used in areas such as regression analysis, curve fitting and data modeling. The least squares method can be categorized into linear and nonlinear forms, depending on the relationship between the model parameters and the observed data. The method was first proposed by Adrien-Marie Legendre in 1805 and further developed by Carl Friedrich Gauss. The method of least squares grew out of the fields of astronomy and geodesy, as scientists and mathematicians sought to provide solutions to the challenges of navigating the Earth's oceans during the Age of Discovery. The accurate description of the behavior of celestial bodies was the key to enabling ships to sail in open seas, where sailors could no longer rely on la ...
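For the simplest linear case, a straight line y = slope * x + intercept, minimizing the sum of squared differences has a closed-form solution. The sketch below is a minimal illustration of that case only; the function names `fit_line` and `sum_squared_residuals` and the sample data are hypothetical.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) minimizing sum((y - (slope * x + intercept))**2)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def sum_squared_residuals(xs, ys, slope, intercept):
    """The quantity that least squares minimizes."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


# Hypothetical observations scattered around y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept, sum_squared_residuals(xs, ys, slope, intercept))
```

Any other line through the same data gives a sum of squared residuals at least as large as the fitted one, which is the defining property of the least squares solution.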

Maxima And Minima

In mathematical analysis, the maximum and minimum of a function are, respectively, the greatest and least value taken by the function. Known generically as extrema, they may be defined either within a given range (the ''local'' or ''relative'' extrema) or on the entire domain (the ''global'' or ''absolute'' extrema) of a function. Pierre de Fermat was one of the first mathematicians to propose a general technique, adequality, for finding the maxima and minima of functions. As defined in set theory, the maximum and minimum of a set are the greatest and least elements in the set, respectively. Unbounded infinite sets, such as the set of real numbers, have no minimum or maximum. In statistics, the corresponding concept is the sample maximum and minimum. A real-valued function ''f'' defined on a domain ''X'' has a global (or absolute) maximum point at ''x''∗, if ''f''(''x''∗) ≥ ''f''(''x'') for all ''x'' in ''X''. Similarly, the function has a global (or absolute) minimum point at ''x'' ...

Lagrange Multiplier

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equation constraints (i.e., subject to the condition that one or more equations have to be satisfied exactly by the chosen values of the variables). It is named after the mathematician Joseph-Louis Lagrange. The basic idea is to convert a constrained problem into a form such that the derivative test of an unconstrained problem can still be applied. The relationship between the gradient of the function and gradients of the constraints rather naturally leads to a reformulation of the original problem, known as the Lagrangian function or Lagrangian. In the general case, the Lagrangian is defined as \mathcal{L}(x, \lambda) \equiv f(x) + \langle \lambda, g(x)\rangle for functions f, g; the notation \langle \cdot, \cdot \rangle denotes an inner prod ...
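A small worked case may help. Take f(x, y) = x + y with the single constraint g(x, y) = x^2 + y^2 - 1 = 0, so that the Lagrangian in the convention above is L = f + lambda * g. Setting its gradient to zero gives 1 + 2 lambda x = 0, 1 + 2 lambda y = 0, and g = 0, hence x = y = 1/sqrt(2) and lambda = -1/sqrt(2) at the maximum. The sketch below checks this candidate numerically; the example problem and all names in it are assumptions chosen for illustration.

```python
import math


def f(x, y):
    return x + y


def g(x, y):
    # Constraint g(x, y) = 0 describes the unit circle.
    return x * x + y * y - 1.0


def lagrangian_gradient(x, y, lam):
    """Partial derivatives of L = f + lam * g with respect to x, y, and lam."""
    return (1.0 + 2.0 * lam * x, 1.0 + 2.0 * lam * y, g(x, y))


# Candidate from solving the stationarity conditions by hand.
x_star = y_star = 1.0 / math.sqrt(2.0)
lam_star = -1.0 / (2.0 * x_star)
grad = lagrangian_gradient(x_star, y_star, lam_star)

# Brute-force comparison: scan f over points on the constraint set.
angles = (2.0 * math.pi * k / 3600 for k in range(3600))
best_on_circle = max(f(math.cos(t), math.sin(t)) for t in angles)
print(grad, f(x_star, y_star), best_on_circle)
```

The gradient of the Lagrangian vanishes (up to rounding) at the candidate point, and the value of f there agrees with the largest value found by scanning the circle.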