
Probabilistic Design

Probabilistic design is a discipline within engineering design. It deals primarily with the effects of random variability on the performance of an engineering system during the design phase. Typically, these effects are related to quality and reliability, so probabilistic design is mostly used in areas concerned with them: for example, product design, quality control, systems engineering, machine design, civil engineering (where it is particularly useful in limit state design) and manufacturing. It differs from the classical approach to design by assuming a small probability of failure instead of using a safety factor.

Designer's perspective

When using a probabilistic approach to design, the designer no longer thinks of each variable as a single value or number. Instead, each variable is viewed as a probability distribution. From this perspective, probabilistic design predicts the flow of variability (or distribu ...

Engineering Design

The engineering design process is a common series of steps that engineers use in creating functional products and processes. The process is highly iterative: parts of it often need to be repeated many times before another can be entered, though the parts that get iterated and the number of such cycles in any given project may vary. It is a decision-making process (often iterative) in which the basic sciences, mathematics, and engineering sciences are applied to convert resources optimally to meet a stated objective. Among the fundamental elements of the design process are the establishment of objectives and criteria, synthesis, analysis, construction, testing and evaluation.

Common stages of the engineering design process

It is important to understand that there are various framings and articulations of the engineering design process. The terminology employed may overlap to varying degrees, which affects which steps are stated explicitly or deemed "high lev ...

Monte Carlo Method

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes: optimization, numerical integration, and generating draws from a probability distribution. In physics-related problems, Monte Carlo methods are useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model, interacting particle systems, McKean–Vlasov processes, kinetic models of gases). Other examples include modeling phenomena with significant uncertainty in inputs such as the calculation of ris ...
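As an illustration of the numerical-integration use mentioned above, here is a minimal sketch that estimates a one-dimensional integral by averaging the integrand at uniformly drawn points. The function name `mc_integrate`, the integrand, the sample size and the seed are all assumptions chosen for the example, not part of the source.

```python
import random


def mc_integrate(f, a, b, n, seed=0):
    """Estimate the integral of f over [a, b] from n uniform random draws."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    total = sum(f(rng.uniform(a, b)) for _ in range(n))
    # The mean of f times the interval length approximates the integral.
    return (b - a) * total / n


# Hypothetical example: the integral of x^2 over [0, 1], whose exact value is 1/3.
estimate = mc_integrate(lambda x: x * x, 0.0, 1.0, 100_000)
print(estimate)
```

The error of such an estimate shrinks roughly with the square root of the number of draws, which is why the method is mostly reserved for problems where other approaches are impractical.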

Engineering Statistics

Engineering statistics combines engineering and statistics using scientific methods for analyzing data. Engineering statistics involves data concerning manufacturing processes such as: component dimensions, tolerances, type of material, and fabrication process control. There are many methods used in engineering analysis, and the results are often displayed as histograms to give a visual of the data as opposed to being just numerical. Examples of methods are:

1. Design of Experiments (DOE) is a methodology for formulating scientific and engineering problems using statistical models. The protocol specifies a randomization procedure for the experiment and specifies the primary data-analysis, particularly in hypothesis testing. In a secondary analysis, the statistical analyst further examines the data to suggest other questions and to help plan future experiments. In engineering applications, the goal is often to optimize a process or product, rather than to subject a scientific hypothesis to te ...

Interval Finite Element

In numerical analysis, the interval finite element method (interval FEM) is a finite element method that uses interval parameters. Interval FEM can be applied in situations where it is not possible to get reliable probabilistic characteristics of the structure. This is important in concrete structures, wood structures, geomechanics, composite structures, biomechanics and in many other areas. The goal of interval FEM is to find upper and lower bounds of different characteristics of the model (e.g. stress, displacements, yield surface) and to use these results in the design process. This is so-called worst-case design, which is closely related to limit state design. Worst-case design requires less information than probabilistic design; however, the results are more conservative (Köylüoglu and Elishakoff 1998).

Applications of the interval parameters to the modeling of uncertaint ...
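A full interval FEM solver is well beyond a short example, but the idea of bounding a response with interval parameters can be sketched on a single axially loaded bar. The closed-form displacement u = F L / (E A), the function name `displacement_bounds` and all numerical values below are assumptions made for illustration. Because u is monotonic in each positive parameter, checking every combination of interval endpoints gives exact bounds here; that shortcut does not hold for general models.

```python
from itertools import product


def displacement_bounds(force, length, modulus, area):
    """Bounds on u = F*L/(E*A); each argument is a (lower, upper) interval.

    Assumes all quantities are positive, so the extremes of u occur at
    endpoint combinations of the parameter intervals.
    """
    values = [
        f * l / (e * a)
        for f, l, e, a in product(force, length, modulus, area)
    ]
    return min(values), max(values)


# Hypothetical bar: uncertain load and stiffness, exact geometry.
u_low, u_high = displacement_bounds(
    force=(9.0e3, 11.0e3),      # N
    length=(1.0, 1.0),          # m
    modulus=(190.0e9, 210.0e9), # Pa
    area=(1.0e-4, 1.0e-4),      # m^2
)
print(u_low, u_high)
```

A worst-case design would then check the upper bound `u_high` against the allowable displacement.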

Failure Mode And Effects Analysis

Failure mode and effects analysis (FMEA; often written with "failure modes" in plural) is the process of reviewing as many components, assemblies, and subsystems as possible to identify potential failure modes in a system and their causes and effects. For each component, the failure modes and their resulting effects on the rest of the system are recorded in a specific FMEA worksheet. There are numerous variations of such worksheets. An FMEA can be a qualitative analysis, but may be put on a quantitative basis when mathematical failure rate models are combined with a statistical failure mode ratio database. It was one of the first highly structured, systematic techniques for failure analysis. It was developed by reliability engineers in the late 1950s to study problems that might arise from malfunctions of military systems. An FMEA is often the first step of a system reliability study. A few different types of FMEA analyses exist, such as:

* Functional
* Design
* Process

Sometimes ...

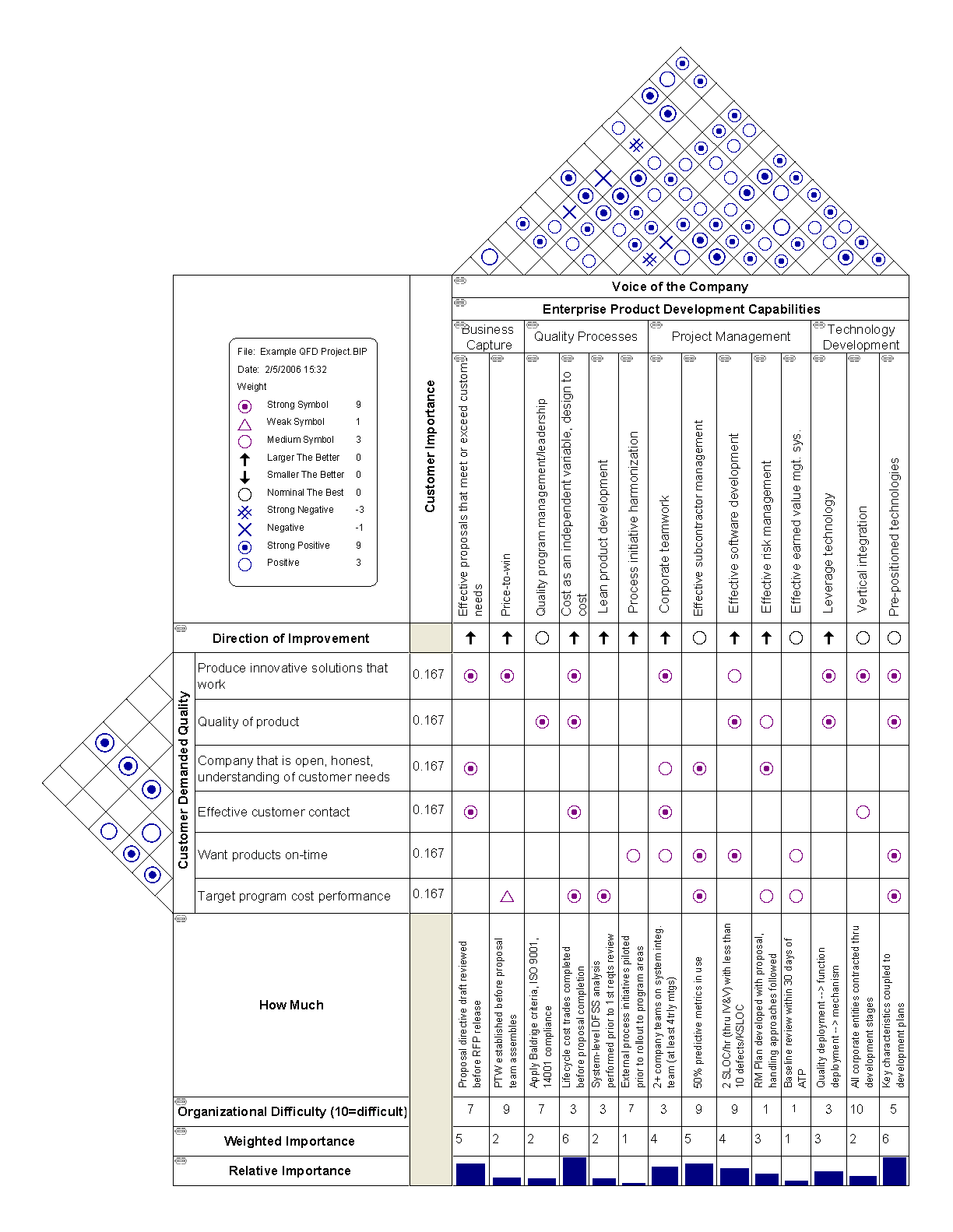

Quality Function Deployment

Quality function deployment (QFD) is a method developed in Japan beginning in 1966 to help transform the voice of the customer into engineering characteristics for a product (Larson et al. 2009, p. 117). Yoji Akao, the original developer, described QFD as a "method to transform qualitative user demands into quantitative parameters, to deploy the functions forming quality, and to deploy methods for achieving the design quality into subsystems and component parts, and ultimately to specific elements of the manufacturing process." Akao combined his work in quality assurance and quality control points with function deployment used in value engineering.

House of quality

The house of quality, a part of QFD, is the basic design tool of quality function deployment. It identifies and classifies customer desires (What's), identifies the importance of those desires, identifies engineering characteristics which may be relevant to those desires (How's), correlates the two, allows for ve ...

Statistical Interference

When two probability distributions overlap, statistical interference exists. Knowledge of the distributions can be used to determine the likelihood that one parameter exceeds another, and by how much. This technique can be used for dimensioning of mechanical parts, determining when an applied load exceeds the strength of a structure, and in many other situations. This type of analysis can also be used to estimate the probability of failure or the frequency of failure.

Dimensional interference

Mechanical parts are usually designed to fit precisely together. For example, if a shaft is designed to have a "sliding fit" in a hole, the shaft must be a little smaller than the hole. (Traditional tolerances may suggest that all dimensions fall within those intended tolerances. A process capability study of actual production, however, may reveal normal distributions with long tails.) Both the shaft and hole sizes will usually form normal distributions ...
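For the load-versus-strength case mentioned above, a minimal sketch of the calculation follows. It assumes that load and strength are independent and normally distributed, so that their difference is also normal. The function name `interference_probability` and the numerical values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def interference_probability(mu_strength, sd_strength, mu_load, sd_load):
    """P(load > strength) for independent, normally distributed load and strength."""
    # The safety margin (strength - load) is normal with the difference of
    # the means and the sum of the variances.
    margin = NormalDist(
        mu_strength - mu_load,
        sqrt(sd_strength ** 2 + sd_load ** 2),
    )
    # Failure occurs when the margin is negative.
    return margin.cdf(0.0)


# Hypothetical values in MPa: strength 500 +/- 30, load 400 +/- 40.
p_fail = interference_probability(500.0, 30.0, 400.0, 40.0)
print(p_fail)
```

With these numbers the margin has mean 100 and standard deviation 50, so failure corresponds to a margin two standard deviations below its mean.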

Method Of Moments (statistics)

In statistics, the method of moments is a method of estimation of population parameters. The same principle is used to derive higher moments like skewness and kurtosis. It starts by expressing the population moments (i.e., the expected values of powers of the random variable under consideration) as functions of the parameters of interest. Those expressions are then set equal to the sample moments. The number of such equations is the same as the number of parameters to be estimated. Those equations are then solved for the parameters of interest. The solutions are estimates of those parameters. The method of moments was introduced by Pafnuty Chebyshev in 1887 in the proof of the central limit theorem. The idea of matching empirical moments of a distribution to the population moments dates back at least to Pearson.

Method

Suppose that the problem is to estimate $k$ unknown parameters $\theta_1, \theta_2, \dots, \theta_k$ characterizing the distribution $f_W(w; \theta)$ of the rando ...
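The procedure described above can be sketched for a two-parameter case. The gamma distribution is an assumption chosen for the example: its mean is shape × scale and its variance is shape × scale², so equating these to the sample mean and variance and solving gives both estimates in closed form. The function name and the simulated data are hypothetical.

```python
import random
from statistics import fmean, pvariance


def gamma_method_of_moments(sample):
    """Estimate gamma (shape, scale) by matching the first two moments."""
    mean = fmean(sample)
    var = pvariance(sample, mu=mean)
    # Solve mean = shape * scale and var = shape * scale**2.
    shape = mean * mean / var
    scale = var / mean
    return shape, scale


# Simulated data with known parameters (shape 2, scale 3) to check the estimator.
rng = random.Random(42)
data = [rng.gammavariate(2.0, 3.0) for _ in range(50_000)]
shape_hat, scale_hat = gamma_method_of_moments(data)
print(shape_hat, scale_hat)
```

Two parameters require two moment equations, matching the statement that the number of equations equals the number of parameters to be estimated.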

Design Of Experiments

The design of experiments (DOE, DOX, or experimental design) is the design of any task that aims to describe and explain the variation of information under conditions that are hypothesized to reflect the variation. The term is generally associated with experiments in which the design introduces conditions that directly affect the variation, but may also refer to the design of quasi-experiments, in which natural conditions that influence the variation are selected for observation. In its simplest form, an experiment aims at predicting the outcome by introducing a change of the preconditions, which is represented by one or more independent variables, also referred to as "input variables" or "predictor variables." The change in one or more independent variables is generally hypothesized to result in a change in one or more dependent variables, also referred to as "output variables" or "response variables." The experimental design may also identify control variables that must b ...

Propagation Of Error

In statistics, propagation of uncertainty (or propagation of error) is the effect of variables' uncertainties (or errors, more specifically random errors) on the uncertainty of a function based on them. When the variables are the values of experimental measurements, they have uncertainties due to measurement limitations (e.g., instrument precision) which propagate due to the combination of variables in the function. The uncertainty $u$ can be expressed in a number of ways. It may be defined by the absolute error $\Delta x$. Uncertainties can also be defined by the relative error $\Delta x / x$, which is usually written as a percentage. Most commonly, the uncertainty on a quantity is quantified in terms of the standard deviation, $\sigma$, which is the positive square root of the variance. The value of a quantity and its error are then expressed as an interval $x \pm u$. If the statistical probability distribution of the variable is known or can be assumed, it is possible to derive confidence limits to describe t ...
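A common way to carry out this propagation, not spelled out in the excerpt above, is the first-order (linearised) formula for independent variables, in which the variance of the result is the sum of the squared partial derivatives times the input variances. The sketch below assumes that formula and approximates the partial derivatives by central differences. The function name `propagate` and the measurement values are hypothetical.

```python
from math import sqrt


def propagate(f, values, sigmas, rel_step=1e-6):
    """First-order standard deviation of f(*values) for independent inputs."""
    variance = 0.0
    for i, (value, sigma) in enumerate(zip(values, sigmas)):
        step = rel_step * (abs(value) or 1.0)
        upper = list(values)
        lower = list(values)
        upper[i] = value + step
        lower[i] = value - step
        # Central-difference estimate of the partial derivative df/dx_i.
        derivative = (f(*upper) - f(*lower)) / (2.0 * step)
        variance += (derivative * sigma) ** 2
    return sqrt(variance)


# Hypothetical measurement: R = V / I with V = 10.0 +/- 0.1 and I = 2.0 +/- 0.05.
sigma_r = propagate(lambda v, i: v / i, [10.0, 2.0], [0.1, 0.05])
print(sigma_r)
```

The linearisation is only reliable when the uncertainties are small relative to the scale over which the function curves; correlated inputs would need covariance terms that this sketch omits.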

Latin Hypercube Sampling

Latin hypercube sampling (LHS) is a statistical method for generating a near-random sample of parameter values from a multidimensional distribution. The sampling method is often used to construct computer experiments or for Monte Carlo integration. LHS was described by Michael McKay of Los Alamos National Laboratory in 1979. An equivalent technique was proposed independently by Vilnis Eglājs in 1977. It was further elaborated by Ronald L. Iman and coauthors in 1981. Detailed computer codes and manuals were later published. In the context of statistical sampling, a square grid containing sample positions is a Latin square if (and only if) there is only one sample in each row and each column. A Latin hypercube is the generalisation of this concept to an arbitrary number of dimensions, whereby each sample is the only one in each axis-aligned hyperplane containing it. When sampling a function of N variables, the range of each variable is divided into M equally probable interv ...
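The one-sample-per-row-and-column property can be sketched on the unit hypercube. This example assumes uniform variables on [0, 1), so that equal-width strata are also equally probable, and shuffles the strata independently in each dimension. The function name `latin_hypercube` and its parameters are hypothetical.

```python
import random


def latin_hypercube(m, n_dims, seed=0):
    """Return m points in [0, 1)^n_dims with one point per stratum per axis."""
    rng = random.Random(seed)
    columns = []
    for _ in range(n_dims):
        # One random point inside each of the m equal-width strata.
        points = [(k + rng.random()) / m for k in range(m)]
        # Shuffle so strata are paired at random across dimensions.
        rng.shuffle(points)
        columns.append(points)
    return [tuple(column[i] for column in columns) for i in range(m)]


samples = latin_hypercube(5, 2)
for point in samples:
    print(point)
```

For non-uniform variables, each coordinate could be mapped through the inverse cumulative distribution function of the corresponding variable, which preserves the stratification.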

Design For Six Sigma

Design for Six Sigma (DFSS) is an engineering design process and business process management method related to traditional Six Sigma (Chowdhury, Subir (2002), Design for Six Sigma: The revolutionary process for achieving extraordinary profits, Prentice Hall). It is used in many industries, like finance, marketing, basic engineering, process industries, waste management, and electronics. It is based on the use of statistical tools like linear regression and enables empirical research similar to that performed in other fields, such as social science. While the tools and order used in Six Sigma require a process to be in place and functioning, DFSS has the objective of determining the needs of customers and the business, and driving those needs into the product solution so created. It is used for product or process design, in contrast with process improvement. Measurement is the most important part of most Six Sigma or DFSS tools, but whereas in Six Sigma measurements are made ...