
Passing–Bablok Regression

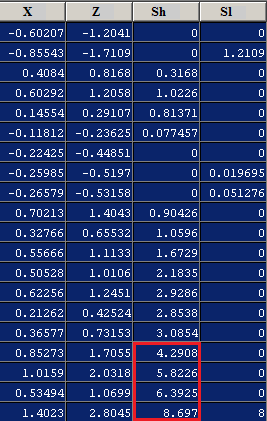

Passing–Bablok regression is a method from robust statistics for nonparametric regression analysis, suitable for method comparison studies, introduced by Wolfgang Bablok and Heinrich Passing in 1983. The procedure is adapted to fit linear errors-in-variables models. It is symmetrical and is robust in the presence of one or a few outliers. The Passing–Bablok procedure fits the parameters a and b of the linear equation y = a + b * x using nonparametric methods. The coefficient b is calculated by taking the shifted median of all slopes of the straight lines between any two points, disregarding lines for which the points are identical or the slope equals -1. The median is shifted by the number K of slopes less than -1 to create an approximately consistent estimator. The estimator is therefore close in spirit to the Theil–Sen estimator. The parameter a is calculated by ...
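The point estimates can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation.

- The text above is truncated before the intercept formula, so the sketch assumes the usual definition a = median(y_i - b * x_i).
- Treating a vertical pair (equal x, different y) as a slope of plus or minus infinity is also an assumed convention.
- Confidence intervals are not computed.
- The function name `passing_bablok` is hypothetical.

The shifted median takes sorted position (N + 1)/2 + K when the number N of retained slopes is odd. When N is even, it averages positions N/2 + K and N/2 + K + 1, where K is the number of slopes below -1.

```python
import math
from statistics import median


def passing_bablok(x, y):
    """Sketch of the Passing–Bablok point estimates (a, b) for y = a + b * x."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    slopes = []
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[j] - x[i], y[j] - y[i]
            if dx == 0 and dy == 0:
                continue  # identical points define no line: disregarded
            if dx == 0:
                # Vertical pair: treated as +/- infinity (assumed convention).
                slope = math.copysign(math.inf, dy)
            else:
                slope = dy / dx
            if slope == -1:
                continue  # slopes of exactly -1 are disregarded
            slopes.append(slope)

    slopes.sort()
    count = len(slopes)
    k = sum(1 for s in slopes if s < -1)  # the shift K
    # 0-based index of the (first) shifted middle element.
    mid = (count + 1) // 2 + k - 1 if count % 2 else count // 2 + k - 1
    last = mid if count % 2 else mid + 1
    if count == 0 or last >= count:
        # Defensive guard (assumption): the shift can run past the end of the
        # sorted slopes, e.g. when the two variables are negatively related.
        raise ValueError("shifted median is undefined for these data")
    b = slopes[mid] if count % 2 else 0.5 * (slopes[mid] + slopes[mid + 1])
    # Intercept: median of y_i - b * x_i (assumed standard definition).
    a = median([yi - b * xi for xi, yi in zip(x, y)])
    return a, b
```

On the made-up data x = 1..6, y = 2, 4, 6, 8, 10, 30, the last point is an outlier. The sketch still returns a = 0.0 and b = 2.0, because ten of the fifteen pairwise slopes are exactly 2.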

Robust Statistics

Robust statistics are statistics with good performance for data drawn from a wide range of probability distributions, especially for distributions that are not normal. Robust statistical methods have been developed for many common problems, such as estimating location, scale, and regression parameters. One motivation is to produce statistical methods that are not unduly affected by outliers. Another motivation is to provide methods with good performance when there are small departures from a parametric distribution. For example, robust methods work well for mixtures of two normal distributions with different standard deviations; under this model, non-robust methods like a t-test work poorly.

Robust statistics seek to provide methods that emulate popular statistical methods but are not unduly affected by outliers or other small departures from model assumptions. In statistics, classical estimation methods rely heavily on assumptions which are often not ...
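The following is a minimal illustration of the outlier motivation, using made-up numbers. A single gross outlier can move the sample mean arbitrarily far, while the sample median, a standard robust estimator of location, barely moves. The helper name `location_estimates` is hypothetical.

```python
from statistics import mean, median


def location_estimates(data):
    """Return (mean, median) so the two location estimates can be compared."""
    return mean(data), median(data)


clean = [1.0, 2.0, 3.0, 4.0, 5.0]
contaminated = clean + [1000.0]  # one gross outlier appended (hypothetical data)

print(location_estimates(clean))         # mean and median agree at 3.0
print(location_estimates(contaminated))  # mean jumps above 100; median is 3.5
```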

Nonparametric Regression

Nonparametric regression is a category of regression analysis in which the predictor does not take a predetermined form but is constructed from information derived from the data. That is, no parametric form is assumed for the relationship between the predictors and the dependent variable. Nonparametric regression requires larger sample sizes than regression based on parametric models because the data must supply the model structure as well as the model estimates.

In nonparametric regression, we have random variables X and Y and assume the following relationship: $\mathbb{E}[Y \mid X = x] = m(x)$, where m(x) is some deterministic function. Linear regression is a restricted case of nonparametric regression in which m(x) is assumed to be affine. Some authors use a slightly stronger assumption of additive noise: $Y = m(X) + U$, where the random variable U is the noise term, with mean 0. Without the assumption that m belongs to a specific parametric family of functions it is impos ...
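To make the idea of letting the data supply m(x) concrete, the sketch below uses a Nadaraya–Watson kernel smoother. This is one common nonparametric estimator of $m(x) = \mathbb{E}[Y \mid X = x]$, chosen here only for illustration; the text does not prescribe it. The Gaussian kernel, the `bandwidth` parameter and the function name are assumptions. The estimate at x0 is a weighted average of the observed y values, with weights that decay with distance from x0.

```python
import math


def nadaraya_watson(x_train, y_train, x0, bandwidth=1.0):
    """Kernel-weighted local average estimate of m(x0) = E[Y | X = x0]."""
    # Gaussian kernel weights: points near x0 count more than distant ones.
    weights = [math.exp(-0.5 * ((x0 - xi) / bandwidth) ** 2) for xi in x_train]
    total = sum(weights)
    if total == 0:
        raise ValueError("all kernel weights underflowed; increase bandwidth")
    return sum(w * yi for w, yi in zip(weights, y_train)) / total
```

The bandwidth controls the bias/variance trade-off. A small bandwidth follows the data closely, while a large one smooths towards the overall mean of y.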

Wolfgang Bablok

Wolfgang is a German male given name traditionally popular in Germany, Austria and Switzerland. The name is a combination of the Old High German words *wolf*, meaning "wolf", and *gang*, meaning "path", "journey" or "travel". Besides the regular "wolf", the first element also occurs in Old High German as the combining form "-olf". The earliest reference to the name dates from the 8th century. The name is also attested as "Vulfgang" in the Reichenauer Verbrüderungsbuch in the 9th century. The earliest recorded famous bearer of the name was the tenth-century Saint Wolfgang of Regensburg. Because the pagan reference in the name did not conflict with Catholicism, it is likely a much more ancient name whose meaning had already been lost by the tenth century. Grimm (*Teutonic Mythology*, p. 1093) interpreted the name as that of a hero in front of whom walks the "wolf of victory". A Latin gloss by Arnold of St Emmeram interprets the name as *Lupambulus*. E. För ...

Errors-in-variables Models

In statistics, errors-in-variables models or measurement error models are regression models that account for measurement errors in the independent variables. In contrast, standard regression models assume that those regressors have been measured exactly, or observed without error; as such, those models account only for errors in the dependent variables, or responses. When some regressors have been measured with errors, estimation based on the standard assumption leads to inconsistent estimates, meaning that the parameter estimates do not tend to the true values even in very large samples. For simple linear regression the effect is to bias the slope estimate toward zero, underestimating its magnitude; this is known as *attenuation bias*. In non-linear models the direction of the bias is likely to be more complicated.

As a motivating example, consider a simple linear regression model of the form $y_t = \alpha + \beta x_t^{*} + \varepsilon_t, \quad t = 1, \ldots, T,$ where $x_t^{*}$ denotes the *true* but un ...
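The attenuation bias is easy to reproduce by simulation. The sketch below makes assumptions that are not taken from the text:

- $x_t^{*}$ is standard normal.
- The measurement error added to x is normal with standard deviation `sigma_u` = 1.
- The regression error has standard deviation 0.5.

Under this classical measurement error setup, ordinary least squares on the observed regressor converges to $\beta \cdot \mathrm{Var}(x^{*}) / (\mathrm{Var}(x^{*}) + \sigma_u^2)$. With β = 2, the slope fitted on the noisy regressor should therefore come out near 1 rather than 2. The function names are illustrative.

```python
import random


def ols_slope(x, y):
    """Ordinary least squares slope of y on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def attenuation_demo(alpha=1.0, beta=2.0, sigma_eps=0.5, sigma_u=1.0,
                     n=100_000, seed=0):
    """Return OLS slopes fitted on the true and on the error-laden regressor."""
    rng = random.Random(seed)
    x_true = [rng.gauss(0.0, 1.0) for _ in range(n)]  # x_t^*, variance 1
    y = [alpha + beta * xt + rng.gauss(0.0, sigma_eps) for xt in x_true]
    x_obs = [xt + rng.gauss(0.0, sigma_u) for xt in x_true]  # measured with error
    return ols_slope(x_true, y), ols_slope(x_obs, y)


slope_true_x, slope_noisy_x = attenuation_demo()
# slope_true_x should be close to beta = 2;
# slope_noisy_x close to beta / (1 + sigma_u**2) = 1.
```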

Reduced Major Axis Regression

In applied statistics, total least squares is a type of errors-in-variables regression, a least squares data modeling technique in which observational errors on both dependent and independent variables are taken into account. It is a generalization of Deming regression and also of orthogonal regression, and can be applied to both linear and non-linear models. The total least squares approximation of the data is generically equivalent to the best low-rank approximation of the data matrix in the Frobenius norm.

In the least squares method of data modeling, the objective function $S = \mathbf{r}^\mathsf{T} W \mathbf{r}$ is minimized, where r is the vector of residuals and W is a weighting matrix. In linear least squares the model contains equations which are linear in the parameters appearing in the parameter vector β, so the residuals are given by $\mathbf{r} = \mathbf{y} - X\boldsymbol\beta$. There are m observations in y and n parameters in β, with m > n. X is an m ...
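Total least squares reduces to orthogonal regression when fitting a single straight line with equal error variances in x and y. Orthogonal regression has a closed-form slope. The sketch below implements only that special case, using centered sums of squares $S_{xx}$, $S_{yy}$ and the cross-product sum $S_{xy}$. It is not a general matrix TLS solver, which would typically use a singular value decomposition. The function name is hypothetical.

```python
import math


def orthogonal_regression(x, y):
    """Fit y = a + b * x by minimizing squared perpendicular distances."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    if sxy == 0:
        raise ValueError("slope undefined when x and y are uncorrelated")
    # Closed-form slope for equal error variances (Deming with ratio 1).
    b = (syy - sxx + math.sqrt((syy - sxx) ** 2 + 4 * sxy ** 2)) / (2 * sxy)
    a = my - b * mx
    return a, b
```

For example, `orthogonal_regression([0, 1, 2, 3], [1, 3, 5, 7])` returns `(1.0, 2.0)`. Swapping the arguments gives a slope of 0.5, reflecting the symmetric treatment of the two variables.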

Computational Complexity

In computer science, the computational complexity or simply complexity of an algorithm is the amount of resources required to run it. Particular focus is given to computation time (generally measured by the number of needed elementary operations) and memory storage requirements. The complexity of a problem is the complexity of the best algorithms that allow solving the problem. The study of the complexity of explicitly given algorithms is called analysis of algorithms, while the study of the complexity of problems is called computational complexity theory. Both areas are highly related, as the complexity of an algorithm is always an upper bound on the complexity of the problem solved by this algorithm. Moreover, for designing efficient algorithms, it is often fundamental to compare the complexity of a specific algorithm to the complexity of the problem to be solved. Also, in most cases, the only thing that is known about the complexity of a problem is that it is lower than the co ...
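Counting elementary operations can be illustrated with the naive way of computing the Passing–Bablok slope described at the top of this page, in which every pair of points contributes one slope. The hypothetical helper below counts those slope evaluations. They grow as n(n - 1)/2, that is, quadratically in the number of points, and this is before the additional cost of sorting the slopes to find their median.

```python
def pairwise_slope_operations(n):
    """Count slope evaluations made by a naive all-pairs loop over n points."""
    ops = 0
    for i in range(n):
        for j in range(i + 1, n):
            ops += 1  # one elementary slope computation for the pair (i, j)
    return ops


for n in (10, 100, 1000):
    print(n, pairwise_slope_operations(n))  # 45, 4950, 499500: n * (n - 1) / 2
```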

Confidence Interval

In frequentist statistics, a confidence interval (CI) is a range of estimates for an unknown parameter. A confidence interval is computed at a designated *confidence level*; the 95% confidence level is most common, but other levels, such as 90% or 99%, are sometimes used. The confidence level represents the long-run proportion of corresponding CIs that contain the true value of the parameter. For example, out of all intervals computed at the 95% level, 95% of them should contain the parameter's true value. Factors affecting the width of the CI include the sample size, the variability in the sample, and the confidence level. All else being the same, a larger sample produces a narrower confidence interval, greater variability in the sample produces a wider confidence interval, and a higher confidence level produces a wider confidence interval.

Let X be a random sample from a probability distribution with statistical parameter θ, which is a quantity to be esti ...
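The long-run interpretation of the confidence level can be checked by simulation. The sketch below assumes normally distributed data with a known standard deviation, so it uses the textbook z-interval for the mean. This choice is made for illustration and is not taken from the text. The function names and default parameters are hypothetical. Across many repeated samples, the fraction of intervals that contain the true mean should be close to the nominal level.

```python
import random
from statistics import NormalDist, fmean


def z_interval(sample, sigma, level=0.95):
    """Two-sided CI for the mean of a normal sample with known sigma."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% level
    centre = fmean(sample)
    half_width = z * sigma / len(sample) ** 0.5
    return centre - half_width, centre + half_width


def coverage(mu=10.0, sigma=2.0, n=25, reps=2000, level=0.95, seed=1):
    """Fraction of simulated intervals that contain the true mean mu."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        low, high = z_interval(sample, sigma, level)
        hits += low <= mu <= high  # True counts as 1
    return hits / reps
```

Raising `level` from 0.95 to 0.99 widens every interval, which matches the statement that a higher confidence level produces a wider confidence interval.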

CUSUM

In statistical quality control, the CUSUM (or cumulative sum control chart) is a sequential analysis technique developed by E. S. Page of the University of Cambridge. It is typically used for change detection in process monitoring. CUSUM was announced in Biometrika in 1954, a few years after the publication of Wald's sequential probability ratio test (SPRT). E. S. Page referred to a "quality number" θ, by which he meant a parameter of the probability distribution, for example the mean. He devised CUSUM as a method to detect changes in it, and proposed a criterion for deciding when to take corrective action. When the CUSUM method is applied to changes in the mean, it can be used for step detection of a time series. A few years later, George Alfred Barnard developed a visualization method, the V-mask chart, to detect both increases and decreases in θ.

As its name implies, CUSUM involves the calculation of a cumulative sum (which is what makes it "sequential"). Samples fro ...
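The cumulative-sum idea can be sketched as a one-sided upper CUSUM that watches for an increase in the mean. This is one common tabular formulation, assumed here for illustration. The statistic accumulates deviations above `target_mean + slack` and is reset to zero whenever it would go negative. An alarm is raised once it exceeds `threshold`. The parameter names and values are hypothetical tuning choices, not taken from Page's paper.

```python
def upper_cusum_alarm(samples, target_mean, slack, threshold):
    """Return the index of the first alarm of a one-sided upper CUSUM, or None."""
    s = 0.0
    for i, x in enumerate(samples):
        # Accumulate deviations above target_mean + slack; never drop below 0.
        s = max(0.0, s + (x - target_mean - slack))
        if s > threshold:
            return i
    return None
```

Consider ten readings at 0 followed by readings at 2, with `target_mean=0`, `slack=0.5` and `threshold=4`. The statistic stays at zero before the shift and then grows by 1.5 per sample. The alarm fires at index 12, the third sample after the step.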

Analytical Chemistry

Analytical chemistry studies and uses instruments and methods to separate, identify, and quantify matter. In practice, separation, identification or quantification may constitute the entire analysis or be combined with another method. Separation isolates analytes. Qualitative analysis identifies analytes, while quantitative analysis determines the numerical amount or concentration. Analytical chemistry consists of classical, wet chemical methods and modern, instrumental methods. Classical qualitative methods use separations such as precipitation, extraction, and distillation. Identification may be based on differences in color, odor, melting point, boiling point, solubility, radioactivity or reactivity. Classical quantitative analysis uses mass or volume changes to quantify amount. Instrumental methods may be used to separate samples using chromatography, electrophoresis or field flow fractionation. Then qualitative and quantitative analysis can be performed, often with ...