
Omnibus Test

Omnibus tests are a kind of statistical test. They test whether the explained variance in a set of data is significantly greater than the unexplained variance, overall. One example is the F-test in the analysis of variance. There can be legitimate significant effects within a model even if the omnibus test is not significant. For instance, in a model with two independent variables, if only one variable exerts a significant effect on the dependent variable and the other does not, then the omnibus test may be non-significant. This fact does not affect the conclusions that may be drawn from the one significant variable. To test effects within an omnibus test, researchers often use contrasts. "Omnibus test", as a general name, refers to an overall or global test. Other names include F-test or chi-squared test. It is a statistical test implemented on an overall hypothesis that tends to find general significance between parameters' variance, while examining parameters of th ...
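As a rough illustration of the F-test mentioned above, the following sketch computes the one-way ANOVA F statistic as the ratio of explained (between-group) to unexplained (within-group) mean squares. The function name `one_way_f` and the sample data are hypothetical, chosen only for this example; converting F to a p-value would additionally need the F distribution, which is not shown here.

```python
from statistics import mean


def one_way_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` is a list of lists of numbers, one inner list per group.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])

    # Explained variation: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Unexplained variation: spread of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical data: three groups of three observations each.
example_groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_stat, df_b, df_w = one_way_f(example_groups)
print(f_stat, df_b, df_w)
```

A large F indicates that the group means differ by more than the within-group scatter would suggest. It does not say which groups differ, which is why contrasts or post hoc tests are used afterwards.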

Statistical Test

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters. Early use: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth. Modern origins and early controversy: Modern significance testing is largely the product of Karl Pearson (p-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted ...

Bonferroni Correction

In statistics, the Bonferroni correction is a method to counteract the multiple comparisons problem. Background: The method is named for its use of the Bonferroni inequalities. An extension of the method to confidence intervals was proposed by Olive Jean Dunn. Statistical hypothesis testing is based on rejecting the null hypothesis if the likelihood of the observed data under the null hypothesis is low. If multiple hypotheses are tested, the probability of observing a rare event increases, and therefore the probability of incorrectly rejecting a null hypothesis (i.e., making a Type I error) increases. The Bonferroni correction compensates for that increase by testing each individual hypothesis at a significance level of $\alpha/m$, where $\alpha$ is the desired overall alpha level and $m$ is the number of hypotheses. For example, if a trial is testing $m = 20$ hypotheses with a desired $\alpha = 0.05$, then the Bonferroni correction would test each individual hypothesis at $\alpha = 0.05/20 =$ ...
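The per-test threshold described above can be sketched in a few lines. The function names and the example p-values below are hypothetical and serve only to illustrate the rule of comparing each p-value against the overall level divided by the number of hypotheses.

```python
def bonferroni_threshold(alpha, m):
    """Per-hypothesis significance level for m tests at overall level alpha."""
    return alpha / m


def bonferroni_reject(p_values, alpha=0.05):
    """Return one boolean per p-value: True where the null is rejected.

    Each p-value is compared against alpha / m, with m = len(p_values).
    """
    threshold = bonferroni_threshold(alpha, len(p_values))
    return [p <= threshold for p in p_values]


# Hypothetical p-values from three tests; the threshold is 0.05 / 3.
decisions = bonferroni_reject([0.001, 0.02, 0.04], alpha=0.05)
print(decisions)
```

Note that 0.02 and 0.04 would each pass an uncorrected 0.05 cut-off but fail the corrected one, which is the intended conservative behaviour.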

Neyman–Pearson Lemma

In statistics, the Neyman–Pearson lemma was introduced by Jerzy Neyman and Egon Pearson in a paper in 1933. The Neyman–Pearson lemma is part of the Neyman–Pearson theory of statistical testing, which introduced concepts like errors of the second kind, power function, and inductive behavior ("The Fisher, Neyman-Pearson Theories of Testing Hypotheses: One Theory or Two?", Journal of the American Statistical Association, Vol 88, No 424; Wald, Chapter II: "The Neyman-Pearson Theory of Testing a Statistical Hypothesis"; The Empire of Chance). The previous Fisherian theory of significance testing postulated only one hypothesis. By introducing a competing hypothesis, the Neyman–Pearsonian flavor of statistical testing allows investigating the ...

Likelihood-ratio Test

In statistics, the likelihood-ratio test assesses the goodness of fit of two competing statistical models based on the ratio of their likelihoods, specifically one found by maximization over the entire parameter space and another found after imposing some constraint. If the constraint (i.e., the null hypothesis) is supported by the observed data, the two likelihoods should not differ by more than sampling error. Thus the likelihood-ratio test tests whether this ratio is significantly different from one, or equivalently whether its natural logarithm is significantly different from zero. The likelihood-ratio test, also known as the Wilks test, is the oldest of the three classical approaches to hypothesis testing, together with the Lagrange multiplier test and the Wald test. In fact, the latter two can be conceptualized as approximations to the likelihood-ratio test, and are asymptotically equivalent. In the case of comparing two models each of which has no unknown parameters, us ...
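To make the comparison of a constrained and an unconstrained likelihood concrete, here is a minimal sketch for a single binomial proportion. It assumes the usual large-sample chi-squared approximation with one degree of freedom for twice the log-likelihood difference; that approximation, the function names, and the example counts are assumptions of this illustration, not part of the text above.

```python
import math


def binomial_log_likelihood(p, successes, n):
    """Log-likelihood of p, omitting the binomial coefficient (it cancels in the ratio)."""
    return successes * math.log(p) + (n - successes) * math.log(1 - p)


def likelihood_ratio_test(successes, n, p0=0.5):
    """Test H0: p = p0 against an unconstrained p. Requires 0 < successes < n.

    Returns (statistic, approximate p-value).
    """
    p_hat = successes / n  # maximizer over the entire parameter space
    ll_full = binomial_log_likelihood(p_hat, successes, n)
    ll_null = binomial_log_likelihood(p0, successes, n)
    # Twice the log of the likelihood ratio; clamp tiny negative rounding errors.
    stat = max(2.0 * (ll_full - ll_null), 0.0)
    # Upper tail of a chi-squared variable with 1 degree of freedom:
    # P(X > s) = P(|Z| > sqrt(s)) = erfc(sqrt(s / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# Hypothetical data: 60 successes in 100 trials, tested against p0 = 0.5.
stat, p_value = likelihood_ratio_test(60, 100)
print(stat, p_value)
```

When the constrained value equals the maximum-likelihood estimate, the two log-likelihoods coincide, the statistic is zero, and the approximate p-value is one.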

Stepwise Regression

In statistics, stepwise regression is a method of fitting regression models in which the choice of predictive variables is carried out by an automatic procedure. In each step, a variable is considered for addition to or subtraction from the set of explanatory variables based on some prespecified criterion. Usually, this takes the form of a forward, backward, or combined sequence of F-tests or t-tests. The frequent practice of fitting the final selected model followed by reporting estimates and confidence intervals without adjusting them to take the model building process into account has led to calls to stop using stepwise model building altogether (Flom, P. L. and Cassell, D. L. (2007) "Stopping stepwise: Why stepwise and similar selection methods are bad, and what you should use," NESUG 2007) or to at least make sure model uncertainty is correctly reflected (Chatfield, C. (1995) "Model uncertainty, data mining and statistical inference," J. R. Statist. Soc. A 158, Part ...

Type I And Type II Errors

In statistical hypothesis testing, a type I error is the mistaken rejection of an actually true null hypothesis (also known as a "false positive" finding or conclusion; example: "an innocent person is convicted"), while a type II error is the failure to reject a null hypothesis that is actually false (also known as a "false negative" finding or conclusion; example: "a guilty person is not convicted"). Much of statistical theory revolves around the minimization of one or both of these errors, though the complete elimination of either is a statistical impossibility if the outcome is not determined by a known, observable causal process. By selecting a low threshold (cut-off) value and modifying the alpha (α) level, the quality of the hypothesis test can be increased. The knowledge of type I errors and type II errors is widely used in medical science, biometrics and computer science. Intuitively, type I errors can be thought of as errors of commission, i.e. the researcher unlu ...

Post-hoc Analysis

In a scientific study, post hoc analysis (from Latin post hoc, "after this") consists of statistical analyses that were specified after the data were seen. They are usually used to uncover specific differences between three or more group means when an analysis of variance (ANOVA) test is significant. This typically creates a multiple testing problem because each potential analysis is effectively a statistical test. Multiple testing procedures are sometimes used to compensate, but that is often difficult or impossible to do precisely. Post hoc analysis that is conducted and interpreted without adequate consideration of this problem is sometimes called data dredging by critics because the statistical associations that it finds are often spurious. Common post hoc tests: Some common post hoc tests include (Pamplona, Fabricio, 2022-07-28, "Post Hoc Analysis: Process and types of tests", https://mindthegraph.com/blog/post-hoc-analysis/): ...

SPSS

SPSS Statistics is a statistical software suite developed by IBM for data management, advanced analytics, multivariate analysis, business intelligence, and criminal investigation. Long produced by SPSS Inc., it was acquired by IBM in 2009. Current versions (post 2015) have the brand name IBM SPSS Statistics. The software name originally stood for Statistical Package for the Social Sciences (SPSS), reflecting the original market, then later changed to Statistical Product and Service Solutions. Overview: SPSS is a widely used program for statistical analysis in social science. It is also used by market researchers, health researchers, survey companies, government, education researchers, marketing organizations, data miners, and others. The original SPSS manual (Nie, Bent & Hull, 1970) has been described as one of "sociology's most influential books" for allowing ordinary researchers to do their own statistical analysis. In addition to statistical analysis, data management (case ...

P-value

In null-hypothesis significance testing, the p-value is the probability of obtaining test results at least as extreme as the result actually observed, under the assumption that the null hypothesis is correct. A very small p-value means that such an extreme observed outcome would be very unlikely under the null hypothesis. Reporting p-values of statistical tests is common practice in academic publications of many quantitative fields. Since the precise meaning of the p-value is hard to grasp, misuse is widespread and has been a major topic in metascience. Basic concepts: In statistics, every conjecture concerning the unknown probability distribution of a collection of random variables representing the observed data X in some study is called a statistical hypothesis. If we state one hypothesis only and the aim of the statistical test is to see whether this hypothesis is tenable, but not to investigate other specific hypotheses, then such a test is called a null ...
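The definition above, the probability of a result at least as extreme as the one observed when the null hypothesis holds, can be computed exactly in simple discrete cases. The sketch below does this for a one-sided binomial test; the function name, the choice of the upper tail as "more extreme", and the coin-flip example are assumptions made for illustration.

```python
from math import comb


def binomial_p_value_upper(successes, n, p0=0.5):
    """One-sided p-value: P(X >= successes) when X ~ Binomial(n, p0).

    "At least as extreme" is taken here to mean "at least as many successes".
    """
    return sum(
        comb(n, k) * p0 ** k * (1 - p0) ** (n - k)
        for k in range(successes, n + 1)
    )


# Hypothetical data: 9 heads in 10 flips, null hypothesis of a fair coin.
# Only the outcomes with 9 or 10 heads are at least as extreme.
p_value = binomial_p_value_upper(9, 10)
print(p_value)
```

The result is the probability of the data (or something more extreme) given the null hypothesis. It is not the probability that the null hypothesis is true, which is one of the common misreadings the passage alludes to.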

Bootstrapping (statistics)

Bootstrapping is any test or metric that uses random sampling with replacement (e.g. mimicking the sampling process), and falls under the broader class of resampling methods. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Bootstrapping estimates the properties of an estimand (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for ...
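A minimal sketch of the resampling idea follows: draw many samples with replacement from the observed data, recompute the statistic on each, and read an interval off the resulting empirical distribution. The percentile interval used here is only one of several bootstrap interval constructions, and the function name, parameters, and data are hypothetical.

```python
import random
from statistics import mean


def bootstrap_ci(data, stat=mean, n_resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for `stat` applied to `data`."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(data)
    # Each resample has the same size as the data and is drawn with replacement.
    estimates = sorted(
        stat(rng.choices(data, k=n)) for _ in range(n_resamples)
    )
    lower_index = int((1 - level) / 2 * n_resamples)
    upper_index = int((1 + level) / 2 * n_resamples) - 1
    return estimates[lower_index], estimates[upper_index]


# Hypothetical sample of eight measurements.
sample = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.7]
lower, upper = bootstrap_ci(sample)
print(lower, upper)
```

Passing a different function as `stat` (for example `statistics.median`) gives an interval for that statistic without any change to the resampling loop.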

Normal Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

$$ f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2} $$

The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma$ is its standard deviation. The variance of the distribution is $\sigma^2$. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable whose distribution converges to a normal dist ...
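For readers who want to evaluate the density numerically, the sketch below implements the standard closed form of the normal density with mean `mu` and standard deviation `sigma`, and compares it against `statistics.NormalDist` from the Python standard library. The function name `normal_pdf` and the evaluation points are chosen only for this example.

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean mu and standard deviation sigma.

    Implements exp(-((x - mu) / sigma)**2 / 2) / (sigma * sqrt(2 * pi)).
    """
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


# Compare the hand-written formula with the standard-library implementation.
x, mu, sigma = 1.3, 0.5, 2.0
print(normal_pdf(x, mu, sigma), NormalDist(mu, sigma).pdf(x))
```

The density is symmetric about `mu` and attains its maximum there, which is consistent with the mean, median, and mode coinciding.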

Analysis Of Covariance

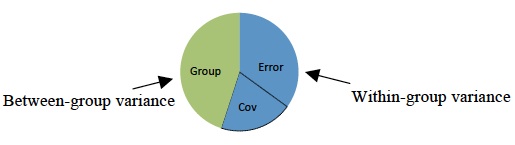

Analysis of covariance (ANCOVA) is a general linear model which blends ANOVA and regression. ANCOVA evaluates whether the means of a dependent variable (DV) are equal across levels of a categorical independent variable (IV) often called a treatment, while statistically controlling for the effects of other continuous variables that are not of primary interest, known as covariates (CV) or nuisance variables. Mathematically, ANCOVA decomposes the variance in the DV into variance explained by the CV(s), variance explained by the categorical IV, and residual variance. Intuitively, ANCOVA can be thought of as 'adjusting' the DV by the group means of the CV(s). The ANCOVA model assumes a linear relationship between the response (DV) and covariate (CV): $y_{ij} = \mu + \tau_i + \mathrm{B}(x_{ij} - \overline{x}) + \epsilon_{ij}$. In this equation, the DV, $y_{ij}$, is the $j$th observation under the $i$th categorical group; the CV, $x_{ij}$, is the $j$th observation of the covariate under the $i$th group. Variables in th ...