|

Mutex

In computer science, a lock or mutex (from mutual exclusion) is a synchronization primitive that prevents state from being modified or accessed by multiple threads of execution at once. Locks enforce mutual exclusion concurrency control policies, and with a variety of possible methods there exist multiple unique implementations for different applications. Types Generally, locks are ''advisory locks'', where each thread cooperates by acquiring the lock before accessing the corresponding data. Some systems also implement ''mandatory locks'', where attempting unauthorized access to a locked resource will force an exception in the entity attempting to make the access. The simplest type of lock is a binary semaphore. It provides exclusive access to the locked data. Other schemes also provide shared access for reading data. Other widely implemented access modes are exclusive, intend-to-exclude and intend-to-upgrade. Another way to classify locks is by what happens when the lock stra ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Semaphore (programming)

In computer science, a semaphore is a variable or abstract data type used to control access to a common resource by multiple threads and avoid critical section problems in a concurrent system such as a multitasking operating system. Semaphores are a type of synchronization primitive. A trivial semaphore is a plain variable that is changed (for example, incremented or decremented, or toggled) depending on programmer-defined conditions. A useful way to think of a semaphore as used in a real-world system is as a record of how many units of a particular resource are available, coupled with operations to adjust that record ''safely'' (i.e., to avoid race conditions) as units are acquired or become free, and, if necessary, wait until a unit of the resource becomes available. Though semaphores are useful for preventing race conditions, they do not guarantee their absence. Semaphores that allow an arbitrary resource count are called counting semaphores, while semaphores that are ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Synchronization Primitive

In computer science, synchronization is the task of coordinating multiple processes to join up or handshake at a certain point, in order to reach an agreement or commit to a certain sequence of action. Motivation The need for synchronization does not arise merely in multi-processor systems but for any kind of concurrent processes; even in single processor systems. Mentioned below are some of the main needs for synchronization: '' Forks and Joins:'' When a job arrives at a fork point, it is split into N sub-jobs which are then serviced by n tasks. After being serviced, each sub-job waits until all other sub-jobs are done processing. Then, they are joined again and leave the system. Thus, parallel programming requires synchronization as all the parallel processes wait for several other processes to occur. '' Producer-Consumer:'' In a producer-consumer relationship, the consumer process is dependent on the producer process until the necessary data has been produced. ''Exclusive ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Synchronization (computer Science)

In computer science, synchronization is the task of coordinating multiple processes to join up or handshake at a certain point, in order to reach an agreement or commit to a certain sequence of action. Motivation The need for synchronization does not arise merely in multi-processor systems but for any kind of concurrent processes; even in single processor systems. Mentioned below are some of the main needs for synchronization: '' Forks and Joins:'' When a job arrives at a fork point, it is split into N sub-jobs which are then serviced by n tasks. After being serviced, each sub-job waits until all other sub-jobs are done processing. Then, they are joined again and leave the system. Thus, parallel programming requires synchronization as all the parallel processes wait for several other processes to occur. '' Producer-Consumer:'' In a producer-consumer relationship, the consumer process is dependent on the producer process until the necessary data has been produced. ''Exclusiv ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans Theoretical computer science, theoretical disciplines (such as algorithms, theory of computation, and information theory) to Applied science, applied disciplines (including the design and implementation of Computer architecture, hardware and Software engineering, software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of computational problem, problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics (computer science), Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Interrupt

In digital computers, an interrupt (sometimes referred to as a trap) is a request for the processor to ''interrupt'' currently executing code (when permitted), so that the event can be processed in a timely manner. If the request is accepted, the processor will suspend its current activities, save its state, and execute a function called an '' interrupt handler'' (or an ''interrupt service routine'', ISR) to deal with the event. This interruption is often temporary, allowing the software to resume normal activities after the interrupt handler finishes, although the interrupt could instead indicate a fatal error. Interrupts are commonly used by hardware devices to indicate electronic or physical state changes that require time-sensitive attention. Interrupts are also commonly used to implement computer multitasking and system calls, especially in real-time computing. Systems that use interrupts in these ways are said to be interrupt-driven. History Hardware interrupts wer ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Resource Contention

In computer science, resource contention is a conflict over access to a shared resource such as random access memory, disk storage, cache memory, internal buses or external network devices. A resource experiencing ongoing contention can be described as oversubscribed. Resolving resource contention problems is one of the basic functions of operating systems. Various low-level mechanisms can be used to aid this, including locks, semaphores, mutexes and queues. The other techniques that can be applied by the operating systems include intelligent scheduling, application mapping decisions, and page coloring. Access to resources is also sometimes regulated by queuing; in the case of computing time on a CPU the controlling algorithm of the task queue is called a scheduler. Failure to properly resolve resource contention problems may result in a number of problems, including deadlock, livelock, and thrashing. Resource contention results when multiple processes attempt to use th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Java (programming Language)

Java is a High-level programming language, high-level, General-purpose programming language, general-purpose, Memory safety, memory-safe, object-oriented programming, object-oriented programming language. It is intended to let programmers ''write once, run anywhere'' (Write once, run anywhere, WORA), meaning that compiler, compiled Java code can run on all platforms that support Java without the need to recompile. Java applications are typically compiled to Java bytecode, bytecode that can run on any Java virtual machine (JVM) regardless of the underlying computer architecture. The syntax (programming languages), syntax of Java is similar to C (programming language), C and C++, but has fewer low-level programming language, low-level facilities than either of them. The Java runtime provides dynamic capabilities (such as Reflective programming, reflection and runtime code modification) that are typically not available in traditional compiled languages. Java gained popularity sh ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

C Sharp (programming Language)

C# ( pronounced: C-sharp) ( ) is a general-purpose high-level programming language supporting multiple paradigms. C# encompasses static typing, strong typing, lexically scoped, imperative, declarative, functional, generic, object-oriented (class-based), and component-oriented programming disciplines. The principal inventors of the C# programming language were Anders Hejlsberg, Scott Wiltamuth, and Peter Golde from Microsoft. It was first widely distributed in July 2000 and was later approved as an international standard by Ecma (ECMA-334) in 2002 and ISO/ IEC (ISO/IEC 23270 and 20619) in 2003. Microsoft introduced C# along with .NET Framework and Microsoft Visual Studio, both of which are technically speaking, closed-source. At the time, Microsoft had no open-source products. Four years later, in 2004, a free and open-source project called Microsoft Mono began, providing a cross-platform compiler and runtime environment for the C# programming language. A decad ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

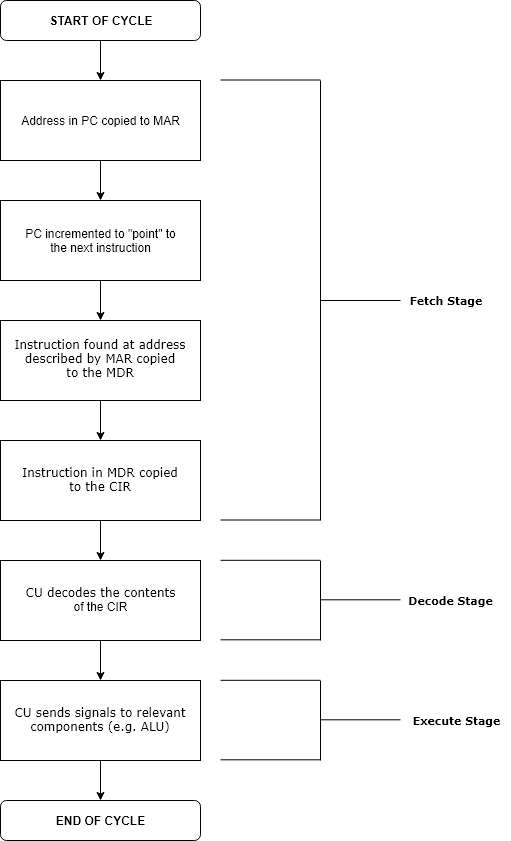

Run Time (program Lifecycle Phase)

Execution in computer and software engineering is the process by which a computer or virtual machine interprets and acts on the instructions of a computer program. Each instruction of a program is a description of a particular action which must be carried out, in order for a specific problem to be solved. Execution involves repeatedly following a " fetch–decode–execute" cycle for each instruction done by the control unit. As the executing machine follows the instructions, specific effects are produced in accordance with the semantics of those instructions. Programs for a computer may be executed in a batch process without human interaction or a user may type commands in an interactive session of an interpreter. In this case, the "commands" are simply program instructions, whose execution is chained together. The term run is used almost synonymously. A related meaning of both "to run" and "to execute" refers to the specific action of a user starting (or ''launching'' o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Livelock

In concurrent computing, deadlock is any situation in which no member of some group of entities can proceed because each waits for another member, including itself, to take action, such as sending a message or, more commonly, releasing a lock. Deadlocks are a common problem in multiprocessing systems, parallel computing, and distributed systems, because in these contexts systems often use software or hardware locks to arbitrate shared resources and implement process synchronization. In an operating system, a deadlock occurs when a process or thread enters a waiting state because a requested system resource is held by another waiting process, which in turn is waiting for another resource held by another waiting process. If a process remains indefinitely unable to change its state because resources requested by it are being used by another process that itself is waiting, then the system is said to be in a deadlock. In a communications system, deadlocks occur mainly due to loss ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Peterson's Algorithm

Peterson's algorithm (or Peterson's solution) is a concurrent programming algorithm for mutual exclusion that allows two or more processes to share a single-use resource without conflict, using only shared memory for communication. It was formulated by Gary L. Peterson in 1981.G. L. Peterson: "Myths About the Mutual Exclusion Problem", ''Information Processing Letters'' 12(3) 1981, 115–116 While Peterson's original formulation worked with only two processes, the algorithm can be generalized for more than two.As discussed in ''Operating Systems Review'', January 1990 ("Proof of a Mutual Exclusion Algorithm", M Hofri). The algorithm The algorithm uses two variables: flag and turn. A flag /code> value of true indicates that the process n wants to enter the critical section. Entrance to the critical section is granted for process P0 if P1 does not want to enter its critical section or if P1 has given priority to P0 by setting turn to 0. The algorithm satisfies the three essent ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |