
Modulo-N Code

Modulo-N code is a lossy compression algorithm that uses modular arithmetic to compress correlated data sources. Compression: when applied to two nodes in a network whose data values lie close to each other, modulo-N code requires one node (say the odd node) to send its raw data value as the coded value, $M_o = D_o$, while the even node sends $M_e = D_e \bmod N$; hence the name modulo-N code. Since at least $\log_2 K$ bits are required to represent a number $K$ in binary, the coded data of the two nodes requires $\log_2 M_o + \log_2 M_e$ bits. Because $M_e < N$, we can generally expect $\log_2 M_e \le \log_2 M_o$, which is how compression is achieved. The compression ratio achieved is $\text{C.R} = \frac{\log_2 M_o + \log_2 M_e}{2 \log_2 M_o}$. Decompression: at the receiver, joint decoding extracts the coded data and rebuilds the original values. The even node's value is reconstructed under the *assumption* that it must be close to the data from ...
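The scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names `encode` and `decode` are hypothetical, and because the summary is cut off before the decoding rule, the decoder below assumes that the even node's value is the number congruent to $M_e$ modulo $N$ that lies nearest to the odd node's value.

```python
def encode(d_odd: int, d_even: int, n: int) -> tuple[int, int]:
    """Odd node sends its raw value; even node sends its value modulo n."""
    return d_odd, d_even % n


def decode(m_odd: int, m_even: int, n: int) -> tuple[int, int]:
    """Assumed decoder: choose the value congruent to m_even (mod n)
    that is nearest to m_odd."""
    diff = (m_even - m_odd) % n   # offset in the range [0, n)
    if diff > n // 2:             # the nearer candidate lies below m_odd
        diff -= n
    return m_odd, m_odd + diff


# Values that are close together are recovered exactly.
print(decode(*encode(101, 99, 8), 8))   # (101, 99)
# Values further apart than about n/2 are not, which is why the code is lossy.
print(decode(*encode(101, 110, 8), 8))  # (101, 102)
```

Under this assumption the choice of $N$ is a trade-off: a smaller $N$ saves more bits but tolerates a smaller gap between the two readings.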

Lossy Compression

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size for storing, handling, and transmitting content. Higher degrees of approximation create coarser images as more details are removed. This is opposed to lossless data compression (reversible data compression) which does not degrade the data. The amount of data reduction possible using lossy compression is much higher than using lossless techniques. Well-designed lossy compression technology often reduces file sizes significantly before degradation is noticed by the end-user. Even when noticeable by the user, further data reduction may be desirable (e.g., for real-time communication or to reduce transmission times or storage needs). The most widely used lossy compression algorithm is the discrete cosine transform (DCT), first published by N ...

Correlated

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. Although in the broadest sense, "correlation" may indicate any type of association, in statistics it usually refers to the degree to which a pair of variables are *linearly* related. Familiar examples of dependent phenomena include the correlation between the height of parents and their offspring, and the correlation between the price of a good and the quantity the consumers are willing to purchase, as it is depicted in the demand curve. Correlations are useful because they can indicate a predictive relationship that can be exploited in practice. For example, an electrical utility may produce less power on a mild day based on the correlation between electricity demand and weather. In this example, there is a causal relationship, because extreme weather causes people to use more electricity for heating or cooling. However, in ...

Modular Arithmetic

In mathematics, modular arithmetic is a system of arithmetic operations for integers, other than the usual ones from elementary arithmetic, where numbers "wrap around" when reaching a certain value, called the modulus. The modern approach to modular arithmetic was developed by Carl Friedrich Gauss in his book *Disquisitiones Arithmeticae*, published in 1801. A familiar example of modular arithmetic is the hour hand on a 12-hour clock. If the hour hand points to 7 now, then 8 hours later it will point to 3. Ordinary addition would result in 7 + 8 = 15, but 15 reads as 3 on the clock face. This is because the hour hand makes one rotation every 12 hours and the hour number starts over when the hour hand passes 12. We say that 15 is *congruent* to 3 modulo 12, written 15 ≡ 3 (mod 12), so that 7 + 8 ≡ 3 (mod 12). Similarly, if one starts at 12 and waits 8 hours, the hour hand will be at 8. If one instead waited twice as long, 16 hours, the hour hand would be on 4. This ca ...
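The clock arithmetic in this summary can be checked with Python's `%` operator. The helper name `clock_hour` is hypothetical, and the only extra assumption is that a remainder of 0 is displayed as 12, as on a real clock face.

```python
def clock_hour(start: int, hours_later: int) -> int:
    """Hour shown on a 12-hour clock face after `hours_later` hours."""
    hour = (start + hours_later) % 12
    return 12 if hour == 0 else hour  # a clock face shows 12, not 0


print(clock_hour(7, 8))     # 3, because 7 + 8 = 15 and 15 ≡ 3 (mod 12)
print(clock_hour(12, 8))    # 8
print(clock_hour(12, 16))   # 4
print(15 % 12 == 3 % 12)    # True: 15 and 3 are congruent modulo 12
```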

Computer Networking

A computer network is a collection of communicating computers and other devices, such as printers and smartphones. In order to communicate, the computers and devices must be connected by wired media like copper cables, optical fibers, or by wireless communication. The devices may be connected in a variety of network topologies. In order to communicate over the network, computers use agreed-on rules, called communication protocols, over whatever medium is used. The computer network can include personal computers, servers, networking hardware, or other specialized or general-purpose hosts. They are identified by network addresses and may have hostnames. Hostnames serve as memorable labels for the nodes and are rarely changed after initial assignment. Network addresses serve for locating and identifying the nodes by communication protocols such as the Internet Protocol. Computer networks may be classified by many criteria, including the tr ...

Phase Unwrapping

Instantaneous phase and frequency are important concepts in signal processing that occur in the context of the representation and analysis of time-varying functions. The instantaneous phase (also known as local phase or simply phase) of a *complex-valued* function $s(t)$ is the real-valued function $\varphi(t) = \arg\{s(t)\}$, where arg is the complex argument function. The instantaneous frequency is the temporal rate of change of the instantaneous phase. For a *real-valued* function $s(t)$, the phase is determined from the function's analytic representation, $s_a(t)$: $\varphi(t) = \arg\{s_a(t)\} = \arg\{s(t) + j\hat{s}(t)\}$, where $j$ is the imaginary unit and $\hat{s}(t)$ represents the Hilbert transform of $s(t)$. When $\varphi(t)$ is constrained to its principal value, either the interval $(-\pi, \pi]$ or $[0, 2\pi)$, it is called *wrapped phase*. Otherwise it is called *unwrapped phase*, which is a continuous function of argument $t$, assuming $s_a(t)$ is a continuous function of $t$. Unless ot ...
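The difference between wrapped and unwrapped phase can be illustrated with a small standard-library sketch. The function names and the test signal (a sampled complex exponential whose phase grows by 0.5 radians per sample) are assumptions made for illustration. The unwrapping rule used here, which assumes that consecutive samples never differ by more than $\pi$, is one common approach and is not taken from the summary.

```python
import cmath
import math


def wrapped_phase(samples):
    """Principal-value phase of each complex sample, within [-pi, pi]."""
    return [cmath.phase(z) for z in samples]


def unwrap(phases):
    """Remove 2*pi jumps, assuming true steps between samples are below pi."""
    if not phases:
        return []
    out = [phases[0]]
    for p in phases[1:]:
        step = p - out[-1]
        # Map the step into [-pi, pi) so that wrap-around jumps disappear.
        step = (step + math.pi) % (2 * math.pi) - math.pi
        out.append(out[-1] + step)
    return out


true_phase = [0.5 * n for n in range(20)]          # grows past 2*pi
samples = [cmath.exp(1j * p) for p in true_phase]  # complex-valued s(t)
wrapped = wrapped_phase(samples)
unwrapped = unwrap(wrapped)

print(max(abs(w) for w in wrapped) <= math.pi)                        # True
print(all(abs(u - t) < 1e-9 for u, t in zip(unwrapped, true_phase)))  # True
```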

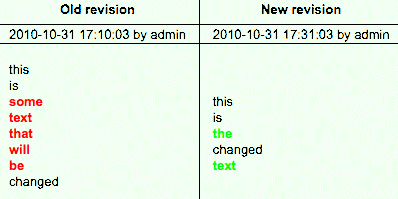

Delta Encoding

Delta encoding is a way of storing or transmitting data in the form of *differences* (deltas) between sequential data rather than complete files; more generally this is known as data differencing. Delta encoding is sometimes called delta compression, particularly where archival histories of changes are required (e.g., in revision control software). The differences are recorded in discrete files called "deltas" or "diffs". In situations where differences are small – for example, the change of a few words in a large document or the change of a few records in a large table – delta encoding greatly reduces data redundancy. Collections of unique deltas are substantially more space-efficient than their non-encoded equivalents. From a logical point of view, the difference between two data values is the information required to obtain one value from the other – see relative entropy. The difference between identical values (under some equivalence) is often called *0* or the ...
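For a sequence of numbers, the idea can be sketched as follows. This is a simplified illustration for integer sequences only; file-level diffs work on the same principle but need a differencing algorithm. The function names `delta_encode` and `delta_decode` are hypothetical.

```python
from itertools import accumulate


def delta_encode(values):
    """Keep the first value, then store each difference from its predecessor."""
    if not values:
        return []
    return [values[0]] + [b - a for a, b in zip(values, values[1:])]


def delta_decode(deltas):
    """Rebuild the original sequence with a running sum of the deltas."""
    return list(accumulate(deltas))


readings = [1000, 1002, 1003, 1003, 1007]
deltas = delta_encode(readings)
print(deltas)                             # [1000, 2, 1, 0, 4]
print(delta_decode(deltas) == readings)   # True
```

When neighbouring values are close, the deltas are small numbers that need fewer bits than the original values, which is where the space saving comes from.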

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
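The coin and die comparison can be made concrete with Shannon's entropy formula, $H = -\sum_i p_i \log_2 p_i$, measured in bits. The helper name `entropy_bits` is hypothetical; the sketch assumes the probabilities passed in sum to 1.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


coin = entropy_bits([0.5, 0.5])    # fair coin: 1 bit
die = entropy_bits([1 / 6] * 6)    # fair die: log2(6), about 2.585 bits

print(coin)
print(round(die, 3))
print(coin < die)                  # True: the coin flip carries less information
```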

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ...
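The encoder and decoder roles for the lossless case can be seen with Python's standard `zlib` module. The sample data below is made up for illustration; it is deliberately repetitive so that there is statistical redundancy to remove.

```python
import zlib

# Highly redundant input: the same short record repeated many times.
data = b"sensor reading: 21.5 C\n" * 200

compressed = zlib.compress(data)        # encoder
restored = zlib.decompress(compressed)  # decoder

print(len(data), len(compressed))       # the compressed form is much smaller
print(restored == data)                 # True: no information was lost
```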