|

Linearizability

In concurrent programming, an operation (or set of operations) is linearizable if it consists of an ordered list of invocation and response events, that may be extended by adding response events such that: # The extended list can be re-expressed as a sequential history (is serializable). # That sequential history is a subset of the original unextended list. Informally, this means that the unmodified list of events is linearizable if and only if its invocations were serializable, but some of the responses of the serial schedule have yet to return. In a concurrent system, processes can access a shared object at the same time. Because multiple processes are accessing a single object, a situation may arise in which while one process is accessing the object, another process changes its contents. Making a system linearizable is one solution to this problem. In a linearizable system, although operations overlap on a shared object, each operation appears to take place instantaneousl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Maurice Herlihy

Maurice Peter Herlihy (born 4 January 1954) is an American computer scientist active in the field of multiprocessor synchronization. Herlihy has contributed to areas including theoretical foundations of wait-free synchronization, linearizable data structures, applications of combinatorial topology to distributed computing, as well as hardware and software transactional memory. He is the An Wang Professor of Computer Science at Brown University, where he has been a member of the faculty since 1994. Herlihy was elected a member of the National Academy of Engineering in 2013 for concurrent computing techniques for linearizability, non-blocking data structures, and transactional memory. Recognition * 2003 Dijkstra Prize * 2004 Gödel Prize * 2005 Fellow of the Association for Computing Machinery * 2012 Dijkstra Prize * 2013 W. Wallace McDowell Award * 2013 National Academy of Engineering The National Academy of Engineering (NAE) is an American Nonprofit organization, nonpro ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Consistency Model

In computer science, a consistency model specifies a contract between the programmer and a system, wherein the system guarantees that if the programmer follows the rules for operations on memory, memory will be data consistency, consistent and the results of reading, writing, or updating memory will be predictable. Consistency models are used in Distributed computing, distributed systems like distributed shared memory systems or distributed data stores (such as filesystems, databases, optimistic replication systems or web caching). Consistency is different from coherence, which occurs in systems that are cache coherence, cached or cache-less, and is consistency of data with respect to all processors. Coherence deals with maintaining a global order in which writes to a single location or single variable are seen by all processors. Consistency deals with the ordering of operations to multiple locations with respect to all processors. High level languages, such as C++ and Java (progr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Jeannette Wing

Jeannette Marie Wing is the Executive Vice President for Research at Columbia University, where she is also a professor of computer science. Prior to her appointment on September 1, 2021, she served as the Avanessians Director of the Data Science Institute at Columbia University. Until June 30, 2017, she was Corporate Vice President of Microsoft Research with oversight of its core research laboratories around the world and Microsoft Research Connections. Prior to 2013, she was the President's Professor of Computer Science at Carnegie Mellon University, Pittsburgh, Pennsylvania, United States. She also served as assistant director for Computer and Information Science and Engineering at the NSF from 2007 to 2010. She was appointed the Columbia University executive vice president for research in 2021. Background Wing earned her S.B. and S.M. in Electrical Engineering and Computer Science at MIT in June 1979. Her advisers were Ronald Rivest and John Reiser. In 1983, she earned h ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Serializability

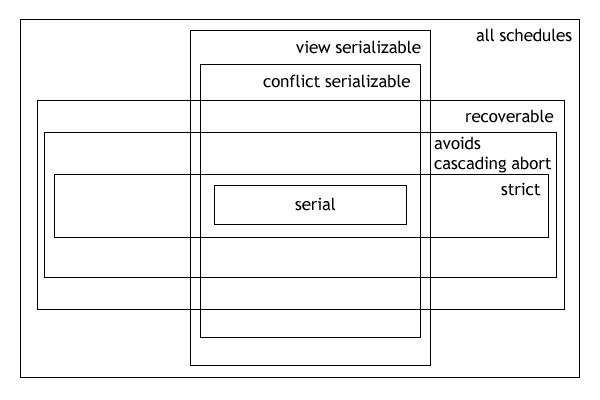

In the fields of databases and transaction processing (transaction management), a schedule (or history) of a system is an abstract model to describe the order of executions in a set of transactions running in the system. Often it is a ''list'' of operations (actions) ordered by time, performed by a set of transactions that are executed together in the system. If the order in time between certain operations is not determined by the system, then a ''partial order'' is used. Examples of such operations are requesting a read operation, reading, writing, aborting, committing, requesting a lock, locking, etc. Often, only a subset of the transaction operation types are included in a schedule. Schedules are fundamental concepts in database concurrency control theory. In practice, most general purpose database systems employ conflict-serializable and strict recoverable schedules. Notation Grid notation: * Columns: The different transactions in the schedule. * Rows: The time order of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lock-free And Wait-free Algorithms

In computer science, an algorithm is called non-blocking if failure or suspension of any thread cannot cause failure or suspension of another thread; for some operations, these algorithms provide a useful alternative to traditional blocking implementations. A non-blocking algorithm is lock-free if there is guaranteed system-wide progress, and wait-free if there is also guaranteed per-thread progress. "Non-blocking" was used as a synonym for "lock-free" in the literature until the introduction of obstruction-freedom in 2003. The word "non-blocking" was traditionally used to describe telecommunications networks that could route a connection through a set of relays "without having to re-arrange existing calls" (see Clos network). Also, if the telephone exchange "is not defective, it can always make the connection" (see nonblocking minimal spanning switch). Motivation The traditional approach to multi-threaded programming is to use locks to synchronize access to shared res ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary Processor (computing), processor in a given computer. Its electronic circuitry executes Instruction (computing), instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs). The form, CPU design, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic operation, arithmetic and Bitwise operation, logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the #Fetch, fetching (from memory), #Decode, decoding and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Test-and-set

In computer science, the test-and-set instruction is an instruction used to write (set) 1 to a memory location and return its old value as a single atomic (i.e., non- interruptible) operation. The caller can then "test" the result to see if the state was changed by the call. If multiple processes may access the same memory location, and if a process is currently performing a test-and-set, no other process may begin another test-and-set until the first process's test-and-set is finished. A central processing unit (CPU) may use a test-and-set instruction offered by another electronic component, such as dual-port RAM; a CPU itself may also offer a test-and-set instruction. A lock Lock(s) or Locked may refer to: Common meanings *Lock and key, a mechanical device used to secure items of importance *Lock (water navigation), a device for boats to transit between different levels of water, as in a canal Arts and entertainme ... can be built using an atomic test-and-set instructio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Fetch-and-add

In computer science, the fetch-and-add (FAA) CPU instruction atomically increments the contents of a memory location by a specified value. That is, fetch-and-add performs the following operation: increment the value at address by , where is a memory location and is some value, and return the original value at . in such a way that if this operation is executed by one process in a concurrent system, no other process will ever see an intermediate result. Fetch-and-add can be used to implement concurrency control structures such as mutex locks and semaphores. Overview The motivation for having an atomic fetch-and-add is that operations that appear in programming languages as are not safe in a concurrent system, where multiple processes or threads are running concurrently (either in a multi-processor system, or preemptively scheduled onto some single-core systems). The reason is that such an operation is actually implemented as multiple machine instructions: # load in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compare-and-swap

In computer science, compare-and-swap (CAS) is an atomic instruction used in multithreading to achieve synchronization. It compares the contents of a memory location with a given (the previous) value and, only if they are the same, modifies the contents of that memory location to a new given value. This is done as a single atomic operation. The atomicity guarantees that the new value is calculated based on up-to-date information; if the value had been updated by another thread in the meantime, the write would fail. The result of the operation must indicate whether it performed the substitution; this can be done either with a simple boolean response (this variant is often called compare-and-set), or by returning the value read from the memory location (''not'' the value written to it), thus "swapping" the read and written values. Overview A compare-and-swap operation is an atomic version of the following pseudocode, where denotes access through a pointer: function cas(p: poin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Load-link/store-conditional

In computer science, load-linked/store-conditional (LL/SC), sometimes known as load-reserved/store-conditional (LR/SC), are a pair of instructions used in multithreading to achieve synchronization. Load-link returns the current value of a memory location, while a subsequent store-conditional to the same memory location will store a new value only if no updates have occurred to that location since the load-link. Together, this implements a lock-free, atomic, read-modify-write operation. "Load-linked" is also known as load-link, load-reserved, and load-locked. LL/SC was originally proposed by Jensen, Hagensen, and Broughton for the S-1 AAP multiprocessor at Lawrence Livermore National Laboratory. Comparison of LL/SC and compare-and-swap If any updates have occurred, the store-conditional is guaranteed to fail, even if the value read by the load-link has since been restored. As such, an LL/SC pair is stronger than a read followed by a compare-and-swap (CAS), which will not de ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Instruction Pipeline

In computer engineering, instruction pipelining is a technique for implementing instruction-level parallelism within a single processor. Pipelining attempts to keep every part of the processor busy with some instruction by dividing incoming Machine code, instructions into a series of sequential steps (the eponymous "Pipeline (computing), pipeline") performed by different Central processing unit#Structure and implementation, processor units with different parts of instructions processed in parallel. Concept and motivation In a pipelined computer, instructions flow through the central processing unit (CPU) in stages. For example, it might have one stage for each step of the von Neumann architecture, von Neumann cycle: Fetch the instruction, fetch the operands, do the instruction, write the results. A pipelined computer usually has "pipeline registers" after each stage. These store information from the instruction and calculations so that the logic gates of the next stage can do th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |