
Latent Diffusion Model

The Latent Diffusion Model (LDM) is a diffusion model architecture developed by the CompVis (Computer Vision & Learning) group at LMU Munich. Diffusion models (DMs), introduced in 2015, are trained with the objective of removing successive applications of noise (commonly Gaussian) from training images. The LDM improves on standard DMs by performing diffusion modeling in a latent space and by allowing self-attention and cross-attention conditioning. LDMs are widely used in practical diffusion models; for instance, Stable Diffusion versions 1.1 to 2.1 were based on the LDM architecture.

Diffusion models were introduced in 2015 as a method to learn a model that can sample from a highly complex probability distribution. They used techniques from non-equilibrium thermodynamics, especially diffusion. The 2015 work was accompanied by a software implementation in Theano. A 2019 paper proposed the noise conditional score network (NCSN) or score-matching with Langevin dynami ...
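
As a rough illustration of the LDM idea of running diffusion on a compressed latent instead of on pixels, the sketch below encodes an image with a hypothetical stand-in encoder and then applies a DDPM-style closed-form forward noising step, $z_t = \sqrt{\bar\alpha_t}\, z_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$ with Gaussian $\epsilon$. The average-pooling `encode_stand_in`, the downsampling factor of 8, and the linear beta schedule (1e-4 to 0.02 over 1000 steps) are assumptions chosen for illustration; an actual LDM uses a learned autoencoder and a trained denoising network, neither of which is shown here.

```python
import numpy as np

def encode_stand_in(image, factor=8):
    """Hypothetical stand-in for the LDM autoencoder: average-pool by `factor`.
    A real LDM learns its encoder; this only mimics the spatial compression."""
    h, w, c = image.shape
    return image.reshape(h // factor, factor, w // factor, factor, c).mean(axis=(1, 3))

def linear_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """alpha_bar[t] = prod_{s<=t} (1 - beta_s) for a linear beta schedule (assumed)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def noise_latent(z0, t, alpha_bar, rng):
    """Sample z_t ~ N(sqrt(alpha_bar[t]) * z0, (1 - alpha_bar[t]) * I) in one step."""
    eps = rng.standard_normal(z0.shape)
    z_t = np.sqrt(alpha_bar[t]) * z0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return z_t, eps  # a denoising network would be trained to predict eps from (z_t, t)

rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(512, 512, 3))
z0 = encode_stand_in(image)  # 64x fewer spatial positions than the image
alpha_bar = linear_alpha_bar()
z_t, eps = noise_latent(z0, t=500, alpha_bar=alpha_bar, rng=rng)
print(image.shape, "->", z0.shape, "->", z_t.shape)  # (512, 512, 3) -> (64, 64, 3) -> (64, 64, 3)
```

Because every noising and denoising step operates on the 64x64 latent rather than the 512x512 image, each step touches far fewer values; the trained denoiser that reverses the process step by step is outside the scope of this sketch.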

Generative Artificial Intelligence

Generative artificial intelligence (generative AI, GenAI, or GAI) is a subset of artificial intelligence that uses generative models to produce text, images, videos, or other forms of data. These models learn the underlying patterns and structures of their training data and use them to produce new data based on the input, which often comes in the form of natural language prompts. Improvements in transformer-based deep neural networks, particularly large language models (LLMs), enabled an AI boom of generative AI systems in the early 2020s. These include chatbots such as ChatGPT, Microsoft Copilot, Gemini, and LLaMA; text-to-image artificial intelligence image generation systems such as Stable Diffusion, Midjourney, and DALL-E; and text-to-video AI generators such ...

Variational Autoencoder

In machine learning, a variational autoencoder (VAE) is an artificial neural network architecture introduced by Diederik P. Kingma and Max Welling, belonging to the families of probabilistic graphical models and variational Bayesian methods. Variational autoencoders are often associated with the autoencoder model because of their architectural affinity, but they differ significantly in goal and mathematical formulation. Variational autoencoders are probabilistic generative models that require neural networks as only a part of their overall structure, as, e.g., in VQ-VAE. The first and second neural network components are typically referred to as the encoder and the decoder, respectively. The first neural network maps the input variable to a latent space that corresponds to the parameters of a variational distribution. In this way, the encoder can produce multiple different samples that all come from the same distribution. The decoder has the opposite function, ...
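
The behaviour described above, where one encoder output defines a distribution from which many latents can be drawn, is usually implemented with the reparameterization trick: $z = \mu + \sigma \odot \epsilon$ with $\epsilon \sim \mathcal{N}(0, I)$. In this minimal sketch the encoder is a hypothetical fixed map returning a mean and a log-variance (a real VAE computes both with a neural network), and the KL term shown is the standard closed form for a diagonal Gaussian against a standard normal prior, which is assumed here rather than taken from the text.

```python
import numpy as np

def encoder_stand_in(x):
    # Hypothetical encoder: a real VAE computes mu and log_var with a neural network.
    mu = 0.5 * x
    log_var = np.full_like(x, -2.0)  # sigma = exp(-1), about 0.368, in every dimension
    return mu, log_var

def sample_latent(mu, log_var, rng, num_samples=1):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, I).
    The randomness lives in eps, so in an autodiff framework gradients reach mu and log_var."""
    sigma = np.exp(0.5 * log_var)
    eps = rng.standard_normal((num_samples,) + mu.shape)
    return mu + sigma * eps

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(sigma^2)) || N(0, I)), the regularizer in the usual VAE objective."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var))

rng = np.random.default_rng(0)
x = np.array([1.0, -2.0, 0.5])
mu, log_var = encoder_stand_in(x)
z = sample_latent(mu, log_var, rng, num_samples=10_000)  # many latents, one distribution
print(z.shape, z.mean(axis=0).round(2), z.std(axis=0).round(2))
```

The 10,000 latents all differ, yet their per-dimension means stay close to `mu` and their standard deviations close to 0.37, which is the sense in which they "all come from the same distribution".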

Convolutional Neural Network

In deep learning, a convolutional neural network (CNN, or ConvNet) is a class of artificial neural network (ANN) most commonly applied to analyze visual imagery. CNNs are also known as Shift Invariant or Space Invariant Artificial Neural Networks (SIANN), based on the shared-weight architecture of the convolution kernels or filters that slide along input features and provide translation-equivariant responses known as feature maps. Counter-intuitively, most convolutional neural networks are not invariant to translation, due to the downsampling operation they apply to the input. They have applications in image and video recognition, recommender systems, image classification, image segmentation, medical image analysis, natural language processing, brain–computer interfaces, and financial time series. CNNs are regularized versions of multilayer perceptrons. The term multilayer perceptron usually means a fully connected network, that is, one in which each neuron in one layer is connected to ...
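
The contrast between translation-equivariant convolution and the effect of downsampling can be checked numerically. This sketch uses a hand-written 1-D "valid" cross-correlation (the operation deep-learning convolution layers actually compute) and stride-2 subsampling as a stand-in for pooling or strided convolution; the signal, kernel, and stride are arbitrary illustrative choices.

```python
import numpy as np

def conv1d_valid(x, kernel):
    """1-D 'valid' cross-correlation, as computed by CNN convolution layers:
    out[i] = sum_j x[i + j] * kernel[j]."""
    k = len(kernel)
    return np.array([np.dot(x[i:i + k], kernel) for i in range(len(x) - k + 1)])

def downsample(y, stride=2):
    """Keep every `stride`-th sample, a stand-in for pooling or strided convolution."""
    return y[::stride]

x = np.zeros(16)
x[5] = 1.0                   # a single "feature" at position 5
x_shifted = np.roll(x, 1)    # the same feature moved to position 6
kernel = np.array([1.0, 2.0, 1.0])

y = conv1d_valid(x, kernel)
y_shifted = conv1d_valid(x_shifted, kernel)
# Equivariance: shifting the input shifts the feature map by the same amount.
equivariant = np.array_equal(np.roll(y, 1), y_shifted)

# After stride-2 downsampling, the shifted input no longer yields a shifted copy of the output.
d, d_shifted = downsample(y), downsample(y_shifted)
shift_of_downsampled = any(np.array_equal(np.roll(d, s), d_shifted) for s in range(len(d)))
print(equivariant, shift_of_downsampled)  # True False
```

A one-sample shift of the input moves the feature map by one sample, but after subsampling the output changes shape entirely ([0, 0, 2, 0, ...] versus [0, 0, 1, 1, ...]), so the downsampled representation is neither invariant nor simply shifted.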

Encoder Cross-attention

Encoder may refer to:

Electronic circuits
* Audio encoder, converts digital audio to analog audio signals
* Video encoder, converts digital video to analog video signals
* Simple encoder, assigns a binary code to an active input line
* Priority encoder, outputs a binary code representing the highest-priority active input
* 8b/10b encoder, creates DC balance on a communication transmission line

Media compression
* Compressor, encodes data (e.g., audio/video/images) into a smaller form (see codec)

Sensors
* Encoder (position)
** Rotary encoder, converts rotary position to electronic signals
** Linear encoder, converts linear position to electronic signals
** Absolute encoder
** Incremental encoder, converts position changes to electronic signals in real time
* Altitude encoder

See also
* Decoder (other)
* Encode (other)

The Encyclopedia of DNA Elements (ENCODE) is a public research project which aims to identify functional elements in the human genome. ENC ...

Vision Transformer

A Vision Transformer (ViT) is a transformer designed for vision processing tasks such as image recognition. Transformers found their initial applications in natural language processing (NLP) tasks, as demonstrated by language models such as BERT and GPT-3. By contrast, the typical image processing system uses a convolutional neural network (CNN); well-known projects include Xception, ResNet, EfficientNet, DenseNet, and Inception. Transformers measure the relationships between pairs of input tokens (words, in the case of text strings), termed attention, at a cost that is quadratic in the number of tokens. For images, the basic unit of analysis is the pixel. However, computing relationships for every pixel pair in a typical image is prohibitive in terms of memory and computation. Instead, ViT splits the image into small sections (e.g., 16x16 pixels) and computes relationships among these sections, at a drastically reduced cost. The sections (with positional embeddings) ...
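
The patch-based tokenization described above can be sketched with array reshapes. This minimal example assumes a 224x224 RGB image and 16x16 patches (illustrative values, not requirements), flattens each patch into one token, and compares the number of pixel pairs with the number of patch pairs that attention would have to score. The learned linear projection and positional embeddings that a real ViT applies to the tokens are omitted.

```python
import numpy as np

def patchify(image, patch=16):
    """Split an (H, W, C) image into non-overlapping patch x patch tiles and flatten
    each tile into one token vector of length patch * patch * C."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of the patch size")
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)       # (rows, cols, patch, patch, C)
    return tiles.reshape(-1, patch * patch * c)  # patches in row-major order

image = np.random.default_rng(0).random((224, 224, 3))
tokens = patchify(image)
pixel_pairs = (224 * 224) ** 2   # pairs if every pixel were a token
patch_pairs = len(tokens) ** 2   # pairs when every patch is a token
print(tokens.shape)              # (196, 768)
print(pixel_pairs, patch_pairs)  # 2517630976 38416
```

With these sizes the quadratic attention cost drops by a factor of 256 squared (65,536), because each token now covers 256 pixels.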

DALL-E

DALL-E (stylized as DALL·E) and DALL-E 2 are deep learning models developed by OpenAI to generate digital images from natural language descriptions, called "prompts". DALL-E was revealed by OpenAI in a blog post in January 2021, and uses a version of GPT-3 modified to generate images. In April 2022, OpenAI announced DALL-E 2, a successor designed to generate more realistic images at higher resolutions that "can combine concepts, attributes, and styles". OpenAI has not released source code for either model. On 20 July 2022, DALL-E 2 entered into a beta phase with invitations sent to 1 million waitlisted individuals; users can generate a certain number of images for free every month and may purchase more. Access had previously been restricted to pre-selected users for a research preview due to concerns about ethics and safety. On 28 September 2022, DALL-E 2 was opened to anyone and the waitlist requirement was removed. In early November 2022, OpenAI released DALL-E 2 as an API ...

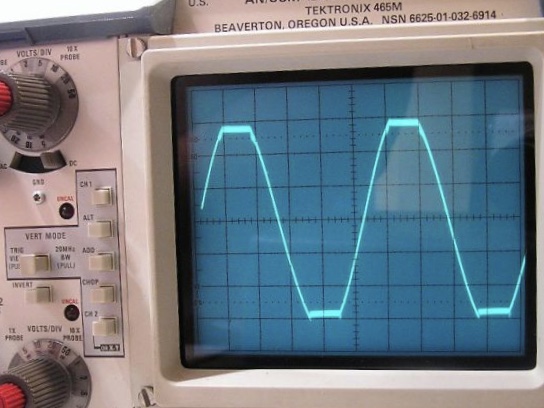

Clipping (Signal Processing)

Clipping is a form of distortion that limits a signal once it exceeds a threshold. Clipping may occur when a signal is recorded by a sensor that has constraints on the range of data it can measure, when a signal is digitized, or any other time an analog or digital signal is transformed, particularly in the presence of gain or overshoot and undershoot. Clipping may be described as hard, when the signal is strictly limited at the threshold, producing a flat cutoff, or as soft, when the clipped signal continues to follow the original at a reduced gain. Hard clipping results in many high-frequency harmonics; soft clipping results in fewer higher-order harmonics and intermodulation distortion components.

For audio signals, clipping produces strong harmonics in the high-frequency range of the frequency domain, as the clipped waveform comes closer to a square wave. The extra high-frequency weighting of the signal could m ...
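
Hard and soft clipping, and their different harmonic content, can be compared on a pure sine wave. In this sketch `np.clip` implements hard clipping and a scaled `tanh` stands in for soft clipping (tanh is one common soft-clipping curve, chosen here as an assumption). The helper measures the energy in the 11th to 51st harmonics relative to the fundamental; that band is an arbitrary choice for illustration.

```python
import numpy as np

def hard_clip(x, threshold):
    """Hard clipping: strictly limit the signal to [-threshold, threshold] (flat cutoff)."""
    return np.clip(x, -threshold, threshold)

def soft_clip(x, threshold):
    """Soft clipping via a tanh curve (illustrative choice): nearly linear for small
    inputs, bending smoothly toward +/-threshold instead of cutting off flat."""
    return threshold * np.tanh(x / threshold)

def harmonic_energy_ratio(y, cycles, first, last):
    """Energy in harmonics first..last relative to the fundamental. Assumes y spans an
    integer number of cycles, so harmonic k lands exactly on FFT bin k * cycles."""
    spectrum = np.abs(np.fft.rfft(y))
    harmonics = spectrum[cycles * np.arange(first, last + 1)]
    return np.sum(harmonics ** 2) / spectrum[cycles] ** 2

n, cycles = 4096, 4
t = np.arange(n) / n
x = 2.0 * np.sin(2 * np.pi * cycles * t)  # sine peaking at twice the threshold of 1.0

hard = hard_clip(x, 1.0)
soft = soft_clip(x, 1.0)
hard_ratio = harmonic_energy_ratio(hard, cycles, 11, 51)
soft_ratio = harmonic_energy_ratio(soft, cycles, 11, 51)
print(hard_ratio, soft_ratio)
print(hard_ratio > soft_ratio)  # True
```

The hard-clipped wave has corners where it meets the threshold, so its harmonics decay slowly; the tanh curve is smooth, so its high-order harmonics fall off much faster, which matches the "fewer higher-order harmonics" described above.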

Whitening Transformation

A whitening transformation or sphering transformation is a linear transformation that transforms a vector of random variables with a known covariance matrix into a set of new variables whose covariance is the identity matrix, meaning that they are uncorrelated and each have variance 1. The transformation is called "whitening" because it changes the input vector into a white noise vector.

Several other transformations are closely related to whitening:
1. the decorrelation transform removes only the correlations but leaves variances intact,
2. the standardization transform sets variances to 1 but leaves correlations intact,
3. a coloring transformation transforms a vector of white random variables into a random vector with a specified covariance matrix.

Formally, suppose $X$ is a random (column) vector with non-singular covariance matrix $\Sigma$ and mean $0$. Then the transformation $Y = WX$ with a whitening matrix $W$ satisfying the condition $W^\mathrm{T} W = \Sigma^{-1}$ yields the whitened ra ...
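
One concrete choice of a whitening matrix satisfying $W^\mathrm{T} W = \Sigma^{-1}$ is the symmetric inverse square root $W = \Sigma^{-1/2}$ (ZCA or Mahalanobis whitening), computed here from the eigendecomposition $\Sigma = U \operatorname{diag}(\lambda) U^\mathrm{T}$. The whitening matrix is not unique: for example, $W = L^{-1}$ with the Cholesky factorization $\Sigma = L L^\mathrm{T}$ also satisfies the condition. The 2x2 covariance matrix below is an arbitrary example.

```python
import numpy as np

def zca_whitening_matrix(sigma):
    """W = U diag(1 / sqrt(lambda)) U^T = Sigma^{-1/2}, so W^T W = Sigma^{-1}."""
    eigvals, eigvecs = np.linalg.eigh(sigma)  # sigma must be symmetric positive definite
    return eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T

sigma = np.array([[4.0, 1.2],
                  [1.2, 1.0]])
W = zca_whitening_matrix(sigma)
print(np.allclose(W.T @ W, np.linalg.inv(sigma)))  # True: the whitening condition
print(np.allclose(W @ sigma @ W.T, np.eye(2)))     # True: Cov(WX) = W Sigma W^T = I

# Empirical check on zero-mean samples (columns of X are draws of the random vector).
rng = np.random.default_rng(0)
X = rng.multivariate_normal(np.zeros(2), sigma, size=100_000).T
Y = W @ X
print(np.round(np.cov(Y), 2))  # approximately the 2x2 identity matrix
```

The second check follows directly from the condition: $\operatorname{Cov}(Y) = W \Sigma W^\mathrm{T}$, which equals $I$ whenever $W^\mathrm{T} W = \Sigma^{-1}$ and $W$ is square and invertible.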

Attention (Machine Learning)

In artificial neural networks, attention is a technique that is meant to mimic cognitive attention. It enhances some parts of the input data while diminishing other parts, the motivation being that the network should devote more focus to the small but important parts of the data. Which part of the data is more important than another depends on the context, and this is learned by gradient descent. Attention-like mechanisms were introduced in the 1990s under names like multiplicative modules, sigma pi units, and hyper-networks. Its flexibility comes from its role as "soft weights" that can change during runtime, in contrast to standard weights that must remain fixed at runtime. Uses of attention include memory in neural Turing machines, reasoning tasks in differentiable neural computers, language processing in transformers and LSTMs, and multi-sensory data processing (sound, images, video, and text) in perceivers. There are several types of attention incl ...
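
The "soft weights" idea can be made concrete with scaled dot-product attention, the variant used in transformers: query-key scores pass through a softmax, so each query's weights are non-negative and sum to 1, and the output is the correspondingly weighted sum of the values. This minimal NumPy sketch omits the learned query, key, and value projections and the multiple heads of a real transformer. Passing keys and values from a second sequence gives cross-attention, the kind of conditioning mentioned in the LDM entry; the random inputs are placeholders.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(queries, keys, values):
    """queries: (n_q, d_k); keys: (n_kv, d_k); values: (n_kv, d_v).
    Returns the outputs (n_q, d_v) and the attention weights (n_q, n_kv)."""
    d_k = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # "soft weights", recomputed for every input
    return weights @ values, weights

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 8))        # 5 tokens of one sequence
context = rng.standard_normal((3, 8))  # 3 tokens from another source (placeholder)

# Self-attention: queries, keys and values all come from x.
self_out, self_w = scaled_dot_product_attention(x, x, x)
# Cross-attention: queries from x, keys and values from the context.
cross_out, cross_w = scaled_dot_product_attention(x, context, context)
print(self_out.shape, cross_out.shape, cross_w.shape)  # (5, 8) (5, 8) (5, 3)
```

Unlike a layer's trained weight matrices, `self_w` and `cross_w` are produced fresh from each input, which is what distinguishes attention's runtime "soft weights" from weights that stay fixed after training.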