
Koomey's Law

Koomey's law describes a trend in the history of computing hardware: for about a half-century, the number of computations per joule of energy dissipated doubled about every 1.57 years. Professor Jonathan Koomey described the trend in a 2010 paper in which he wrote that "at a fixed computing load, the amount of battery you need will fall by a factor of two every year and a half." This trend had been remarkably stable since the 1950s (''R''² of over 98%). But in 2011, Koomey re-examined this data and found that after 2000, the doubling slowed to about once every 2.6 years. This is related to the slowing of Moore's law, the ability to build smaller transistors, and to the end around 2005 of Dennard scaling, the ability to build smaller transistors with constant power density. "The difference between these two growth rates is substantial. A doubling every year and a half results in a 100-fold increase in efficiency every decade. A doubling every two and a half years yields just a 16 ...
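The quoted comparison of growth rates is a compounding calculation: a fixed doubling time of T years gives a factor of 2^(10/T) per decade. A minimal Python sketch of that arithmetic follows; the function name `decade_gain` is illustrative and does not come from Koomey's paper.

```python
def decade_gain(doubling_time_years: float, span_years: float = 10.0) -> float:
    """Efficiency multiplier after span_years, assuming a constant doubling time."""
    return 2.0 ** (span_years / doubling_time_years)


# Doubling times mentioned in the entry above, in years.
for doubling_time in (1.5, 1.57, 2.5, 2.6):
    gain = decade_gain(doubling_time)
    print(f"doubling every {doubling_time} years -> about {gain:.0f}x per decade")
```

A doubling time of 1.5 years gives roughly a 100-fold gain per decade and 2.5 years gives exactly 16-fold, matching the figures in the quotation.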

Digital Signal Processor

A digital signal processor (DSP) is a specialized microprocessor chip, with its architecture optimized for the operational needs of digital signal processing. DSPs are fabricated on MOS integrated circuit chips. They are widely used in audio signal processing, telecommunications, digital image processing, radar, sonar and speech recognition systems, and in common consumer electronic devices such as mobile phones, disk drives and high-definition television (HDTV) products. The goal of a DSP is usually to measure, filter or compress continuous real-world analog signals. Most general-purpose microprocessors can also execute digital signal processing algorithms successfully, but may not be able to keep up with such processing continuously in real time. Dedicated DSPs also usually have better power efficiency, so they are better suited to portable devices such as mobile phones, where power consumption is constrained. DSPs often use special memory architectures that are able t ...

Communications Of The ACM

''Communications of the ACM'' is the monthly journal of the Association for Computing Machinery (ACM). It was established in 1958, with Saul Rosen as its first managing editor. It is sent to all ACM members. Articles are intended for readers with backgrounds in all areas of computer science and information systems. The focus is on the practical implications of advances in information technology and associated management issues; ACM also publishes a variety of more theoretical journals. The magazine straddles the boundary between a science magazine, a trade magazine, and a scientific journal. While the content is subject to peer review, the articles published are often summaries of research that may also be published elsewhere. Material published must be accessible and relevant to a broad readership. From 1960 onward, ''CACM'' also published algorithms, expressed in ALGOL. The collection of algorithms later became known as the Collected Algorithms of the ACM. See also: ''Journal of the A ...

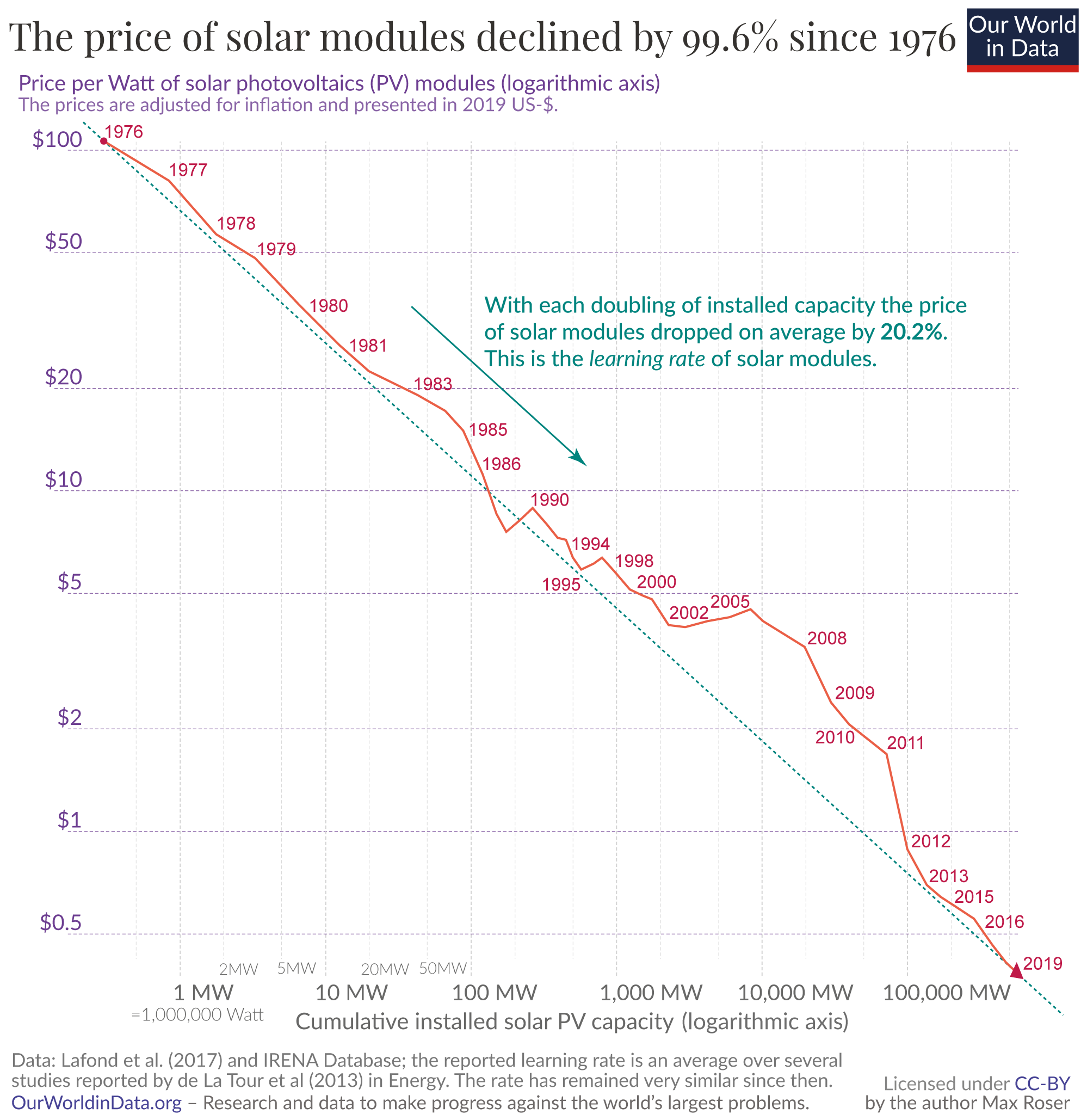

Swanson's Law

Swanson's law is the observation that the price of solar photovoltaic modules tends to drop 20 percent for every doubling of cumulative shipped volume. At present rates, costs go down 75% about every 10 years. Origin: It is named after Richard Swanson, the founder of SunPower Corporation, a solar panel manufacturer. The term ''Swanson's Law'' appears to have originated with an article in ''The Economist'' published in late 2012. Swanson had been presenting such curves at technical conferences for several years. It is a misnomer in that Swanson was not the first person to make this observation. Swanson's law has been compared to Moore's law, which predicts the growing computing power of processors. Swanson's law is a solar-industry-specific application of the more general Wright's law, which states that there will be a fixed cost reduction for each doubling of manufacturing volume. Technical Background: The method used ...
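The two figures in this entry (20 percent per doubling, 75% per decade) can be related with a short learning-curve calculation. The sketch below assumes the price follows a pure power law in cumulative volume, as Wright's law describes; the function names are illustrative and not taken from any source.

```python
import math

LEARNING_RATE = 0.20  # price drop per doubling of cumulative volume (from the entry)


def price_ratio(volume_ratio: float, learning_rate: float = LEARNING_RATE) -> float:
    """New price divided by old price after cumulative volume grows by volume_ratio."""
    doublings = math.log2(volume_ratio)
    return (1.0 - learning_rate) ** doublings


def doublings_for_drop(total_drop: float, learning_rate: float = LEARNING_RATE) -> float:
    """Number of volume doublings needed for the price to fall by total_drop (0..1)."""
    return math.log(1.0 - total_drop) / math.log(1.0 - learning_rate)


print(f"price after 4x cumulative volume: {price_ratio(4.0):.2f} of the original")
print(f"doublings needed for a 75% drop: {doublings_for_drop(0.75):.1f}")
```

Under this assumption, a 75% price drop in ten years corresponds to a little over six doublings of cumulative shipped volume in that decade.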

Performance Per Watt

In computing, performance per watt is a measure of the energy efficiency of a particular computer architecture or computer hardware. Literally, it measures the rate of computation that can be delivered by a computer for every watt of power consumed. This rate is typically measured by performance on the LINPACK benchmark when trying to compare between computing systems: an example using this is the Green500 list of supercomputers. Performance per watt has been suggested to be a more sustainable measure of computing than Moore’s Law. System designers building parallel computers, such as Google's hardware, pick CPUs based on their performance per watt of power, because the cost of powering the CPU outweighs the cost of the CPU itself. Spaceflight computers have hard limits on the maximum power available and also have hard requirements on minimum real-time performance. A ratio of processing speed to required electrical power is more useful than raw processing speed. D. J. Shirley; ...
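As a rough illustration of why the ratio can matter more than raw speed, the following Python sketch ranks two systems both ways. The system names and all numbers are hypothetical, chosen only to show that the fastest machine need not be the most efficient one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    gflops: float  # sustained benchmark rate, e.g. a LINPACK result
    watts: float   # average power drawn during the benchmark run

    @property
    def gflops_per_watt(self) -> float:
        """Performance per watt: computation rate divided by power consumed."""
        return self.gflops / self.watts


# Hypothetical figures, not measurements of any real hardware.
candidates = [
    System("fast-but-hungry", gflops=1200.0, watts=400.0),
    System("slower-but-frugal", gflops=900.0, watts=200.0),
]

fastest = max(candidates, key=lambda s: s.gflops)
most_efficient = max(candidates, key=lambda s: s.gflops_per_watt)

print(f"fastest: {fastest.name} ({fastest.gflops:.0f} GFLOPS)")
print(f"most efficient: {most_efficient.name} "
      f"({most_efficient.gflops_per_watt:.1f} GFLOPS/W)")
```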

Limits Of Computation

The limits of computation are governed by a number of different factors. In particular, there are several physical and practical limits to the amount of computation or data storage that can be performed with a given amount of mass, volume, or energy.

Hardware limits or physical limits:

Processing and memory density:
* The Bekenstein bound limits the amount of information that can be stored within a spherical volume to the entropy of a black hole with the same surface area.
* Thermodynamics limit the data storage of a system based on its energy, number of particles and particle modes. In practice, it is a stronger bound than the Bekenstein bound.

Processing speed:
* Bremermann's limit is the maximum computational speed of a self-contained system in the material universe, and is based on mass–energy versus quantum uncertainty constraints.

Communication delays:
* The Margolus–Levitin theorem sets a bound on the maximum computational speed per unit of energy: 6 × 10³³ op ...

Reversible Computing

Reversible computing is any model of computation where the computational process, to some extent, is time-reversible. In a model of computation that uses deterministic transitions from one state of the abstract machine to another, a necessary condition for reversibility is that the mapping from states to their successors must be one-to-one. Reversible computing is a form of unconventional computing. Due to the unitarity of quantum mechanics, quantum circuits are reversible, as long as they do not "collapse" the quantum states they operate on. Reversibility: There are two major, closely related types of reversibility that are of particular interest for this purpose: physical reversibility and logical reversibility. A process is said to be ''physically reversible'' if it results in no increase in physical entropy; it is isentropic. There is a style of circuit design ideally exhibiting this property that is referred to as charge recovery logic, adiabatic circui ...
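The one-to-one condition can be checked directly for a small, finite state space. The Python sketch below is illustrative only: the helper `is_logically_reversible` and the two example gates are assumptions made for this example, with states modelled as pairs of bits.

```python
from itertools import product
from typing import Callable, Hashable, Iterable


def is_logically_reversible(step: Callable[[Hashable], Hashable],
                            states: Iterable[Hashable]) -> bool:
    """Return True if no two distinct states share the same successor under step."""
    seen_successors = set()
    for state in states:
        successor = step(state)
        if successor in seen_successors:
            return False  # two states merged: information was lost
        seen_successors.add(successor)
    return True


def controlled_not(state):
    """(a, b) -> (a, a XOR b): the first bit is kept, so the input can be recovered."""
    a, b = state
    return (a, a ^ b)


def and_in_place(state):
    """(a, b) -> (a, a AND b): overwrites b, so distinct inputs can collide."""
    a, b = state
    return (a, a & b)


two_bit_states = list(product((0, 1), repeat=2))
print("controlled-NOT reversible:", is_logically_reversible(controlled_not, two_bit_states))
print("in-place AND reversible:", is_logically_reversible(and_in_place, two_bit_states))
```

The in-place AND maps both (0, 0) and (0, 1) to (0, 0), so it fails the one-to-one test, while the controlled-NOT permutes the four states.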

Landauer's Principle

Landauer's principle is a physical principle pertaining to the lower theoretical limit of energy consumption of computation. It holds that "any logically irreversible manipulation of information, such as the erasure of a bit or the merging of two computation paths, must be accompanied by a corresponding entropy increase in non-information-bearing degrees of freedom of the information-processing apparatus or its environment". Another way of phrasing Landauer's principle is that if an observer loses information about a physical system, heat is generated and the observer loses the ability to extract useful work from that system. A so-called logically reversible computation, in which no information is erased, may in principle be carried out without releasing any heat. This has led to considerable interest in the study of reversible computing. Indeed, without reversible computing, increases in the number of computations per joule of energy dissipated must eventually come to a halt. ...
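The entry above does not state the bound numerically. It is usually written as k_B · T · ln 2 of heat per erased bit, where k_B is the Boltzmann constant and T is the temperature of the environment. The Python sketch below evaluates that expression; the function names and the choice of 300 K as "room temperature" are assumptions for illustration.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the 2019 SI definition


def landauer_limit_joules(temperature_kelvin: float, bits: float = 1.0) -> float:
    """Minimum heat dissipated when erasing the given number of bits at this temperature."""
    return bits * BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


def max_bit_erasures_per_joule(temperature_kelvin: float) -> float:
    """Upper limit on irreversible bit erasures that one joule can pay for."""
    return 1.0 / landauer_limit_joules(temperature_kelvin)


ROOM_TEMPERATURE_K = 300.0  # assumed ambient temperature
print(f"energy per erased bit: {landauer_limit_joules(ROOM_TEMPERATURE_K):.3e} J")
print(f"erasures per joule:    {max_bit_erasures_per_joule(ROOM_TEMPERATURE_K):.3e}")
```

The second figure is the ceiling that the closing sentence of the entry refers to: for irreversible logic, the number of bit erasures per joule cannot grow past it at a given temperature.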

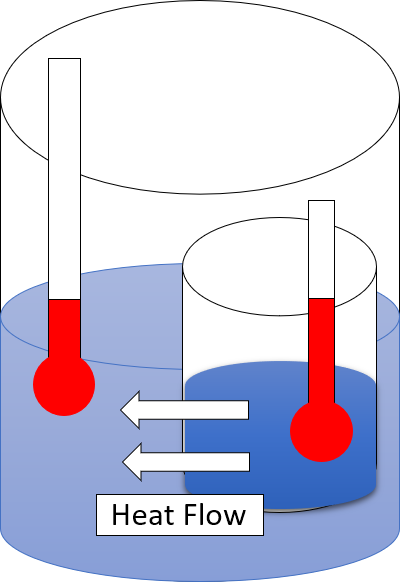

Second Law Of Thermodynamics

The second law of thermodynamics is a physical law based on universal experience concerning heat and energy interconversions. One simple statement of the law is that heat always moves from hotter objects to colder objects (or "downhill"), unless energy in some form is supplied to reverse the direction of heat flow. Another definition is: "Not all heat energy can be converted into work in a cyclic process." (Young, H. D.; Freedman, R. A. (2004). ''University Physics'', 11th edition. Pearson. p. 764.) The second law of thermodynamics in other versions establishes the concept of entropy as a physical property of a thermodynamic system. It can be used to predict whether processes are forbidden despite obeying the requirement of conservation of energy as expressed in the first law of thermodynamics, and it provides necessary criteria for spontaneous processes. The second law may be formulated by the observation that the entropy of isolated systems ...

IEEE Micro

''IEEE Micro'' is a peer-reviewed scientific journal published by the IEEE Computer Society covering small systems and semiconductor chips, including integrated circuit processes and practices, project management, development tools and infrastructure, as well as chip design and architecture, empirical evaluations of small system and IC technologies and techniques, and human and social aspects of system development.

Editors-in-chief: The following people have been editor-in-chief:
* 2019–present: Lizy Kurian John
* 2015–2018: Lieven Eeckhout
* 2011–2014: Erik R. Altman
* 2007–2010: David H. Albonesi
* 2003–2006: Pradip Bose
* 1999–2001: Ken Sakamura
* 1995–1998: Steve Diamond
* 1991–1994: Dante Del Corso
* 1987–1990: Joe Hootman
* 1985–1987: James J. Farrell III
* 1983–1984: Peter Rony and Tom Cain

CRC Press

CRC Press, LLC is an American publishing group that specializes in producing technical books. Many of their books relate to engineering, science and mathematics. Their scope also includes books on business, forensics and information technology. CRC Press is now a division of Taylor & Francis, itself a subsidiary of Informa. History: CRC Press was founded as the Chemical Rubber Company (CRC) in 1903 by brothers Arthur, Leo and Emanuel Friedman in Cleveland, Ohio, based on an earlier enterprise by Arthur, who had begun selling rubber laboratory aprons in 1900. The company gradually expanded to include sales of laboratory equipment to chemists. In 1913 the CRC offered a short (116-page) manual called the ''Rubber Handbook'' as an incentive for any purchase of a dozen aprons. Since then the ''Rubber Handbook'' has evolved into the CRC's flagship book, the ''CRC Handbook of Chemistry and Physics''. In 1964, Chemical Rubber decided to focus on its publishing ventures ...

Texas Instruments

Texas Instruments Incorporated (TI) is an American technology company headquartered in Dallas, Texas, that designs and manufactures semiconductors and various integrated circuits, which it sells to electronics designers and manufacturers globally. It is one of the top 10 semiconductor companies worldwide based on sales volume. The company's focus is on developing analog chips and embedded processors, which account for more than 80% of its revenue. TI also produces digital light processing technology and education technology products including calculators, microcontrollers, and multi-core processors. The company held 45,000 patents worldwide as of 2016. Texas Instruments emerged in 1951 after a reorganization of Geophysical Service Incorporated, a company founded in 1930 that manufactured equipment for use in the seismic industry, as well as defense electronics. TI produced the world's first commercial silicon transistor in 1954, and the same year designed and manufactured t ...