
Heteroscedasticity-consistent Standard Errors

The topic of heteroskedasticity-consistent (HC) standard errors arises in statistics and econometrics in the context of linear regression and time series analysis. These are also known as heteroskedasticity-robust standard errors (or simply robust standard errors) or Eicker–Huber–White standard errors (also Huber–White standard errors or White standard errors), in recognition of the contributions of Friedhelm Eicker, Peter J. Huber, and Halbert White. In regression and time-series modelling, basic forms of models make use of the assumption that the errors or disturbances u_i have the same variance across all observation points. When this is not the case, the errors are said to be heteroskedastic, or to have heteroskedasticity, and this behaviour will be reflected in the residuals \widehat{u}_i estimated from a fitted model. Heteroskedasticity-consistent standard errors are used to allow the fitting of a model that does contain heteroskedastic residuals. The first such appro ...
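As a rough illustration of the idea, the sketch below compares the classical standard error of an OLS slope with the simplest heteroskedasticity-consistent variant (often labelled HC0). It assumes a regression with an intercept and a single regressor, for which the HC0 sandwich formula reduces to a closed form; the function name `slope_standard_errors` and the toy data are hypothetical and not taken from the text above.

```python
import math


def slope_standard_errors(x, y):
    """Return (slope, classical SE, HC0 SE) for the model y = a + b*x + u."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Centred sum of squares of the regressor.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    # Classical variance: one common error variance for every observation.
    classical_var = sum(e ** 2 for e in residuals) / (n - 2) / sxx
    # HC0 variance: each squared residual stands in for its own error variance.
    hc0_var = sum((xi - x_bar) ** 2 * e ** 2 for xi, e in zip(x, residuals)) / sxx ** 2
    return slope, math.sqrt(classical_var), math.sqrt(hc0_var)


# Toy data (made up for illustration).
slope, se_classical, se_hc0 = slope_standard_errors([0, 1, 2, 3, 4], [0, 1, 2, 3, 9])
print(slope, se_classical, se_hc0)
```

The two standard errors coincide only approximately even under homoskedasticity, because HC0 applies no degrees-of-freedom correction; other variants (HC1 to HC3) rescale the squared residuals.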

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

BLUE

Blue is one of the three primary colours in the RYB colour model (traditional colour theory), as well as in the RGB (additive) colour model. It lies between violet and cyan on the spectrum of visible light. The term "blue" generally describes colours perceived by humans observing light with a dominant wavelength that is between approximately 450 and 495 nanometres. Most blues contain a slight mixture of other colours; azure contains some green, while ultramarine contains some violet. The clear daytime sky and the deep sea appear blue because of an optical effect known as Rayleigh scattering. An optical effect called the Tyndall effect explains blue eyes. Distant objects appear more blue because of another optical effect called aerial perspective. Blue has been an important colour in art and decoration since ancient t ...

Weighted Least Squares

Weighted least squares (WLS), also known as weighted linear regression, is a generalization of ordinary least squares and linear regression in which knowledge of the unequal variance of observations (heteroscedasticity) is incorporated into the regression. WLS is also a specialization of generalized least squares, when all the off-diagonal entries of the covariance matrix of the errors are null. Formulation: The fit of a model to a data point is measured by its residual, r_i, defined as the difference between a measured value of the dependent variable, y_i, and the value predicted by the model, f(x_i, \boldsymbol\beta): r_i(\boldsymbol\beta) = y_i - f(x_i, \boldsymbol\beta). If the errors are uncorrelated and have equal variance, then the function S(\boldsymbol\beta) = \sum_i r_i(\boldsymbol\beta)^2 is minimised at \hat{\boldsymbol\beta}, such that \frac{\partial S}{\partial \beta_j}(\hat{\boldsymbol\beta}) = 0. The Gauss–Markov theorem shows that, when this is so, \hat{\boldsymbol\beta} is a best linear unbiased es ...
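A minimal sketch of the weighted fit, assuming a straight-line model f(x, \boldsymbol\beta) = \beta_0 + \beta_1 x and known non-negative weights w_i (typically the reciprocals of the error variances). It minimises \sum_i w_i r_i(\boldsymbol\beta)^2 in closed form; the function name `weighted_line_fit` and the data are hypothetical.

```python
def weighted_line_fit(x, y, w):
    """Minimise sum_i w_i * (y_i - b0 - b1*x_i)**2 and return (b0, b1)."""
    w_sum = sum(w)
    # Weighted means of the regressor and the response.
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / w_sum
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / w_sum
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


# A zero weight removes the last (outlying) observation from the fit entirely.
print(weighted_line_fit([0, 1, 2, 3], [0, 1, 2, 10], [1, 1, 1, 0]))
```

With all weights equal, the result coincides with ordinary least squares.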

Linear Probability Model

In statistics, a linear probability model (LPM) is a special case of a binary regression model. Here the dependent variable for each observation takes values which are either 0 or 1. The probability of observing a 0 or 1 in any one case is treated as depending on one or more explanatory variables. For the "linear probability model", this relationship is a particularly simple one, and allows the model to be fitted by linear regression. The model assumes that, for a binary outcome (Bernoulli trial), Y, and its associated vector of explanatory variables, X, \Pr(Y=1 \mid X=x) = x'\beta. For this model, E[Y \mid X] = 0\cdot \Pr(Y=0 \mid X) + 1\cdot \Pr(Y=1 \mid X) = \Pr(Y=1 \mid X) = x'\beta, and hence the vector of parameters β can be estimated using least squares. This method of fitting would be inefficient, and can be improved by adopting an iterative scheme based on weighted least squares, in which the model from the previous iteration is used to supply estimates of the conditional variances, \o ...
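The iterative scheme can be sketched as follows, under several assumptions that are not in the text above: a single regressor plus intercept, a fixed number of iterations, the Bernoulli variance p(1 - p) as the conditional variance estimate, and clipping of fitted probabilities into (0, 1) so that the weights stay finite. The names `fit_line` and `lpm_iterated_wls` are hypothetical.

```python
def fit_line(x, y, w):
    """Weighted least-squares fit of y = b0 + b1*x; returns (b0, b1)."""
    w_sum = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / w_sum
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / w_sum
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def lpm_iterated_wls(x, y, iterations=5, eps=1e-3):
    """Fit a linear probability model, reweighting by estimated variances."""
    # Start from ordinary least squares (all weights equal).
    b0, b1 = fit_line(x, y, [1.0] * len(x))
    for _ in range(iterations):
        # Fitted probabilities, clipped (an assumption) to avoid zero variance.
        p = [min(max(b0 + b1 * xi, eps), 1.0 - eps) for xi in x]
        # For a Bernoulli outcome the conditional variance is p * (1 - p).
        weights = [1.0 / (pi * (1.0 - pi)) for pi in p]
        b0, b1 = fit_line(x, y, weights)
    return b0, b1


# Toy binary data: P(Y=1) is 0.25 when x = 0 and 0.75 when x = 1.
print(lpm_iterated_wls([0, 0, 0, 0, 1, 1, 1, 1], [0, 0, 0, 1, 0, 1, 1, 1]))
```

Clipping is only one way to handle fitted values outside the unit interval; dropping such observations is another common choice.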

Bootstrapping (statistics)

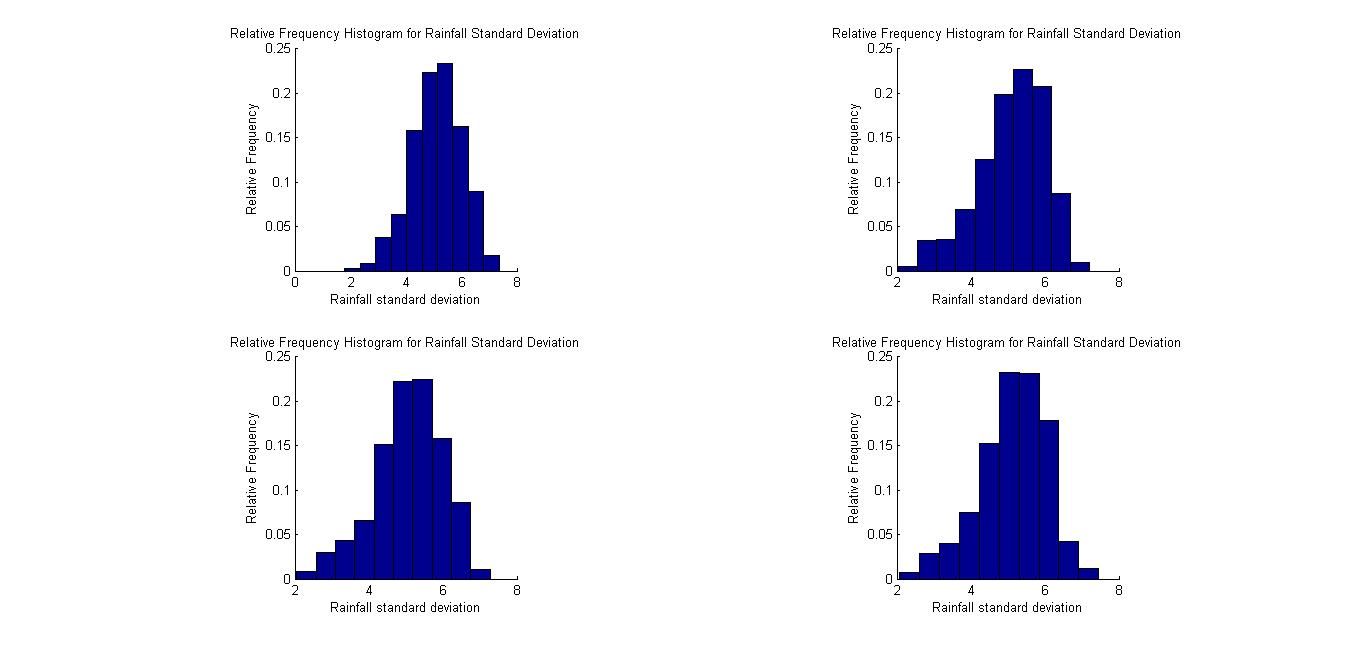

Bootstrapping is a procedure for estimating the distribution of an estimator by resampling (often with replacement) one's data or a model estimated from the data. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Bootstrapping estimates the properties of an estimand (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an approximating distributi ...
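A minimal sketch of the nonparametric bootstrap, assuming resampling with replacement from the observed data and using the spread of the replicated statistic as a standard-error estimate. The function name `bootstrap_standard_error`, the number of resamples, and the seed are illustrative choices, not prescribed by the text.

```python
import random
import statistics


def bootstrap_standard_error(data, statistic, n_resamples=2000, seed=0):
    """Estimate the standard error of `statistic` by resampling `data`."""
    rng = random.Random(seed)  # local generator so results are reproducible
    n = len(data)
    replicates = []
    for _ in range(n_resamples):
        # Draw n observations with replacement from the original sample.
        resample = [data[rng.randrange(n)] for _ in range(n)]
        replicates.append(statistic(resample))
    # The spread of the replicates approximates the sampling variability.
    return statistics.stdev(replicates)


sample = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
se_mean = bootstrap_standard_error(sample, statistics.mean)
print(f"bootstrap SE of the mean: {se_mean:.3f}")
```

Any function of a list can be passed as `statistic`, for example `statistics.median`, which has no simple closed-form standard error.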

Resampling (statistics)

In statistics, resampling is the creation of new samples based on one observed sample. Resampling methods are: (1) permutation tests (also re-randomization tests) for generating counterfactual samples, (2) bootstrapping, (3) cross-validation, and (4) the jackknife. Permutation tests: Permutation tests rely on resampling the original data assuming the null hypothesis. Based on the resampled data it can be concluded how likely the original data is to occur under the null hypothesis. Bootstrap: Bootstrapping is a statistical method for estimating the sampling distribution of an estimator by sampling with replacement from the original sample, most often with the purpose of deriving robust estimates of standard errors and confidence intervals of a population parameter like a mean, median, proportion, odds ratio, correlation coefficient or regression coefficient. It has been called the plug-in principle, Logan, J. David and Wolesensky, Willian R. Mathematical methods in biology. Pure and Ap ...
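To make the permutation-test idea concrete, here is a small sketch of an exact two-sample test. It assumes the null hypothesis that group labels are exchangeable and uses the absolute difference in group means as the test statistic; both choices, the function name `exact_permutation_p_value`, and the data are illustrative. Enumerating every split is only feasible for small samples.

```python
from itertools import combinations


def exact_permutation_p_value(group_a, group_b):
    """Two-sided exact permutation p-value for a difference in means."""
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    n_b = len(pooled) - n_a
    total = sum(pooled)

    def abs_mean_diff(sum_a):
        return abs(sum_a / n_a - (total - sum_a) / n_b)

    observed = abs_mean_diff(sum(group_a))
    extreme = 0
    n_splits = 0
    # Under the null, every relabelling of the pooled data is equally likely.
    for indices in combinations(range(len(pooled)), n_a):
        n_splits += 1
        # Small tolerance guards against floating-point ties.
        if abs_mean_diff(sum(pooled[i] for i in indices)) >= observed - 1e-12:
            extreme += 1
    return extreme / n_splits


print(exact_permutation_p_value([5, 6, 7], [1, 2, 3]))
```

For larger samples, a random subset of relabellings is usually drawn instead of the full enumeration.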

Statistical Hypothesis Testing

A statistical hypothesis test is a method of statistical inference used to decide whether the data provide sufficient evidence to reject a particular hypothesis. A statistical hypothesis test typically involves a calculation of a test statistic. Then a decision is made, either by comparing the test statistic to a critical value or equivalently by evaluating a p-value computed from the test statistic. Roughly 100 specialized statistical tests are in use and noteworthy. History: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth. Choice of null hypothesis: Paul Meehl has argued that the epistemological importance of the choice of null hypothesis has gone largely unacknowledged. When the null hypothesis is predicted by the ...

Journal Of Econometrics

The Journal of Econometrics is a monthly peer-reviewed academic journal covering econometrics. It was established in 1973. The editors-in-chief are Michael Jansson (University of California Berkeley) and Aureo de Paula (University College London). According to the Journal Citation Reports, the journal has a 2023 impact factor of 9.9. The journal covers work dealing with estimation and other methodological aspects of the application of statistical inference to economic data, as well as papers dealing with the application of econometric techniques to economics. Unusually among journals the title of Fellow of Journal of Econometrics is offered to anyone publishing four or more articles in the Journal. Maasoumi, E. (1992). Fel ...

Leverage (statistics)

In statistics and in particular in regression analysis, leverage is a measure of how far away the independent variable values of an observation are from those of the other observations. High-leverage points, if any, are outliers with respect to the independent variables. That is, high-leverage points have no neighboring points in \mathbb{R}^{p} space, where p is the number of independent variables in a regression model. This makes the fitted model likely to pass close to a high leverage observation. Hence high-leverage points have the potential to cause large changes in the parameter estimates when they are deleted, i.e., to be influential points. Although an influential point will typically have high leverage, a high leverage point is not necessarily an influential point. The leverage is typically defined as the diagonal elements of the hat matrix. Definition and interpretations: Consider the linear regression model y_i = \boldsymbol{x}_i^{\top}\boldsymbol{\beta} + \varepsilon_i, i=1,\, 2,\ldots,\, n. That is ...
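For intuition, the sketch below computes leverages in the special case of a regression with an intercept and one regressor, where the diagonal of the hat matrix has the closed form h_i = 1/n + (x_i - \bar{x})^2 / \sum_j (x_j - \bar{x})^2. The restriction to one regressor, the function name `leverages`, and the data are assumptions made for illustration.

```python
def leverages(x):
    """Hat-matrix diagonal for the model y = b0 + b1*x + error."""
    n = len(x)
    x_bar = sum(x) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    # Each leverage grows with the squared distance of x_i from the mean.
    return [1.0 / n + (xi - x_bar) ** 2 / sxx for xi in x]


# The last observation lies far from the others in x, so it has high leverage.
h = leverages([1, 2, 3, 4, 20])
print([round(hi, 3) for hi in h])
```

The leverages always sum to the number of fitted coefficients (two here), which gives a natural scale for judging whether a single value is large.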

Generalized Method Of Moments

In econometrics and statistics, the generalized method of moments (GMM) is a generic method for estimating parameters in statistical models. Usually it is applied in the context of semiparametric models, where the parameter of interest is finite-dimensional, whereas the full shape of the data's distribution function may not be known, and therefore maximum likelihood estimation is not applicable. The method requires that a certain number of moment conditions be specified for the model. These moment conditions are functions of the model parameters and the data, such that their expectation is zero at the parameters' true values. The GMM method then minimizes a certain norm of the sample averages of the moment conditions, and can therefore be thought of as a special case of minimum-distance estimation. The GMM estimators are known to be consistent, asymptotically normal, and most efficient in the class of all estimators that do not use any extra information aside from that conta ...

William Greene (economist)

William H. Greene (born January 16, 1951) is an American economist. He was formerly the Robert Stansky Professor of Economics and Statistics at Stern School of Business at New York University. Greene is currently a professor of economics at the University of South Florida. Biography: In 1972, Greene graduated with a Bachelor of Science in business administration from Ohio State University. He also earned a master's degree (1974) and a Ph.D. (1976) in econometrics from the University of Wisconsin–Madison. Before accepting his position at NYU, Greene worked as a consultant for the Civil Aeronautics Board in Washington, D.C. Greene is the author of a popular graduate-level econometrics textbook, Econometric Analysis, which has run to its 8th edition. He is the founding editor-in-chief ...

Journal Of Econometric Methods

A journal, from the Old French journal (meaning "daily"), may refer to:

* Bullet journal, a method of personal organization
* Diary, a record of personal secretive thoughts, used as an open book for personal therapy or to feel connected to oneself; a record of what happened over the course of a day or other period
* Daybook, also known as a general journal, a daily record of financial transactions
* Logbook, a record of events important to the operation of a vehicle, facility, or otherwise
* Transaction log, a chronological record of data processing
* Travel journal, a record of the traveller's experience during the course of their journey

In publishing, journal can refer to various periodicals or serials:

* Academic journal, an academic or scholarly periodical
  * Scientific journal, an academic journal focusing on science
  * Medical journal, an academic journal focusing on medicine
  * Law review, a professional journal focusing on legal interpretation
* Magazine, non-academic or scho ...