
Feedforward Neural Network

Feedforward refers to the recognition-inference architecture of neural networks. Artificial neural network architectures are based on inputs multiplied by weights to obtain outputs (inputs-to-output): feedforward. Recurrent neural networks, or neural networks with loops, allow information from later processing stages to feed back to earlier stages for sequence processing. However, at every stage of inference a feedforward multiplication remains the core, essential for backpropagation (Rumelhart, David E., Geoffrey E. Hinton, and R. J. Williams, "Learning Internal Representations by Error Propagation," in David E. Rumelhart, James L. McClelland, and the PDP Research Group (eds.), ''Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Volume 1: Foundations'', MIT Press, 1986) or backpropagation through time. Thus neural networks cannot contain feedback like negative feedback or positive feedback where the outputs feed back to the ''very same'' inputs and modify them, ...

Feed Forward Neural Net

Feed or The Feed may refer to:

Animal foodstuffs:
* Animal feed, food given to domestic animals in the course of animal husbandry
** Fodder, foodstuffs manufactured for animal consumption
** Forage, foodstuffs that animals gather themselves, such as by grazing
* Compound feed, foodstuffs that are blended from various raw materials and additives

Arts, entertainment, and media:

Film:
* ''Feed'' (1992 film), a film directed by Kevin Rafferty
* ''Feed'' (2005 film), a film directed by Brett Leonard
* ''Feed'' (2017 film), a film directed by Tommy Bertelsen
* ''Feed'' (2022 film), a Swedish horror film

Literature:
* ''Feed'' (Anderson novel), a 2002 novel by M. T. Anderson
* ''Feed'' (Grant novel), a 2010 novel by Seanan McGuire under the name "Mira Grant"

Music:
* "Feed Us", 2007 song by Serj Tankian from ''Elect the Dead''
* "Feed", 2022 song by Demi Lovato from ''Holy Fvck''

Online media:
* ''Feed Magazine'', one of the earliest e-zines that relied entirely on its original onli ...

Vanishing Gradient Problem

In machine learning, the vanishing gradient problem is the problem of greatly diverging gradient magnitudes between earlier and later layers encountered when training neural networks with backpropagation. In such methods, each weight is updated in proportion to the partial derivative of the loss function with respect to that weight. As the number of forward propagation steps in a network increases, for instance due to greater network depth, the gradients of earlier weights are calculated with increasingly many multiplications. These multiplications shrink the gradient magnitude. Consequently, the gradients of earlier weights will be exponentially smaller than the gradients of later weights. This difference in gradient magnitude might introduce instability in the training process, slow it, or halt it entirely. For instance, consider the hyperbolic tangent activation function. The gradients of this function are in the range (0, 1]. The product of repeated multiplication with such gradients decreases exponent ...
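
The shrinking effect described above can be illustrated with a minimal sketch. It assumes a chain of tanh units whose weights are all fixed at 1 and whose pre-activations are all 1.0, so the backpropagated factor is simply a product of tanh derivatives; the function names `tanh_grad` and `chained_gradient` are hypothetical and chosen only for this illustration.

```python
import math


def tanh_grad(x: float) -> float:
    """Derivative of tanh at x: 1 - tanh(x)^2, which lies in (0, 1]."""
    return 1.0 - math.tanh(x) ** 2


def chained_gradient(pre_activations: list[float]) -> float:
    """Multiply the tanh derivatives along a chain of layers.

    Assumption for illustration: every weight equals 1, so the
    backpropagated factor is just the product of the local derivatives.
    """
    product = 1.0
    for x in pre_activations:
        product *= tanh_grad(x)
    return product


# Same pre-activation (1.0) at every layer; only the depth changes.
shallow = chained_gradient([1.0] * 2)
deep = chained_gradient([1.0] * 20)
print(f"2 layers:  {shallow:.3e}")
print(f"20 layers: {deep:.3e}")
```

Because each factor is at most 1, the product can only shrink as more layers are added, which is why the 20-layer value is far smaller than the 2-layer value.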

Frank Rosenblatt

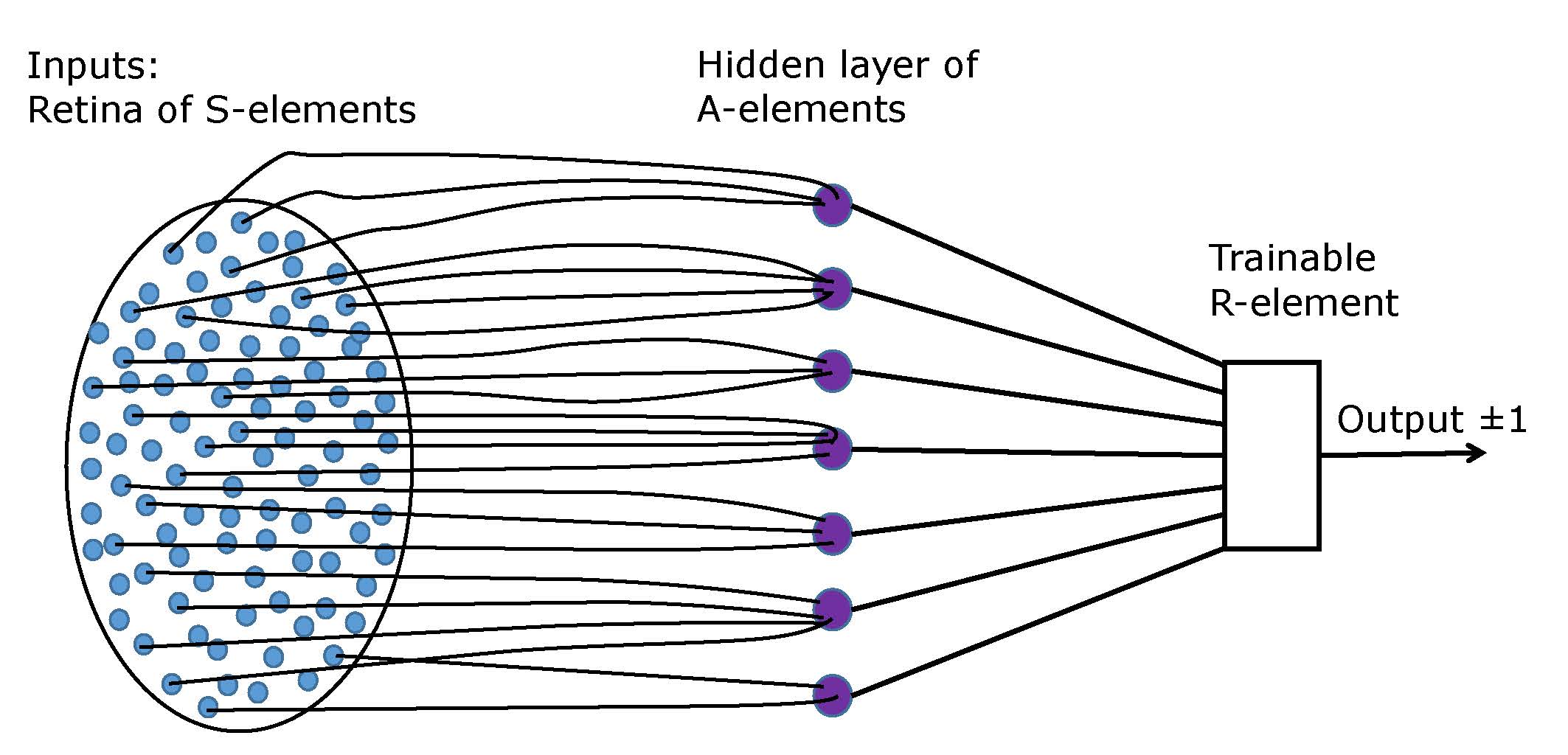

Frank Rosenblatt (July 11, 1928 – July 11, 1971) was an American psychologist notable in the field of artificial intelligence. He is sometimes called the father of deep learning for his pioneering work on artificial neural networks. Life and career: Rosenblatt was born into a Jewish family in New Rochelle, New York, as the son of Dr. Frank and Katherine Rosenblatt. After graduating from The Bronx High School of Science in 1946, he attended Cornell University, where he obtained his A.B. in 1950 and his Ph.D. in 1956. For his PhD thesis he built a custom-made computer, the Electronic Profile Analyzing Computer (EPAC), to perform multidimensional analysis for psychometrics. He used it between 1951 and 1953 to analyze psychometric data collected for his PhD thesis. The data were collected from a paid, 600-item survey of more than 200 Cornell undergraduates. The total computational cost was 2.5 million arithmetic operations, necessitating the use of ...

Artificial Neuron

An artificial neuron is a mathematical function conceived as a model of a biological neuron in a neural network. The artificial neuron is the elementary unit of an ''artificial neural network''. The design of the artificial neuron was inspired by biological neural circuitry. Its inputs are analogous to excitatory postsynaptic potentials and inhibitory postsynaptic potentials at neural dendrites. Its weights are analogous to synaptic weights, and its output is analogous to a neuron's action potential, which is transmitted along its axon. Usually, each input is separately weighted, and the sum is often added to a term known as a ''bias'' (loosely corresponding to the threshold potential), before being passed through a nonlinear function known as an activation function. Depending on the task, these functions could have a sigmoid shape (e.g. for binary classification), but they may also take the form of other nonlinear functions, piecewise linear functions, or step fun ...
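
The weighted sum, bias, and activation described above can be sketched in a few lines. This is a minimal illustration that assumes a logistic sigmoid as the activation function; the names `sigmoid` and `neuron` and the example values are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, one possible nonlinear activation function."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weight each input separately, add the bias, then apply the activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


# Illustrative values only: two inputs, two weights, one bias term.
output = neuron([1.0, 2.0], [0.5, -0.25], bias=0.0)
print(output)
```

Swapping `sigmoid` for a step function or a piecewise linear function changes only the last line of `neuron`; the weighted sum and bias stay the same.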

Walter Pitts

Walter Harry Pitts, Jr. (April 23, 1923 – May 14, 1969) was an American logician who worked in the field of computational neuroscience (Smalheiser, Neil R., "Walter Pitts", ''Perspectives in Biology and Medicine'', Volume 43, Number 2, Winter 2000, pp. 217–226, The Johns Hopkins University Press). He proposed landmark theoretical formulations of neural activity and generative processes that influenced diverse fields such as cognitive sciences and psychology, philosophy, neurosciences, computer science, artificial neural networks, cybernetics and artificial intelligence, together with what has come to be known as the generative sciences. He is best remembered for having written, along with Warren Sturgis McCulloch, a seminal paper in scientific history, titled ''A Logical Calculus of Ideas Immanent in Nervous Activity'' (1943). This paper proposed the first mathematical model of a neural network. The unit of this model, a simple formalized neuron, is still the standard of ...

Warren Sturgis McCulloch

Warren Sturgis McCulloch (November 16, 1898 – September 24, 1969) was an American neurophysiologist and cybernetician known for his work on the foundation for certain brain theories and his contribution to the cybernetics movement (Ken Aizawa (2004), "McCulloch, Warren Sturgis", in ''Dictionary of the Philosophy of Mind''; retrieved May 17, 2008). Along with Walter Pitts, McCulloch created computational models based on mathematical algorithms called threshold logic, which split the inquiry into two distinct approaches, one focused on biological processes in the brain and the other on the application of neural networks to artificial intelligence. Biography: Warren Sturgis McCulloch was born in Orange, New Jersey, in 1898. His brother was a chemical engineer and Warren was originally planning to join the Christian ministry. As a teenager he was associated with the theologians Henry Sloane Coffin, Harry Emerson Fosdick, Herman Karl Wilhelm Kumm and Julian F. Hecker ...

Linear Regression

In statistics, linear regression is a model that estimates the relationship between a scalar response (dependent variable) and one or more explanatory variables (regressor or independent variable). A model with exactly one explanatory variable is a ''simple linear regression''; a model with two or more explanatory variables is a multiple linear regression. This term is distinct from multivariate linear regression, which predicts multiple correlated dependent variables rather than a single dependent variable. In linear regression, the relationships are modeled using linear predictor functions whose unknown model parameters are estimated from the data. Most commonly, the conditional mean of the response given the values of the explanatory variables (or predictors) is assumed to be an affine function of those values; less commonly, the conditional median or some other quantile is used. Like all forms of regression analysis, ...
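
The affine predictor mentioned above can be written out as a short sketch. It assumes the parameters have already been estimated; the function name `predict` and the coefficient values are hypothetical and serve only to show the form intercept plus weighted sum of explanatory variables.

```python
def predict(x: list[float], coefficients: list[float], intercept: float) -> float:
    """Affine function of the explanatory variables:
    intercept + sum of coefficient_i * x_i.
    """
    if len(x) != len(coefficients):
        raise ValueError("x and coefficients must have the same length")
    return intercept + sum(b * xi for b, xi in zip(coefficients, x))


# Multiple linear regression with two explanatory variables.
# The parameter values below are made up for illustration.
y_hat = predict([2.0, 3.0], coefficients=[1.5, -0.5], intercept=4.0)
print(y_hat)
```

With a single explanatory variable the same function describes a simple linear regression, a line with one slope and one intercept.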

Mean Squared Error

In statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors, that is, the average squared difference between the estimated values and the true value. MSE is a risk function, corresponding to the expected value of the squared error loss. MSE is almost always strictly positive (and not zero), either because of randomness or because the estimator does not account for information that could produce a more accurate estimate. In machine learning, specifically empirical risk minimization, MSE may refer to the ''empirical'' risk (the average loss on an observed data set), as an estimate of the true MSE (the true risk: the average loss on the actual population distribution). The MSE is a measure of the quality of an estimator. As it is derived from the square of Euclidean distance, it is always a non-negative value that decreases as the erro ...
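
As a minimal sketch of the empirical version described above, the following computes the average squared difference over an observed data set. The function name `mean_squared_error` and the sample values are hypothetical.

```python
def mean_squared_error(y_true: list[float], y_pred: list[float]) -> float:
    """Average of the squared differences between observed and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Illustrative data: squared errors are 1, 0 and 4, so the mean is 5/3.
mse = mean_squared_error([1.0, 2.0, 3.0], [2.0, 2.0, 5.0])
print(mse)
```

The result is zero only when every prediction matches its observed value exactly; otherwise it is strictly positive.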

Least Squares

The method of least squares is a mathematical optimization technique that aims to determine the best-fit function by minimizing the sum of the squares of the differences between the observed values and the predicted values of the model. The method is widely used in areas such as regression analysis, curve fitting and data modeling. The least squares method can be categorized into linear and nonlinear forms, depending on the relationship between the model parameters and the observed data. The method was first proposed by Adrien-Marie Legendre in 1805 and further developed by Carl Friedrich Gauss. History (founding): The method of least squares grew out of the fields of astronomy and geodesy, as scientists and mathematicians sought to provide solutions to the challenges of navigating the Earth's oceans during the Age of Discovery. The accurate description of the behavior of celestial bodies was the key to enabling ships to sail in open seas, where sailors could no longer rely on la ...
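
For the linear case with a single explanatory variable, the minimizer of the sum of squared differences has a well-known closed form. The sketch below uses it to fit a straight line; the function names `fit_line` and `sum_squared_residuals` and the data points are hypothetical.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) of the line minimizing the sum of squared residuals."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0.0:
        raise ValueError("all x values are identical; the slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def sum_squared_residuals(xs, ys, slope, intercept) -> float:
    """Sum of squared differences between observed and predicted values."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


# Noisy observations scattered around y = 2x + 1 (made-up values).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.1, 2.9, 5.2, 6.8]
slope, intercept = fit_line(xs, ys)
print(slope, intercept, sum_squared_residuals(xs, ys, slope, intercept))
```

Any other choice of slope or intercept gives a sum of squared residuals at least as large as the fitted one, which is the sense in which the line is the best fit.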

Gauss

Johann Carl Friedrich Gauss (30 April 1777 – 23 February 1855) was a German mathematician, astronomer, geodesist, and physicist, who contributed to many fields in mathematics and science. He was director of the Göttingen Observatory and professor of astronomy from 1807 until his death in 1855. While studying at the University of Göttingen, he propounded several mathematical theorems. As an independent scholar, he wrote the masterpieces ''Disquisitiones Arithmeticae'' and ''Theoria motus corporum coelestium''. Gauss produced the second and third complete proofs of the fundamental theorem of algebra. In number theory, he made numerous contributions, such as the composition law, the law of quadratic reciprocity and the Fermat polygonal number theorem. He also contributed to the theory of binary and ternary quadratic forms, the construction of the heptadecagon, and the theory of hypergeometric ser ...

Adrien-Marie Legendre

Adrien-Marie Legendre (18 September 1752 – 9 January 1833) was a French mathematician who made numerous contributions to mathematics. Well-known and important concepts such as the Legendre polynomials and Legendre transformation are named after him. He is also known for his contributions to the method of least squares, and was the first to officially publish on it, though Carl Friedrich Gauss had discovered it before him. Life: Adrien-Marie Legendre was born in Paris on 18 September 1752 to a wealthy family. He received his education at the Collège Mazarin in Paris, and defended his thesis in physics and mathematics in 1770. He taught at the École Militaire in Paris from 1775 to 1780 and at the École Normale from 1795. At the same time, he was associated with the Bureau des Longitudes. In 1782, the Berlin Academy awarded Legendre a prize for his treatise on projectiles in resistant m ...

Learning Rate

In machine learning and statistics, the learning rate is a tuning parameter in an optimization algorithm that determines the step size at each iteration while moving toward a minimum of a loss function. Since it influences to what extent newly acquired information overrides old information, it metaphorically represents the speed at which a machine learning model "learns". In the adaptive control literature, the learning rate is commonly referred to as gain. In setting a learning rate, there is a trade-off between the rate of convergence and overshooting. While the descent direction is usually determined from the gradient of the loss function, the learning rate determines how big a step is taken in that direction. A learning rate that is too high will make the learning jump over minima, while one that is too low will either take too long to converge or get stuck in an undesirable local minimum. In order to achieve faster convergence, prevent oscillations and getting stuck in undesi ...
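
The trade-off between convergence speed and overshooting can be seen in a minimal sketch. It assumes plain gradient descent on the one-dimensional loss f(w) = w², whose gradient is 2w and whose minimum is at w = 0; the function name `gradient_descent` and the three learning-rate values are chosen only for illustration.

```python
def gradient_descent(learning_rate: float, start: float = 1.0, steps: int = 50) -> float:
    """Run plain gradient descent on f(w) = w**2 and return the final w.

    The gradient of f is 2*w, so each step is w <- w - learning_rate * 2 * w.
    """
    w = start
    for _ in range(steps):
        w -= learning_rate * 2.0 * w
    return w


moderate = gradient_descent(0.1)    # shrinks w by a factor of 0.8 per step
too_high = gradient_descent(1.1)    # overshoots: |w| grows by 1.2 per step
too_low = gradient_descent(0.001)   # barely moves in 50 steps

print(f"lr=0.1:   w = {moderate:.2e}")
print(f"lr=1.1:   w = {too_high:.2e}")
print(f"lr=0.001: w = {too_low:.3f}")
```

On this loss the step multiplies w by (1 - 2 × learning rate), so any rate above 1 makes the iterate jump past the minimum by more than it started with, while a very small rate leaves it close to the starting point after the same number of steps.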