Extremum Estimator

In statistics and econometrics, extremum estimators are a wide class of estimators for parametric models that are calculated by maximizing (or minimizing) a certain objective function, which depends on the data. The general theory of extremum estimators was developed by Amemiya (1985). Definition: An estimator $\hat\theta$ is called an extremum estimator if there is an ''objective function'' $\widehat{Q}_n$ such that $\hat\theta = \underset{\theta\in\Theta}{\operatorname{arg\,max}}\ \widehat{Q}_n(\theta)$, where $\Theta$ is the parameter space. Sometimes a slightly weaker definition is given: $\widehat{Q}_n(\hat\theta) \geq \max_{\theta\in\Theta}\,\widehat{Q}_n(\theta) - o_p(1)$, where $o_p(1)$ is a variable converging in probability to zero. With this modification $\hat\theta$ does not have to be the exact maximizer of the objective function, only sufficiently close to it. The theory of extremum estimators does not specify what the objective function should be. There are various types of objective ...
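The definition can be illustrated with a minimal sketch, under stated assumptions: the objective is taken to be the negative mean squared deviation, $\widehat{Q}_n(\theta) = -\tfrac{1}{n}\sum_i (x_i - \theta)^2$, the parameter space is the compact interval $[-10, 10]$, and the maximizer is located by golden-section search. The function names `golden_section_argmax` and `make_objective` are hypothetical, chosen only for this illustration. For this particular objective the extremum estimator coincides with the sample mean, which gives a simple check.

```python
import random
import statistics


def golden_section_argmax(objective, lower, upper, tol=1e-9):
    """Return an approximate maximizer of a unimodal objective on [lower, upper]."""
    inv_phi = (5 ** 0.5 - 1) / 2  # reciprocal of the golden ratio
    a, b = lower, upper
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if objective(c) > objective(d):
            b = d  # the maximizer lies in [a, d]
        else:
            a = c  # the maximizer lies in [c, b]
    return (a + b) / 2


def make_objective(data):
    """Build Q_n(theta) = -(1/n) * sum_i (x_i - theta)^2 for a fixed sample."""
    n = len(data)
    return lambda theta: -sum((x - theta) ** 2 for x in data) / n


# Simulated data (an assumption for the demo): 500 draws from N(2, 1).
rng = random.Random(0)
sample = [rng.gauss(2.0, 1.0) for _ in range(500)]

# Extremum estimate over the parameter space Theta = [-10, 10].
theta_hat = golden_section_argmax(make_objective(sample), -10.0, 10.0)
print(theta_hat, statistics.fmean(sample))
```

Golden-section search is used here only because this objective is concave in $\theta$; a multimodal objective would need a global search or several starting points.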

Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), ''The Oxford Dictionary of Statistical Terms'', Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ...

Convergence In Probability

In probability theory, there exist several different notions of convergence of random variables. The convergence of sequences of random variables to some limit random variable is an important concept in probability theory, and its applications to statistics and stochastic processes. The same concepts are known in more general mathematics as stochastic convergence and they formalize the idea that a sequence of essentially random or unpredictable events can sometimes be expected to settle down into a behavior that is essentially unchanging when items far enough into the sequence are studied. The different possible notions of convergence relate to how such a behavior can be characterized: two readily understood behaviors are that the sequence eventually takes a constant value, and that values in the sequence continue to change but can be described by an unchanging probability distribution. Background "Stochastic convergence" formalizes the idea that a sequence of essentially rand ...

Minimum Distance Estimation

Minimum-distance estimation (MDE) is a conceptual method for fitting a statistical model to data, usually the empirical distribution. Often-used estimators such as ordinary least squares can be thought of as special cases of minimum-distance estimation. While consistent and asymptotically normal, minimum-distance estimators are generally not statistically efficient when compared to maximum likelihood estimators, because they omit the Jacobian usually present in the likelihood function. This, however, substantially reduces the computational complexity of the optimization problem. Definition: Let $X_1,\ldots,X_n$ be an independent and identically distributed (iid) random sample from a population with distribution $F(x;\theta)$, where $\theta\in\Theta$ and $\Theta\subseteq\mathbb{R}^k$ ($k\geq 1$). Let $F_n(x)$ be the empirical distribution function based on the sample. Let $\hat\theta$ be an estimator for $\theta$. Then $F(x;\hat\theta)$ is an estimator for ...
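As a hedged illustration of the idea, the sketch below fits the rate of an exponential model, $F(x;\theta) = 1 - e^{-\theta x}$, by minimizing the Cramér-von Mises criterion between $F(x;\theta)$ and the empirical distribution function. The choice of model, the choice of distance, the grid of candidate rates, and the function names are all assumptions made for the example and are not taken from the text above.

```python
import math
import random


def cramer_von_mises(theta, sorted_sample):
    """Cramér-von Mises criterion between the empirical CDF and F(x; theta) = 1 - exp(-theta * x)."""
    n = len(sorted_sample)
    total = 1.0 / (12 * n)
    for i, x in enumerate(sorted_sample, start=1):
        model_cdf = 1.0 - math.exp(-theta * x)
        total += (model_cdf - (2 * i - 1) / (2 * n)) ** 2
    return total


def minimum_distance_estimate(sample, grid):
    """Return the grid point that minimizes the distance criterion."""
    ordered = sorted(sample)
    return min(grid, key=lambda theta: cramer_von_mises(theta, ordered))


# Simulated data (an assumption for the demo): 1000 exponential draws with rate 2.
rng = random.Random(1)
true_rate = 2.0
sample = [rng.expovariate(true_rate) for _ in range(1000)]

grid = [0.05 * k for k in range(1, 201)]  # candidate rates 0.05, 0.10, ..., 10.0
rate_hat = minimum_distance_estimate(sample, grid)
print(rate_hat)
```

A grid search keeps the example short and avoids assumptions about the shape of the criterion; in practice a numerical optimizer would usually replace it.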

Likelihood Function

The likelihood function (often simply called the likelihood) represents the probability of random variable realizations conditional on particular values of the statistical parameters. Thus, when evaluated on a given sample, the likelihood function indicates which parameter values are more ''likely'' than others, in the sense that they would have made the observed data more probable. Consequently, the likelihood is often written as $\mathcal{L}(\theta \mid X)$ instead of $P(X \mid \theta)$, to emphasize that it is to be understood as a function of the parameters $\theta$ instead of the random variable $X$. In maximum likelihood estimation, the arg max of the likelihood function serves as a point estimate for $\theta$, while local curvature (approximated by the likelihood's Hessian matrix) indicates the estimate's precision. Meanwhile in Bayesian statistics, parameter estimates are derived from the converse of the likelihood, the so-called posterior probability, which is calculated via Baye ...

Probability Density Function

In probability theory, a probability density function (PDF), or density of a continuous random variable, is a function whose value at any given sample (or point) in the sample space (the set of possible values taken by the random variable) can be interpreted as providing a ''relative likelihood'' that the value of the random variable would be close to that sample. In other words, probability density is the probability per unit length: while the ''absolute likelihood'' for a continuous random variable to take on any particular value is 0 (since there is an infinite set of possible values to begin with), the value of the PDF at two different samples can be used to infer, in any particular draw of the random variable, how much more likely it is that the random variable would be close to one sample compared to the other sample. In a more precise sense, the PDF is used to specify the probability of the random variable falling ''within a particular range of values'', as opposed ...

Maximum Likelihood Estimation

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when all observed outcomes are assumed to have Normal distributions with the same variance. From the perspective of Bayesian inference ...
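A case where the first-order conditions can be solved analytically is the normal model with unknown mean and variance. The sketch below, which assumes simulated data and uses hypothetical function names, computes the closed-form solution and compares the log-likelihood there with its value at a few perturbed parameter values.

```python
import math
import random


def normal_log_likelihood(mu, sigma2, data):
    """Log-likelihood of an iid N(mu, sigma2) sample."""
    n = len(data)
    squared_error = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2 * math.pi * sigma2) - squared_error / (2 * sigma2)


def normal_mle(data):
    """Closed-form solution of the first-order conditions."""
    n = len(data)
    mu_hat = sum(data) / n
    sigma2_hat = sum((x - mu_hat) ** 2 for x in data) / n  # divides by n, not n - 1
    return mu_hat, sigma2_hat


# Simulated data (an assumption for the demo): 400 draws from N(5, 2^2).
rng = random.Random(2)
data = [rng.gauss(5.0, 2.0) for _ in range(400)]

mu_hat, sigma2_hat = normal_mle(data)
best = normal_log_likelihood(mu_hat, sigma2_hat, data)

# Moving away from the estimate in any direction lowers the log-likelihood.
for mu, sigma2 in [(mu_hat + 0.1, sigma2_hat), (mu_hat, 1.2 * sigma2_hat)]:
    print(best - normal_log_likelihood(mu, sigma2, data))
```

Note that the variance estimate divides by $n$ rather than $n - 1$, so it is the maximum likelihood estimate and not the unbiased sample variance.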

Uniform Convergence In Probability

Uniform convergence in probability is a form of convergence in probability in statistical asymptotic theory and probability theory. It means that, under certain conditions, the ''empirical frequencies'' of all events in a certain event-family converge to their ''theoretical probabilities''. Uniform convergence in probability has applications to statistics as well as machine learning as part of statistical learning theory. The law of large numbers says that, for each ''single'' event A, its empirical frequency in a sequence of independent trials converges (with high probability) to its theoretical probability. In many applications, however, the need arises to judge simultaneously the probabilities of events of an entire class S from one and the same sample. Moreover, it is required that the relative frequency of the events converge to the probability uniformly over the entire class of events S. The Uniform Convergence Theorem gives a sufficient condition for this convergence to h ...
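A small simulation can make the "uniformly over the entire class" requirement concrete. The sketch below assumes Uniform(0, 1) data and takes the class S to be the half-lines $\{X \le t\}$ for $t \in [0, 1]$, so that the theoretical probability of each event is $t$; both choices, and the function name `sup_deviation`, are assumptions for the example. It computes the largest gap between empirical frequency and probability over the whole class, for a small and a large sample.

```python
import random


def sup_deviation(sample):
    """Largest gap |empirical frequency - probability| over all events {X <= t}.

    Assumes Uniform(0, 1) data, so the probability of {X <= t} is t.
    """
    ordered = sorted(sample)
    n = len(ordered)
    worst = 0.0
    for i, x in enumerate(ordered, start=1):
        # The supremum is attained at, or just before, a jump of the empirical CDF.
        worst = max(worst, i / n - x, x - (i - 1) / n)
    return worst


rng = random.Random(3)
small = sup_deviation([rng.random() for _ in range(20)])
large = sup_deviation([rng.random() for _ in range(10000)])
print(small, large)
```

The supremum is taken over infinitely many events, yet it can be computed exactly from the order statistics because the empirical frequency only changes at the observed points.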

Consistent Estimator

In statistics, a consistent estimator or asymptotically consistent estimator is an estimator (a rule for computing estimates of a parameter $\theta_0$) having the property that as the number of data points used increases indefinitely, the resulting sequence of estimates converges in probability to $\theta_0$. This means that the distributions of the estimates become more and more concentrated near the true value of the parameter being estimated, so that the probability of the estimator being arbitrarily close to $\theta_0$ converges to one. In practice one constructs an estimator as a function of an available sample of size ''n'', and then imagines being able to keep collecting data and expanding the sample ''ad infinitum''. In this way one would obtain a sequence of estimates indexed by ''n'', and consistency is a property of what occurs as the sample size “grows to infinity”. If the sequence of estimates can be mathematically shown to converge in probability to the true value '' ...
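Consistency can be illustrated by simulation. The sketch below is a minimal example under assumptions not found in the text: the estimator is the sample mean of standard normal data with true value 0, the tolerance is 0.1, and `miss_rate` is a hypothetical helper. It estimates, for several sample sizes ''n'', the probability that the estimate lies farther than the tolerance from the true value.

```python
import random


def miss_rate(n, theta0=0.0, eps=0.1, replications=200, seed=4):
    """Fraction of replications in which the sample mean misses theta0 by more than eps."""
    rng = random.Random(seed)
    misses = 0
    for _ in range(replications):
        estimate = sum(rng.gauss(theta0, 1.0) for _ in range(n)) / n
        if abs(estimate - theta0) > eps:
            misses += 1
    return misses / replications


# The miss rate should shrink toward zero as the sample size grows.
rates = {n: miss_rate(n) for n in (10, 100, 1000)}
print(rates)
```

A simulation of this kind only suggests consistency for the chosen tolerance and sample sizes; it does not replace a mathematical proof of convergence in probability.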

Continuous Function

In mathematics, a continuous function is a function such that a continuous variation (that is, a change without jumps) of the argument induces a continuous variation of the value of the function. This means that there are no abrupt changes in value, known as ''discontinuities''. More precisely, a function is continuous if arbitrarily small changes in its value can be assured by restricting to sufficiently small changes of its argument. A discontinuous function is a function that is not continuous. Up until the 19th century, mathematicians largely relied on intuitive notions of continuity, and considered only continuous functions. The epsilon–delta definition of a limit was introduced to formalize the definition of continuity. Continuity is one of the core concepts of calculus and mathematical analysis, where arguments and values of functions are real and complex numbers. The concept has been generalized to functions between metric spaces and between topological spaces. The latter are t ...

Compact Set

In mathematics, specifically general topology, compactness is a property that seeks to generalize the notion of a closed and bounded subset of Euclidean space by making precise the idea of a space having no "punctures" or "missing endpoints", that is, the space does not exclude any ''limiting values'' of points. For example, the open interval $(0,1)$ would not be compact because it excludes the limiting values of 0 and 1, whereas the closed interval $[0,1]$ would be compact. Similarly, the space of rational numbers $\mathbb{Q}$ is not compact, because it has infinitely many "punctures" corresponding to the irrational numbers, and the space of real numbers $\mathbb{R}$ is not compact either, because it excludes the two limiting values $+\infty$ and $-\infty$. However, the ''extended'' real number line ''would'' be compact, since it contains both infinities. There are many ways to make this heuristic notion precise. These ways usually agree in a metric space, but may not be equivalent in other topologic ...

Econometrics

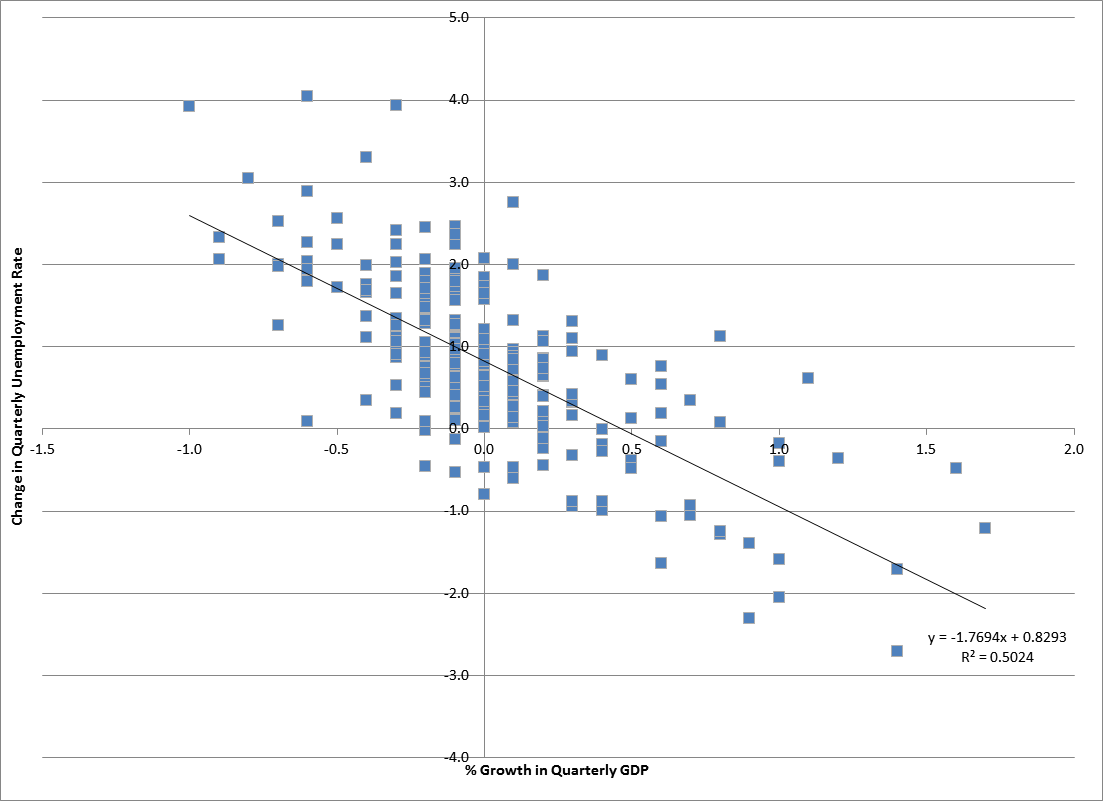

Econometrics is the application of statistical methods to economic data in order to give empirical content to economic relationships (M. Hashem Pesaran (1987), "Econometrics," ''The New Palgrave: A Dictionary of Economics'', v. 2, pp. 8–22; reprinted in J. Eatwell ''et al.'', eds. (1990), ''Econometrics: The New Palgrave'', pp. 1–34; 2008 revision by J. Geweke, J. Horowitz, and H. P. Pesaran). More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference". An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships". Jan Tinbergen is one of the two founding fathers of econometrics. The other, Ragnar Frisch, also coined the term in the sense in which it is used today. A basic tool for econometrics is the multiple linear regression model. ''Econometri ...