
Conjoint Analysis (in Marketing)

Conjoint analysis is a survey-based statistical technique used in market research that helps determine how people value the different attributes (features, functions, benefits) that make up an individual product or service. The objective of conjoint analysis is to determine the influence of a set of attributes on respondents' choices or decision making. In a conjoint experiment, survey respondents are shown a controlled set of potential products or services, each broken down by attribute. By analyzing how respondents choose among these products, their valuation of the attributes that make up the products or services can be determined. These implicit valuations (utilities or part-worths) can be used to build market models that estimate the market share, revenue and even profitability of new designs. Conjoint analysis originated in mathematical psychology and was developed by marketing professor Paul E. Green at the Wharton School of the University of Pennsylvania. Other prominent conjoint ...
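As a minimal sketch of how part-worths can be estimated, assume a ratings-based conjoint study (respondents rate each profile rather than choose among them) with a balanced full factorial design and an additive main-effects model. Under those assumptions the least-squares part-worth of each level equals the mean rating of the profiles showing that level minus the overall mean rating. The attributes, levels and ratings below are hypothetical, and the relative-importance measure (an attribute's part-worth range divided by the sum of all ranges) is one common convention, not the only one.

```python
from statistics import mean


def part_worths(profiles, ratings):
    """Main-effects part-worths for a balanced full factorial ratings design.

    profiles: list of dicts mapping attribute name -> level
    ratings:  list of numeric ratings, one per profile
    Returns {attribute: {level: part-worth}}; each attribute's part-worths sum to zero.
    """
    grand_mean = mean(ratings)
    worths = {}
    for attribute in profiles[0]:
        worths[attribute] = {}
        for level in sorted({p[attribute] for p in profiles}):
            # Mean rating of profiles showing this level, centred on the grand mean.
            level_mean = mean(r for p, r in zip(profiles, ratings) if p[attribute] == level)
            worths[attribute][level] = level_mean - grand_mean
    return worths


def relative_importance(worths):
    """Share of the total part-worth range attributable to each attribute."""
    ranges = {a: max(w.values()) - min(w.values()) for a, w in worths.items()}
    total = sum(ranges.values())
    return {a: r / total for a, r in ranges.items()}


# Hypothetical 2 x 2 full factorial: brand (A/B) x price (high/low).
profiles = [
    {"brand": "A", "price": "high"},
    {"brand": "A", "price": "low"},
    {"brand": "B", "price": "high"},
    {"brand": "B", "price": "low"},
]
ratings = [5, 7, 6, 8]

worths = part_worths(profiles, ratings)
print(worths)
print(relative_importance(worths))
```

With these invented ratings, moving from the high to the low price shifts the rating by twice as much as switching brand, so price receives the larger importance share.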

Simalto

SIMALTO (SImultaneous Multi-Attribute Trade Off) is a survey-based statistical technique used in market research that helps determine how people prioritise and value alternative product and/or service options for the attributes that make up individual products or services. One specific application of the method is in political science: it can be applied to predict which of the alternative combinations of optional service benefits provided by a local authority, state or national government in its annual budget would meet with the 'maximum' approval of a target population.

Survey design: SIMALTO is based on creating a matrix of the options that can combine to form the product or service. Each row of the matrix represents an attribute, and the columns are the various options (alternative features, levels of service, benefits) for that row's attribute. Each option is associated with 'cost points', which indicate how much more or less that opt ...
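The cost-point matrix lends itself to a short sketch. The one below assumes, as a simplification, that each row lists its options with an integer cost in points, that a respondent picks exactly one option per row, and that a combination is feasible when its total cost fits within a fixed points budget. The attribute names, options, costs and budget are all hypothetical.

```python
from itertools import product


def affordable_combinations(matrix, budget):
    """Enumerate one-option-per-attribute combinations whose total cost fits the budget.

    matrix: dict mapping attribute -> list of (option, cost_points) pairs
    budget: maximum total cost points a combination may use
    """
    attributes = list(matrix)
    results = []
    # product() yields every way of choosing one (option, cost) pair from each row.
    for choice in product(*(matrix[a] for a in attributes)):
        total = sum(cost for _, cost in choice)
        if total <= budget:
            selection = {a: option for a, (option, _) in zip(attributes, choice)}
            results.append((selection, total))
    return results


# Hypothetical local-authority service matrix; costs are extra points over the baseline.
matrix = {
    "waste collection": [("monthly", 0), ("fortnightly", 2), ("weekly", 4)],
    "library opening": [("20 h/week", 0), ("40 h/week", 3)],
}

for selection, cost in affordable_combinations(matrix, budget=5):
    print(cost, selection)
```

Exhaustive enumeration is fine for a small matrix like this one; the number of combinations grows multiplicatively with the number of rows and options.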

Configurator

Configurators, also known as choice boards, design systems, toolkits, or co-design platforms, are responsible for guiding the user through the configuration process. Different variations are represented, visualized, assessed and priced, which starts a learning-by-doing process for the user. While the term “configurator” or “configuration system” is cited rather often in the literature, it is mostly used in a technical sense, referring to a software tool. The success of such an interaction system is, however, defined not only by its technological capabilities but also by its integration into the whole sales environment, its ability to allow for learning by doing, to provide experience and process satisfaction, and its integration into the brand concept.

Advantages: Configurators can be found in various forms and in different industries. They are employed in B2B (business-to-business) as well as B2C (business-to-consumer) markets and are operated either by trained sta ...
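To illustrate the "assessed and priced" step, here is a minimal sketch of a configurator's back end, assuming a product with a base price, one required choice per feature, per-option surcharges, and a list of incompatible option pairs. The product, prices and compatibility rule are hypothetical; real configurators typically use much richer constraint engines.

```python
BASE_PRICE = 500.0  # hypothetical base price of the configurable product

# Hypothetical option catalogue: feature -> {option: surcharge}
OPTIONS = {
    "frame": {"aluminium": 0.0, "carbon": 400.0},
    "gears": {"8-speed": 0.0, "11-speed": 150.0},
    "colour": {"black": 0.0, "red": 25.0},
}

# Hypothetical compatibility rule: pairs of (feature, option) that cannot be combined.
INCOMPATIBLE = {(("frame", "aluminium"), ("gears", "11-speed"))}


def price_configuration(config):
    """Validate a configuration and return its total price.

    config: dict mapping every feature in OPTIONS to one chosen option.
    Raises ValueError for missing features, unknown options or incompatible pairs.
    """
    if set(config) != set(OPTIONS):
        raise ValueError("every feature must have exactly one option")
    for feature, option in config.items():
        if option not in OPTIONS[feature]:
            raise ValueError(f"unknown option {option!r} for {feature!r}")
    chosen = set(config.items())
    for first, second in INCOMPATIBLE:
        if first in chosen and second in chosen:
            raise ValueError(f"{first} is incompatible with {second}")
    return BASE_PRICE + sum(OPTIONS[f][o] for f, o in config.items())


print(price_configuration({"frame": "carbon", "gears": "11-speed", "colour": "red"}))
```

Rejecting an invalid combination immediately, rather than at checkout, is what lets the user explore variations and see their price consequences as they go.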

Bayesian Probability

Bayesian probability is an interpretation of the concept of probability in which, instead of the frequency or propensity of some phenomenon, probability is interpreted as a reasonable expectation representing a state of knowledge, or as the quantification of a personal belief. The Bayesian interpretation of probability can be seen as an extension of propositional logic that enables reasoning with hypotheses, that is, with propositions whose truth or falsity is unknown. In the Bayesian view, a probability is assigned to a hypothesis, whereas under frequentist inference a hypothesis is typically tested without being assigned a probability. Bayesian probability belongs to the category of evidential probabilities: to evaluate the probability of a hypothesis, the Bayesian probabilist specifies a prior probability, which is then updated to a posterior probability in the light of new, relevant data (evidence). The Bayesian interpretation provides a standard set of procedur ...
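The prior-to-posterior update described above is Bayes' theorem. A minimal sketch for a finite set of hypotheses: each posterior is proportional to prior times likelihood, normalised so the posteriors sum to one. The coin example (a fair coin versus one assumed to land heads 80% of the time, with equal priors) is hypothetical.

```python
def bayes_update(priors, likelihoods):
    """Posterior probabilities over a finite set of hypotheses.

    priors:      dict hypothesis -> prior probability (should sum to 1)
    likelihoods: dict hypothesis -> probability of the observed evidence under it
    """
    unnormalised = {h: priors[h] * likelihoods[h] for h in priors}
    evidence = sum(unnormalised.values())  # P(evidence), the normalising constant
    if evidence == 0:
        raise ValueError("evidence has zero probability under every hypothesis")
    return {h: p / evidence for h, p in unnormalised.items()}


priors = {"fair": 0.5, "biased": 0.5}
p_heads = {"fair": 0.5, "biased": 0.8}

# Observe three heads in a row, updating after each one:
# each posterior becomes the prior for the next observation.
belief = priors
for _ in range(3):
    belief = bayes_update(belief, p_heads)
print(belief)
```

Updating one observation at a time gives the same result as a single update with the joint likelihood of all three heads, which is why the posterior can serve as the next prior.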

Market Segmentation

In marketing, market segmentation or customer segmentation is the process of dividing a consumer or business market into meaningful sub-groups of current or potential customers (or consumers) known as segments. Its purpose is to identify profitable and growing segments that a company can target with distinct marketing strategies. In dividing or segmenting markets, researchers typically look for common characteristics such as shared needs, common interests, similar lifestyles, or even similar demographic profiles. The overall aim of segmentation is to identify high-yield segments (that is, those segments that are likely to be the most profitable or that have growth potential) so that these can be selected for special attention (i.e. become target markets). Many different ways to segment a market have been identified. Business-to-business (B2B) sellers might segment the market into different types of businesses or countries, while business-to-consumer (B2C) seller ...
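A minimal sketch of segmenting by shared characteristics, assuming customer records that already carry the characteristics to segment on (here a hypothetical age band and primary interest) and using total spend as a crude stand-in for "high-yield"; growth potential is not modelled. The records are invented.

```python
from collections import defaultdict

# Hypothetical customer records: shared characteristics plus annual spend.
customers = [
    {"age_band": "18-34", "interest": "outdoor", "spend": 420.0},
    {"age_band": "18-34", "interest": "outdoor", "spend": 380.0},
    {"age_band": "35-54", "interest": "home", "spend": 900.0},
    {"age_band": "35-54", "interest": "home", "spend": 650.0},
    {"age_band": "18-34", "interest": "home", "spend": 120.0},
]


def segment(records, keys):
    """Group records into segments by their values on the given characteristics."""
    segments = defaultdict(list)
    for record in records:
        segments[tuple(record[k] for k in keys)].append(record)
    return dict(segments)


def rank_by_revenue(segments):
    """Order segments by total spend, highest first (a crude 'yield' proxy)."""
    totals = {seg: sum(r["spend"] for r in members) for seg, members in segments.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


segments = segment(customers, ["age_band", "interest"])
for seg, revenue in rank_by_revenue(segments):
    print(seg, revenue)
```

Changing the `keys` argument changes the segmentation basis (for example demographic only versus interest only), which is the main design decision a segmentation study has to make.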

Revealed Preference

Revealed preference theory, pioneered by economist Paul Anthony Samuelson in 1938, is a method of analyzing choices made by individuals, mostly used for comparing the influence of policies on consumer behavior. Revealed preference models assume that consumers' preferences can be revealed by their purchasing habits. Revealed preference theory arose because existing theories of consumer demand were based on a diminishing marginal rate of substitution (MRS), which in turn relied on the assumption that consumers make consumption decisions to maximise their utility. While utility maximisation was not a controversial assumption, the underlying utility functions could not be measured with great certainty. Revealed preference theory was a means to reconcile demand theory by defining utility functions through observed behaviour. Revealed preference is therefore a way to infer preferences between available choices. It contrasts with attempts to directly measure preferences or u ...
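To make "inferring preferences from choices" concrete, the sketch below checks observed purchases against the weak axiom of revealed preference (WARP), the consistency condition associated with Samuelson's theory. If bundle x_t was bought at prices p_t while bundle x_s was also affordable (p_t·x_s ≤ p_t·x_t), then x_t is directly revealed preferred to x_s, and WARP forbids x_s from also being revealed preferred to x_t. The price and quantity data are hypothetical.

```python
def dot(a, b):
    """Inner product of two equal-length vectors (cost of a bundle at given prices)."""
    return sum(x * y for x, y in zip(a, b))


def directly_revealed_preferred(obs, t, s):
    """True if the bundle chosen in observation t is directly revealed preferred to bundle s."""
    prices_t, bundle_t = obs[t]
    _, bundle_s = obs[s]
    # Bundle s was affordable when bundle t was chosen, and the two bundles differ.
    return bundle_t != bundle_s and dot(prices_t, bundle_s) <= dot(prices_t, bundle_t)


def warp_violations(obs):
    """Return index pairs (t, s) where each chosen bundle is revealed preferred to the other."""
    return [
        (t, s)
        for t in range(len(obs))
        for s in range(t + 1, len(obs))
        if directly_revealed_preferred(obs, t, s) and directly_revealed_preferred(obs, s, t)
    ]


# Each observation: (prices, chosen bundle) for two goods; values are hypothetical.
consistent = [((1, 1), (2, 0)), ((2, 1), (0, 2))]
inconsistent = [((1, 1), (2, 0)), ((1, 2), (0, 1))]

print(warp_violations(consistent))
print(warp_violations(inconsistent))
```

In the inconsistent data, each bundle was affordable when the other was bought, so no single stable preference ordering can explain both choices.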

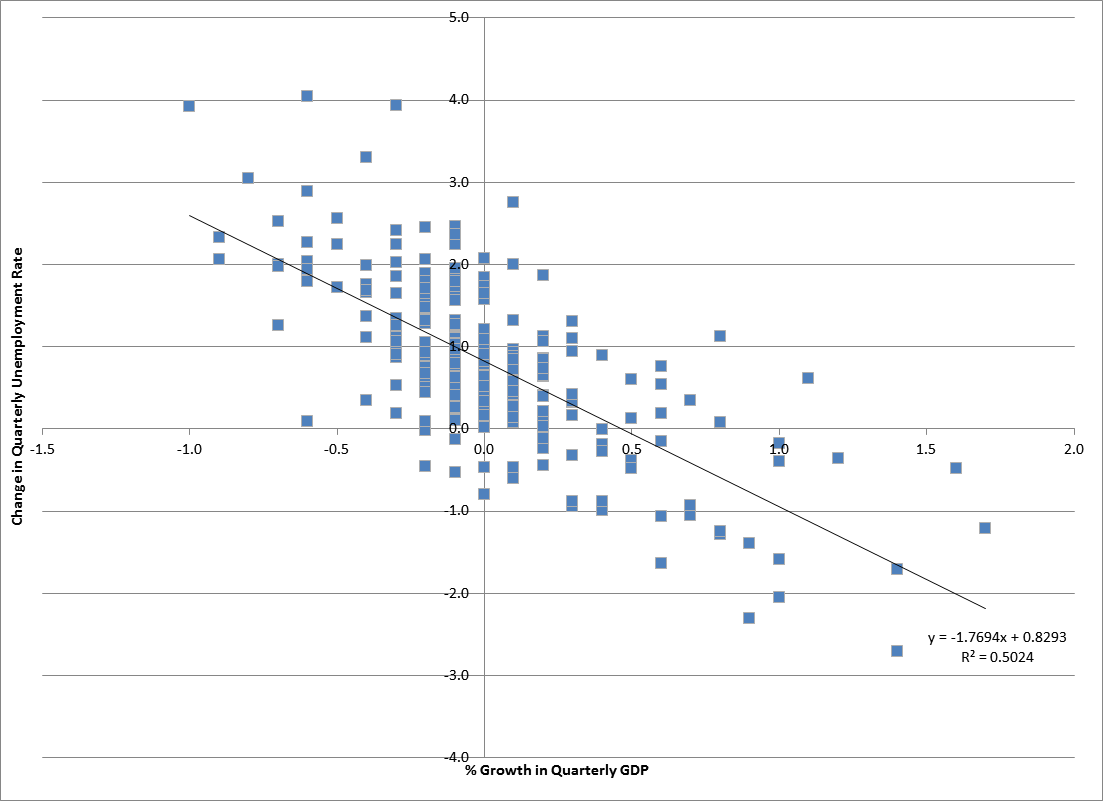

Econometric Modeling

Econometrics is the application of statistical methods to economic data in order to give empirical content to economic relationships. [M. Hashem Pesaran (1987), "Econometrics", The New Palgrave: A Dictionary of Economics, v. 2, p. 8 (pp. 8–22); reprinted in J. Eatwell et al., eds. (1990), Econometrics: The New Palgrave, p. 1 (pp. 1–34); abstract in The New Palgrave Dictionary of Economics, 2008 revision by J. Geweke, J. Horowitz, and H. P. Pesaran.] More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference." An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships." Jan Tinbergen is one of the two founding fathers of econometrics. The other, Ragnar Frisch, also coined the term in the sense in which it is used toda ...
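As a minimal illustration of extracting a simple relationship from data, the sketch below fits a straight line y ≈ a + b·x by ordinary least squares, the standard starting estimator in introductory econometrics, using the closed-form slope b = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and intercept a = ȳ − b·x̄. The data points are hypothetical and chosen to lie exactly on y = 1 + 2x so the result is easy to check.

```python
from statistics import mean


def ols_fit(xs, ys):
    """Ordinary least squares fit of ys = intercept + slope * xs (one regressor)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))  # co-variation of x and y
    sxx = sum((x - x_bar) ** 2 for x in xs)                       # variation of x
    if sxx == 0:
        raise ValueError("regressor has no variation")
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical observations of a regressor x and an outcome y.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
print(ols_fit(xs, ys))
```

Real econometric work adds standard errors, multiple regressors and diagnostics; this sketch only shows the point estimate.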

Discrete Choice Analysis

In economics, discrete choice models, or qualitative choice models, describe, explain, and predict choices between two or more discrete alternatives, such as entering or not entering the labor market, or choosing between modes of transport. Such choices contrast with standard consumption models in which the quantity of each good consumed is assumed to be a continuous variable. In the continuous case, calculus methods (e.g. first-order conditions) can be used to determine the optimum amount chosen, and demand can be modeled empirically using regression analysis. On the other hand, discrete choice analysis examines situations in which the potential outcomes are discrete, such that the optimum is not characterized by standard first-order conditions. Thus, instead of examining "how much" as in problems with continuous choice variables, discrete choice analysis examines "which one". However, discrete choice analysis can also be used to examine the chosen quantity when only a few distinc ...
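Discrete choice models assign a probability to each alternative rather than solving a first-order condition. A widely used specification, named here as an illustrative choice rather than the only model, is the multinomial logit, in which alternative i with systematic utility V_i is chosen with probability exp(V_i) / Σ_j exp(V_j). The transport-mode utilities below are hypothetical.

```python
import math


def logit_probabilities(utilities):
    """Multinomial logit choice probabilities from systematic utilities.

    utilities: dict alternative -> systematic utility V_i
    """
    # Subtracting the maximum utility leaves the probabilities unchanged
    # but avoids overflow in exp() for large utilities.
    v_max = max(utilities.values())
    weights = {alt: math.exp(v - v_max) for alt, v in utilities.items()}
    total = sum(weights.values())
    return {alt: w / total for alt, w in weights.items()}


# Hypothetical utilities for choosing a mode of transport.
modes = {"car": 1.2, "bus": 0.3, "train": 0.8}
for mode, p in logit_probabilities(modes).items():
    print(f"{mode}: {p:.3f}")
```

Only utility differences matter in this model: adding the same constant to every V_i leaves the probabilities unchanged, which is what makes the max-subtraction trick safe.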

Dummy Variable (statistics)

In regression analysis, a dummy variable (also known as an indicator variable or simply a dummy) is one that takes a binary value (0 or 1) to indicate the absence or presence of some categorical effect that may be expected to shift the outcome. For example, if we were studying the relationship between biological sex and income, we could use a dummy variable to represent the sex of each individual in the study: the variable could take a value of 1 for males and 0 for females (or vice versa). In machine learning, encoding a categorical variable as a set of such binary columns is known as one-hot encoding. Dummy variables are also commonly used in regression analysis to represent categorical variables that have more than two levels, such as education level or occupation. In this case, multiple dummy variables are created, one for each level of the variable, and only one of them takes the value 1 for any given observation; in a model with an intercept, one of these dummies is usually dropped so that its level serves as the reference category, which avoids perfect collinearity. Dummy variables are useful because they allow us to include categorical variables in our analysis, which ...
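A minimal sketch of dummy coding in plain Python (no particular library's API is implied; the function and its `drop_first` parameter are our own). Each non-reference level of a categorical variable gets its own 0/1 column, and the reference level, assumed here to be the first level in sorted order, is represented by all zeros. Passing `drop_first=False` keeps one column per level, which matches one-hot encoding. The education data are hypothetical.

```python
def dummy_code(values, drop_first=True):
    """Encode a categorical variable as 0/1 dummy columns.

    values:     list of category labels, one per observation
    drop_first: if True, omit the first level (sorted order) as the reference category
    Returns (column_names, rows) where each row is a list of 0/1 integers.
    """
    levels = sorted(set(values))
    kept = levels[1:] if drop_first else levels
    rows = [[1 if v == level else 0 for level in kept] for v in values]
    return kept, rows


education = ["secondary", "degree", "primary", "degree"]
columns, rows = dummy_code(education)
print(columns)
for label, row in zip(education, rows):
    print(label, row)
```

With the reference level dropped, a regression coefficient on each dummy is read as the shift in the outcome relative to the reference category.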

Fractional Factorial Design

In statistics, a fractional factorial design is a way to conduct experiments with fewer experimental runs than a full factorial design. Instead of testing every combination of factors, it tests only a carefully selected portion. This "fraction" of the full design is chosen to reveal the most important information about the system being studied (the sparsity-of-effects principle), while significantly reducing the number of runs required. It is based on the idea that many tests in a full factorial design can be redundant. However, this reduction in runs comes at the cost of potentially more complex analysis, as some effects become aliased (confounded) with one another, making it impossible to isolate their individual influences. Therefore, the combinations to test in a fractional factorial design must be chosen carefully.

History: Fractional factorial design was introduced by British statistician David John Finney in 1945, extending previous work by Ronald Fisher on the full factorial ...
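A small worked example of a fraction: the sketch below builds a half-fraction of a 2^3 design, written 2^(3−1), for three two-level factors A, B and C coded −1/+1. It runs the full factorial in A and B and sets C by the generator C = AB, so only 4 of the 8 runs are needed. The price of the saving is aliasing: in every run the A column equals the product B·C (and likewise B = AC, C = AB), so each main effect is confounded with a two-factor interaction. The factor names are placeholders.

```python
from itertools import product


def half_fraction_2_3():
    """2^(3-1) fractional factorial: full factorial in A and B, with C = A*B."""
    runs = []
    for a, b in product((-1, 1), repeat=2):
        runs.append({"A": a, "B": b, "C": a * b})  # generator C = AB
    return runs


runs = half_fraction_2_3()
for run in runs:
    print(run)

# Aliasing: the A column is identical to the B*C interaction column,
# so their effects cannot be separated from these runs alone.
print(all(r["A"] == r["B"] * r["C"] for r in runs))
```

The sparsity-of-effects principle is what justifies this trade: if two-factor interactions are assumed negligible, the estimated A, B and C effects can be read as main effects.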