
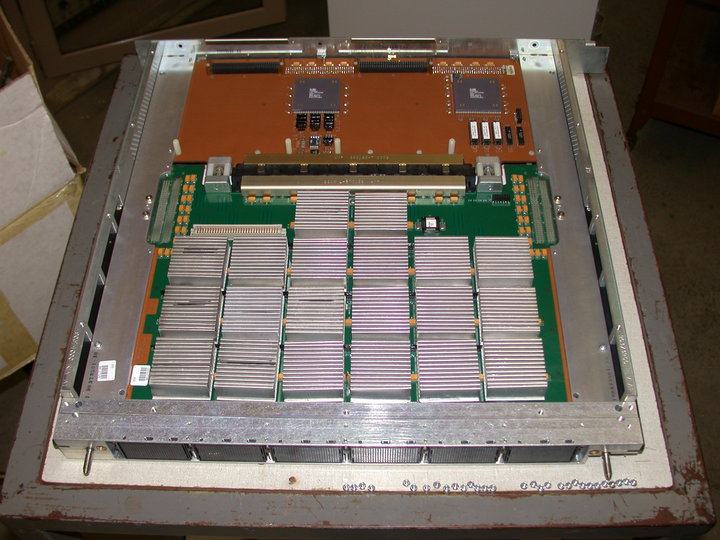

CDC STAR-100

The CDC STAR-100 is a vector supercomputer that was designed, manufactured, and marketed by Control Data Corporation (CDC). It was one of the first machines to use a vector processor to improve performance on appropriate scientific applications. It was also the first supercomputer to use integrated circuits and the first to be equipped with one million words of computer memory. STAR is a blend of STrings (of binary digits) and ARrays. The 100 alludes to the nominal peak processing speed of 100 million floating point operations per second (MFLOPS); the earlier CDC 7600 provided peak performance of 36 MFLOPS but more typically ran at around 10 MFLOPS. The design was part of a bid made to Lawrence Livermore National Laboratory (LLNL) in the mid-1960s. Livermore was looking for a partner who would build a much faster machine on their own budget and then lease the resulting design to the lab. It was announced publicly in the early 1970s, and on 17 August 1971, CDC ...

Control Data Corporation

Control Data Corporation (CDC) was a mainframe and supercomputer company that in the 1960s was one of the nine major U.S. computer companies, a group that also included IBM, the Burroughs Corporation, the Digital Equipment Corporation (DEC), the NCR Corporation (NCR), General Electric, Honeywell, RCA, and UNIVAC. For most of the 1960s, the strength of CDC was the work of the electrical engineer Seymour Cray, who developed a series of computers then considered the fastest in the world; in the 1970s, Cray left the Control Data Corporation and founded Cray Research (CRI) to design and make supercomputers. In 1988, after much financial loss, the Control Data Corporation began withdrawing from making computers and sold the affiliated companies of CDC; in 1992, CDC established Control Data Systems, Inc. The remaining affiliate companies of CDC currently do business as the software company Dayforce. Background: World War II – 1957 During World War II the Un ...

CDC 7600

The CDC 7600 was designed by Seymour Cray to be the successor to the CDC 6600, extending Control Data's dominance of the supercomputer field into the 1970s. The 7600 ran at 36.4 MHz (27.5 ns clock cycle) and had a 65 Kword primary memory (with a 60-bit word size) using magnetic core and variable-size (up to 512 Kword) secondary memory (depending on site). It was generally about ten times as fast as the CDC 6600 and could deliver about 10 MFLOPS on hand-compiled code, with a peak of 36 MFLOPS (Gordon Bell, A Seymour Cray Perspective). In addition, in benchmark tests in early 1970 it was shown to be slightly faster than its IBM rival, the IBM System/360 Model 195. When the system was released in 1967, it sold for around $5 million in base configurations, and considerably more as options and features were added. Among the 7600's notable state-of-the-art contributions, beyond extensive pipelining, was the phys ...
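
The clock and throughput figures quoted above can be cross-checked with a little arithmetic. The sketch below is only an illustration: the function names are hypothetical, and the "operations per cycle" ratio is derived from the quoted numbers rather than stated in the source.

```python
def clock_mhz(cycle_ns):
    """Convert a clock period in nanoseconds to a frequency in MHz."""
    return 1_000.0 / cycle_ns


def flops_per_cycle(mflops, cycle_ns):
    """Average floating point operations completed per clock cycle."""
    return mflops / clock_mhz(cycle_ns)


# Figures quoted in the text: 27.5 ns cycle, 36 MFLOPS peak, about 10 MFLOPS typical.
print(round(clock_mhz(27.5), 1))            # 36.4, matching the quoted clock rate
print(round(flops_per_cycle(36, 27.5), 2))  # peak operations per cycle
print(round(flops_per_cycle(10, 27.5), 3))  # typical operations per cycle
```

Under these numbers the peak rate works out to roughly one floating point operation per clock cycle, while the typical hand-compiled rate is a bit over a quarter of that.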

Microcode

In processor design, microcode serves as an intermediary layer situated between the central processing unit (CPU) hardware and the programmer-visible instruction set architecture of a computer. It consists of a set of hardware-level instructions that implement the higher-level machine code instructions or control internal finite-state machine sequencing in many digital processing components. While microcode is utilized in Intel and AMD general-purpose CPUs in contemporary desktops and laptops, it functions only as a fallback path for scenarios that the faster hardwired control unit is unable to manage. Housed in special high-speed memory, microcode translates machine instructions, state machine data, or other input into sequences of detailed circuit-level operations. It separates the machine instructions from the underlying electronics, thereby enabling greater flexibility in designing and altering instructions. Moreover, it facilitates the construction of complex multi-step inst ...

Instruction Pipelining

In computer engineering, instruction pipelining is a technique for implementing instruction-level parallelism within a single processor. Pipelining attempts to keep every part of the processor busy with some instruction by dividing incoming instructions into a series of sequential steps (the eponymous "pipeline") performed by different processor units with different parts of instructions processed in parallel. Concept and motivation In a pipelined computer, instructions flow through the central processing unit (CPU) in stages. For example, it might have one stage for each step of the von Neumann cycle: Fetch the instruction, fetch the operands, do the instruction, write the results. A pipelined computer usually has "pipeline registers" after each stage. These store information from the instruction and calculations so that the logic gates of the next stage can do the next step. This arrangement lets the CPU complete an instruction on each clock cycle. It is common for even-nu ...
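
The four-stage example above can be sketched as a small scheduling model. This is a minimal illustration that assumes an ideal pipeline with no stalls or hazards; the stage names and the functions `pipeline_schedule` and `total_cycles` are hypothetical, chosen only for this sketch.

```python
# Stage names follow the four steps listed in the text (assumed labels).
STAGES = ["fetch", "operands", "execute", "write"]


def pipeline_schedule(n_instructions, stages=STAGES):
    """Map each clock cycle to the (instruction, stage) pairs active in it.

    Models an ideal pipeline: instruction i enters stage s at cycle i + s.
    """
    schedule = {}
    for i in range(n_instructions):
        for s, stage in enumerate(stages):
            schedule.setdefault(i + s, []).append((i, stage))
    return schedule


def total_cycles(n_instructions, n_stages=len(STAGES), pipelined=True):
    """Cycles needed to finish all instructions, with or without overlap."""
    if n_instructions == 0:
        return 0
    if pipelined:
        # Fill the pipeline once, then retire one instruction per cycle.
        return n_stages + n_instructions - 1
    # Without overlap, each instruction occupies every stage in turn.
    return n_stages * n_instructions


for cycle, active in sorted(pipeline_schedule(5).items()):
    print(cycle, active)
```

In this model five instructions finish in 8 cycles when pipelined and 20 cycles otherwise, and once the pipeline is full one instruction completes on every cycle.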

Virtual Memory

In computing, virtual memory, or virtual storage, is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a given machine" which "creates the illusion to users of a very large (main) memory". The computer's operating system, using a combination of hardware and software, maps memory addresses used by a program, called virtual addresses, into physical addresses in computer memory. Main storage, as seen by a process or task, appears as a contiguous address space or collection of contiguous segments. The operating system manages virtual address spaces and the assignment of real memory to virtual memory. Address translation hardware in the CPU, often referred to as a memory management unit (MMU), automatically translates virtual addresses to physical addresses. Software within the operating system may extend these capabilities, utilizing, e.g., disk storage, to provide a virtual address space ...
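
The virtual-to-physical mapping described above can be illustrated with a toy single-level page table. This is a sketch under stated assumptions, not how any particular MMU works: the 4096-byte page size, the dictionary-based table, and the names `translate` and `PageFault` are all hypothetical.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageFault(Exception):
    """Raised when a virtual page has no physical frame assigned."""


def translate(virtual_address, page_table, page_size=PAGE_SIZE):
    """Translate a virtual address using a {virtual page: physical frame} map."""
    page, offset = divmod(virtual_address, page_size)
    if page not in page_table:
        # A real operating system would handle this, e.g. by loading from disk.
        raise PageFault(f"virtual page {page} is not mapped")
    return page_table[page] * page_size + offset


# Virtual pages 0 and 1 are contiguous for the program but scattered physically.
page_table = {0: 5, 1: 2}
print(translate(10, page_table))    # offset 10 within physical frame 5
print(translate(4100, page_table))  # offset 4 within physical frame 2
```

The program sees addresses 0 through 8191 as one contiguous range, even though the two pages sit in unrelated physical frames.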

Springer-Verlag

Springer Science+Business Media, commonly known as Springer, is a German multinational publishing company of books, e-books and peer-reviewed journals in science, humanities, technical and medical (STM) publishing. Originally founded in 1842 in Berlin, it expanded internationally in the 1960s, and through mergers in the 1990s and a sale to venture capitalists it fused with Wolters Kluwer and eventually became part of Springer Nature in 2015. Springer has major offices in Berlin, Heidelberg, Dordrecht, and New York City. History Julius Springer founded Springer-Verlag in Berlin in 1842 and his son Ferdinand Springer grew it from a small firm of 4 employees into Germany's then second-largest academic publisher with 65 staff in 1872 ("Chronology", Springer Science+Business Media). In 1964, Springer expanded its business internationally, ...

APL (programming Language)

APL (named after the book A Programming Language) is a programming language developed in the 1960s by Kenneth E. Iverson. Its central datatype is the multidimensional array. It uses a large range of special graphic symbols to represent most functions and operators, leading to very concise code. It has been an important influence on the development of concept modeling, spreadsheets, functional programming, and computer math packages. It has also inspired several other programming languages. History Mathematical notation A mathematical notation for manipulating arrays was developed by Kenneth E. Iverson, starting in 1957 at Harvard University. In 1960, he began work for IBM where he developed this notation with Adin Falkoff and published it in his book A Programming Language in 1962. The preface states its premise: This notation was used inside IBM for short research reports on computer systems, such as the Burroughs B5000 and its stack mechanism when stack m ...

Operator (computer Programming)

In computer programming, an operator is a programming language construct that provides functionality that may not be possible to define as a user-defined function (e.g. sizeof in C) or has syntax different from that of a function (e.g. infix addition as in a+b). Like other programming language concepts, operator has a generally accepted, although debatable, meaning among practitioners, while at the same time each language gives it a specific meaning in that context, and therefore the meaning varies by language. Some operators are represented with symbols, characters typically not allowed in a function identifier, to allow for a presentation that looks more familiar than typical function syntax. For example, a function that tests for greater-than could be named gt, but many languages provide an infix symbolic operator so that code looks more familiar. For example, this: `if gt(x, y) then return` can be: `if x > y then return`. Some languages allow a language-defined operator t ...
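
The gt example above can be tried directly in Python, whose standard `operator` module exposes a function form of each infix operator. The sketch below is only illustrative; the helper `larger` is a hypothetical name introduced for this example.

```python
import operator


def larger(x, y, compare=operator.gt):
    """Return whichever argument the comparison function prefers."""
    # Function syntax: the comparison is an ordinary callable, so it can be
    # passed around and swapped, which the infix symbol alone cannot be.
    return x if compare(x, y) else y


x, y = 7, 3
print(operator.gt(x, y) == (x > y))  # both spellings perform the same test
print(larger(x, y))                  # uses gt by default
print(larger(x, y, operator.lt))     # swapping the function flips the choice
```

The infix form reads more naturally in an `if` statement, while the function form is convenient when the comparison itself needs to be a parameter.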

Vector Processing

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set whose instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called vectors. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SIMD within a register (SWAR) arithmetic units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation, compression and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms ...
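
The contrast between scalar and vector instructions can be sketched with a toy instruction-count model. This is not a description of any real machine: the maximum vector length of 64 and the function names `scalar_add` and `vector_add` are assumptions made for illustration, and the model counts issued instructions only, not execution time.

```python
def scalar_add(a, b):
    """Add element by element, issuing one instruction per data item."""
    result, issued = [], 0
    for x, y in zip(a, b):
        result.append(x + y)
        issued += 1
    return result, issued


def vector_add(a, b, max_vector_length=64):
    """Add in chunks, issuing one instruction per vector of up to 64 items."""
    result, issued = [], 0
    for start in range(0, len(a), max_vector_length):
        chunk_a = a[start:start + max_vector_length]
        chunk_b = b[start:start + max_vector_length]
        # One vector instruction covers the whole chunk.
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        issued += 1
    return result, issued


a = list(range(100))
b = [1] * 100
print(scalar_add(a, b)[1])  # instructions issued by the scalar version
print(vector_add(a, b)[1])  # instructions issued by the vector version
```

Both functions produce the same sums; the difference in this model is that the vector version issues 2 instructions for 100 elements where the scalar version issues 100.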

Instruction (computer Science)

In computer science, an instruction set architecture (ISA) is an abstract model that generally defines how software controls the CPU in a computer or a family of computers. A device or program that executes instructions described by that ISA, such as a central processing unit (CPU), is called an implementation of that ISA. In general, an ISA defines the supported instructions, data types, registers, the hardware support for managing main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of implementations of the ISA. An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as performa ...

NASA Langley Research Center

The Langley Research Center (LaRC or NASA Langley), located in Hampton, Virginia, near the Chesapeake Bay front of Langley Air Force Base, is the oldest of NASA's field centers. LaRC has focused primarily on aeronautical research but has also tested space hardware such as the Apollo Lunar Module. In addition, many of the earliest high-profile space missions were planned and designed on-site. Langley was also considered a potential site for NASA's Manned Spacecraft Center prior to the eventual selection of Houston, Texas. Established in 1917 by the National Advisory Committee for Aeronautics (NACA), the research center devotes two-thirds of its programs to aeronautics and the rest to space. LaRC researchers use more than 40 wind tunnels to study and improve aircraft and spacecraft safety, performance, and efficiency. Between 1958 and 1963, when NASA (the successor agency to NACA) started Project Mercury, LaRC served as the main office of the Space Task Group. In September 2019, ...