Akaike's Information Criterion

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models. Thus, AIC provides a means for model selection. AIC is founded on information theory. When a statistical model is used to represent the process that generated the data, the representation will almost never be exact; so some information will be lost by using the model to represent the process. AIC estimates the relative amount of information lost by a given model: the less information a model loses, the higher the quality of that model. In estimating the amount of information lost by a model, AIC deals with the trade-off between the goodness of fit of the model and the simplicity of the model. In other words, AIC deals with both the risk of overfitting and the risk of underfitting. The Akaike information criterion ...
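
The trade-off between fit and simplicity can be sketched with the commonly used definition AIC = 2k − 2 ln(L̂), where k is the number of estimated parameters and L̂ is the maximized likelihood. The formula is not quoted in the excerpt above, and the data set and the two candidate Gaussian models below are hypothetical, so treat this as an illustration rather than a reference implementation.

```python
import math


def aic(log_likelihood: float, num_params: int) -> float:
    """AIC = 2k - 2 ln(L_hat); a lower value means less estimated information loss."""
    return 2 * num_params - 2 * log_likelihood


def gaussian_log_likelihood(data, mu, sigma2):
    """Log-likelihood of i.i.d. normal data with mean mu and variance sigma2."""
    n = len(data)
    squared_error = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2 * math.pi * sigma2) - squared_error / (2 * sigma2)


# Hypothetical observations, used only for illustration.
data = [2.1, 1.9, 2.4, 2.0, 1.6, 2.3, 2.2, 1.8]
n = len(data)

# Model A: mean fixed at 0, variance estimated by maximum likelihood (k = 1).
sigma2_a = sum(x ** 2 for x in data) / n
aic_a = aic(gaussian_log_likelihood(data, 0.0, sigma2_a), num_params=1)

# Model B: mean and variance both estimated by maximum likelihood (k = 2).
mu_b = sum(data) / n
sigma2_b = sum((x - mu_b) ** 2 for x in data) / n
aic_b = aic(gaussian_log_likelihood(data, mu_b, sigma2_b), num_params=2)

# Model B pays a penalty for its extra parameter but fits far better here,
# so it ends up with the lower AIC.
print(f"AIC model A: {aic_a:.2f}, AIC model B: {aic_b:.2f}")
```

Only differences in AIC between models fitted to the same data are meaningful; the absolute values carry no interpretation on their own.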

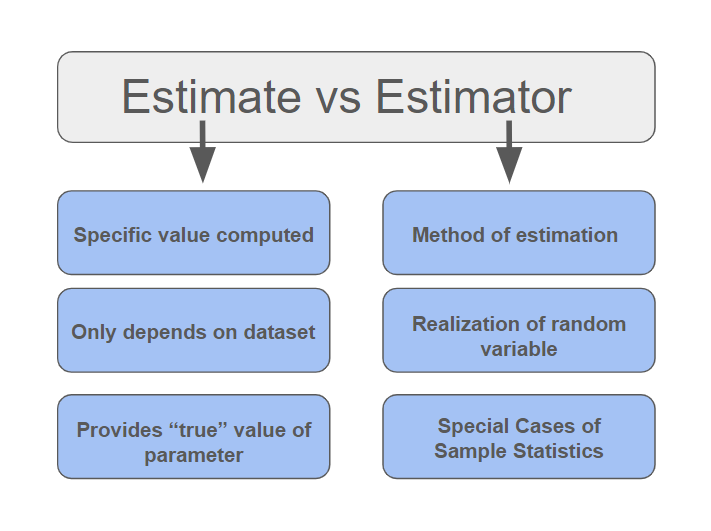

Estimator

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. Point estimators yield single-valued results. This is in contrast to an interval estimator, where the result would be a range of plausible values. "Single value" does not necessarily mean "single number", but includes vector-valued or function-valued estimators. ''Estimation theory'' is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. Howeve ...
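
The distinction between the rule (estimator) and its result (estimate) maps naturally onto a function and its return value. The sketch below uses hypothetical samples; `sample_mean` and `mean_and_variance` are illustrative names, not part of any library.

```python
from statistics import fmean


def sample_mean(sample):
    """Estimator: a rule that maps observed data to an estimate of the population mean."""
    return fmean(sample)


def mean_and_variance(sample):
    """A vector-valued point estimator: returns (mean, unbiased variance) together."""
    m = fmean(sample)
    var = sum((x - m) ** 2 for x in sample) / (len(sample) - 1)
    return (m, var)


# Hypothetical samples; the same estimator yields a different estimate for each.
sample_a = [4.0, 6.0, 5.0, 7.0, 3.0]
sample_b = [10.0, 12.0]

estimate_a = sample_mean(sample_a)             # 5.0
estimate_b = sample_mean(sample_b)             # 11.0
vector_estimate = mean_and_variance(sample_a)  # (5.0, 2.5)

print(estimate_a, estimate_b, vector_estimate)
```

The second function shows that a "single value" need not be a single number: the estimate is one point in a two-dimensional parameter space.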

Ensemble Learning

In statistics and machine learning, ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone. Unlike a statistical ensemble in statistical mechanics, which is usually infinite, a machine learning ensemble consists of only a concrete finite set of alternative models, but typically allows for much more flexible structure to exist among those alternatives.

Overview: Supervised learning algorithms search through a hypothesis space to find a suitable hypothesis that will make good predictions for a particular problem. Even if this space contains hypotheses that are very well-suited for a particular problem, it may be very difficult to find a good one. Ensembles combine multiple hypotheses to form one which should be theoretically better. ''Ensemble learning'' trains two or more machine learning algorithms on a specific classification or regression task. The algorithms wi ...
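
One simple way to combine multiple hypotheses into one is majority voting. The three threshold rules below are hypothetical stand-ins for trained models, and majority voting is only one of several combination schemes, so this is a sketch of the idea rather than a description of any particular ensemble method. It shows the mechanics of combining predictions and makes no claim about accuracy.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the label predicted by the largest number of hypotheses."""
    return Counter(predictions).most_common(1)[0][0]


# Three hypothetical binary classifiers over 2-D points (stand-ins for trained models).
def h1(x):
    return 1 if x[0] > 0.5 else 0


def h2(x):
    return 1 if x[1] > 0.5 else 0


def h3(x):
    return 1 if x[0] + x[1] > 1.0 else 0


def ensemble_predict(x, hypotheses=(h1, h2, h3)):
    """Combine the individual hypotheses into a single prediction by voting."""
    return majority_vote([h(x) for h in hypotheses])


# h1 and h3 vote 1, h2 votes 0, so the ensemble predicts 1.
print(ensemble_predict((0.9, 0.2)))
```

Using an odd number of binary voters avoids ties, which keeps the combination rule unambiguous.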

Interval Estimation

In statistics, interval estimation is the use of sample data to estimate an ''interval'' of possible values of a parameter of interest. This is in contrast to point estimation, which gives a single value. The most prevalent forms of interval estimation are ''confidence intervals'' (a frequentist method) and ''credible intervals'' (a Bayesian method). Less common forms include ''likelihood intervals'', ''fiducial intervals'', ''tolerance intervals'', and ''prediction intervals''. For a non-statistical method, interval estimates can be deduced from fuzzy logic.

Types: Confidence intervals. Confidence intervals are used to estimate the parameter of interest from a sampled data set, commonly the mean or standard deviation. A confidence interval states there is a 100γ% confidence that the parameter of interest is within a lower and upper bound. A common misconception about confidence intervals is that 100γ% of the data set fits within or above/below the bounds; this is referred ...
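
A confidence interval for a mean can be sketched as follows. This version assumes a large-sample normal approximation (a z-quantile with the sample standard deviation); for small samples a Student's t quantile is the more usual choice. The function name and data are hypothetical.

```python
import math
from statistics import NormalDist, fmean, stdev


def mean_confidence_interval(sample, gamma=0.95):
    """Approximate 100*gamma% confidence interval for the population mean.

    Assumption: normal approximation, i.e. the standard normal quantile is
    used with the sample standard deviation.
    """
    n = len(sample)
    center = fmean(sample)
    z = NormalDist().inv_cdf(0.5 + gamma / 2)  # two-sided quantile
    half_width = z * stdev(sample) / math.sqrt(n)
    return center - half_width, center + half_width


# Hypothetical measurements.
sample = [9.8, 10.2, 10.1, 9.9, 10.4, 10.0, 9.7, 10.3]

lo95, hi95 = mean_confidence_interval(sample, gamma=0.95)
lo99, hi99 = mean_confidence_interval(sample, gamma=0.99)

# Asking for more confidence widens the interval.
print(f"95%: ({lo95:.3f}, {hi95:.3f})  99%: ({lo99:.3f}, {hi99:.3f})")
```

The interval is a statement about the parameter (here the mean), not about the share of individual observations falling between the bounds.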

Point Estimation

In statistics, point estimation involves the use of sample data to calculate a single value (known as a point estimate since it identifies a point in some parameter space) which is to serve as a "best guess" or "best estimate" of an unknown population parameter (for example, the population mean). More formally, it is the application of a point estimator to the data to obtain a point estimate. Point estimation can be contrasted with interval estimation: such interval estimates are typically either confidence intervals, in the case of frequentist inference, or credible intervals, in the case of Bayesian inference. More generally, a point estimator can be contrasted with a set estimator. Examples are given by confidence sets or credible sets. A point estimator can also be contrasted with a distribution estimator. Examples are given by confidence distributions, randomized estimators, and Bayesian posteriors.

Properties of point estimates: Biasedness. “Bias” is defined as ...

Statistical Inference

Statistical inference is the process of using data analysis to infer properties of an underlying probability distribution (Upton, G., Cook, I. (2008) ''Oxford Dictionary of Statistics'', OUP). Inferential statistical analysis infers properties of a population, for example by testing hypotheses and deriving estimates. It is assumed that the observed data set is sampled from a larger population. Inferential statistics can be contrasted with descriptive statistics. Descriptive statistics is solely concerned with properties of the observed data, and it does not rest on the assumption that the data come from a larger population. In machine learning, the term ''inference'' is sometimes used instead to mean "make a prediction, by evaluating an already trained model"; in this context inferring properties of the model is referred to as ''training'' or ''learning'' (rather than ''inference''), and using a model for prediction is referred to as ''inference'' (instead of ''prediction''); se ...

Binomially Distributed

In probability theory and statistics, the binomial distribution with parameters n and p is the discrete probability distribution of the number of successes in a sequence of n independent experiments, each asking a yes–no question, and each with its own Boolean-valued outcome: ''success'' (with probability p) or ''failure'' (with probability q = 1 − p). A single success/failure experiment is also called a Bernoulli trial or Bernoulli experiment, and a sequence of outcomes is called a Bernoulli process; for a single trial, i.e., n = 1, the binomial distribution is a Bernoulli distribution. The binomial distribution is the basis for the binomial test of statistical significance. The binomial distribution is frequently used to model the number of successes in a sample of size n drawn with replacement from a population of size N. If the sampling is carried out without replacement, the draws are not independent and so the resulting distribution is a hypergeometric distribution, not a binomial one ...
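
Writing n for the number of independent trials and p for the success probability of each trial, the probability of exactly k successes is C(n, k) · p^k · (1 − p)^(n − k). The short sketch below evaluates this standard formula; the function name `binomial_pmf` is illustrative.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials with success probability p."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


# With a single trial (n = 1) the distribution reduces to a Bernoulli distribution.
print(binomial_pmf(1, 1, 0.3))  # 0.3

# Two successes in four fair trials: 6 * 0.5**4.
print(binomial_pmf(2, 4, 0.5))  # 0.375

# The probabilities over k = 0..n sum to 1 (up to floating-point rounding).
total = sum(binomial_pmf(k, 10, 0.2) for k in range(11))
print(total)
```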

Categorical Variable

In statistics, a categorical variable (also called qualitative variable) is a variable that can take on one of a limited, and usually fixed, number of possible values, assigning each individual or other unit of observation to a particular group or nominal category on the basis of some qualitative property. In computer science and some branches of mathematics, categorical variables are referred to as enumerations or enumerated types. Commonly (though not in this article), each of the possible values of a categorical variable is referred to as a level. The probability distribution associated with a random categorical variable is called a categorical distribution. Categorical data is the statistical data type consisting of categorical variables or of data that has been converted into that form, for example as grouped data. More specifically, categorical data may derive from observations made of qualitative data that are summarised as counts or cross tabulations, or from observat ...

Welch's T-test

In statistics, Welch's ''t''-test, or unequal variances ''t''-test, is a two-sample location test which is used to test the (null) hypothesis that two populations have equal means. It is named for its creator, Bernard Lewis Welch, and is an adaptation of Student's ''t''-test that is more reliable when the two samples have unequal variances and possibly unequal sample sizes. These tests are often referred to as "unpaired" or "independent samples" ''t''-tests, as they are typically applied when the statistical units underlying the two samples being compared are non-overlapping. Given that Welch's ''t''-test has been less popular than Student's ''t''-test and may be less familiar to readers, a more informative name is "Welch's unequal variances ''t''-test", or "unequal variances ''t''-test" for brevity. Sometimes, it is referred to as the Satterthwaite or Welch–Satterthwaite test.

Assumptions: Student's ''t''-test assumes that the sample means being compared for two populations are no ...
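
The unequal-variances statistic can be sketched from its standard textbook form: the difference in sample means divided by the square root of the sum of each sample's variance over its size, with degrees of freedom from the Welch–Satterthwaite approximation. These formulas are not spelled out in the excerpt above, and the data are hypothetical.

```python
import math
from statistics import fmean, variance


def welch_t(sample1, sample2):
    """Return (t statistic, approximate degrees of freedom) for Welch's t-test."""
    n1, n2 = len(sample1), len(sample2)
    # Per-sample variance of the mean; the two variances are NOT pooled.
    v1 = variance(sample1) / n1
    v2 = variance(sample2) / n2
    t = (fmean(sample1) - fmean(sample2)) / math.sqrt(v1 + v2)
    # Welch–Satterthwaite approximation to the degrees of freedom.
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Hypothetical samples with unequal sizes and visibly unequal spread.
group_a = [1.0, 2.0, 3.0, 4.0, 5.0]
group_b = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]

t_stat, dof = welch_t(group_a, group_b)
print(f"t = {t_stat:.3f}, df = {dof:.2f}")
```

The approximate degrees of freedom are generally not an integer and lie between min(n1, n2) − 1 and n1 + n2 − 2.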

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}\,. The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma^2 is the variance. The standard deviation of the distribution is \sigma (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution c ...
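
The density of a normal distribution with mean μ and variance σ² is exp(−(x − μ)² / (2σ²)) / √(2πσ²). The sketch below evaluates that expression directly and cross-checks it against `statistics.NormalDist` from the Python standard library; the function name `normal_pdf` is illustrative.

```python
import math
from statistics import NormalDist


def normal_pdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    variance = sigma ** 2
    return math.exp(-((x - mu) ** 2) / (2 * variance)) / math.sqrt(2 * math.pi * variance)


# The density peaks at the mean and is symmetric around it.
peak = normal_pdf(0.0)
left, right = normal_pdf(-1.5), normal_pdf(1.5)

# Cross-check against the standard library for a non-standard mean and scale.
reference = NormalDist(mu=2.0, sigma=3.0).pdf(4.0)
direct = normal_pdf(4.0, mu=2.0, sigma=3.0)

print(peak, left, right, direct, reference)
```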

Student's T-test

Student's ''t''-test is a statistical test used to test whether the difference between the responses of two groups is statistically significant or not. It is any statistical hypothesis test in which the test statistic follows a Student's ''t''-distribution under the null hypothesis. It is most commonly applied when the test statistic would follow a normal distribution if the value of a scaling term in the test statistic were known (typically, the scaling term is unknown and is therefore a nuisance parameter). When the scaling term is estimated based on the data, the test statistic, under certain conditions, follows a Student's ''t'' distribution. The ''t''-test's most common application is to test whether the means of two populations are significantly different. In many cases, a ''Z''-test will yield very similar results to a ''t''-test because the latter converges to the former as the size of the dataset increases.

History: The term "''t''-statistic" is abbreviated from " ...
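
For the common two-sample case, the scaling term estimated from the data is a pooled variance. The sketch below uses the standard equal-variance (pooled) form of the statistic, which is one of several t-tests and is not spelled out in the excerpt above; the samples are hypothetical.

```python
import math
from statistics import fmean, variance


def pooled_t(sample1, sample2):
    """Return (t statistic, degrees of freedom) for the equal-variance two-sample t-test."""
    n1, n2 = len(sample1), len(sample2)
    df = n1 + n2 - 2
    # Pooled variance: the scaling term, estimated from both samples together.
    pooled_var = ((n1 - 1) * variance(sample1) + (n2 - 1) * variance(sample2)) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (fmean(sample1) - fmean(sample2)) / standard_error
    return t, df


# Hypothetical samples with equal spread and means that differ by 2.
control = [1.0, 2.0, 3.0, 4.0, 5.0]
treated = [3.0, 4.0, 5.0, 6.0, 7.0]

t_stat, dof = pooled_t(control, treated)
print(f"t = {t_stat:.3f}, df = {dof}")  # t is approximately -2.0 with 8 degrees of freedom
```

The statistic would then be compared with a Student's t distribution with `dof` degrees of freedom; that lookup is omitted here because the Python standard library has no t-distribution quantile function.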

Statistical Hypothesis Test

A statistical hypothesis test is a method of statistical inference used to decide whether the data provide sufficient evidence to reject a particular hypothesis. A statistical hypothesis test typically involves a calculation of a test statistic. Then a decision is made, either by comparing the test statistic to a critical value or equivalently by evaluating a ''p''-value computed from the test statistic. Roughly 100 specialized statistical tests are in use and noteworthy.

History: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

Choice of null hypothesis: Paul Meehl has argued that the epistemological importance of the choice of null hypothesis has gone largely unacknowledged. When the null hypothesis is predicted by theory, a more precise experiment will be a more severe test of t ...
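
The equivalence of the two decision routes (critical value versus ''p''-value) can be sketched with a two-sided one-sample ''Z''-test. The test choice, the assumption of a known population standard deviation, and the data are all hypothetical and chosen only to keep the example within the Python standard library.

```python
import math
from statistics import NormalDist, fmean


def z_test(sample, mu0, sigma, alpha=0.05):
    """Two-sided one-sample Z-test, assuming a known population standard deviation sigma."""
    standard_normal = NormalDist()
    # Test statistic: standardized distance of the sample mean from mu0.
    z = (fmean(sample) - mu0) / (sigma / math.sqrt(len(sample)))
    critical = standard_normal.inv_cdf(1 - alpha / 2)
    p_value = 2 * (1 - standard_normal.cdf(abs(z)))
    return {
        "z": z,
        "p_value": p_value,
        "reject_by_critical": abs(z) > critical,  # route 1: compare with critical value
        "reject_by_p": p_value < alpha,           # route 2: compare p-value with alpha
    }


# Hypothetical measurements.
sample = [5.1, 4.9, 5.6, 5.8, 6.0, 5.5, 5.3, 5.9]

far = z_test(sample, mu0=5.0, sigma=0.5)   # null mean far from the sample mean
near = z_test(sample, mu0=5.5, sigma=0.5)  # null mean close to the sample mean

# Both routes give the same decision in each case.
print(far["reject_by_critical"], far["reject_by_p"])
print(near["reject_by_critical"], near["reject_by_p"])
```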