|

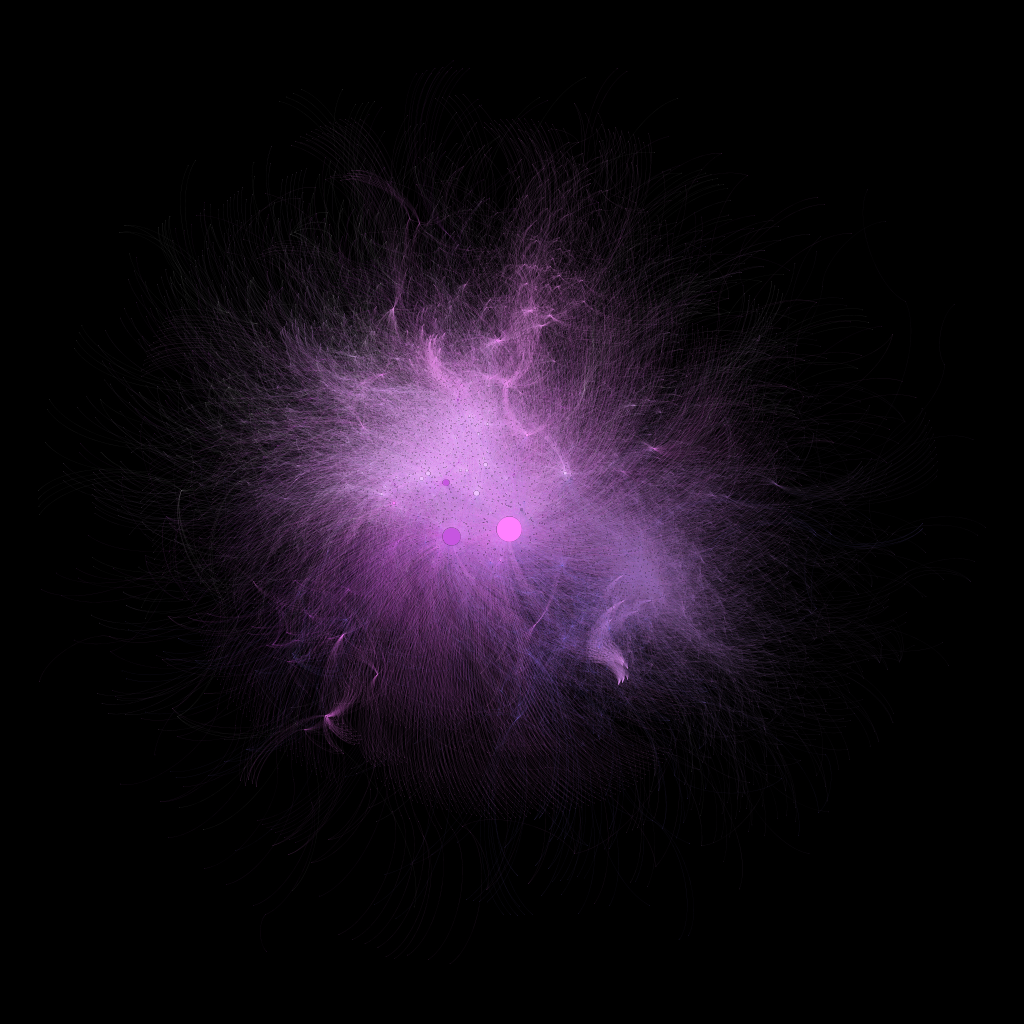

Affinity Propagation

In statistics and data mining, affinity propagation (AP) is a clustering algorithm based on the concept of "message passing" between data points. Unlike clustering algorithms such as -means or -medoids, affinity propagation does not require the number of clusters to be determined or estimated before running the algorithm. Similar to -medoids, affinity propagation finds "exemplars," members of the input set that are representative of clusters. Algorithm Let through be a set of data points, with no assumptions made about their internal structure, and let be a function that quantifies the similarity between any two points, such that if and only if is more similar to than to . For this example, the negative squared distance of two data points was used i.e. for points and , The diagonal of (i.e. s(i,i)) is particularly important, as it represents the instance preference, meaning how likely a particular instance is to become an exemplar. When it is set to the same value for a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Statistics

Statistics (from German language, German: ', "description of a State (polity), state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of statistical survey, surveys and experimental design, experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey sample (statistics), samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Markov Clustering

In statistics, Markov chain Monte Carlo (MCMC) is a class of algorithms used to draw samples from a probability distribution. Given a probability distribution, one can construct a Markov chain whose elements' distribution approximates it – that is, the Markov chain's equilibrium distribution matches the target distribution. The more steps that are included, the more closely the distribution of the sample matches the actual desired distribution. Markov chain Monte Carlo methods are used to study probability distributions that are too complex or too highly dimensional to study with analytic techniques alone. Various algorithms exist for constructing such Markov chains, including the Metropolis–Hastings algorithm. General explanation Markov chain Monte Carlo methods create samples from a continuous random variable, with probability density proportional to a known function. These samples can be used to evaluate an integral over that variable, as its expected value or varianc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Scikit-learn

scikit-learn (formerly scikits.learn and also known as sklearn) is a free and open-source machine learning library for the Python programming language. It features various classification, regression and clustering algorithms including support-vector machines, random forests, gradient boosting, ''k''-means and DBSCAN, and is designed to interoperate with the Python numerical and scientific libraries NumPy and SciPy. Scikit-learn is a NumFOCUS fiscally sponsored project. Overview The scikit-learn project started as scikits.learn, a Google Summer of Code project by French data scientist David Cournapeau. The name of the project derives from its role as a "scientific toolkit for machine learning", originally developed and distributed as a third-party extension to SciPy. The original codebase was later rewritten by other developers. In 2010, contributors Fabian Pedregosa, Gaël Varoquaux, Alexandre Gramfort and Vincent Michel, from the French Institute for Research in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Python (programming Language)

Python is a high-level programming language, high-level, general-purpose programming language. Its design philosophy emphasizes code readability with the use of significant indentation. Python is type system#DYNAMIC, dynamically type-checked and garbage collection (computer science), garbage-collected. It supports multiple programming paradigms, including structured programming, structured (particularly procedural programming, procedural), object-oriented and functional programming. It is often described as a "batteries included" language due to its comprehensive standard library. Guido van Rossum began working on Python in the late 1980s as a successor to the ABC (programming language), ABC programming language, and he first released it in 1991 as Python 0.9.0. Python 2.0 was released in 2000. Python 3.0, released in 2008, was a major revision not completely backward-compatible with earlier versions. Python 2.7.18, released in 2020, was the last release of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Julia (programming Language)

Julia is a high-level programming language, high-level, general-purpose programming language, general-purpose dynamic programming language, dynamic programming language, designed to be fast and productive, for e.g. data science, artificial intelligence, machine learning, modeling and simulation, most commonly used for numerical analysis and computational science. Distinctive aspects of Julia's design include a type system with parametric polymorphism and the use of multiple dispatch as a core programming paradigm, a default just-in-time compilation, just-in-time (JIT) compiler (with support for ahead-of-time compilation) and an tracing garbage collection, efficient (multi-threaded) garbage collection implementation. Notably Julia does not support classes with encapsulated methods and instead it relies on structs with generic methods/functions not tied to them. By default, Julia is run similarly to scripting languages, using its runtime, and allows for read–eval–print loop, i ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

ELKI

ELKI (''Environment for Developing KDD-Applications Supported by Index-Structures'') is a data mining (KDD, knowledge discovery in databases) software framework developed for use in research and teaching. It was originally created by the database systems research unit at the Ludwig Maximilian University of Munich, Germany, led by Professor Hans-Peter Kriegel. The project has continued at the Technical University of Dortmund, Germany. It aims at allowing the development and evaluation of advanced data mining algorithms and their interaction with database index structures. Description The ELKI framework is written in Java and built around a modular architecture. Most currently included algorithms perform clustering, outlier detection, and database indexes. The object-oriented architecture allows the combination of arbitrary algorithms, data types, distance functions, indexes, and evaluation measures. The Java just-in-time compiler optimizes all combinations to a simila ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Java (programming Language)

Java is a High-level programming language, high-level, General-purpose programming language, general-purpose, Memory safety, memory-safe, object-oriented programming, object-oriented programming language. It is intended to let programmers ''write once, run anywhere'' (Write once, run anywhere, WORA), meaning that compiler, compiled Java code can run on all platforms that support Java without the need to recompile. Java applications are typically compiled to Java bytecode, bytecode that can run on any Java virtual machine (JVM) regardless of the underlying computer architecture. The syntax (programming languages), syntax of Java is similar to C (programming language), C and C++, but has fewer low-level programming language, low-level facilities than either of them. The Java runtime provides dynamic capabilities (such as Reflective programming, reflection and runtime code modification) that are typically not available in traditional compiled languages. Java gained popularity sh ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Text Mining

Text mining, text data mining (TDM) or text analytics is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by devising patterns and trends by means such as statistical pattern learning. According to Hotho et al. (2005), there are three perspectives of text mining: information extraction, data mining, and knowledge discovery in databases (KDD). Text mining usually involves the process of structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluation and interpretation of the output. 'High quality' in text mining usually ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

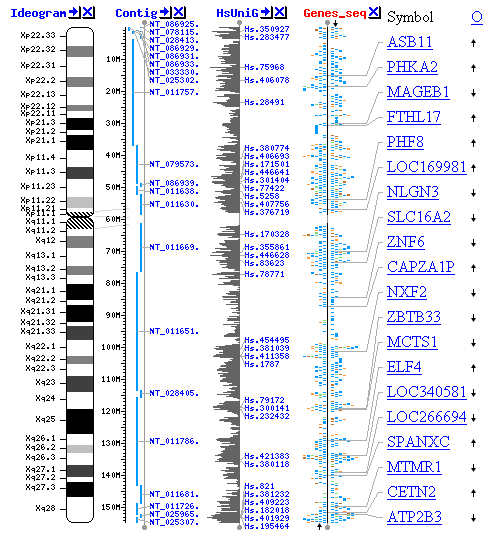

Protein Interaction Graph

Proteins are large biomolecules and macromolecules that comprise one or more long chains of amino acid residues. Proteins perform a vast array of functions within organisms, including catalysing metabolic reactions, DNA replication, responding to stimuli, providing structure to cells and organisms, and transporting molecules from one location to another. Proteins differ from one another primarily in their sequence of amino acids, which is dictated by the nucleotide sequence of their genes, and which usually results in protein folding into a specific Protein structure, 3D structure that determines its activity. A linear chain of amino acid residues is called a polypeptide. A protein contains at least one long polypeptide. Short polypeptides, containing less than 20–30 residues, are rarely considered to be proteins and are commonly called peptides. The individual amino acid residues are bonded together by peptide bonds and adjacent amino acid residues. The Protein primary ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Principal Component Analysis

Principal component analysis (PCA) is a linear dimensionality reduction technique with applications in exploratory data analysis, visualization and data preprocessing. The data is linearly transformed onto a new coordinate system such that the directions (principal components) capturing the largest variation in the data can be easily identified. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where the i-th vector is the direction of a line that best fits the data while being orthogonal to the first i-1 vectors. Here, a best-fitting line is defined as one that minimizes the average squared perpendicular distance from the points to the line. These directions (i.e., principal components) constitute an orthonormal basis in which different individual dimensions of the data are linearly uncorrelated. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Mining

Data mining is the process of extracting and finding patterns in massive data sets involving methods at the intersection of machine learning, statistics, and database systems. Data mining is an interdisciplinary subfield of computer science and statistics with an overall goal of extracting information (with intelligent methods) from a data set and transforming the information into a comprehensible structure for further use. Data mining is the analysis step of the " knowledge discovery in databases" process, or KDD. Aside from the raw analysis step, it also involves database and data management aspects, data pre-processing, model and inference considerations, interestingness metrics, complexity considerations, post-processing of discovered structures, visualization, and online updating. The term "data mining" is a misnomer because the goal is the extraction of patterns and knowledge from large amounts of data, not the extraction (''mining'') of data itself. It also is a buzzwo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computational Biology

Computational biology refers to the use of techniques in computer science, data analysis, mathematical modeling and Computer simulation, computational simulations to understand biological systems and relationships. An intersection of computer science, biology, and data science, the field also has foundations in applied mathematics, molecular biology, cell biology, chemistry, and genetics. History Bioinformatics, the analysis of informatics processes in biological systems, began in the early 1970s. At this time, research in artificial intelligence was using network models of the human brain in order to generate new algorithms. This use of biological data pushed biological researchers to use computers to evaluate and compare large data sets in their own field. By 1982, researchers shared information via Punched card, punch cards. The amount of data grew exponentially by the end of the 1980s, requiring new computational methods for quickly interpreting relevant information. Per ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |