
Actor-critic Algorithm

The actor-critic algorithm (AC) is a family of reinforcement learning (RL) algorithms that combine policy-based RL algorithms, such as policy gradient methods, with value-based RL algorithms, such as value iteration, Q-learning, SARSA, and TD learning. An AC algorithm consists of two main components: an "actor" that determines which actions to take according to a policy function, and a "critic" that evaluates those actions according to a value function. Some AC algorithms are on-policy, some are off-policy. Some apply only to continuous or only to discrete action spaces; others work in both cases.

Overview

The actor-critic methods can be understood as an improvement over pure policy gradient methods like REINFORCE via introducing a baseline.

Actor

The actor uses a policy function \pi(a \mid s), while the critic estimates either the value function V(s), the action-value Q-function Q(s,a), the advantage function A(s,a), or any combination thereof. The actor is a parameterized function \p ...
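The split between actor and critic described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: it assumes a tabular state-value critic, a softmax actor over per-state action preferences, and a one-step TD error as the critic's evaluation signal. The names `actor_critic_step`, `theta`, `values`, and the step sizes are hypothetical choices made for this example.

```python
import math


def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic_step(theta, values, s, a, r, s_next, done,
                      gamma=0.9, alpha_actor=0.1, alpha_critic=0.5):
    """Apply one actor-critic update for the transition (s, a, r, s_next)."""
    # Critic: the one-step TD error measures how much better or worse
    # the outcome was than the current value estimate predicted.
    target = r if done else r + gamma * values[s_next]
    delta = target - values[s]
    values[s] += alpha_critic * delta

    # Actor: for a softmax policy, d/d theta[s][b] of log pi(a | s)
    # equals 1{b == a} - pi(b | s). Scale it by the critic's TD error.
    pi = softmax(theta[s])
    for b in range(len(pi)):
        grad_log = (1.0 if b == a else 0.0) - pi[b]
        theta[s][b] += alpha_actor * delta * grad_log
    return delta


# Two states, two actions, everything initialised to zero.
theta = [[0.0, 0.0], [0.0, 0.0]]
values = [0.0, 0.0]
delta = actor_critic_step(theta, values, s=0, a=1, r=1.0, s_next=1, done=False)
```

After this single step the critic's estimate for state 0 moves toward the observed reward, and the actor's preference for the action that was taken rises because the TD error is positive.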

Reinforcement Learning

Reinforcement learning (RL) is an interdisciplinary area of machine learning and optimal control concerned with how an intelligent agent should take actions in a dynamic environment in order to maximize a reward signal. Reinforcement learning is one of the three basic machine learning paradigms, alongside supervised learning and unsupervised learning. Reinforcement learning differs from supervised learning in not needing labelled input-output pairs to be presented, and in not needing sub-optimal actions to be explicitly corrected. Instead, the focus is on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge) with the goal of maximizing the cumulative reward (the feedback of which might be incomplete or delayed). The search for this balance is known as the exploration–exploitation dilemma. The environment is typically stated in the form of a Markov decision process (MDP), as many reinforcement learning algorithms use dyn ...

Policy Gradient Method

Policy gradient methods are a class of reinforcement learning algorithms and a sub-class of policy optimization methods. Unlike value-based methods, which learn a value function to derive a policy, policy optimization methods directly learn a policy function \pi that selects actions without consulting a value function. For policy gradient to apply, the policy function \pi_\theta must be parameterized by \theta and differentiable with respect to it.

Overview

In policy-based RL, the actor is a parameterized policy function \pi_\theta, where \theta are the parameters of the actor. The actor takes as argument the state of the environment s and produces a probability distribution \pi_\theta(\cdot \mid s). If the action space is discrete, then \sum_a \pi_\theta(a \mid s) = 1. If the action space is continuous, then \int_a \pi_\theta(a \mid s) \, \mathrm{d}a = 1. The goal of policy optimization is to find some \theta that maximizes the expected episodic reward J(\theta): J(\theta) = ...
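To make the idea of a differentiable, parameterized policy concrete, here is a small sketch of a REINFORCE-style update on a two-armed bandit. It assumes a softmax policy over one preference per action and deterministic rewards; the function name `reinforce_bandit`, the reward values, and the step size are illustrative assumptions, not part of the text above.

```python
import math
import random


def softmax(prefs):
    """Map preferences theta to probabilities pi_theta(a) that sum to 1."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(rewards, steps=500, alpha=0.1, seed=0):
    """Run score-function (REINFORCE-style) updates on a stateless bandit."""
    rng = random.Random(seed)
    theta = [0.0] * len(rewards)
    for _ in range(steps):
        probs = softmax(theta)
        # Sample an action from the current policy.
        a = rng.choices(range(len(rewards)), weights=probs)[0]
        ret = rewards[a]  # the episode is one step long, so return == reward
        # Gradient ascent on expected reward: ret * grad log pi_theta(a).
        for b in range(len(theta)):
            grad_log = (1.0 if b == a else 0.0) - probs[b]
            theta[b] += alpha * ret * grad_log
    return softmax(theta)


# Arm 1 pays 1.0, arm 0 pays nothing; the policy should come to prefer arm 1.
probs = reinforce_bandit([0.0, 1.0])
```

No value function is consulted anywhere: the policy parameters are adjusted directly from sampled rewards, which is the distinction from value-based methods drawn above.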

Q-learning

Q-learning is a reinforcement learning algorithm that trains an agent to assign values to its possible actions based on its current state, without requiring a model of the environment (model-free). It can handle problems with stochastic transitions and rewards without requiring adaptations. For example, in a grid maze, an agent learns to reach an exit worth 10 points. At a junction, Q-learning might assign a higher value to moving right than left if right gets to the exit faster, improving this choice by trying both directions over time. For any finite Markov decision process, Q-learning finds an optimal policy in the sense of maximizing the expected value of the total reward over any and all successive steps, starting from the current state. Q-learning can identify an optimal action-selection policy for any given finite Markov decision process, given infinite exploration time and a partly random policy. "Q" refers to the function that the algorithm computes: th ...
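The maze example can be sketched with the standard tabular Q-learning update, which moves Q(s, a) toward the reward plus the discounted best value available in the next state. The table layout, the state and action numbering, and the values of `alpha` and `gamma` below are assumptions chosen for illustration.

```python
def q_learning_update(q, s, a, r, s_next, done, alpha=0.5, gamma=0.9):
    """Update q[s][a] in place from one observed transition."""
    # Off-policy target: assume the best action is taken in s_next,
    # regardless of which action the agent actually takes there.
    best_next = 0.0 if done else max(q[s_next])
    td_target = r + gamma * best_next
    q[s][a] += alpha * (td_target - q[s][a])
    return q[s][a]


# Two states (0 = junction, 1 = corridor next to the exit),
# two actions (0 = left, 1 = right), all values start at zero.
q = [[0.0, 0.0], [0.0, 0.0]]

# Moving right from the corridor reaches the exit worth 10 points.
exit_value = q_learning_update(q, s=1, a=1, r=10.0, s_next=1, done=True)

# Moving right at the junction leads to the corridor with no immediate reward,
# but the corridor's learned value now propagates back to the junction.
junction_value = q_learning_update(q, s=0, a=1, r=0.0, s_next=1, done=False)
```

After these two updates, moving right at the junction already has a higher value than moving left, even though the junction itself never produced a reward.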

State–action–reward–state–action

State–action–reward–state–action (SARSA) is an algorithm for learning a Markov decision process policy, used in the reinforcement learning area of machine learning. It was proposed by Rummery and Niranjan in a technical note with the name "Modified Connectionist Q-Learning" (MCQ-L). The alternative name SARSA, proposed by Rich Sutton, was only mentioned as a footnote. This name reflects the fact that the main function for updating the Q-value depends on the current state of the agent "S1", the action the agent chooses "A1", the reward "R2" the agent gets for choosing this action, the state "S2" that the agent enters after taking that action, and finally the next action "A2" the agent chooses in its new state. The acronym for the quintuple (S_t, A_t, R_{t+1}, S_{t+1}, A_{t+1}) is SARSA. Some authors use a slightly different convention and write the quintuple (S_t, A_t, R_t, S_{t+1}, A_{t+1}), depending on which time step the reward is formally assigned. The rest of the article uses the form ...
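The role of each element of the quintuple can be seen in the standard tabular SARSA update, sketched below. The table layout and the values of `alpha` and `gamma` are assumptions for illustration; the reward is passed under the name `r` without committing to either indexing convention.

```python
def sarsa_update(q, s, a, r, s_next, a_next, alpha=0.5, gamma=0.5):
    """Update q[s][a] in place using the quintuple (s, a, r, s_next, a_next)."""
    # On-policy target: use the value of the action actually chosen in s_next,
    # not the maximum over actions.
    td_target = r + gamma * q[s_next][a_next]
    q[s][a] += alpha * (td_target - q[s][a])
    return q[s][a]


# Two states, two actions. In state 1, action 1 looks better than action 0,
# but the agent's policy happened to pick action 0 as its next action.
q = [[0.0, 0.0], [1.0, 4.0]]
updated = sarsa_update(q, s=0, a=0, r=1.0, s_next=1, a_next=0)
```

Here the target is 1.0 + 0.5 * 1.0 = 1.5, so the entry moves halfway to 1.5. A target built from the best next action (value 4.0) would have been larger, which is the practical difference between this on-policy update and an update that maximizes over next actions.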

Temporal Difference Learning

Temporal difference (TD) learning refers to a class of model-free reinforcement learning methods which learn by bootstrapping from the current estimate of the value function. These methods sample from the environment, like Monte Carlo methods, and perform updates based on current estimates, like dynamic programming methods. While Monte Carlo methods only adjust their estimates once the final outcome is known, TD methods adjust predictions to match later, more accurate, predictions about the future before the final outcome is known. This is a form of bootstrapping, as illustrated with the following example: Suppose you wish to predict the weather for Saturday, and you have some model that predicts Saturday's weather, given the weather of each day in the week. In the standard case, you would wait until Saturday and then adjust all your models. However, when it is, for example, Friday, you should have a pretty good idea of what the weather would be on Saturday – and thus be able ...
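Bootstrapping can be sketched with the TD(0) prediction update, in which each state's estimate is nudged toward the reward plus the current estimate of the next state. The two-step chain, the state names, and the step size below are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def td0_update(values, state, reward, next_state, alpha=0.5, gamma=1.0):
    """Move values[state] toward reward + gamma * values[next_state]."""
    td_error = reward + gamma * values[next_state] - values[state]
    values[state] += alpha * td_error
    return td_error


# A chain A -> B -> end, with a reward of 1 only on the final transition.
values = {"A": 0.0, "B": 0.0, "end": 0.0}
episode = [("A", 0.0, "B"), ("B", 1.0, "end")]

# Replay the same episode twice, updating after every single transition.
for _ in range(2):
    for state, reward, next_state in episode:
        td0_update(values, state, reward, next_state)
```

On the second pass, state A is updated from B's estimate alone, before the final reward of that pass has been seen. A Monte Carlo method would instead wait for the end of the episode before adjusting any estimate.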

Value Function

The value function of an optimization problem gives the value attained by the objective function at a solution, while only depending on the parameters of the problem. In a controlled dynamical system, the value function represents the optimal payoff of the system over the interval [t, t_1] when started at the time-t state variable x(t)=x. If the objective function represents some cost that is to be minimized, the value function can be interpreted as the cost to finish the optimal program, and is thus referred to as the "cost-to-go function." In an economic context, where the objective function usually represents utility, the value function is conceptually equivalent to the indirect utility function. In a problem of optimal control, the value function is defined as the supremum of the objective function taken over the set of admissible controls. Given (t_0, x_0) \in [0, t_1] \times \mathbb{R}^d, a typical optimal control problem is to: \text{maximize} \quad J(t_0, x_0; u) = \int_{t_0}^{t_1} I(t, x(t), u(t)) \, ...

Probability Distribution

In probability theory and statistics, a probability distribution is a function that gives the probabilities of occurrence of possible events for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if X is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of X would take the value 0.5 (1 in 2 or 1/2) for X = heads, and 0.5 for X = tails (assuming that the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names.

Introduction

A prob ...

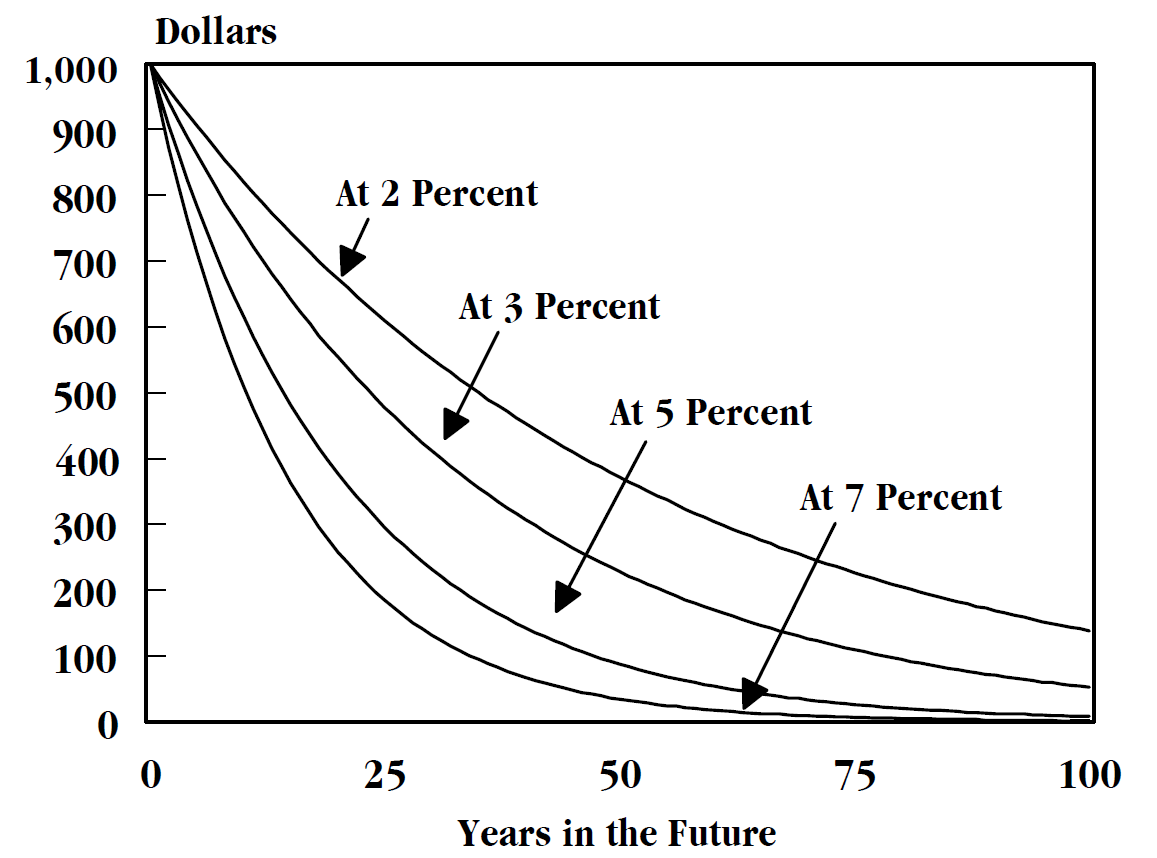

Discount Factor

In finance, discounting is a mechanism in which a debtor obtains the right to delay payments to a creditor, for a defined period of time, in exchange for a charge or fee (see "Time Value", "Discount", "Discount Yield", "Compound Interest", "Efficient Market", "Market Value" and "Opportunity Cost" in Downes, J. and Goodman, J. E., Dictionary of Finance and Investment Terms, Barron's Financial Guides, 2003). Essentially, the party that owes money in the present purchases the right to delay the payment until some future date (see "Discount", "Compound Interest", "Efficient Markets Hypothesis", "Efficient Resource Allocation", "Pareto-Optimality", "Price", "Price Mechanism" and "Efficient Market" in Black, John, Oxford Dictionary of Economics, Oxford University Press, 2002). This transaction is based on the fact that most people prefer current interest to delayed interest because of mortality effects, impatience effects, and salience effects. The discount, or charge, is the dif ...

Gradient Descent

Gradient descent is a method for unconstrained mathematical optimization. It is a first-order iterative algorithm for minimizing a differentiable multivariate function. The idea is to take repeated steps in the opposite direction of the gradient (or approximate gradient) of the function at the current point, because this is the direction of steepest descent. Conversely, stepping in the direction of the gradient will lead to a trajectory that maximizes that function; the procedure is then known as gradient ascent. It is particularly useful in machine learning for minimizing the cost or loss function. Gradient descent should not be confused with local search algorithms, although both are iterative methods for optimization. Gradient descent is generally attributed to Augustin-Louis Cauchy, who first suggested it in 1847. Jacques Hadamard independently proposed a similar method in 1907. Its convergence properties for non-linear optimization problems were first studied by Has ...
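The repeated step against the gradient can be sketched as follows. The objective f(x) = (x - 3)^2, the fixed learning rate, and the step count are assumptions chosen for illustration; practical uses typically involve multivariate functions and tuned or adaptive step sizes.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from x0."""
    x = x0
    for _ in range(steps):
        x = x - learning_rate * grad(x)
    return x


def grad_f(x):
    """Gradient of the example objective f(x) = (x - 3)^2."""
    return 2.0 * (x - 3.0)


# Starting from 0, the iterates approach the minimizer x = 3.
x_min = gradient_descent(grad_f, x0=0.0)
```

With this learning rate each step shrinks the distance to the minimizer by a constant factor of 0.8. Flipping the sign of the update would turn the same loop into gradient ascent.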

Unbiased Estimator

In statistics, the bias of an estimator (or bias function) is the difference between this estimator's expected value and the true value of the parameter being estimated. An estimator or decision rule with zero bias is called unbiased. In statistics, "bias" is a property of an estimator. Bias is a distinct concept from consistency: consistent estimators converge in probability to the true value of the parameter, but may be biased or unbiased (see bias versus consistency for more). All else being equal, an unbiased estimator is preferable to a biased estimator, although in practice, biased estimators (with generally small bias) are frequently used. When a biased estimator is used, bounds of the bias are calculated. A biased estimator may be used for various reasons: because an unbiased estimator does not exist without further assumptions about a population; because an estimator is difficult to compute (as in u ...
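The definition of bias as expected value minus true value can be checked exactly on a tiny case. The sketch below uses sample variance as the estimator, a choice made for this illustration rather than taken from the text above, and enumerates every equally likely sample of size 2 drawn with replacement from the population {0, 1}.

```python
from itertools import product


def variance(sample, ddof):
    """Sum of squared deviations from the mean, divided by (n - ddof)."""
    n = len(sample)
    mean = sum(sample) / n
    return sum((x - mean) ** 2 for x in sample) / (n - ddof)


def expected_estimate(population, n, ddof):
    """Average the estimator over all equally likely samples of size n."""
    samples = list(product(population, repeat=n))
    return sum(variance(s, ddof) for s in samples) / len(samples)


population = [0, 1]
true_var = variance(population, 0)  # variance of a single fair draw

# Bias = expected value of the estimator minus the true parameter value.
bias_divide_by_n = expected_estimate(population, 2, 0) - true_var
bias_divide_by_n_minus_1 = expected_estimate(population, 2, 1) - true_var
```

Dividing by n underestimates the variance on average (bias of -0.125 here), while dividing by n - 1 gives zero bias in this setting.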

Automatic Differentiation

In mathematics and computer algebra, automatic differentiation (auto-differentiation, autodiff, or AD), also called algorithmic differentiation, computational differentiation, and differentiation arithmetic [Hend Dawood and Nefertiti Megahed (2023). Automatic differentiation of uncertainties: an interval computational differentiation for first and higher derivatives with implementation. PeerJ Computer Science 9:e1301. https://doi.org/10.7717/peerj-cs.1301; Hend Dawood and Nefertiti Megahed (2019). A Consistent and Categorical Axiomatization of Differentiation Arithmetic Applicable to First and Higher Order Derivatives. Punjab University Journal of Mathematics 51(11), pp. 77-100. doi: 10.5281/zenodo.3479546. http://doi.org/10.5281/zenodo.3479546], is a set of techniques to evaluate the partial derivative of a function specified by a computer program. Automatic differentiation is a subtle and central tool to automate the simultaneous computation of the numerical values of arbitrarily ...
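One common way to evaluate the derivative of a function given as a program is forward-mode differentiation with dual numbers, sketched below. The `Dual` class and the `derivative` helper are hypothetical names for this example, and only addition and multiplication are supported; a real library covers many more operations.

```python
class Dual:
    """A value paired with its derivative with respect to the input."""

    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    @staticmethod
    def _lift(x):
        # Plain numbers are constants, so their derivative is zero.
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        other = Dual._lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual._lift(other)
        # Product rule: (uv)' = u'v + uv'.
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def derivative(f, x):
    """Evaluate f'(x) by seeding the input with derivative 1."""
    return f(Dual(x, 1.0)).deriv


def f(x):
    return x * x + 3 * x


slope = derivative(f, 2.0)  # f'(x) = 2x + 3
```

The derivative is carried alongside the value through every arithmetic operation, so the result is exact up to floating-point rounding rather than a finite-difference approximation.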