
AIXI

AIXI is a theoretical mathematical formalism for artificial general intelligence. It combines Solomonoff induction with sequential decision theory. AIXI was first proposed by Marcus Hutter in 2000, and several results regarding AIXI are proved in Hutter's 2005 book Universal Artificial Intelligence. AIXI is a reinforcement learning (RL) agent: it maximizes the expected total reward received from the environment. Intuitively, it simultaneously considers every computable hypothesis (or environment). In each time step, it looks at every possible program and evaluates how much reward that program generates depending on the next action taken. The promised rewards are then weighted by the subjective belief that this program constitutes the true environment. This belief is computed from the length of the program: longer programs are considered less likely, in line with Occam's razor. AIXI then selects the action that has the highest expected total reward in the weighted sum of ...
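The weighting idea described above can be illustrated with a toy sketch. This is not AIXI itself: it assumes a tiny hand-written list of hypothetical environments, each given as a (program length in bits, one-step reward function) pair, weights each one by 2^-length, and looks only a single step ahead. The names `choose_action` and `hypotheses` are illustrative assumptions.

```python
def choose_action(hypotheses, actions):
    """Pick the action with the highest belief-weighted reward.

    hypotheses: list of (program_length_bits, reward_fn) pairs (hypothetical
    stand-ins for candidate environment programs).
    """
    # Shorter programs get exponentially more weight (Occam's razor).
    weights = [2.0 ** -length for length, _ in hypotheses]
    total = sum(weights)

    def expected_reward(action):
        # Weighted sum of the reward each hypothesis promises for this action.
        return sum(
            w * reward_fn(action)
            for w, (_, reward_fn) in zip(weights, hypotheses)
        ) / total

    return max(actions, key=expected_reward)


# Three toy hypotheses about which action the environment rewards.
hypotheses = [
    (3, lambda a: 1.0 if a == "left" else 0.0),   # short program: "left" pays
    (5, lambda a: 1.0 if a == "right" else 0.0),  # longer program: "right" pays
    (6, lambda a: 0.5),                           # longest: reward is constant
]
best = choose_action(hypotheses, ["left", "right"])
print(best)
```

Because the shortest hypothesis carries the most weight, the sketch prefers the action that hypothesis rewards.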

Mathematical Logic

Mathematical logic is the study of formal logic within mathematics. Major subareas include model theory, proof theory, set theory, and recursion theory (also known as computability theory). Research in mathematical logic commonly addresses the mathematical properties of formal systems of logic such as their expressive or deductive power. However, it can also include uses of logic to characterize correct mathematical reasoning or to establish foundations of mathematics. Since its inception, mathematical logic has both contributed to and been motivated by the study of foundations of mathematics. This study began in the late 19th century with the development of axiomatic frameworks for geometry, arithmetic, and analysis. In the early 20th century it was shaped by David Hilbert's program to prove the consistency of foundational theories. Results of Kurt Gödel, Gerhard Gentzen, and others provided partial resolution to th ...

Universal Turing Machine

In computer science, a universal Turing machine (UTM) is a Turing machine capable of computing any computable sequence, as described by Alan Turing in his seminal paper "On Computable Numbers, with an Application to the Entscheidungsproblem". Common sense might say that a universal machine is impossible, but Turing proves that it is possible. He suggested that we may compare a human in the process of computing a real number to a machine which is only capable of a finite number of conditions, which will be called "m-configurations". He then described the operation of such a machine. Turing introduced the idea of such a machine in 1936–1937. Martin Davis makes a persuasive argument that Turing's conception of what is now known as "the stored-program computer", of placing the "action table" (the instructions for the machine) in the same "memory" as the input data, strongly influenced John von Neumann's conception of the first Amer ...
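The notion of an "action table" that is itself just data can be sketched with a small simulator. This is a minimal illustration under assumed conventions, not Turing's own construction: the table format `(state, symbol) -> (write, move, next_state)`, the state names `start` and `halt`, and the blank symbol `_` are all choices made here for the example.

```python
def run_turing_machine(table, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape machine whose action table is passed in as plain data."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = table[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    lo, hi = min(cells), max(cells)
    # Read the tape back left to right and trim surrounding blanks.
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# A hypothetical action table that flips every bit, then halts on a blank.
flip_bits = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}
result = run_turing_machine(flip_bits, "0110")
print(result)
```

The same `run_turing_machine` function executes any table handed to it, which is the sense in which a single machine can run the instructions of another.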

Gödel Machine

A Gödel machine is a hypothetical self-improving computer program that solves problems in an optimal way. It uses a recursive self-improvement protocol in which it rewrites its own code when it can prove the new code provides a better strategy. The machine was invented by Jürgen Schmidhuber (first proposed in 2003), but is named after Kurt Gödel who inspired the mathematical theories. The Gödel machine is often discussed when dealing with issues of meta-learning, also known as "learning to learn." Applications include automating human design decisions and transfer of knowledge between multiple related tasks, and may lead to design of more robust and general learning architectures. Though theoretically possible, no full implementation has been created. The Gödel machine is often compared with Marcus Hutter's AIXI, another formal specification for an artificial general intelligence. Schmidhuber points out that the Gödel machine could start out by implementing AIXItl as its ...

Pac-Man

Pac-Man, originally called Puck Man in Japan, is a 1980 maze video game developed and published by Namco for arcades. In North America, the game was released by Midway Manufacturing as part of its licensing agreement with Namco America. The player controls Pac-Man, who must eat all the dots inside an enclosed maze while avoiding four colored ghosts. Eating large flashing dots called "Power Pellets" causes the ghosts to temporarily turn blue, allowing Pac-Man to also eat the ghosts for bonus points. Game development began in early 1979, led by Toru Iwatani with a nine-man team. Iwatani wanted to create a game that could appeal to women as well as men, because most video games of the time had themes that appealed to traditionally masculine interests, such as war or sports. Although the inspiration for the Pac-Man character was the image of a pizza with a slice removed, Iwatani has said he rounded out the Japanese character for mouth, kuchi (口). The in-game characters were made t ...

Partially Observable System

A partially observable system is one in which the entire state of the system is not fully visible to an external sensor. In a partially observable system the observer may utilise a memory system in order to add information to the observer's understanding of the system (Peter Norvig and Sebastian Thrun, Udacity, Introduction to Artificial Intelligence). An example of a partially observable system would be a card game in which some of the cards are discarded into a pile face down. In this case the observer is only able to view their own cards and potentially those of the dealer. They are not able to view the face-down (used) cards, nor the cards that will be dealt at some stage in the future. A memory system can be used to remember the previously dealt cards that are now on the used pile. This adds to the total sum of knowledge that the observer can use to make decisions. In contrast, a fully observable system would be that of chess ...

Context Tree Weighting

The context tree weighting method (CTW) is a lossless compression and prediction algorithm. The CTW algorithm is among the very few such algorithms that offer both theoretical guarantees and good practical performance. The CTW algorithm is an “ensemble method”, mixing the predictions of many underlying variable order Markov models, where each such model is constructed using zero-order conditional probability estimators.
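As a rough illustration of what a zero-order conditional probability estimator looks like, the sketch below uses the Krichevsky-Trofimov (KT) estimator for binary data. The excerpt does not name a specific estimator, so the choice of KT and the function names here are assumptions; the full CTW tree mixing is not shown.

```python
def kt_prob_one(zeros, ones):
    """KT estimate of P(next bit = 1) after seeing the given bit counts."""
    return (ones + 0.5) / (zeros + ones + 1.0)


def kt_sequence_probability(bits):
    """Probability the KT estimator assigns to a whole bit sequence."""
    zeros = ones = 0
    prob = 1.0
    for bit in bits:
        p_one = kt_prob_one(zeros, ones)
        prob *= p_one if bit == 1 else 1.0 - p_one
        # Update the counts only after predicting the current bit.
        if bit == 1:
            ones += 1
        else:
            zeros += 1
    return prob


print(kt_sequence_probability([0, 0, 0, 0]))
print(kt_sequence_probability([0, 1, 0, 1]))
```

A sequence with a strong bias receives higher probability than a balanced one of the same length, which is what makes such an estimator useful for compression.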

Monte Carlo Method

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. The name comes from the Monte Carlo Casino in Monaco, where the primary developer of the method, mathematician Stanisław Ulam, was inspired by his uncle's gambling habits. Monte Carlo methods are mainly used in three distinct problem classes: optimization, numerical integration, and generating draws from a probability distribution. They can also be used to model phenomena with significant uncertainty in inputs, such as calculating the risk of a nuclear power plant failure. Monte Carlo methods are often implemented using computer simulations, and they can provide approximate solutions to problems that are otherwise intractable or too complex to analyze mathematically. Monte Carlo methods are widely used in va ...
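A common textbook illustration of repeated random sampling applied to a deterministic quantity is estimating π from the fraction of random points in the unit square that land inside the quarter circle. The sketch below is one such example; the function name `estimate_pi` and the fixed seed are assumptions made for reproducibility.

```python
import random


def estimate_pi(num_samples, seed=0):
    """Estimate pi by sampling points uniformly in the unit square."""
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:  # point falls inside the quarter circle
            inside += 1
    # The quarter circle covers pi/4 of the unit square.
    return 4.0 * inside / num_samples


print(estimate_pi(100_000))
```

The error of such an estimate shrinks roughly with the square root of the number of samples, so more accuracy requires many more draws.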

Undecidable Problem

In computability theory and computational complexity theory, an undecidable problem is a decision problem for which it is proved to be impossible to construct an algorithm that always leads to a correct yes-or-no answer. The halting problem is an example: it can be proven that there is no algorithm that correctly determines whether an arbitrary program eventually halts when run. A decision problem is a question which, for every input in some infinite set of inputs, requires a "yes" or "no" answer. Those inputs can be numbers (for example, the decision problem "is the input a prime number?") or values of some other kind, such as strings of a formal language. The formal representation of a decision problem is a subset of the natural numbers. For decision problems on natural numbers, the set consists of those numbers that the decision problem answers "yes" to. For example, the decision problem "is the input even?" is formalized as the set of even numbers. A decision pr ...

Jan Leike

Jan Leike is an AI alignment researcher who has worked at DeepMind and OpenAI. He joined Anthropic in May 2024. Leike obtained his undergraduate degree from the University of Freiburg in Germany. After earning a master's degree in computer science, he pursued a PhD in machine learning at the Australian National University under the supervision of Marcus Hutter. Leike completed a six-month postdoctoral fellowship at the Future of Humanity Institute before joining DeepMind to focus on empirical AI safety research, where he collaborated with Shane Legg. In 2021, Leike joined OpenAI. In June 2023, he and Ilya Sutskever became the co-leaders of the newly introduced "superalignment" project, which aimed to determine how to align future artificial superintelligences within four years to ensure their safety. This project involved automating AI alignment research using relatively advanced AI systems. At the time, Sutskever was OpenAI's Chief Scient ...

Pareto Optimality

In welfare economics, a Pareto improvement formalizes the idea of an outcome being "better in every possible way". A change is called a Pareto improvement if it leaves at least one person in society better off without leaving anyone else worse off than they were before. A situation is called Pareto efficient or Pareto optimal if all possible Pareto improvements have already been made; in other words, there are no longer any ways left to make one person better off without making some other person worse off. In social choice theory, the same concept is sometimes called the unanimity principle, which says that if everyone in a society (non-strictly) prefers A to B, society as a whole also non-strictly prefers A to B. The Pareto front consists of all Pareto-efficient situations. In addition to the context of efficiency in allocation, the concept of Pareto efficiency also arises in the context of efficiency in production vs. x-inefficiency: a set of outputs of go ...
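The definitions above can be made concrete with a small sketch that filters a finite list of outcomes down to its Pareto front. It assumes each outcome is a tuple of utilities, one per person, with higher values being better; the names `dominates` and `pareto_front` are illustrative, and only the listed outcomes are treated as feasible.

```python
def dominates(a, b):
    """True if moving from b to a is a Pareto improvement."""
    no_one_worse = all(x >= y for x, y in zip(a, b))
    someone_better = any(x > y for x, y in zip(a, b))
    return no_one_worse and someone_better


def pareto_front(outcomes):
    """Keep the outcomes that no other listed outcome dominates."""
    return [
        o for o in outcomes
        if not any(dominates(other, o) for other in outcomes)
    ]


# Utilities for two people under five hypothetical allocations.
outcomes = [(3, 1), (2, 2), (1, 3), (1, 1), (2, 1)]
front = pareto_front(outcomes)
print(front)
```

Here `(1, 1)` and `(2, 1)` are dropped because another allocation makes someone better off without making anyone worse off, while the remaining three cannot be improved for one person without hurting the other.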

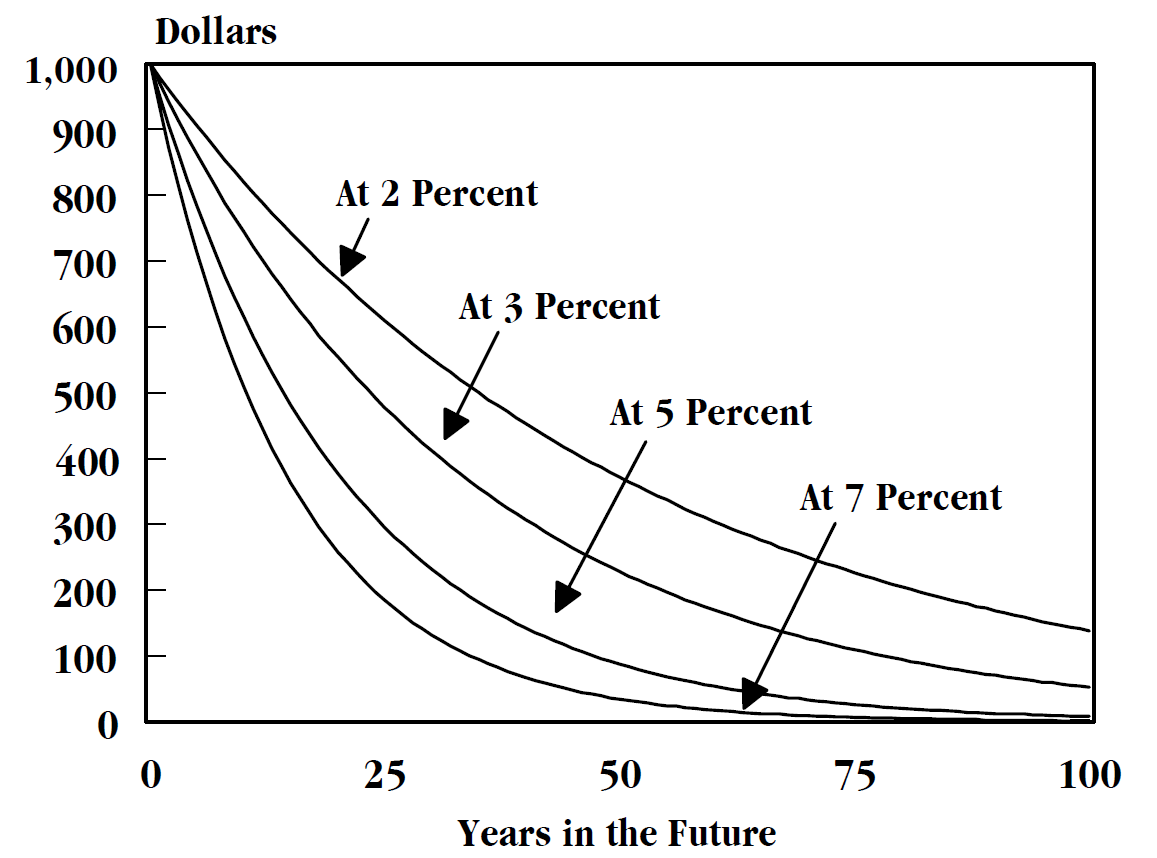

Discounting

In finance, discounting is a mechanism in which a debtor obtains the right to delay payments to a creditor, for a defined period of time, in exchange for a charge or fee (see "Time Value", "Discount", "Discount Yield", "Compound Interest", "Efficient Market", "Market Value" and "Opportunity Cost" in Downes, J. and Goodman, J. E., Dictionary of Finance and Investment Terms, Barron's Financial Guides, 2003). Essentially, the party that owes money in the present purchases the right to delay the payment until some future date (see "Discount", "Compound Interest", "Efficient Markets Hypothesis", "Efficient Resource Allocation", "Pareto-Optimality", "Price", "Price Mechanism" and "Efficient Market" in Black, John, Oxford Dictionary of Economics, Oxford University Press, 2002). This transaction is based on the fact that most people prefer current interest to delayed interest because of mortality effects, impatience effects, and Motivational sa ...

Mixture (probability)

In probability theory and statistics, a mixture is a probabilistic combination of two or more probability distributions. The concept arises mostly in two contexts:
* A mixture defining a new probability distribution from some existing ones, as in a mixture distribution or a compound distribution. Here a major problem often is to derive the properties of the resulting distribution.
* A mixture used as a statistical model, such as is often used for statistical classification. The model may represent the population from which observations arise as a mixture of several components, and the problem is that of a mixture model, in which the task is to infer from which of a discrete set of sub-populations each observation originated.
See also: Mixture distribution, Compound distribution, Mixture model, Statistical classification, Cluster analysis, Giry monad.
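A mixture distribution in the first sense can be sketched in a few lines. The example assumes normal components described by hypothetical `(weight, mean, std)` triples whose weights sum to one: the mixture density is the weighted sum of the component densities, and a draw is made by first picking a component according to its weight.

```python
import math
import random


def normal_pdf(x, mean, std):
    """Density of a normal distribution at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def mixture_pdf(x, components):
    """Weighted sum of component densities; weights are assumed to sum to 1."""
    return sum(w * normal_pdf(x, mean, std) for w, mean, std in components)


def sample_mixture(components, rng):
    """Pick a component by weight, then draw from that component."""
    weights = [w for w, _, _ in components]
    _, mean, std = rng.choices(components, weights=weights, k=1)[0]
    return rng.gauss(mean, std)


components = [(0.3, -2.0, 1.0), (0.7, 3.0, 0.5)]
rng = random.Random(0)
draws = [sample_mixture(components, rng) for _ in range(5)]
print(mixture_pdf(0.0, components), draws)
```

Inferring which component produced each draw, the second sense described above, is the harder mixture-model problem and is not attempted here.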