In statistics, regression toward the mean (also called reversion to the mean, and reversion to mediocrity) is the fact that if one sample of a random variable is extreme, the next sampling of the same random variable is likely to be closer to its mean. Furthermore, when many random variables are sampled and the most extreme results are intentionally picked out, it refers to the fact that (in many cases) a second sampling of these picked-out variables will result in "less extreme" results, closer to the initial mean of all of the variables.

Mathematically, the strength of this "regression" effect is dependent on whether or not all of the random variables are drawn from the same distribution, or if there are genuine differences in the underlying distributions for each random variable. In the first case, the "regression" effect is statistically likely to occur, but in the second case, it may occur less strongly or not at all.

Regression toward the mean is thus a useful concept to consider when designing any scientific experiment, data analysis, or test that intentionally selects the "most extreme" events. It indicates that follow-up checks may be useful in order to avoid jumping to false conclusions about these events: they may be "genuine" extreme events, a completely meaningless selection due to statistical noise, or a mix of the two cases.

Conceptual examples

Simple example: students taking a test

Consider a class of students taking a 100-item true/false test on a subject. Suppose that all students choose randomly on all questions. Then, each student's score would be a realization of one of a set of independent and identically distributed random variables, with an expected mean of 50. Naturally, some students will score substantially above 50 and some substantially below 50 just by chance. If one selects only the top scoring 10% of the students and gives them a second test on which they again choose randomly on all items, the mean score would again be expected to be close to 50. Thus the mean of these students would "regress" all the way back to the mean of all students who took the original test. No matter what a student scores on the original test, the best prediction of their score on the second test is 50.

If choosing answers to the test questions was not random – i.e. if there were no luck (good or bad) or random guessing involved in the answers supplied by the students – then all students would be expected to score the same on the second test as they scored on the original test, and there would be no regression toward the mean.

Most realistic situations fall between these two extremes: for example, one might consider exam scores as a combination of skill and luck. In this case, the subset of students scoring above average would be composed of those who were skilled and had not especially bad luck, together with those who were unskilled, but were extremely lucky. On a retest of this subset, the unskilled will be unlikely to repeat their lucky break, while the skilled will have a second chance to have bad luck. Hence, those who did well previously are, on average, unlikely to do quite as well on the second test, even though their skill is unchanged.

The following is an example of this second kind of regression toward the mean. A class of students takes two editions of the same test on two successive days. It has frequently been observed that the worst performers on the first day will tend to improve their scores on the second day, and the best performers on the first day will tend to do worse on the second day. The phenomenon occurs because student scores are determined in part by underlying ability and in part by chance. For the first test, some will be lucky, and score more than their ability, and some will be unlucky and score less than their ability. Some of the lucky students on the first test will be lucky again on the second test, but more of them will have (for them) average or below average scores. Therefore, a student who was lucky and over-performed their ability on the first test is more likely to have a worse score on the second test than a better score. Similarly, students who unluckily score less than their ability on the first test will tend to see their scores increase on the second test. The larger the influence of luck in producing an extreme event, the less likely the luck will repeat itself in multiple events.
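The two-day test described above can be sketched as a small simulation. This is only an illustration under assumed numbers: each score is modelled as a fixed skill plus fresh, independent luck on each day, both normally distributed with a standard deviation of 10 around a mean of 50. The function names and all parameters are hypothetical, not taken from any study.

```python
import random
import statistics

def simulate_two_tests(n_students=10_000, skill_sd=10.0, luck_sd=10.0, seed=0):
    """Return (day1, day2) score lists; each score = fixed skill + fresh luck."""
    rng = random.Random(seed)
    skill = [rng.gauss(50.0, skill_sd) for _ in range(n_students)]
    day1 = [s + rng.gauss(0.0, luck_sd) for s in skill]
    day2 = [s + rng.gauss(0.0, luck_sd) for s in skill]
    return day1, day2

def group_means(day1, day2, fraction=0.10):
    """Mean day-1 and day-2 scores of the top and bottom `fraction` on day 1."""
    order = sorted(range(len(day1)), key=lambda i: day1[i])
    k = int(len(day1) * fraction)
    bottom, top = order[:k], order[-k:]
    mean = statistics.fmean
    return {
        "top": (mean(day1[i] for i in top), mean(day2[i] for i in top)),
        "bottom": (mean(day1[i] for i in bottom), mean(day2[i] for i in bottom)),
    }

day1, day2 = simulate_two_tests()
result = group_means(day1, day2)
overall = statistics.fmean(day1)
print("overall mean:", round(overall, 1))
print("top 10% (day 1, day 2):", result["top"])
print("bottom 10% (day 1, day 2):", result["bottom"])
```

Under these assumptions the day-1 top group scores lower on day 2 and the day-1 bottom group scores higher, yet both stay on their own side of the overall mean, because only the luck component is redrawn.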

Other examples

If your favourite sports team won the championship last year, what does that mean for their chances of winning next season? To the extent this result is due to skill (the team is in good condition, with a top coach, etc.), their win signals that it is more likely they will win again next year. But the greater the extent this is due to luck (other teams embroiled in a drug scandal, favourable draw, draft picks turned out to be productive, etc.), the less likely it is they will win again next year. If a business organisation has a highly profitable quarter, despite the underlying reasons for its performance being unchanged, it is likely to do less well the next quarter. Baseball players who hit well in their rookie season are likely to do worse in their second: the "sophomore slump". Similarly, regression toward the mean is an explanation for the ''Sports Illustrated'' cover jinx: periods of exceptional performance that result in a cover feature are likely to be followed by periods of more mediocre performance, giving the impression that appearing on the cover causes an athlete's decline.

History

Discovery

The concept of regression comes from

The concept of regression comes from genetics

Genetics is the study of genes, genetic variation, and heredity in organisms.Hartl D, Jones E (2005) It is an important branch in biology because heredity is vital to organisms' evolution. Gregor Mendel, a Moravian Augustinian friar work ...

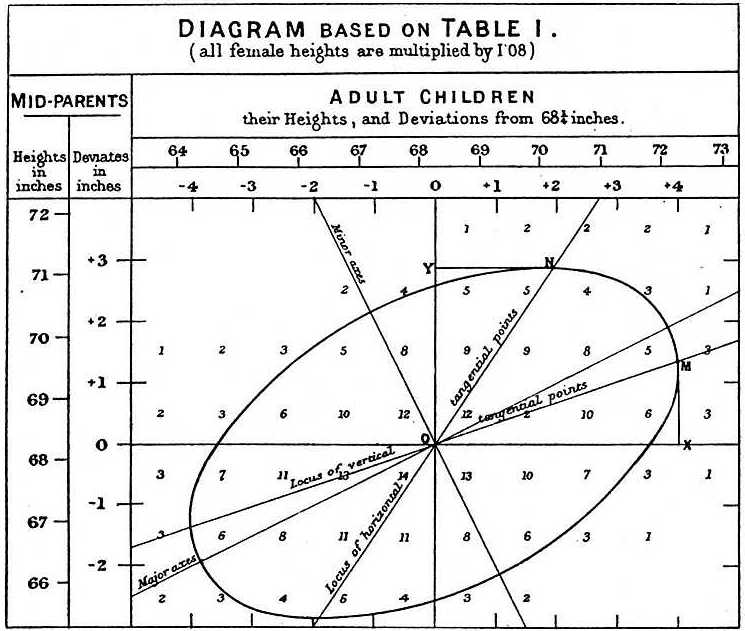

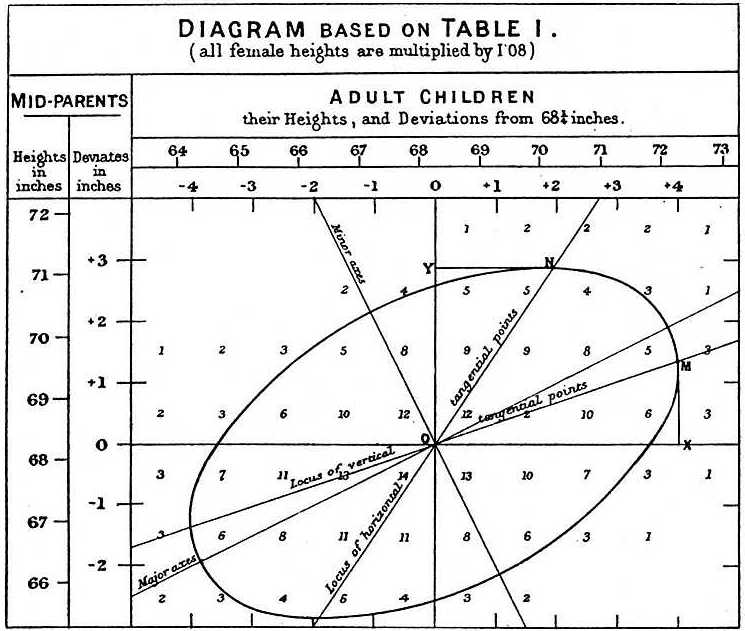

and was popularized by Sir Francis Galton during the late 19th century with the publication of ''Regression towards mediocrity in hereditary stature''. Galton observed that extreme characteristics (e.g., height) in parents are not passed on completely to their offspring. Rather, the characteristics in the offspring ''regress'' toward a ''mediocre'' point (a point which has since been identified as the mean). By measuring the heights of hundreds of people, he was able to quantify regression to the mean, and estimate the size of the effect. Galton wrote that, "the average regression of the offspring is a constant fraction of their respective mid-parental deviations". This means that the difference between a child and its parents for some characteristic is proportional to its parents' deviation from typical people in the population. If its parents are each two inches taller than the averages for men and women, then, on average, the offspring will be shorter than its parents by some factor (which, today, we would call one minus the regression coefficient) times two inches. For height, Galton estimated this coefficient to be about 2/3: the height of an individual will measure around a midpoint that is two thirds of the parents' deviation from the population average.
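As a worked instance of this arithmetic, take the coefficient of about 2/3 and parents who are each two inches above average, treating the mid-parental deviation as two inches. The symbols $d_{\text{parent}}$ and $d_{\text{child}}$ (deviations from the population average) are introduced here only for illustration:

```latex
\begin{aligned}
d_{\text{child}} &\approx \tfrac{2}{3}\, d_{\text{parent}}
  = \tfrac{2}{3} \times 2\ \text{in} \approx 1.33\ \text{in}, \\
d_{\text{parent}} - d_{\text{child}} &\approx \left(1 - \tfrac{2}{3}\right) \times 2\ \text{in}
  \approx 0.67\ \text{in}.
\end{aligned}
```

So the expected offspring is still taller than average, but by about two thirds of an inch less than its parents.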

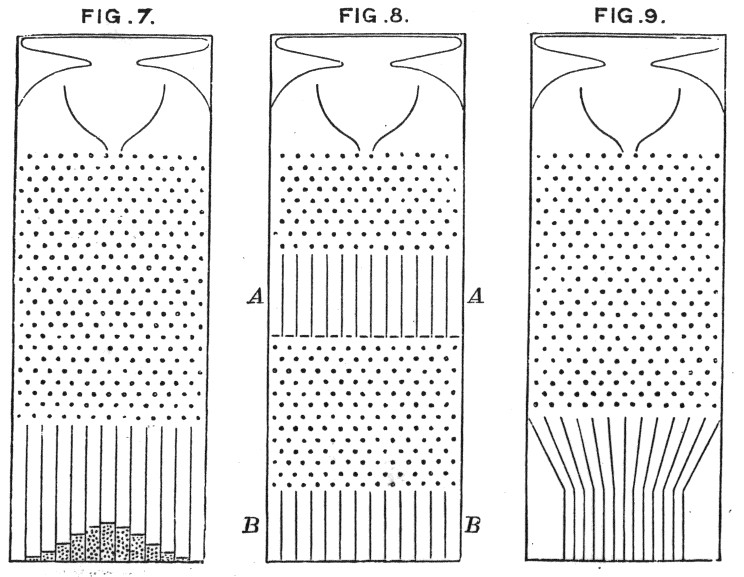

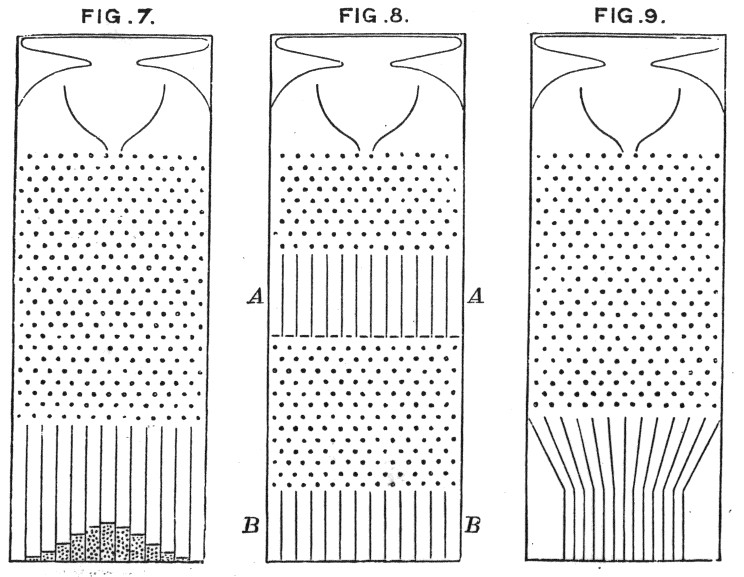

Galton also published these results using the simpler example of pellets falling through a Galton board to form a normal distribution centred directly under their entrance point. These pellets might then be released down into a second gallery corresponding to a second measurement. Galton then asked the reverse question: "From where did these pellets come?" The answer was not ''on average directly above''. Rather it was ''on average, more towards the middle'', for the simple reason that there were more pellets above it towards the middle that could wander left than there were in the left extreme that could wander to the right, inwards.
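Galton's reverse question can be explored with a rough simulation. The sketch below assumes an idealised board in which each pellet takes independent left or right unit steps, ten rows in each gallery; the function names, row counts, and the chosen bin are hypothetical choices for illustration.

```python
import random
import statistics

def drop_pellets(n_pellets=50_000, rows_first=10, rows_second=10, seed=1):
    """Each row moves a pellet one step left (-1) or right (+1).

    Returns a list of (position after first gallery, final position).
    """
    rng = random.Random(seed)
    pellets = []
    for _ in range(n_pellets):
        first = sum(rng.choice((-1, 1)) for _ in range(rows_first))
        final = first + sum(rng.choice((-1, 1)) for _ in range(rows_second))
        pellets.append((first, final))
    return pellets

def mean_origin(pellets, final_position):
    """Average first-gallery position of pellets that ended at final_position."""
    origins = [first for first, final in pellets if final == final_position]
    return statistics.fmean(origins)

pellets = drop_pellets()
origin_of_left_bin = mean_origin(pellets, final_position=-6)
print("pellets ending at -6 came, on average, from", round(origin_of_left_bin, 2))
```

With equal numbers of rows in both galleries, pellets that end six steps left of centre came on average from roughly three steps left of centre, that is, from nearer the middle than the point directly above them.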

Evolving usage of the term

Galton coined the term "regression" to describe an observable fact in the inheritance of multi-factorial quantitative genetic traits: namely that traits of the offspring of parents who lie at the tails of the distribution often tend to lie closer to the centre, the mean, of the distribution. He quantified this trend, and in doing so invented linear regression analysis, thus laying the groundwork for much of modern statistical modelling. Since then, the term "regression" has been used in other contexts, and it may be used by modern statisticians to describe phenomena such as sampling bias which have little to do with Galton's original observations in the field of genetics.

Galton's explanation for the regression phenomenon he observed in biology was stated as follows: "A child inherits partly from his parents, partly from his ancestors. Speaking generally, the further his genealogy goes back, the more numerous and varied will his ancestry become, until they cease to differ from any equally numerous sample taken at haphazard from the race at large." Galton's statement requires some clarification in light of knowledge of genetics: Children receive genetic material from their parents, but hereditary information (e.g. values of inherited traits) from earlier ancestors can be passed through their parents (and may not have been expressed in their parents). The mean for the trait may be nonrandom and determined by selection pressure, but the distribution of values around the mean reflects a normal statistical distribution.

The population-genetic phenomenon studied by Galton is a special case of "regression to the mean"; the term is often used to describe many statistical phenomena in which data exhibit a normal distribution around a mean.

Importance

Regression toward the mean is a significant consideration in the design of experiments.

Take a hypothetical example of 1,000 individuals of a similar age who were examined and scored on the risk of experiencing a heart attack. Statistics could be used to measure the success of an intervention on the 50 who were rated at the greatest risk, as measured by a test with a degree of uncertainty. The intervention could be a change in diet, exercise, or a drug treatment. Even if the interventions are worthless, the test group would be expected to show an improvement on their next physical exam, because of regression toward the mean. The best way to combat this effect is to divide the group randomly into a treatment group that receives the treatment, and a group that does not. The treatment would then be judged effective only if the treatment group improves more than the untreated group.
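The heart-attack example can be sketched numerically. The model below is an assumption made for illustration: each person's measured risk is a stable true risk plus independent measurement noise, both standard normal, and the "treatment" does nothing at all. Variable names are hypothetical.

```python
import random
import statistics

rng = random.Random(42)

# Assumed model: measured risk = stable true risk + independent measurement noise.
true_risk = [rng.gauss(0.0, 1.0) for _ in range(1000)]

def measure(risks):
    """One noisy examination of every individual."""
    return [r + rng.gauss(0.0, 1.0) for r in risks]

exam1 = measure(true_risk)

# Select the 50 people with the highest measured risk at the first exam.
selected = sorted(range(1000), key=lambda i: exam1[i], reverse=True)[:50]

# Randomly split them; the "treatment" in this sketch has no effect whatsoever.
rng.shuffle(selected)
treated, control = selected[:25], selected[25:]

exam2 = measure(true_risk)  # true risk is unchanged between exams

def mean_change(group):
    """Average change in measured risk from exam 1 to exam 2."""
    return statistics.fmean(exam2[i] - exam1[i] for i in group)

treated_change = mean_change(treated)
control_change = mean_change(control)
print("treated change:", round(treated_change, 2))
print("control change:", round(control_change, 2))
```

Both groups show a drop in measured risk even though nothing was done to either, which is why the comparison that matters is the treated group against the randomly chosen untreated group, not each group against its own earlier score.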

Alternatively, a group of disadvantaged children could be tested to identify the ones with most college potential. The top 1% could be identified and supplied with special enrichment courses, tutoring, counseling and computers. Even if the program is effective, their average scores may well be less when the test is repeated a year later. However, in these circumstances it may be considered unethical to have a control group of disadvantaged children whose special needs are ignored. A mathematical calculation for shrinkage can adjust for this effect, although it will not be as reliable as the control group method (see also Stein's example).

The effect can also be exploited for general inference and estimation. The hottest place in the country today is more likely to be cooler tomorrow than hotter, as compared to today. The best performing mutual fund over the last three years is more likely to see relative performance decline than improve over the next three years. The most successful Hollywood actor of this year is more likely to gross less than to gross more on their next movie. The baseball player with the highest batting average by the All-Star break is more likely to have a lower average than a higher average over the second half of the season.

Misunderstandings

The concept of regression toward the mean can be misused very easily.

In the student test example above, it was assumed implicitly that what was being measured did not change between the two measurements. Suppose, however, that the course was pass/fail and students were required to score above 70 on both tests to pass. Then the students who scored under 70 the first time would have no incentive to do well, and might score worse on average the second time. The students just over 70, on the other hand, would have a strong incentive to study and concentrate while taking the test. In that case one might see movement ''away'' from 70, scores below it getting lower and scores above it getting higher. It is possible for changes between the measurement times to augment, offset or reverse the statistical tendency to regress toward the mean.

Statistical regression toward the mean is not a causal phenomenon. A student with the worst score on the test on the first day will not necessarily increase their score substantially on the second day due to the effect. On average, the worst scorers improve, but that is only true because the worst scorers are more likely to have been unlucky than lucky. To the extent that a score is determined randomly, or that a score has random variation or error, as opposed to being determined by the student's academic ability or being a "true value", the phenomenon will have an effect. A classic mistake in this regard was in education. The students that received praise for good work were noticed to do more poorly on the next measure, and the students who were punished for poor work were noticed to do better on the next measure. The educators decided to stop praising and keep punishing on this basis. Such a decision was a mistake, because regression toward the mean is not based on cause and effect, but rather on random error in a natural distribution around a mean.

Although extreme individual measurements regress toward the mean, the second sample of measurements will be no closer to the mean than the first. Consider the students again. Suppose the tendency of extreme individuals is to regress 10% of the way toward the mean of 80, so a student who scored 100 the first day is expected to score 98 the second day, and a student who scored 70 the first day is expected to score 71 the second day. Those expectations are closer to the mean than the first day scores. But the second day scores will vary around their expectations; some will be higher and some will be lower. In addition, individuals that measure very close to the mean should expect to move away from the mean. The effect is the exact reverse of regression toward the mean, and exactly offsets it. So for extreme individuals, we expect the second score to be closer to the mean than the first score, but for ''all'' individuals, we expect the distribution of distances from the mean to be the same on both sets of measurements.
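The claim that extreme scores regress while the overall spread stays the same can be checked with a short simulation. This sketch assumes normally distributed scores with mean 80 and a day-to-day correlation of 0.9 (so expectations regress 10% of the way); the standard deviation of 8, the cut-offs of 12 points and 1 point, and the fact that simulated scores are not capped at 100 are all simplifying assumptions.

```python
import math
import random
import statistics

rng = random.Random(7)
MEAN, SD, R = 80.0, 8.0, 0.9   # R = 0.9: expectations regress 10% of the way

day1 = [rng.gauss(MEAN, SD) for _ in range(50_000)]
# Expected day-2 score is MEAN + R * (day-1 deviation); the added noise
# is sized so that day 2 has the same variance as day 1.
noise_sd = SD * math.sqrt(1.0 - R * R)
day2 = [MEAN + R * (x - MEAN) + rng.gauss(0.0, noise_sd) for x in day1]

spread1 = statistics.pstdev(day1)
spread2 = statistics.pstdev(day2)

def mean_distance(pairs):
    """Average distance from MEAN on day 1 and on day 2 for (day1, day2) pairs."""
    d1 = statistics.fmean(abs(a - MEAN) for a, _ in pairs)
    d2 = statistics.fmean(abs(b - MEAN) for _, b in pairs)
    return d1, d2

pairs = list(zip(day1, day2))
extreme = mean_distance([p for p in pairs if abs(p[0] - MEAN) > 12])
central = mean_distance([p for p in pairs if abs(p[0] - MEAN) < 1])
print("spread day 1 / day 2:", round(spread1, 2), round(spread2, 2))
print("extreme students, distance day 1 / day 2:", extreme)
print("central students, distance day 1 / day 2:", central)
```

In this model the students who were far from 80 on day 1 are closer on day 2, the students who were within a point of 80 are farther away on day 2, and the two movements balance so that the overall spread is essentially unchanged.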

Related to the point above, regression toward the mean works equally well in both directions. We expect the student with the highest test score on the second day to have done worse on the first day. And if we compare the best student on the first day to the best student on the second day, regardless of whether it is the same individual or not, there is no tendency to regress toward the mean going in either direction. We expect the best scores on both days to be equally far from the mean.

Regression fallacies

Many phenomena tend to be attributed to the wrong causes when regression to the mean is not taken into account. An extreme example is Horace Secrist's 1933 book ''The Triumph of Mediocrity in Business'', in which the statistics professor collected mountains of data to prove that the profit rates of competitive businesses tend toward the average over time. In fact, there is no such effect; the variability of profit rates is almost constant over time. Secrist had only described the common regression toward the mean. One exasperated reviewer, Harold Hotelling, likened the book to "proving the multiplication table by arranging elephants in rows and columns, and then doing the same for numerous other kinds of animals".

The calculation and interpretation of "improvement scores" on standardized educational tests in Massachusetts probably provides another example of the regression fallacy. In 1999, schools were given improvement goals. For each school, the Department of Education tabulated the difference in the average score achieved by students in 1999 and in 2000. It was quickly noted that most of the worst-performing schools had met their goals, which the Department of Education took as confirmation of the soundness of their policies. However, it was also noted that many of the supposedly best schools in the Commonwealth, such as Brookline High School (with 18 National Merit Scholarship finalists) were declared to have failed. As in many cases involving statistics and public policy, the issue is debated, but "improvement scores" were not announced in subsequent years and the findings appear to be a case of regression to the mean.

The psychologist Daniel Kahneman, winner of the 2002 Nobel Memorial Prize in Economic Sciences, pointed out that regression to the mean might explain why rebukes can seem to improve performance, while praise seems to backfire.

The regression fallacy is also explained in Rolf Dobelli's ''The Art of Thinking Clearly''.

UK law enforcement policies have encouraged the visible siting of static or mobile speed cameras at accident blackspots. This policy was justified by a perception that there is a corresponding reduction in serious road traffic accidents after a camera is set up. However, statisticians have pointed out that, although there is a net benefit in lives saved, failure to take into account the effects of regression to the mean results in the beneficial effects being overstated.

Statistical analysts have long recognized the effect of regression to the mean in sports; they even have a special name for it: the "sophomore slump". For example, Carmelo Anthony of the NBA's Denver Nuggets had an outstanding rookie season in 2004. It was so outstanding that he could not be expected to repeat it: in 2005, Anthony's numbers had dropped from his rookie season. The reasons for the "sophomore slump" abound, as sports rely on adjustment and counter-adjustment, but luck-based excellence as a rookie is as good a reason as any. Regression to the mean in sports performance may also explain the apparent "''Sports Illustrated'' cover jinx" and the "Madden Curse". John Hollinger has an alternative name for the phenomenon of regression to the mean: the "fluke rule", while Bill James calls it the "Plexiglas Principle".

Because popular lore has focused on regression toward the mean as an account of declining performance of athletes from one season to the next, it has usually overlooked the fact that such regression can also account for improved performance. For example, if one looks at the batting average of Major League Baseball players in one season, those whose batting average was above the league mean tend to regress downward toward the mean the following year, while those whose batting average was below the mean tend to progress upward toward the mean the following year.

Other statistical phenomena

Regression toward the mean simply says that, following an extreme random event, the next random event is likely to be less extreme. In no sense does the future event "compensate for" or "even out" the previous event, though this is assumed in the gambler's fallacy (and the variant law of averages). Similarly, the law of large numbers states that in the long term, the average will tend toward the expected value, but makes no statement about individual trials. For example, following a run of 10 heads in flips of a fair coin (a rare, extreme event), regression to the mean states that the next run of heads will likely be less than 10, while the law of large numbers states that in the long term, this event will likely average out, and the average fraction of heads will tend to 1/2. By contrast, the gambler's fallacy incorrectly assumes that the coin is now "due" for a run of tails to balance out.
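The difference between regression to the mean and the gambler's fallacy can be illustrated with simulated coin flips. The sketch below is an illustration with arbitrary choices: it draws 20 fair flips at a time as the bits of a random integer, keeps only the sequences whose first 10 flips are all heads, and looks at the 10 flips that follow.

```python
import random
import statistics

rng = random.Random(2024)

heads_after_streak = []
for _ in range(500_000):
    bits = rng.getrandbits(20)          # 20 fair coin flips, one per bit
    first_ten = bits & 0b1111111111     # low 10 bits: the first 10 flips
    if first_ten == 0b1111111111:       # all heads (a rare, extreme run)
        next_ten = bits >> 10           # high 10 bits: the following 10 flips
        heads_after_streak.append(bin(next_ten).count("1"))

mean_heads_next = statistics.fmean(heads_after_streak)
print("streaks found:", len(heads_after_streak))
print("mean heads in the next 10 flips:", round(mean_heads_next, 2))
```

The following 10 flips average about 5 heads: far less extreme than 10, as regression to the mean predicts, but not fewer than 5, as the gambler's fallacy would require for the coin to "balance out".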

The opposite effect is regression to the tail, resulting from a distribution with non-vanishing probability density toward infinity.

Definition for simple linear regression of data points

This is the definition of regression toward the mean that closely follows Sir Francis Galton's original usage. Suppose there are ''n'' data points $(x_i, y_i)$, where ''i'' = 1, 2, ..., ''n''. We want to find the equation of the regression line, ''i.e.'' the straight line

: $y = \alpha + \beta x,$

which would provide a "best" fit for the data points. (Note that a straight line may not be the appropriate regression curve for the given data points.) Here the "best" will be understood as in the least-squares approach: such a line that minimizes the sum of squared residuals of the linear regression model. In other words, numbers ''α'' and ''β'' solve the following minimization problem:

: Find $\min_{\alpha,\,\beta} Q(\alpha, \beta)$, where $Q(\alpha, \beta) = \sum_{i=1}^{n} (y_i - \alpha - \beta x_i)^2.$

Using calculus it can be shown that the values of ''α'' and ''β'' that minimize the objective function ''Q'' are

: $\hat\beta = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2} = \frac{\overline{xy} - \bar{x}\,\bar{y}}{\overline{x^2} - \bar{x}^2} = r_{xy} \frac{s_y}{s_x}, \qquad \hat\alpha = \bar{y} - \hat\beta\,\bar{x},$

where $r_{xy}$ is the sample correlation coefficient between ''x'' and ''y'', $s_x$ is the standard deviation of ''x'', and $s_y$ is correspondingly the standard deviation of ''y''. A horizontal bar over a variable means the sample average of that variable. For example:

: $\overline{xy} = \frac{1}{n} \sum_{i=1}^{n} x_i y_i.$

Substituting the above expressions for $\hat\alpha$ and $\hat\beta$ into $y = \alpha + \beta x$ yields fitted values

: $\hat{y} = \hat\alpha + \hat\beta x = \bar{y} + r_{xy} \frac{s_y}{s_x} (x - \bar{x}),$

which yields

: $\frac{\hat{y} - \bar{y}}{s_y} = r_{xy} \frac{x - \bar{x}}{s_x}.$

This shows the role $r_{xy}$ plays in the regression line of standardized data points.

If $-1 < r_{xy} < 1$, then we say that the data points exhibit regression toward the mean. In other words, if linear regression is the appropriate model for a set of data points whose sample correlation coefficient is not perfect, then there is regression toward the mean. The predicted (or fitted) standardized value of ''y'' is closer to its mean than the standardized value of ''x'' is to its mean.
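A small numerical check of this statement: fitting a least-squares line to standardized data should give a slope equal to the sample correlation coefficient and an intercept of zero. The sketch below uses made-up data and hypothetical helper names, and it uses the population (divide-by-''n'') standard deviation for both variables; any consistent choice gives the same slope.

```python
import random
import statistics

def least_squares(xs, ys):
    """Return (alpha, beta) minimising the sum of squared residuals."""
    x_bar, y_bar = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    beta = sxy / sxx
    return y_bar - beta * x_bar, beta

def standardize(values):
    """Subtract the mean and divide by the population standard deviation."""
    mean, sd = statistics.fmean(values), statistics.pstdev(values)
    return [(v - mean) / sd for v in values]

def correlation(xs, ys):
    """Sample correlation as the mean product of standardized values."""
    zx, zy = standardize(xs), standardize(ys)
    return statistics.fmean(a * b for a, b in zip(zx, zy))

rng = random.Random(3)
xs = [rng.gauss(170.0, 7.0) for _ in range(2_000)]            # made-up data
ys = [0.6 * x + 68.0 + rng.gauss(0.0, 6.0) for x in xs]

r_xy = correlation(xs, ys)
alpha_std, beta_std = least_squares(standardize(xs), standardize(ys))
print("r_xy:", round(r_xy, 4))
print("slope on standardized data:", round(beta_std, 4))
print("intercept on standardized data:", round(alpha_std, 4))
```

Because the slope on standardized data equals the correlation, a point that is ''t'' standard deviations from the mean of ''x'' has a fitted value only $r_{xy}\,t$ standard deviations from the mean of ''y''.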

Definitions for bivariate distribution with identical marginal distributions

Restrictive definition

Let ''X''1, ''X''2 be random variables with identical marginal distributions with mean ''μ''. In this formalization, the bivariate distribution of ''X''1 and ''X''2 is said to exhibit regression toward the mean if, for every number ''c'' > ''μ'', we have

: $\mu \le \operatorname{E}[X_2 \mid X_1 = c] < c,$

with the reverse inequalities holding for ''c'' < ''μ''.

The following is an informal description of the above definition. Consider a population of widgets. Each widget has two numbers, ''X''1 and ''X''2 (say, its left span (''X''1 ) and right span (''X''2)). Suppose that the probability distributions of ''X''1 and ''X''2 in the population are identical, and that the means of ''X''1 and ''X''2 are both ''μ''. We now take a random widget from the population, and denote its ''X''1 value by ''c''. (Note that ''c'' may be greater than, equal to, or smaller than ''μ''.) We have no access to the value of this widget's ''X''2 yet. Let ''d'' denote the expected value of ''X''2 of this particular widget. (''i.e.'' Let ''d'' denote the average value of ''X''2 of all widgets in the population with ''X''1=''c''.) If the following condition is true:

:Whatever the value ''c'' is, ''d'' lies between ''μ'' and ''c'' (''i.e.'' ''d'' is closer to ''μ'' than ''c'' is),

then we say that ''X''1 and ''X''2 show regression toward the mean.

This definition accords closely with the current common usage, evolved from Galton's original usage, of the term "regression toward the mean". It is "restrictive" in the sense that not every bivariate distribution with identical marginal distributions exhibits regression toward the mean (under this definition).

Theorem

If a pair (''X'', ''Y'') of random variables follows a bivariate normal distribution, then the conditional mean E(''Y'' | ''X'') is a linear function of ''X''. The correlation coefficient ''r'' between ''X'' and ''Y'', along with the marginal means and variances of ''X'' and ''Y'', determines this linear relationship:

: $\frac{\operatorname{E}(Y \mid X) - \operatorname{E}[Y]}{\sigma_y} = r\, \frac{X - \operatorname{E}[X]}{\sigma_x},$

where E[''X''] and E[''Y''] are the expected values of ''X'' and ''Y'', respectively, and $\sigma_x$ and $\sigma_y$ are the standard deviations of ''X'' and ''Y'', respectively.

Hence the conditional expected value of ''Y'', given that ''X'' is ''t'' standard deviations above its mean (and that includes the case where it's below its mean, when ''t'' < 0), is ''rt'' standard deviations above the mean of ''Y''. Since |''r''| ≤ 1, ''Y'' is no farther from the mean than ''X'' is, as measured in the number of standard deviations.

Hence, if 0 ≤ ''r'' < 1, then (''X'', ''Y'') shows regression toward the mean (by this definition).
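As a small worked illustration of the theorem, the sketch below evaluates the conditional mean of ''Y'' for a bivariate normal pair. The function name and the test-score numbers are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def conditional_mean_y(x, mean_x, mean_y, sd_x, sd_y, r):
    """E(Y | X = x) for a bivariate normal pair with correlation r."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("correlation r must lie in [-1, 1]")
    t = (x - mean_x) / sd_x        # x is t standard deviations above its mean
    return mean_y + r * t * sd_y   # the prediction is r*t standard deviations above mean_y

# Hypothetical example: two test scores, each with mean 100 and SD 15, correlation 0.6.
first_score = 130.0
predicted_second = conditional_mean_y(first_score, 100.0, 100.0, 15.0, 15.0, 0.6)
print(predicted_second)
```

Here the first score is ''t'' = 2 standard deviations above the mean, so the expected second score is ''rt'' = 1.2 standard deviations above the mean, that is 100 + 1.2 × 15 = 118: still above average, but closer to the mean than 130.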

General definition

The following definition of ''reversion toward the mean'' has been proposed by Samuels as an alternative to the more restrictive definition of ''regression toward the mean'' above. Let ''X''1, ''X''2 be random variables with identical marginal distributions with mean ''μ''. In this formalization, the bivariate distribution of ''X''1 and ''X''2 is said to exhibit reversion toward the mean if, for every number ''c'', we have

:''μ'' ≤ E[''X''2 | ''X''1 > ''c''] < E[''X''1 | ''X''1 > ''c''], and

:''μ'' ≥ E[''X''2 | ''X''1 < ''c''] > E[''X''1 | ''X''1 < ''c''].

This definition is "general" in the sense that every bivariate distribution with identical marginal distributions exhibits ''reversion toward the mean'', provided some weak criteria are satisfied (non-degeneracy and weak positive dependence as described in Samuels's paper).
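The two inequalities can be illustrated on a deliberately non-normal example. This is only a sketch under assumed conditions: each variable is built as a shared exponential component plus independent exponential noise, a construction chosen here for illustration and not taken from Samuels's paper. Both variables then have the same skewed marginal distribution with ''μ'' = 2 and are positively dependent.

```python
import random
from statistics import mean

def simulate_pairs(n, seed=1):
    """Pairs (x1, x2) = (shared + noise1, shared + noise2).

    Every component is an independent exponential draw with mean 1, so x1 and
    x2 have identical (skewed, non-normal) marginals with mean 2."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        shared = rng.expovariate(1.0)
        pairs.append((shared + rng.expovariate(1.0), shared + rng.expovariate(1.0)))
    return pairs

def tail_means(pairs, c, upper=True):
    """Estimate (E[X2 | tail], E[X1 | tail]), where the tail is X1 > c or X1 < c."""
    tail = [(x1, x2) for x1, x2 in pairs if (x1 > c if upper else x1 < c)]
    return mean(x2 for _, x2 in tail), mean(x1 for x1, _ in tail)

pairs = simulate_pairs(100_000)
mu = 2.0
e_x2_up, e_x1_up = tail_means(pairs, c=3.0, upper=True)
e_x2_low, e_x1_low = tail_means(pairs, c=1.0, upper=False)
print(f"upper tail: mu={mu}  E[X2|X1>3]={e_x2_up:.2f}  E[X1|X1>3]={e_x1_up:.2f}")
print(f"lower tail: mu={mu}  E[X2|X1<1]={e_x2_low:.2f}  E[X1|X1<1]={e_x1_low:.2f}")
```

In both tails the second measurement is, on average, closer to ''μ'' than the first, even though the conditional mean need not be a linear function of ''X''1 as it is in the bivariate normal case.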

Alternative definition in financial usage

Jeremy Siegel uses the term "return to the mean" to describe a financial time series in which "returns can be very unstable in the short run but very stable in the long run." More quantitatively, it is one in which the standard deviation of average annual returns declines faster than the inverse of the square root of the holding period, implying that the process is not a random walk, but that periods of lower returns are systematically followed by compensating periods of higher returns, as is the case in many seasonal businesses, for example.
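The quantitative criterion can be made concrete with a short calculation. When annual returns are independent (prices follow a random walk), the standard deviation of the average annual return over ''T'' years falls as 1/√''T''. The sketch below compares that benchmark with a hypothetical process in which each year's return is negatively correlated with the previous year's. The function name, the 18% annual standard deviation and the −0.3 correlation are illustrative assumptions, not figures from Siegel.

```python
from math import sqrt

def sd_of_average_return(holding_years, sd_annual, lag1_autocorr=0.0):
    """Standard deviation of the average annual return over a holding period.

    Assumes annual returns have constant variance and are correlated only with
    the adjacent year (an MA(1)-style process, so lag1_autocorr must lie in
    [-0.5, 0.5]). Var(sum of T returns) = sd^2 * (T + 2*(T-1)*rho)."""
    if not -0.5 <= lag1_autocorr <= 0.5:
        raise ValueError("lag1_autocorr must lie in [-0.5, 0.5]")
    T = holding_years
    var_sum = sd_annual ** 2 * (T + 2 * (T - 1) * lag1_autocorr)
    return sqrt(var_sum) / T

sd_annual = 0.18  # hypothetical annual standard deviation of returns
for years in (1, 5, 10, 30):
    independent = sd_of_average_return(years, sd_annual)             # random-walk benchmark
    reverting = sd_of_average_return(years, sd_annual, -0.3)         # mean-reverting returns
    print(f"T={years:2d}  independent={independent:.4f}  mean-reverting={reverting:.4f}")
```

For every holding period longer than one year, the mean-reverting series has a smaller standard deviation of average returns than the 1/√''T'' benchmark, which is the signature described above.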

See also

* Hardy–Weinberg principle
* Internal validity
* Law of large numbers
* Martingale (probability theory)
* Regression dilution
* Selection bias

References

Further reading

* Article, including a diagram of Galton's original data.
* Stephen Senn. "Regression: A New Mode for an Old Meaning", ''The American Statistician'', Vol 44, No 2 (May 1990), pp. 181–183.
* "Regression Toward the Mean and the Study of Change", ''Psychological Bulletin''.
* https://onlinestatbook.com/stat_sim/reg_to_mean/index.html A simulation of regression toward the mean.
* Amanda Wachsmuth, Leland Wilkinson, Gerard E. Dallal. "Galton's Bend: An Undiscovered Nonlinearity in Galton's Family Stature Regression Data and a Likely Explanation Based on Pearson and Lee's Stature Data". ''(A modern look at Galton's analysis.)''
* Massachusetts standardized test scores, interpreted by a statistician as an example of regression: see discussion in sci.stat.edu and its continuation.
* Gary Smith, ''What the Luck: The Surprising Role of Chance in Our Everyday Lives'', New York: Overlook, London: Duckworth.
