Gamma distribution

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-square distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use:

# With a shape parameter ''k'' and a scale parameter ''θ''.

# With a shape parameter ''α'' = ''k'' and an inverse scale parameter ''β'' = 1/''θ'', called a rate parameter.

In each of these forms, both parameters are positive real numbers.

The gamma distribution is the maximum entropy probability distribution (both with respect to a uniform base measure and a 1/''x'' base measure) for a random variable ''X'' for which E[''X''] = ''kθ'' = ''α''/''β'' is fixed and greater than zero, and E[ln(''X'')] = ''ψ''(''k'') + ln(''θ'') = ''ψ''(''α'') − ln(''β'') is fixed (''ψ'' is the digamma function).

Definitions

The parameterization with ''k'' and ''θ'' appears to be more common in econometrics and other applied fields, where the gamma distribution is frequently used to model waiting times. For instance, in life testing, the waiting time until death is a random variable that is frequently modeled with a gamma distribution. See Hogg and Craig for an explicit motivation.

The parameterization with ''α'' and ''β'' is more common in Bayesian statistics, where the gamma distribution is used as a conjugate prior distribution for various types of inverse scale (rate) parameters, such as the ''λ'' of an exponential distribution or a Poisson distribution, or for that matter the ''β'' of the gamma distribution itself. The closely related inverse-gamma distribution is used as a conjugate prior for scale parameters, such as the variance of a normal distribution.

If ''k'' is a positive integer, then the distribution represents an Erlang distribution; i.e., the sum of ''k'' independent exponentially distributed random variables, each of which has a mean of ''θ''.

Characterization using shape ''α'' and rate ''β''

The gamma distribution can be parameterized in terms of a shape parameter ''α'' = ''k'' and an inverse scale parameter ''β'' = 1/''θ'', called a rate parameter. A random variable ''X'' that is gamma-distributed with shape ''α'' and rate ''β'' is denoted

: <math>X \sim \Gamma(\alpha, \beta) \equiv \operatorname{Gamma}(\alpha, \beta)</math>

The corresponding probability density function in the shape-rate parameterization is

: <math>f(x; \alpha, \beta) = \frac{\beta^\alpha x^{\alpha-1} e^{-\beta x}}{\Gamma(\alpha)} \quad \text{for } x > 0, \quad \alpha, \beta > 0,</math>

where Γ(''α'') is the gamma function. For all positive integers, Γ(''α'') = (''α'' − 1)!.

The cumulative distribution function is the regularized gamma function:

: <math>F(x; \alpha, \beta) = \int_0^x f(u; \alpha, \beta)\,du = \frac{\gamma(\alpha, \beta x)}{\Gamma(\alpha)},</math>

where ''γ''(''α'', ''βx'') is the lower incomplete gamma function.

If ''α'' is a positive integer (i.e., the distribution is an Erlang distribution), the cumulative distribution function has the following series expansion:

: <math>F(x; \alpha, \beta) = 1 - \sum_{i=0}^{\alpha-1} \frac{(\beta x)^i}{i!} e^{-\beta x} = e^{-\beta x} \sum_{i=\alpha}^{\infty} \frac{(\beta x)^i}{i!}.</math>

Characterization using shape ''k'' and scale ''θ''

A random variable ''X'' that is gamma-distributed with shape ''k'' and scale ''θ'' is denoted by

: <math>X \sim \Gamma(k, \theta) \equiv \operatorname{Gamma}(k, \theta)</math>

The probability density function using the shape-scale parametrization is

: <math>f(x; k, \theta) = \frac{x^{k-1} e^{-x/\theta}}{\theta^k \Gamma(k)} \quad \text{for } x > 0 \text{ and } k, \theta > 0.</math>

Here Γ(''k'') is the gamma function evaluated at ''k''.

The cumulative distribution function is the regularized gamma function:

: <math>F(x; k, \theta) = \int_0^x f(u; k, \theta)\,du = \frac{\gamma\left(k, \frac{x}{\theta}\right)}{\Gamma(k)},</math>

where ''γ''(''k'', ''x''/''θ'') is the lower incomplete gamma function.

It can also be expressed as follows, if ''k'' is a positive integer (i.e., the distribution is an Erlang distribution) (Papoulis, Pillai, ''Probability, Random Variables, and Stochastic Processes'', Fourth Edition):

: <math>F(x; k, \theta) = 1 - \sum_{i=0}^{k-1} \frac{1}{i!} \left(\frac{x}{\theta}\right)^i e^{-x/\theta} = e^{-x/\theta} \sum_{i=k}^{\infty} \frac{1}{i!} \left(\frac{x}{\theta}\right)^i.</math>

Both parametrizations are common because either can be more convenient depending on the situation.
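
As a rough illustration of the two characterizations, the following Python sketch evaluates the density in both parameterizations and compares the finite Erlang series for the cumulative distribution function against a crude numerical integral of the density. The function names and the midpoint-rule check are illustrative choices, not part of any referenced source.

```python
import math

def gamma_pdf(x, k, theta):
    """Density of Gamma(shape k, scale theta) at x > 0."""
    return x ** (k - 1) * math.exp(-x / theta) / (theta ** k * math.gamma(k))

def gamma_pdf_rate(x, alpha, beta):
    """Same density in the shape-rate form, with beta = 1 / theta."""
    return beta ** alpha * x ** (alpha - 1) * math.exp(-beta * x) / math.gamma(alpha)

def erlang_cdf(x, k, theta):
    """CDF for positive integer shape k, using the finite series."""
    z = x / theta
    return 1.0 - math.exp(-z) * sum(z ** i / math.factorial(i) for i in range(k))

def cdf_by_quadrature(x, k, theta, steps=20000):
    """Midpoint-rule integral of the density from 0 to x (a sanity check only)."""
    h = x / steps
    return h * sum(gamma_pdf((j + 0.5) * h, k, theta) for j in range(steps))

if __name__ == "__main__":
    # The two parameterizations should agree when beta = 1 / theta.
    print(gamma_pdf(3.0, 2, 1.5), gamma_pdf_rate(3.0, 2, 1 / 1.5))
    # The Erlang series should agree with the integrated density.
    print(erlang_cdf(3.0, 2, 1.5), cdf_by_quadrature(3.0, 2, 1.5))
```

For non-integer shape the finite series does not apply, and a numerical routine for the regularized incomplete gamma function would be needed instead.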

Properties

Mean and variance

The mean of the gamma distribution is given by the product of its shape and scale parameters:

: <math>\mu = k\theta = \alpha/\beta</math>

The variance is:

: <math>\sigma^2 = k\theta^2 = \alpha/\beta^2</math>

The square root of the inverse shape parameter gives the coefficient of variation:

: <math>\sigma/\mu = k^{-0.5} = 1/\sqrt{\alpha}</math>

Skewness

The skewness of the gamma distribution depends only on its shape parameter, ''k'', and is equal to <math>2/\sqrt{k}.</math>
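
A minimal sketch of these summary statistics in Python, assuming the shape-scale parameterization: the closed forms (mean ''kθ'', variance ''kθ''<sup>2</sup>, coefficient of variation 1/√''k'', skewness 2/√''k'') are compared with a seeded Monte Carlo estimate. The helper names, sample size, and seed are arbitrary illustrative choices; `random.gammavariate` takes the shape first and the scale second.

```python
import math
import random

def gamma_summary(k, theta):
    """Closed-form mean, variance, coefficient of variation and skewness."""
    return {
        "mean": k * theta,
        "variance": k * theta ** 2,
        "cv": 1.0 / math.sqrt(k),
        "skewness": 2.0 / math.sqrt(k),
    }

def sample_summary(k, theta, n=100_000, seed=0):
    """The same four quantities estimated from n simulated draws."""
    rng = random.Random(seed)
    xs = [rng.gammavariate(k, theta) for _ in range(n)]  # (shape, scale)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs) / n
    third = sum((x - mean) ** 3 for x in xs) / n
    return {
        "mean": mean,
        "variance": var,
        "cv": math.sqrt(var) / mean,
        "skewness": third / var ** 1.5,
    }

if __name__ == "__main__":
    print(gamma_summary(4.0, 2.0))
    print(sample_summary(4.0, 2.0))
```

The simulated values should approach the closed forms as the sample size grows; the agreement is statistical, not exact.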

Higher moments

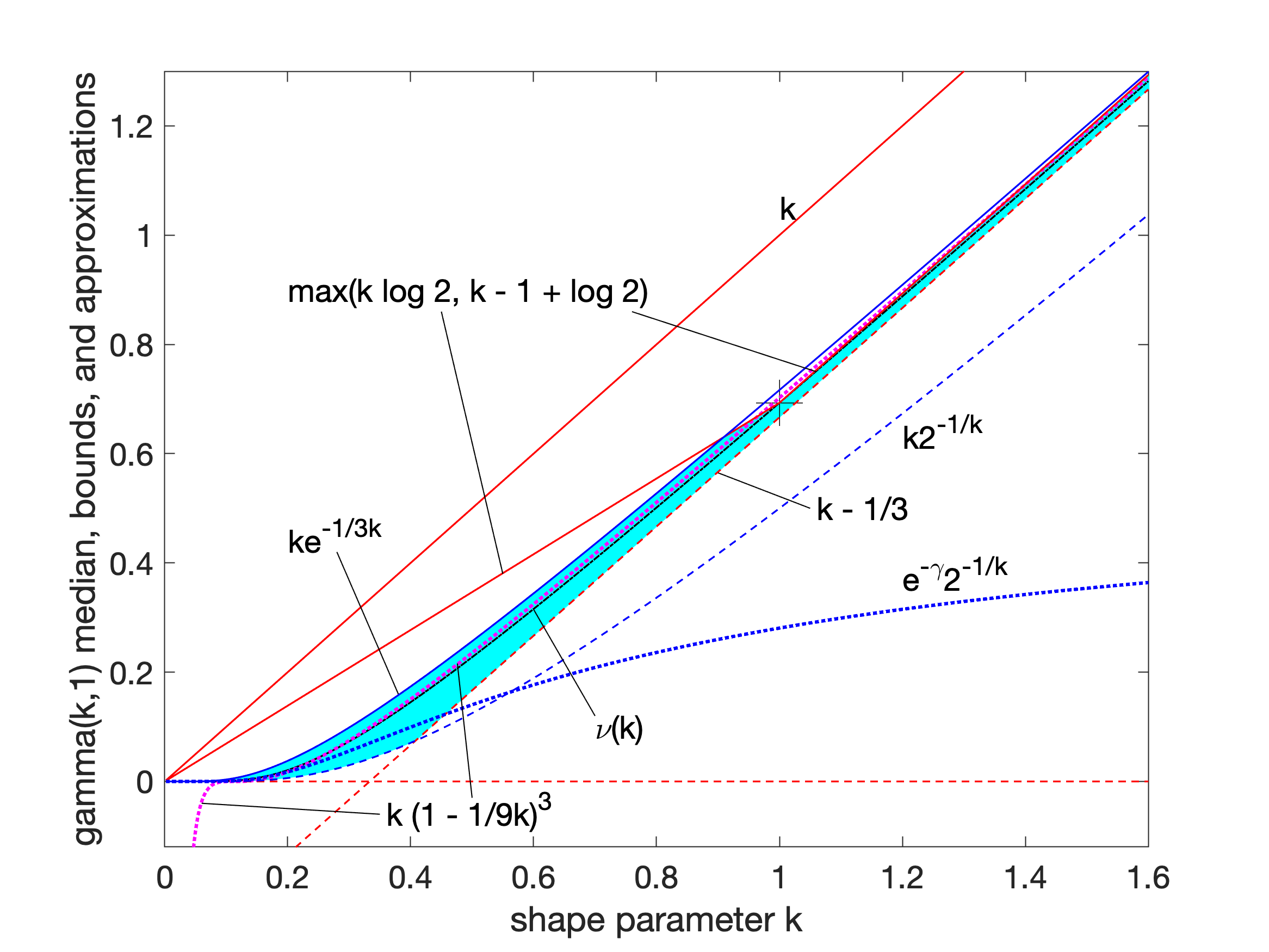

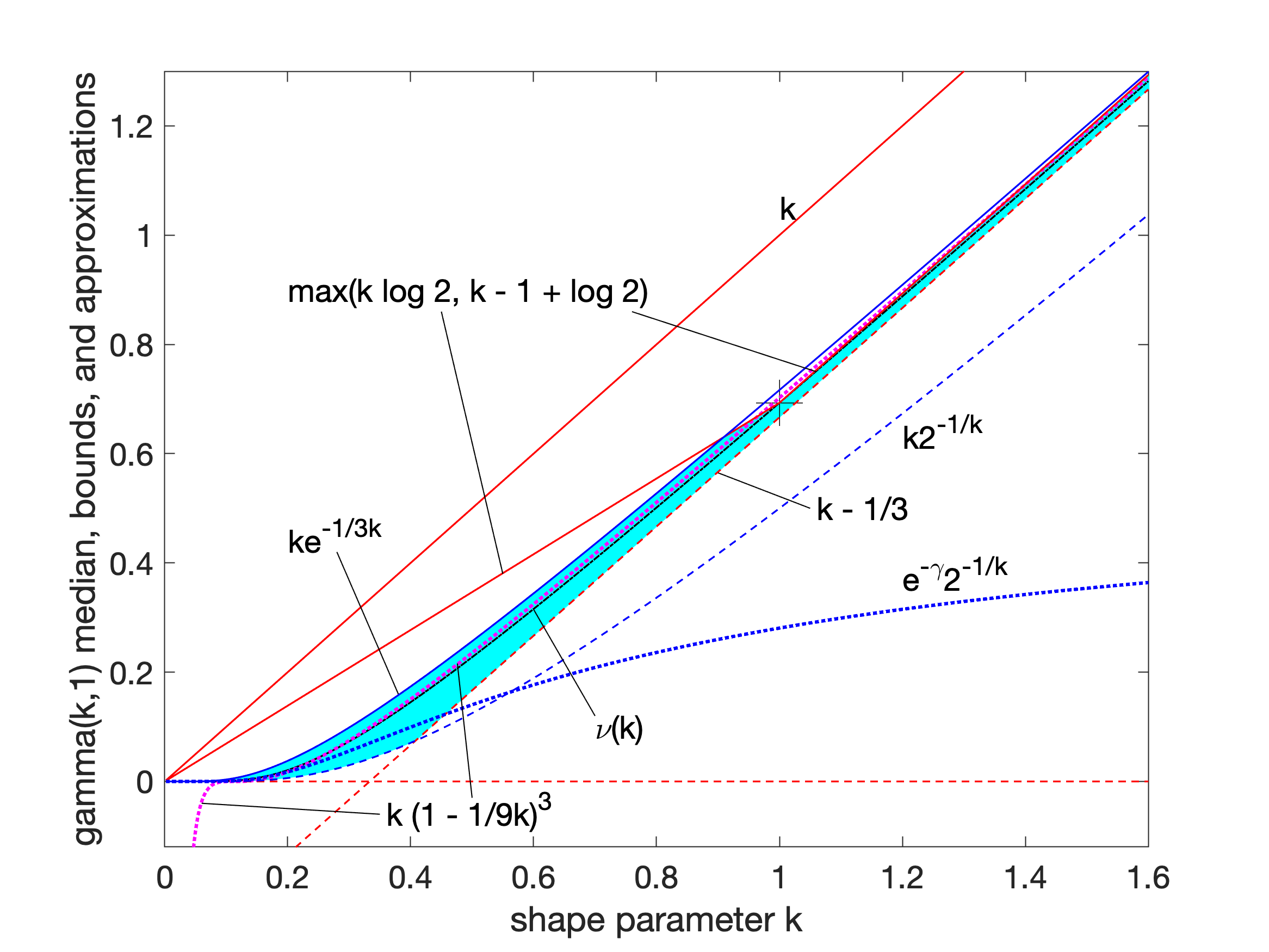

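The ''n''th raw moment is given by:

: <math>\mathrm{E}[X^n] = \theta^n \frac{\Gamma(k+n)}{\Gamma(k)} = \theta^n \prod_{i=1}^{n} (k+i-1) \quad \text{for } n = 1, 2, \ldots</math>

Median approximations and bounds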

Unlike the mode and the mean, which have readily calculable formulas based on the parameters, the median does not have a closed-form equation. The median for this distribution is the value ''ν'' such that

: <math>\frac{1}{\Gamma(k)\theta^k} \int_0^{\nu} x^{k-1} e^{-x/\theta}\,dx = \frac{1}{2}.</math>

A rigorous treatment of the problem of determining an asymptotic expansion and bounds for the median of the gamma distribution was handled first by Chen and Rubin, who proved that (for ''θ'' = 1)

: <math>k - \frac{1}{3} < \nu(k) < k,</math>

where ''μ''(''k'') = ''k'' is the mean and ''ν''(''k'') is the median of the Gamma(''k'', 1) distribution. For other values of the scale parameter, the mean scales to ''μ'' = ''kθ'', and the median bounds and approximations would be similarly scaled by ''θ''.

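K. P. Choi found the first five terms in a Laurent series asymptotic approximation of the median by comparing the median to Ramanujan's ''θ'' function. Berg and Pedersen found more terms:

: <math>\nu(k) = k - \frac{1}{3} + \frac{8}{405k} + \frac{184}{25515k^2} + \frac{2248}{3444525k^3} - \frac{19006408}{15345358875k^4} - O\left(\frac{1}{k^5}\right) + \cdots</math>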

Partial sums of these series are good approximations for high enough ''k''; they are not plotted in the figure, which is focused on the low-''k'' region that is less well approximated.

Berg and Pedersen also proved many properties of the median, showing that it is a convex function of ''k'' (Berg, Christian and Pedersen, Henrik L., "Convexity of the median in the gamma distribution"), that the asymptotic behavior near ''k'' = 0 is <math>\nu(k) \approx e^{-\gamma} 2^{-1/k}</math> (where ''γ'' is the Euler–Mascheroni constant), and that for all ''k'' > 0 the median is bounded by <math>k 2^{-1/k} < \nu(k) < k e^{-1/(3k)}</math>.

A closer linear upper bound, for ''k'' ≥ 1 only, was provided in 2021 by Gaunt and Merkle, relying on the Berg and Pedersen result that the slope of ''ν''(''k'') is everywhere less than 1:

: <math>\nu(k) \le k - 1 + \log 2</math> for ''k'' ≥ 1 (with equality at ''k'' = 1)

which can be extended to a bound for all ''k'' > 0 by taking the max with the chord shown in the figure, since the median was proved convex.

An approximation to the median that is asymptotically accurate at high ''k'' and reasonable down to ''k'' = 0.5 or a bit lower follows from the Wilson–Hilferty transformation:

: <math>\nu(k) = k \left(1 - \frac{1}{9k}\right)^3</math>

which goes negative for ''k'' < 1/9.
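
The bounds and the Wilson–Hilferty approximation can be checked numerically. The sketch below is one possible way to do so, not a method taken from the cited papers: it evaluates the regularized lower incomplete gamma function with its standard power series, finds the median for unit scale by bisection, and compares the result with the Chen and Rubin bounds and with the approximation ''k''(1 − 1/(9''k''))<sup>3</sup>. All function names are hypothetical.

```python
import math

def reg_lower_gamma(a, x, max_terms=1000):
    """Regularized lower incomplete gamma P(a, x) from its power series."""
    if x <= 0.0:
        return 0.0
    term = 1.0 / a          # n = 0 term of sum x^n / (a (a+1) ... (a+n))
    total = term
    for n in range(1, max_terms):
        term *= x / (a + n)
        total += term
        if term < 1e-16 * total:
            break
    return total * math.exp(-x + a * math.log(x) - math.lgamma(a))

def gamma_median(k, theta=1.0):
    """Median of Gamma(k, theta) by bisection; uses 0 < median < k for unit scale."""
    lo, hi = 0.0, k
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if reg_lower_gamma(k, mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return theta * 0.5 * (lo + hi)

def wilson_hilferty_median(k, theta=1.0):
    """Wilson-Hilferty approximation to the median; negative for k < 1/9."""
    return theta * k * (1.0 - 1.0 / (9.0 * k)) ** 3

if __name__ == "__main__":
    for k in (0.5, 1.0, 2.0, 5.0, 10.0):
        nu = gamma_median(k)
        print(k, nu, wilson_hilferty_median(k), k - 1.0 / 3.0 < nu < k)
```

The power series converges for every positive argument but becomes slow for large ''x'', so a production implementation would normally switch to a continued fraction there.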

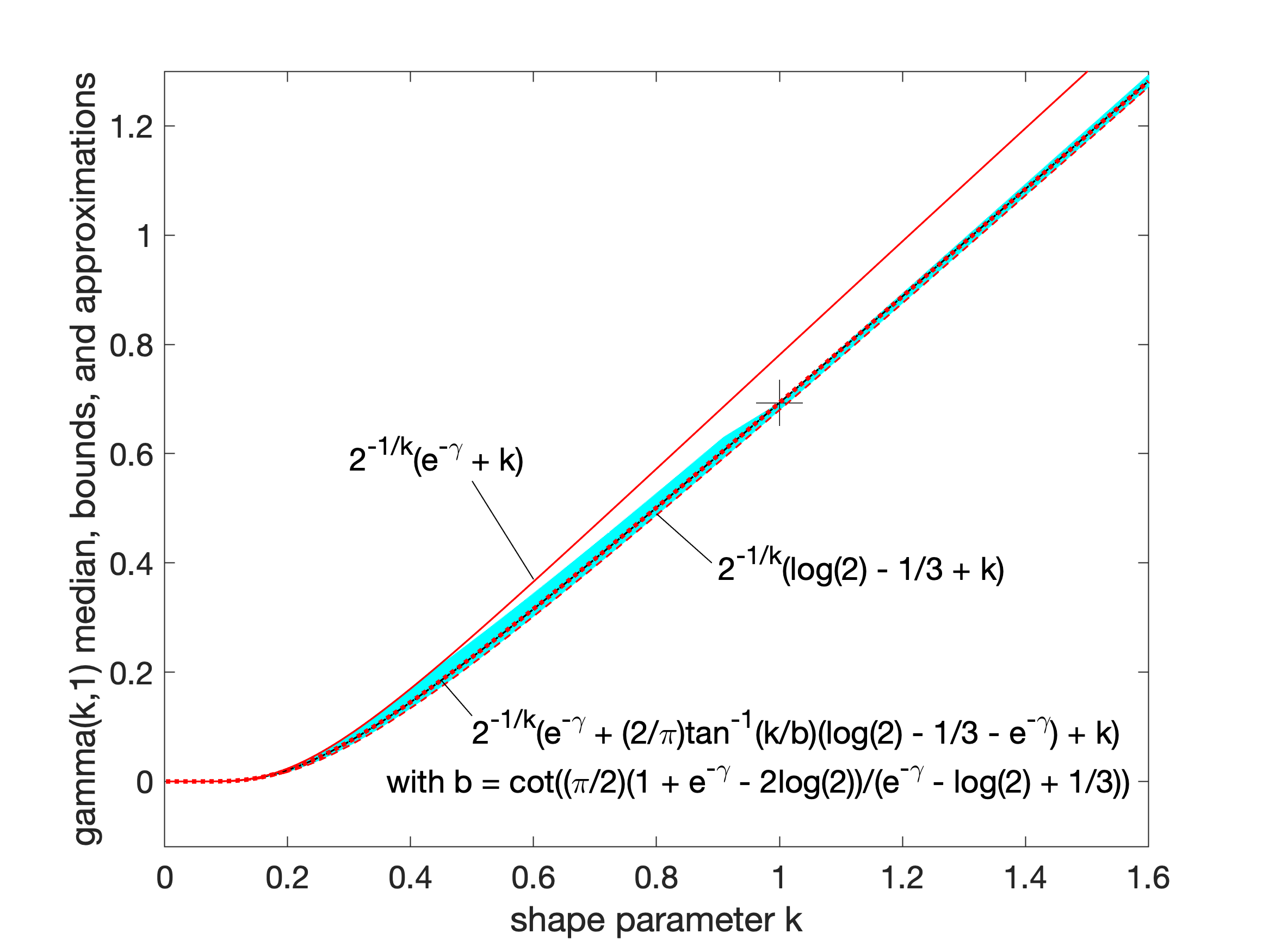

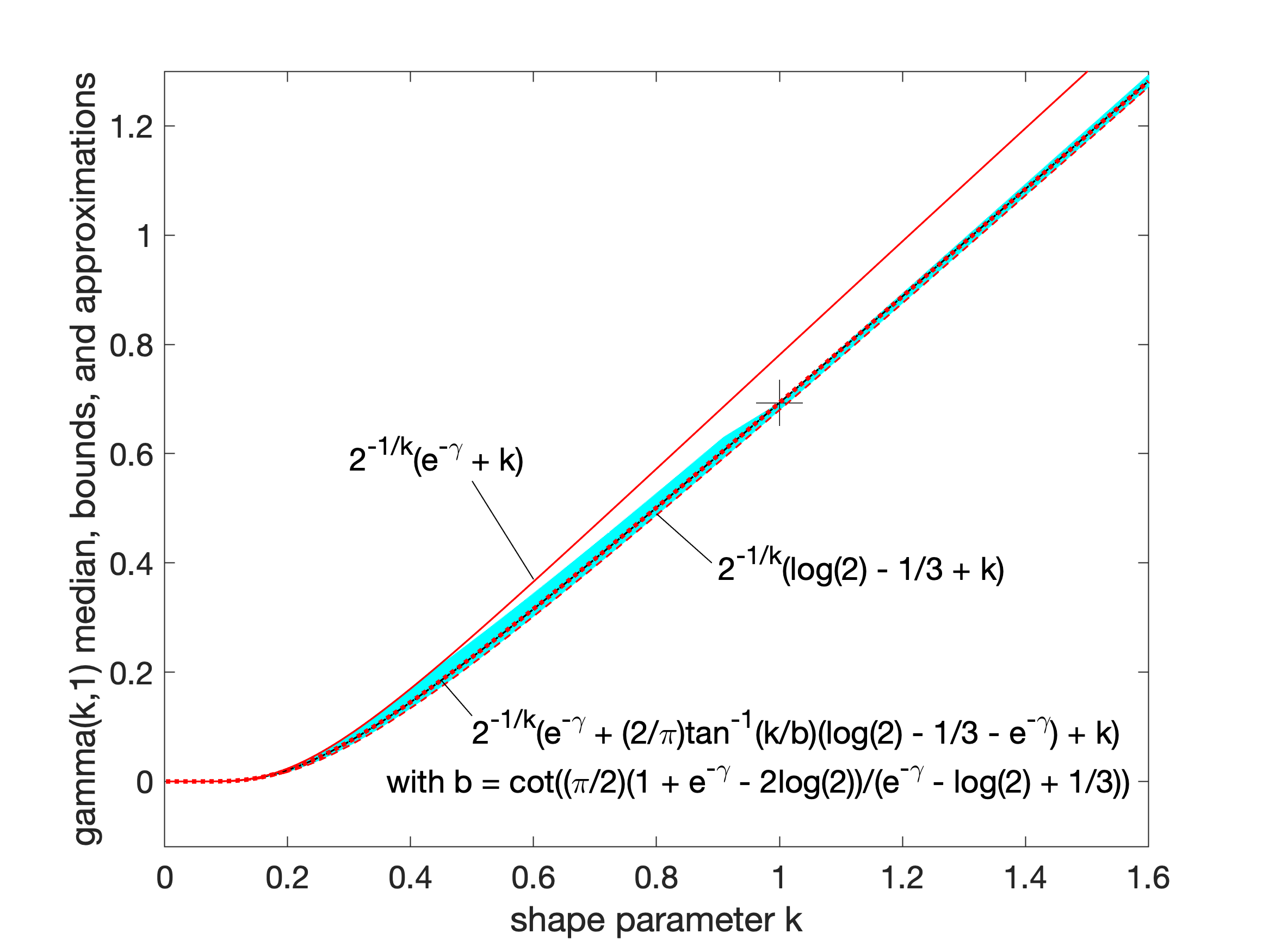

In 2021, Lyon proposed several closed-form approximations of the form <math>\nu(k) \approx 2^{-1/k}(A + Bk)</math>. He conjectured closed-form values of ''A'' and ''B'' for which this approximation is an asymptotically tight upper or lower bound for all ''k'' > 0. In particular:

: <math>\nu_{L\infty}(k) = 2^{-1/k}\left(\log 2 - \frac{1}{3} + k\right)</math> is a lower bound, asymptotically tight as ''k'' → ∞

: <math>\nu_U(k) = 2^{-1/k}\left(e^{-\gamma} + k\right)</math> is an upper bound, asymptotically tight as ''k'' → 0

Lyon also derived two other lower bounds that are not closed-form expressions, including this one based on solving the integral expression substituting 1 for <math>e^{-x}</math>:

: <math>\nu(k) > \left(\frac{2}{\Gamma(k+1)}\right)^{-1/k}</math> (approaching equality as ''k'' → 0)

and the tangent line at ''k'' = 1, where the derivative was found to be <math>\nu'(1) \approx 0.9680448</math>:

: <math>\nu(k) \ge \nu(1) + (k-1)\nu'(1)</math> (with equality at ''k'' = 1)

: <math>\nu(k) \ge \log 2 + (k-1)\left(\gamma - 2\operatorname{Ei}(-\log 2) + \log\log 2\right)</math>

where Ei is the exponential integral.

Additionally, he showed that interpolations between bounds could provide excellent approximations or tighter bounds to the median, including an approximation that is exact at ''k'' = 1 (where ''ν''(1) = log 2) and has a maximum relative error less than 0.6%. Interpolated approximations and bounds are all of the form

: <math>\nu(k) \approx \tilde{g}(k)\,\nu_{L\infty}(k) + (1 - \tilde{g}(k))\,\nu_U(k)</math>

where <math>\tilde{g}</math> is an interpolating function running monotonically from 0 at low ''k'' to 1 at high ''k'', approximating an ideal, or exact, interpolator ''g''(''k''):

: <math>g(k) = \frac{\nu_U(k) - \nu(k)}{\nu_U(k) - \nu_{L\infty}(k)}</math>

For the simplest interpolating function considered, a first-order rational function

: <math>\tilde{g}_1(k) = \frac{k}{b_0 + k}</math>

the tightest lower bound has

: <math>b_0 = \frac{\frac{8}{405} + e^{-\gamma}\log 2 - \frac{\log^2 2}{2}}{e^{-\gamma} - \log 2 + \frac{1}{3}} - \log 2 \approx 0.143472</math>

and the tightest upper bound has

: <math>b_0 = \frac{e^{-\gamma} - \log 2 + \frac{1}{3}}{1 - \frac{e^{-\gamma}\pi^2}{12}} \approx 0.374654</math>

The interpolated bounds are plotted (mostly inside the yellow region) in the log–log plot shown. Even tighter bounds are available using different interpolating functions, but not usually with closed-form parameters like these.

Summation

If ''X''<sub>''i''</sub> has a Gamma(''k''<sub>''i''</sub>, ''θ'') distribution for ''i'' = 1, 2, ..., ''N'' (i.e., all distributions have the same scale parameter ''θ''), then

: <math>\sum_{i=1}^N X_i \sim \operatorname{Gamma}\left(\sum_{i=1}^N k_i, \theta\right)</math>

provided all ''X''<sub>''i''</sub> are independent.

For the cases where the ''X''<sub>''i''</sub> are independent but have different scale parameters, see Mathai or Moschopoulos.

The gamma distribution exhibits infinite divisibility.

Scaling

If

: <math>X \sim \operatorname{Gamma}(k, \theta),</math>

then, for any ''c'' > 0,

: <math>cX \sim \operatorname{Gamma}(k, c\theta),</math>

by moment generating functions, or equivalently, if

: <math>X \sim \operatorname{Gamma}(\alpha, \beta)</math> (shape-rate parameterization)

then

: <math>cX \sim \operatorname{Gamma}(\alpha, \beta/c).</math>

Indeed, we know that if ''X'' is an exponential r.v. with rate ''λ'', then ''cX'' is an exponential r.v. with rate ''λ''/''c''; the same thing is valid with gamma variates (and this can be checked using the moment-generating function; see, e.g., these notes, 10.4-(ii)): multiplication by a positive constant ''c'' divides the rate (or, equivalently, multiplies the scale).
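
The scaling property can also be verified directly from the density by a change of variables, without moment generating functions. The following Python sketch (with illustrative helper names and arbitrary test values) checks that the density of ''cX'', namely ''f''(''y''/''c'')/''c'', matches the gamma density with the same shape and scale ''cθ''.

```python
import math

def gamma_pdf(x, k, theta):
    """Density of Gamma(shape k, scale theta) at x > 0."""
    return x ** (k - 1) * math.exp(-x / theta) / (theta ** k * math.gamma(k))

def scaled_pdf(y, k, theta, c):
    """Density of Y = c * X for X ~ Gamma(k, theta): f_Y(y) = f_X(y / c) / c."""
    return gamma_pdf(y / c, k, theta) / c

if __name__ == "__main__":
    k, theta, c = 2.5, 1.2, 3.0
    for y in (0.5, 2.0, 7.5):
        # The two columns should agree: scaling by c multiplies the scale by c.
        print(y, scaled_pdf(y, k, theta, c), gamma_pdf(y, k, c * theta))
```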

Exponential family

The gamma distribution is a two-parameter exponential family with natural parameters ''k'' − 1 and −1/''θ'' (equivalently, ''α'' − 1 and −''β''), and natural statistics ''X'' and ln(''X'').

If the shape parameter ''k'' is held fixed, the resulting one-parameter family of distributions is a natural exponential family.

Logarithmic expectation and variance

One can show that

: <math>\mathrm{E}[\ln X] = \psi(\alpha) - \ln \beta</math>

or equivalently,

: <math>\mathrm{E}[\ln X] = \psi(k) + \ln \theta</math>

where ''ψ'' is the digamma function. Likewise,

: <math>\operatorname{var}[\ln X] = \psi^{(1)}(\alpha) = \psi^{(1)}(k)</math>

where <math>\psi^{(1)}</math> is the trigamma function.

This can be derived using the exponential family formula for the moment generating function of the sufficient statistic, because one of the sufficient statistics of the gamma distribution is ln(''x'').
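
As an illustrative check of the logarithmic moments, the sketch below implements the digamma and trigamma functions with the usual recurrence plus asymptotic series (an implementation choice made here, since the Python standard library does not provide them) and compares ''ψ''(''k'') + ln ''θ'' and the trigamma value with seeded Monte Carlo estimates. Names, sample size, and seed are arbitrary.

```python
import math
import random

def digamma(x):
    """psi(x) for x > 0: shift x upward, then use the asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x          # psi(x) = psi(x + 1) - 1/x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12.0 - inv2 * (1.0 / 120.0 - inv2 / 252.0))
    return result + math.log(x) - 0.5 / x - series

def trigamma(x):
    """psi_1(x) for x > 0: shift x upward, then use the asymptotic series."""
    result = 0.0
    while x < 6.0:
        result += 1.0 / (x * x)    # psi_1(x) = psi_1(x + 1) + 1/x^2
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    series = inv * inv2 * (1.0 / 6.0 - inv2 * (1.0 / 30.0 - inv2 / 42.0))
    return result + inv + 0.5 * inv2 + series

def log_moments_by_simulation(k, theta, n=100_000, seed=1):
    """Sample mean and variance of ln X for X ~ Gamma(k, theta)."""
    rng = random.Random(seed)
    logs = [math.log(rng.gammavariate(k, theta)) for _ in range(n)]
    mean = sum(logs) / n
    var = sum((v - mean) ** 2 for v in logs) / n
    return mean, var

if __name__ == "__main__":
    k, theta = 3.0, 2.0
    print("closed form:", digamma(k) + math.log(theta), trigamma(k))
    print("simulation: ", log_moments_by_simulation(k, theta))
```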

Information entropy

The information entropy is

: <math>\operatorname{H}(X) = \mathrm{E}[-\ln p(X)] = \mathrm{E}[-\alpha \ln \beta + \ln \Gamma(\alpha) - (\alpha - 1)\ln X + \beta X] = \alpha - \ln \beta + \ln \Gamma(\alpha) + (1 - \alpha)\psi(\alpha).</math>

In the ''k'', ''θ'' parameterization, the information entropy is given by

: <math>\operatorname{H}(X) = k + \ln \theta + \ln \Gamma(k) + (1 - k)\psi(k).</math>

Kullback–Leibler divergence

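The Kullback–Leibler divergence (KL-divergence) of Gamma(''α''<sub>''p''</sub>, ''β''<sub>''p''</sub>) ("true" distribution) from Gamma(''α''<sub>''q''</sub>, ''β''<sub>''q''</sub>) ("approximating" distribution) is given by

: <math>D_{\mathrm{KL}}(\alpha_p, \beta_p; \alpha_q, \beta_q) = (\alpha_p - \alpha_q)\psi(\alpha_p) - \ln \Gamma(\alpha_p) + \ln \Gamma(\alpha_q) + \alpha_q(\ln \beta_p - \ln \beta_q) + \alpha_p \frac{\beta_q - \beta_p}{\beta_p}.</math>

Written using the ''k'', ''θ'' parameterization, the KL-divergence of Gamma(''k''<sub>''p''</sub>, ''θ''<sub>''p''</sub>) from Gamma(''k''<sub>''q''</sub>, ''θ''<sub>''q''</sub>) is given by

: <math>D_{\mathrm{KL}}(k_p, \theta_p; k_q, \theta_q) = (k_p - k_q)\psi(k_p) - \ln \Gamma(k_p) + \ln \Gamma(k_q) + k_q(\ln \theta_q - \ln \theta_p) + k_p \frac{\theta_p - \theta_q}{\theta_q}.</math>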
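
A short Python sketch of the entropy and KL-divergence, assuming the standard closed forms: entropy ''k'' + ln ''θ'' + ln Γ(''k'') + (1 − ''k'')''ψ''(''k'') in the shape-scale form, and the shape-rate KL expression (''α''<sub>''p''</sub> − ''α''<sub>''q''</sub>)''ψ''(''α''<sub>''p''</sub>) − ln Γ(''α''<sub>''p''</sub>) + ln Γ(''α''<sub>''q''</sub>) + ''α''<sub>''q''</sub>(ln ''β''<sub>''p''</sub> − ln ''β''<sub>''q''</sub>) + ''α''<sub>''p''</sub>(''β''<sub>''q''</sub> − ''β''<sub>''p''</sub>)/''β''<sub>''p''</sub>. The digamma helper is a hand-rolled approximation, and all names are illustrative. The exponential special case (shape 1) gives a simple sanity check.

```python
import math

def digamma(x):
    """psi(x) for x > 0 via recurrence and an asymptotic series (approximate)."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12.0 - inv2 * (1.0 / 120.0 - inv2 / 252.0))
    return result + math.log(x) - 0.5 / x - series

def gamma_entropy(k, theta):
    """Differential entropy (in nats) of Gamma(shape k, scale theta)."""
    return k + math.log(theta) + math.lgamma(k) + (1.0 - k) * digamma(k)

def gamma_kl(alpha_p, beta_p, alpha_q, beta_q):
    """KL divergence of Gamma(alpha_p, beta_p) from Gamma(alpha_q, beta_q), shape-rate."""
    return ((alpha_p - alpha_q) * digamma(alpha_p)
            - math.lgamma(alpha_p) + math.lgamma(alpha_q)
            + alpha_q * (math.log(beta_p) - math.log(beta_q))
            + alpha_p * (beta_q - beta_p) / beta_p)

if __name__ == "__main__":
    # Exponential with scale 2: entropy should be 1 + ln 2.
    print(gamma_entropy(1.0, 2.0), 1.0 + math.log(2.0))
    # Exponential rate 1 versus rate 2: KL should be 1 - ln 2.
    print(gamma_kl(1.0, 1.0, 1.0, 2.0), 1.0 - math.log(2.0))
```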

Laplace transform

The Laplace transform of the gamma PDF is

: <math>F(s) = (1 + \theta s)^{-k} = \frac{\beta^\alpha}{(s + \beta)^\alpha}.</math>

Related distributions

General

* Let ''X''<sub>1</sub>, ..., ''X''<sub>''n''</sub> be ''n'' independent and identically distributed random variables following an exponential distribution with rate parameter ''λ''; then <math>\textstyle\sum_i X_i</math> ~ Gamma(''n'', ''λ''), where ''n'' is the shape parameter and ''λ'' is the rate, and <math>\bar{X} = \tfrac{1}{n}\textstyle\sum_i X_i</math> ~ Gamma(''n'', ''nλ''), where the rate changes to ''nλ''.

* If ''X'' ~ Gamma(1, 1/''λ'') (in the shape–scale parametrization), then ''X'' has an exponential distribution with rate parameter ''λ''.

* If ''X'' ~ Gamma(''ν''/2, 2) (in the shape–scale parametrization), then ''X'' is identical to ''χ''<sup>2</sup>(''ν''), the chi-squared distribution with ''ν'' degrees of freedom. Conversely, if ''Q'' ~ ''χ''<sup>2</sup>(''ν'') and ''c'' is a positive constant, then ''cQ'' ~ Gamma(''ν''/2, 2''c'').

* If ''k'' is an integer, the gamma distribution is an Erlang distribution and is the probability distribution of the waiting time until the ''k''th "arrival" in a one-dimensional Poisson process with intensity 1/''θ''. If

:: <math>X \sim \Gamma(k \in \mathbf{Z}, \theta), \qquad Y \sim \operatorname{Pois}\left(\frac{x}{\theta}\right),</math>

:then

:: <math>P(X > x) = P(Y < k).</math>

* If ''X'' has a Maxwell–Boltzmann distribution with parameter ''a'', then

:: <math>X^2 \sim \Gamma\left(\tfrac{3}{2}, 2a^2\right).</math>

* If ''X'' ~ Gamma(''k'', ''θ''), then ln ''X'' follows an exponential-gamma (abbreviated exp-gamma) distribution. It is sometimes referred to as the log-gamma distribution. Formulas for its mean and variance are in the section #Logarithmic expectation and variance.

* If ''X'' ~ Gamma(''k'', ''θ''), then <math>\sqrt{X}</math> follows a generalized gamma distribution with parameters ''p'' = 2, ''d'' = 2''k'', and <math>a = \sqrt{\theta}</math>.

* More generally, if ''X'' ~ Gamma(''k'', ''θ''), then ''X''<sup>''q''</sup> for ''q'' > 0 follows a generalized gamma distribution with parameters ''p'' = 1/''q'', ''d'' = ''k''/''q'', and <math>a = \theta^q</math>.

* If ''X'' ~ Gamma(''k'', ''θ'') with shape ''k'' and scale ''θ'', then 1/''X'' ~ Inv-Gamma(''k'', ''θ''<sup>−1</sup>) (see Inverse-gamma distribution for derivation).

* Parametrization 1: If <math>X_k \sim \Gamma(\alpha_k, \theta_k)</math> are independent, then <math>\frac{\alpha_2 \theta_2 X_1}{\alpha_1 \theta_1 X_2} \sim \mathrm{F}(2\alpha_1, 2\alpha_2)</math>, or equivalently, <math>\frac{X_1}{X_2} \sim \beta'\left(\alpha_1, \alpha_2, 1, \frac{\theta_1}{\theta_2}\right)</math>.

* Parametrization 2: If <math>X_k \sim \Gamma(\alpha_k, \beta_k)</math> are independent, then <math>\frac{\alpha_2 \beta_1 X_1}{\alpha_1 \beta_2 X_2} \sim \mathrm{F}(2\alpha_1, 2\alpha_2)</math>, or equivalently, <math>\frac{X_1}{X_2} \sim \beta'\left(\alpha_1, \alpha_2, 1, \frac{\beta_2}{\beta_1}\right)</math>.

* If ''X'' ~ Gamma(''α'', ''θ'') and ''Y'' ~ Gamma(''β'', ''θ'') are independently distributed, then ''X''/(''X'' + ''Y'') has a beta distribution with parameters ''α'' and ''β'', and ''X''/(''X'' + ''Y'') is independent of ''X'' + ''Y'', which is Gamma(''α'' + ''β'', ''θ'')-distributed.

* If ''X''<sub>''i''</sub> ~ Gamma(''α''<sub>''i''</sub>, 1) are independently distributed, then the vector (''X''<sub>1</sub>/''S'', ..., ''X''<sub>''n''</sub>/''S''), where ''S'' = ''X''<sub>1</sub> + ... + ''X''<sub>''n''</sub>, follows a Dirichlet distribution with parameters ''α''<sub>1</sub>, ..., ''α''<sub>''n''</sub>.

* For large ''k'' the gamma distribution converges to a normal distribution with mean ''μ'' = ''kθ'' and variance ''σ''<sup>2</sup> = ''kθ''<sup>2</sup>.

* The gamma distribution is the conjugate prior for the precision of the normal distribution with known mean.

* The matrix gamma distribution and the Wishart distribution are multivariate generalizations of the gamma distribution (samples are positive-definite matrices rather than positive real numbers).

* The gamma distribution is a special case of the generalized gamma distribution, the generalized integer gamma distribution, and the generalized inverse Gaussian distribution.

* Among the discrete distributions, the negative binomial distribution is sometimes considered the discrete analog of the gamma distribution.

* Tweedie distributions: the gamma distribution is a member of the family of Tweedie exponential dispersion models.

* Modified half-normal distribution: the gamma distribution is a member of the family of modified half-normal distributions. The corresponding density is <math>f(x \mid \alpha, \beta, \gamma) = \frac{2\beta^{\alpha/2} x^{\alpha-1} \exp(-\beta x^2 + \gamma x)}{\Psi\left(\frac{\alpha}{2}, \frac{\gamma}{\sqrt{\beta}}\right)}</math>, where <math>\Psi(\alpha, z)</math> denotes the Fox–Wright Psi function.
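
Several of the relationships in this list lend themselves to simulation. The sketch below, using only Python's standard `random` module, draws an Erlang variate as a sum of exponentials, a beta variate as ''X''/(''X'' + ''Y'') from two gamma variates with a common scale, and a Dirichlet vector by normalizing independent gamma variates. The function names, seed, and sample sizes are arbitrary illustrative choices, and the printed sample means are only statistical checks.

```python
import random

def erlang_from_exponentials(n, lam, rng):
    """Sum of n iid exponential(rate lam) draws, i.e. Gamma(shape n, rate lam)."""
    return sum(rng.expovariate(lam) for _ in range(n))

def beta_from_gammas(a, b, rng):
    """X / (X + Y) with X ~ Gamma(a, 1), Y ~ Gamma(b, 1) is Beta(a, b)."""
    x = rng.gammavariate(a, 1.0)
    y = rng.gammavariate(b, 1.0)
    return x / (x + y)

def dirichlet_from_gammas(alphas, rng):
    """Normalize independent Gamma(alpha_i, 1) draws to get a Dirichlet vector."""
    xs = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(xs)
    return [x / total for x in xs]

if __name__ == "__main__":
    rng = random.Random(42)
    n_draws = 50_000
    erl = [erlang_from_exponentials(4, 2.0, rng) for _ in range(n_draws)]
    print("Erlang mean, expected 4 / 2 = 2:", sum(erl) / n_draws)
    bet = [beta_from_gammas(2.0, 3.0, rng) for _ in range(n_draws)]
    print("Beta mean, expected 2 / 5 = 0.4:", sum(bet) / n_draws)
    print("Dirichlet draw:", dirichlet_from_gammas([1.0, 2.0, 3.0], rng))
```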

Compound gamma

If the shape parameter of the gamma distribution is known, but the inverse-scale parameter is unknown, then a gamma distribution for the inverse scale forms a conjugate prior. The compound distribution, which results from integrating out the inverse scale, has a closed-form solution known as the compound gamma distribution.

If, instead, the shape parameter is known but the mean is unknown, with the prior of the mean being given by another gamma distribution, then it results in the K-distribution.

Weibull and Stable count

The gamma distribution can be expressed as the product distribution of a Weibull distribution and a variant form of the stable count distribution. Its shape parameter ''k'' can be regarded as the inverse of Lévy's stability parameter in the stable count distribution:

: <math>f(x; k) = \int_0^\infty \frac{1}{u}\, W_k\left(\frac{x}{u}\right) \left[k u^{k-1}\, \mathfrak{N}_{\frac{1}{k}}\left(u^k\right)\right] du,</math>

where <math>\mathfrak{N}_\alpha(\nu)</math> is a standard stable count distribution of shape ''α'', and <math>W_k(x)</math> is a standard Weibull distribution of shape ''k''.

Statistical inference

Parameter estimation

Maximum likelihood estimation

The likelihood function for ''N'' iid observations (''x''_1, ..., ''x''_''N'') is

: L(k, \theta) = \prod_{i=1}^N f(x_i; k, \theta)

from which we calculate the log-likelihood function

: \ell(k, \theta) = (k - 1) \sum_{i=1}^N \ln x_i - \sum_{i=1}^N \frac{x_i}{\theta} - N k \ln \theta - N \ln \Gamma(k)

Finding the maximum with respect to ''θ'' by taking the derivative and setting it equal to zero yields the maximum likelihood estimator of the ''θ'' parameter, which equals the sample mean divided by the shape parameter ''k'':

: \hat{\theta} = \frac{1}{kN} \sum_{i=1}^N x_i = \frac{\bar{x}}{k}

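Substituting this into the log-likelihood function gives

: \ell(k) = (k - 1) \sum_{i=1}^N \ln x_i - N k - N k \ln\left(\frac{\sum_{i=1}^N x_i}{kN}\right) - N \ln \Gamma(k)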

Finding the maximum with respect to ''k'' by taking the derivative and setting it equal to zero yields

: \ln k - \psi(k) = \ln\left(\frac{1}{N} \sum_{i=1}^N x_i\right) - \frac{1}{N} \sum_{i=1}^N \ln x_i = \ln \bar{x} - \overline{\ln x}

where \psi is the digamma function and \overline{\ln x} is the sample mean of ln(''x''). There is no closed-form solution for ''k''. The function is numerically very well behaved, so if a numerical solution is desired, it can be found using, for example, Newton's method. An initial value of ''k'' can be found either using the method of moments, or using the approximation

: \ln k - \psi(k) \approx \frac{1}{2k}\left(1 + \frac{1}{6k + 1}\right)

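If we let

: s = \ln\left(\frac{1}{N} \sum_{i=1}^N x_i\right) - \frac{1}{N} \sum_{i=1}^N \ln x_i

then ''k'' is approximately

: k \approx \frac{3 - s + \sqrt{(s - 3)^2 + 24 s}}{12 s}

which is within 1.5% of the correct value. An explicit form for the Newton–Raphson update of this initial guess is:

: k \leftarrow k - \frac{\ln k - \psi(k) - s}{\frac{1}{k} - \psi'(k)}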
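As an illustration of the procedure described above, the following is a minimal Python sketch, not a reference implementation. The function names (`digamma`, `trigamma`, `fit_gamma_mle`) are hypothetical, and the digamma and trigamma functions are approximated here by a recurrence plus a truncated asymptotic series so that the sketch needs only the standard library; a production implementation would more likely call a numerical library for these.

```python
import math
import random


def digamma(x):
    """Approximate psi(x) for x > 0 (recurrence plus asymptotic series)."""
    result = 0.0
    while x < 6.0:  # shift the argument up until the series is accurate
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))
    return result + math.log(x) - 0.5 / x - series


def trigamma(x):
    """Approximate psi'(x) for x > 0 in the same way."""
    result = 0.0
    while x < 6.0:
        result += 1.0 / (x * x)
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = (1.0 / x) * inv2 * (1 / 6 - inv2 * (1 / 30 - inv2 / 42))
    return result + 1.0 / x + 0.5 * inv2 + series


def fit_gamma_mle(xs, tol=1e-10, max_iter=50):
    """Return (k_hat, theta_hat) for positive, not-all-equal observations."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_log = sum(math.log(x) for x in xs) / n
    s = math.log(mean_x) - mean_log  # positive by Jensen's inequality
    # Closed-form starting value obtained from the approximation for ln k - psi(k).
    k = (3.0 - s + math.sqrt((s - 3.0) ** 2 + 24.0 * s)) / (12.0 * s)
    for _ in range(max_iter):
        # Newton-Raphson step on g(k) = ln k - psi(k) - s.
        step = (math.log(k) - digamma(k) - s) / (1.0 / k - trigamma(k))
        k -= step
        if abs(step) < tol * k:
            break
    return k, mean_x / k  # theta_hat is the sample mean divided by k_hat
```

Calling `fit_gamma_mle(data)` on a list of positive observations returns the pair of estimates; because the starting value is already close to the root, only a few Newton steps are normally needed.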

Closed-form estimators

Consistent closed-form estimators of ''k'' and ''θ'' exist that are derived from the likelihood of the generalized gamma distribution.

The estimate for the shape ''k'' is

: \hat{k} = \frac{N \sum_{i=1}^N x_i}{N \sum_{i=1}^N x_i \ln x_i - \sum_{i=1}^N x_i \sum_{i=1}^N \ln x_i}

and the estimate for the scale ''θ'' is

: \hat{\theta} = \frac{1}{N^2} \left(N \sum_{i=1}^N x_i \ln x_i - \sum_{i=1}^N x_i \sum_{i=1}^N \ln x_i\right)

Using the sample mean of ''x'', the sample mean of ln(''x''), and the sample mean of the product ''x''·ln(''x'') simplifies the expressions to:

: \hat{\theta} = \overline{x \ln x} - \bar{x}\, \overline{\ln x}

: \hat{k} = \frac{\bar{x}}{\hat{\theta}}

If the rate parameterization is used, the estimate of the rate is \hat{\beta} = 1/\hat{\theta}.

These estimators are not strictly maximum likelihood estimators, but are instead referred to as mixed type log-moment estimators. They have, however, similar efficiency to the maximum likelihood estimators.
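A minimal Python sketch of the closed-form (mixed type log-moment) estimators follows. It assumes the simplified form in which the scale estimate is the sample mean of ''x''·ln(''x'') minus the product of the sample means of ''x'' and ln(''x''), and the shape estimate is the sample mean divided by the scale estimate. The function name `gamma_closed_form` is hypothetical.

```python
import math
import random


def gamma_closed_form(xs):
    """Return (k_hat, theta_hat) from three sample means; xs must be positive."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_log = sum(math.log(x) for x in xs) / n
    mean_xlog = sum(x * math.log(x) for x in xs) / n
    # Scale estimate: sample covariance (1/N version) between x and ln(x).
    theta_hat = mean_xlog - mean_x * mean_log
    # Shape estimate: sample mean divided by the scale estimate.
    k_hat = mean_x / theta_hat
    return k_hat, theta_hat
```

No iteration is involved, which is the practical appeal of these estimators over the maximum likelihood approach.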

Although these estimators are consistent, they have a small bias. A bias-corrected variant of the estimator for the scale ''θ'' is

: \tilde{\theta} = \frac{N}{N - 1} \hat{\theta}

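A bias correction for the shape parameter ''k'' is given as

: \tilde{k} = \hat{k} - \frac{1}{N} \left(3 \hat{k} - \frac{2}{3} \left(\frac{\hat{k}}{1 + \hat{k}}\right) - \frac{4}{5} \frac{\hat{k}}{(1 + \hat{k})^2}\right)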

Bayesian minimum mean squared error

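With known ''k'' and unknown ''θ'', the posterior density function for ''θ'' (using the standard scale-invariant prior for ''θ'') is

: P(\theta \mid k, x_1, \dots, x_N) \propto \frac{1}{\theta} \prod_{i=1}^N f(x_i; k, \theta)

Denoting

: y \equiv \sum_{i=1}^N x_i, \qquad P(\theta \mid k, x_1, \dots, x_N) = C(x_i)\, \theta^{-Nk - 1} e^{-y/\theta}

Integration with respect to ''θ'' can be carried out using a change of variables, revealing that 1/''θ'' is gamma-distributed with parameters ''α'' = ''Nk'', ''β'' = ''y''.

: \int_0^\infty \theta^{-Nk - 1 + m} e^{-y/\theta}\, d\theta = \int_0^\infty x^{Nk - 1 - m} e^{-xy}\, dx = y^{-(Nk - m)} \Gamma(Nk - m)

The moments can be computed by taking the ratio (''m'' by ''m'' = 0)

: \operatorname{E}[\theta^m] = \frac{\Gamma(Nk - m)}{\Gamma(Nk)} y^m

which shows that the mean ± standard deviation estimate of the posterior distribution for ''θ'' is

: \frac{y}{Nk - 1} \pm \sqrt{\frac{y^2}{(Nk - 1)^2 (Nk - 2)}}

Bayesian inference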

Conjugate prior

In Bayesian inference, the gamma distribution is the conjugate prior to many likelihood distributions: the Poisson, exponential, normal (with known mean), Pareto, gamma with known shape ''σ'', inverse gamma with known shape parameter, and Gompertz with known scale parameter.

The gamma distribution's conjugate prior is (Fink, D. 199 "A Compendium of Conjugate Priors", in progress report: Extension and enhancement of methods for setting data quality objectives, DOE contract 95‑831):

: p(k, \theta \mid p, q, r, s) = \frac{1}{Z} \frac{p^{k - 1} e^{-\theta^{-1} q}}{\Gamma(k)^r \theta^{k s}}

where ''Z'' is the normalizing constant with no closed-form solution. The posterior distribution can be found by updating the parameters as follows:

: p' = p \prod_i x_i, \quad q' = q + \sum_i x_i, \quad r' = r + n, \quad s' = s + n

where ''n'' is the number of observations, and ''x''_''i'' is the ''i''th observation.
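The parameter update can be sketched in a few lines of Python. This assumes the usual update rule for this prior with hyperparameters (''p'', ''q'', ''r'', ''s''): ''p'' is multiplied by the product of the observations, ''q'' is increased by their sum, and ''r'' and ''s'' are each increased by the number of observations. The function name `update_gamma_prior` is hypothetical, and the normalizing constant ''Z'' is not computed.

```python
import math


def update_gamma_prior(p, q, r, s, xs):
    """Return the posterior hyperparameters (p', q', r', s') after observing xs."""
    n = len(xs)
    p_new = p * math.prod(xs)  # product of observations enters through p
    q_new = q + sum(xs)        # sum of observations enters through q
    return p_new, q_new, r + n, s + n
```

Because the update is multiplicative in ''p'' and additive in the other parameters, processing the data in one batch or one observation at a time gives the same posterior hyperparameters. For long data sets the product can overflow, so tracking ln ''p'' instead would be a reasonable variation.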

Occurrence and applications

Consider a sequence of events, with the waiting time for each event being an exponential distribution with rate ''β''. Then the waiting time for the ''n''-th event to occur is the gamma distribution with integer shape ''α'' = ''n''. This construction of the gamma distribution allows it to model a wide variety of phenomena where several sub-events, each taking time with exponential distribution, must happen in sequence for a major event to occur. Examples include the waiting time of cell-division events, number of compensatory mutations for a given mutation, waiting time until a repair is necessary for a hydraulic system, and so on.

The gamma distribution has been used to model the size of insurance claims and rainfalls. This means that aggregate insurance claims and the amount of rainfall accumulated in a reservoir are modelled by a gamma process, much like the exponential distribution generates a Poisson process.
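The waiting-time construction can be checked numerically with a short simulation. The sketch below is illustrative only: the function name `waiting_time_nth_event` and the particular values of the event count and rate are assumptions made for the example. It sums independent exponential waiting times and relies on the fact that a gamma distribution with integer shape ''n'' and rate ''β'' has mean ''n''/''β'' and variance ''n''/''β''².

```python
import random


def waiting_time_nth_event(n, rate, rng):
    """Time until the n-th event when gaps between events are Exp(rate)."""
    return sum(rng.expovariate(rate) for _ in range(n))


def sample_mean_and_variance(values):
    """Plain sample mean and (biased) sample variance."""
    m = sum(values) / len(values)
    return m, sum((v - m) ** 2 for v in values) / len(values)


if __name__ == "__main__":
    rng = random.Random(1)
    draws = [waiting_time_nth_event(5, 2.0, rng) for _ in range(20000)]
    mean, var = sample_mean_and_variance(draws)
    # Theory for shape 5 and rate 2: mean 5/2 = 2.5, variance 5/4 = 1.25.
    print(mean, var)
```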

The gamma distribution is also used to model errors in multi-level Poisson regression models because a mixture of Poisson distributions with gamma-distributed rates has a known closed-form distribution, called the negative binomial.

In wireless communication, the gamma distribution is used to model the multi-path fading of signal power; see also Rayleigh distribution and Rician distribution.

In oncology, the age distribution of cancer incidence often follows the gamma distribution, wherein the shape and scale parameters predict, respectively, the number of driver events and the time interval between them.

In neuroscience, the gamma distribution is often used to describe the distribution of inter-spike intervals (J. G. Robson and J. B. Troy, "Nature of the maintained discharge of Q, X, and Y retinal ganglion cells of the cat", J. Opt. Soc. Am. A 4, 2301–2307 (1987); M.C.M. Wright, I.M. Winter, J.J. Forster, S. Bleeck, "Response to best-frequency tone bursts in the ventral cochlear nucleus is governed by ordered inter-spike interval statistics", Hearing Research 317 (2014)).

In bacterial gene expression, the copy number of a constitutively expressed protein often follows the gamma distribution, where the shape and scale parameters are, respectively, the mean number of bursts per cell cycle and the mean number of protein molecules produced by a single mRNA during its lifetime (N. Friedman, L. Cai and X. S. Xie (2006) "Linking stochastic dynamics to population distribution: An analytical framework of gene expression", ''Phys. Rev. Lett.'' 97, 168302).

In genomics, the gamma distribution was applied in the peak calling step (i.e., in recognition of signal) in ChIP-chip (DJ Reiss, MT Facciotti and NS Baliga (2008) "Model-based deconvolution of genome-wide DNA binding", ''Bioinformatics'', 24, 396–403) and ChIP-seq (MA Mendoza-Parra, M Nowicka, W Van Gool, H Gronemeyer (2013) "Characterising ChIP-seq binding patterns by model-based peak shape deconvolution", ''BMC Genomics'', 14:834) data analysis.

In Bayesian statistics, the gamma distribution is widely used as a conjugate prior. It is the conjugate prior for the precision (i.e. inverse of the variance) of a normal distribution. It is also the conjugate prior for the exponential distribution.

Random variate generation

Given the scaling property above, it is enough to generate gamma variables with ''θ'' = 1, as we can later convert to any value of ''β'' with a simple division. Suppose we wish to generate random variables from Gamma(''n'' + ''δ'', 1), where ''n'' is a non-negative integer and 0 < ''δ'' < 1. Using the fact that a Gamma(1, 1) distribution is the same as an Exp(1) distribution, and noting the method of generating exponential variables, we conclude that if ''U'' is uniformly distributed on (0, 1], then −ln(''U'') is distributed Gamma(1, 1) (i.e. inverse transform sampling). Now, using the "''α''-addition" property of the gamma distribution, we expand this result:

: -\sum_{k=1}^n \ln U_k \sim \Gamma(n, 1)

where the ''U''_''k'' are all uniformly distributed on (0, 1] and independent. All that is left now is to generate a variable distributed as Gamma(''δ'', 1) for 0 < ''δ'' < 1 and apply the "''α''-addition" property once more. This is the most difficult part.

Random generation of gamma variates is discussed in detail by Devroye (see Chapter 9, Section 3), noting that none are uniformly fast for all shape parameters. For small values of the shape parameter, the algorithms are often not valid. For arbitrary values of the shape parameter, one can apply the Ahrens and Dieter (see Algorithm GD, p. 53) modified acceptance-rejection method Algorithm GD (shape ''k'' ≥ 1), or transformation method when 0 < ''k'' < 1. Also see Cheng and Feast Algorithm GKM 3 or Marsaglia's squeeze method.

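The following is a version of the Ahrens–Dieter acceptance–rejection method:

# Generate ''U'', ''V'' and ''W'' as iid uniform (0, 1] variates.

# If ''U'' ≤ ''e''/(''e'' + ''δ'') then ''ξ'' = ''V''^(1/''δ'') and ''η'' = ''W'' ''ξ''^(''δ'' − 1). Otherwise, ''ξ'' = 1 − ln ''V'' and ''η'' = ''W'' ''e''^(−''ξ'').

# If ''η'' > ''ξ''^(''δ'' − 1) ''e''^(−''ξ'') then go to step 1.

# ''ξ'' is distributed as Γ(''δ'', 1).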

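A summary of this is

: \theta \left(\xi - \sum_{i=1}^{\lfloor k \rfloor} \ln U_i\right) \sim \Gamma(k, \theta)

where \lfloor k \rfloor is the integer part of ''k'', ''ξ'' is generated via the algorithm above with ''δ'' = ''k'' − \lfloor k \rfloor (the fractional part of ''k'') and the ''U''_''i'' are all independent.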
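A Python sketch of this construction is given below. It is a reading of the procedure under stated assumptions rather than a definitive implementation: the branch threshold is taken as ''e''/(''e'' + ''δ''), and the acceptance test is written after cancelling the factor shared by ''η'' and the bound (so the first branch accepts when ''W'' ≤ ''e''^(−''ξ'') and the second when ''W'' ≤ ''ξ''^(''δ'' − 1)), which is algebraically equivalent and avoids dividing by a tiny ''ξ''. The function names are hypothetical.

```python
import math
import random


def _uniform(rng):
    """Uniform variate on (0, 1]; rng.random() itself lies in [0, 1)."""
    return 1.0 - rng.random()


def gamma_fraction(delta, rng):
    """Draw from Gamma(delta, 1) for 0 < delta < 1 by acceptance-rejection."""
    threshold = math.e / (math.e + delta)
    while True:
        u, v, w = _uniform(rng), _uniform(rng), _uniform(rng)
        if u <= threshold:
            xi = v ** (1.0 / delta)        # proposal on (0, 1]
            accept = w <= math.exp(-xi)
        else:
            xi = 1.0 - math.log(v)         # proposal on [1, infinity)
            accept = w <= xi ** (delta - 1.0)
        if accept:
            return xi


def gamma_variate(k, theta, rng):
    """Draw from Gamma(k, theta): integer part via -ln(U), fraction via rejection."""
    n = math.floor(k)
    delta = k - n
    xi = gamma_fraction(delta, rng) if delta > 0.0 else 0.0
    total = xi - sum(math.log(_uniform(rng)) for _ in range(n))
    return theta * total
```

The cost of `gamma_variate` grows linearly with the integer part of ''k'', since one logarithm is evaluated per unit of shape.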

While the above approach is technically correct, Devroye notes that it is linear in the value of ''k'' and generally is not a good choice. Instead, he recommends using either rejection-based or table-based methods, depending on context.

For example, Marsaglia's simple transformation-rejection method relying on one normal variate ''X'' and one uniform variate ''U'':

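# Set ''d'' = ''a'' − 1/3 and ''c'' = 1/√(9''d'').

# Set ''v'' = (1 + ''cX'')³.

# If ''v'' > 0 and ln ''U'' < ''X''²/2 + ''d'' − ''dv'' + ''d'' ln ''v'', return ''dv''; else go back to step 2.

With 1 ≤ ''a'' = ''k'', this generates a gamma distributed random number in time that is approximately constant with ''k''. The acceptance rate does depend on ''k'', with an acceptance rate of 0.95, 0.98, and 0.99 for ''k'' = 1, 2, and 4. For ''k'' < 1, one can use ''γ''_''k'' = ''γ''_(''k''+1) ''U''^(1/''k'') to boost ''k'' to be usable with this method.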
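A minimal Python sketch of this transformation-rejection method follows, under the assumption that the constants are ''d'' = ''k'' − 1/3 and ''c'' = 1/√(9''d'') and that the acceptance test compares ln ''U'' with ''X''²/2 + ''d'' − ''dv'' + ''d'' ln ''v''. The function name `marsaglia_gamma` is hypothetical, the result has unit scale, and a fresh normal and uniform variate are drawn on every attempt.

```python
import math
import random


def marsaglia_gamma(k, rng):
    """Draw from Gamma(k, 1) using one normal and one uniform variate per attempt."""
    if k < 1.0:
        # Boost small shapes: Gamma(k) = Gamma(k + 1) * U**(1/k).
        u = 1.0 - rng.random()
        return marsaglia_gamma(k + 1.0, rng) * u ** (1.0 / k)
    d = k - 1.0 / 3.0
    c = 1.0 / math.sqrt(9.0 * d)
    while True:
        x = rng.gauss(0.0, 1.0)
        v = (1.0 + c * x) ** 3
        if v <= 0.0:
            continue  # v must be positive before taking its logarithm
        u = 1.0 - rng.random()  # in (0, 1], so log(u) is finite
        if math.log(u) < 0.5 * x * x + d - d * v + d * math.log(v):
            return d * v
```

Multiplying the returned value by ''θ'' gives a Gamma(''k'', ''θ'') variate, by the scaling property.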
