
Server (computing)

In computing, a server is a piece of computer hardware or software (computer program) that provides functionality for other programs or devices, called "clients". This architecture is called the client–server model. Servers can provide various functionalities, often called "services", such as sharing data or resources among multiple clients, or performing computation for a client. A single server can serve multiple clients, and a single client can use multiple servers. A client process may run on the same device or may connect over a network to a server on a different device. Typical servers are database servers, file servers, mail servers, print servers, web servers, game servers, and application servers. Client–server systems are most frequently implemented by (and often identified with) the request–response model: a client sends a request to the server, which performs some action and sends a response back to the client, typically with a result or acknow ...
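The request–response exchange described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `serve_one` and `request` are hypothetical, the "action" performed by the server (upper-casing the payload) is invented for the example, and a local `socket.socketpair()` stands in for a real network connection.

```python
import socket
import threading

def serve_one(conn):
    """Hypothetical server: read one request, act on it, send one response."""
    with conn:
        request_text = conn.recv(1024).decode()
        # The "action" is an assumption for illustration: upper-case the payload.
        conn.sendall(f"OK {request_text.upper()}".encode())

def request(conn, message):
    """Hypothetical client: send one request and wait for the response."""
    with conn:
        conn.sendall(message.encode())
        return conn.recv(1024).decode()

# A connected socket pair stands in for a client and server on a network.
server_end, client_end = socket.socketpair()
worker = threading.Thread(target=serve_one, args=(server_end,))
worker.start()
reply = request(client_end, "ping")
worker.join()
print(reply)
```

The server runs in its own thread only so that both ends fit in one script; in practice the client and server would usually be separate processes, possibly on different devices.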

Game Server

A game server (also sometimes referred to as a host) is a server which is the authoritative source of events in a multiplayer video game. The server transmits enough data about its internal state to allow its connected clients to maintain their own accurate version of the game world for display to players. It also receives and processes each player's input.

Types. Dedicated server: Dedicated servers simulate game worlds without supporting direct input or output, except that required for their administration. Players must connect to the server with separate client programs in order to see and interact with the game. The foremost advantage of dedicated servers is their suitability for hosting in professional data centers, with all of the reliability and performance benefits that entails. Remote hosting also eliminates the low-latency advantage that would otherwise be held by any player who hosts and connects to a server from the same machine or local network. Dedicated servers ...
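As a rough sketch of what "authoritative" means here, the toy server below owns the game state, validates each player's input itself, and hands out snapshots for clients to display. Everything in it is an assumption made for illustration: the `GameServer` class, the one-dimensional world, and the one-step-per-input rule do not come from any particular game or engine.

```python
from dataclasses import dataclass, field

@dataclass
class GameServer:
    """Hypothetical authoritative server for a 1-D world of `width` cells."""
    width: int = 10
    positions: dict = field(default_factory=dict)  # player name -> x position

    def connect(self, player):
        # New players start at the left edge of the world.
        self.positions[player] = 0

    def handle_input(self, player, dx):
        # The server decides the outcome: a move is clamped to a single step
        # and to the world bounds, whatever the client asked for.
        step = max(-1, min(1, dx))
        x = self.positions[player] + step
        self.positions[player] = max(0, min(self.width - 1, x))

    def snapshot(self):
        # Enough state for each client to redraw its own copy of the world.
        return dict(self.positions)

server = GameServer()
server.connect("alice")
server.connect("bob")
server.handle_input("alice", 1)
server.handle_input("bob", 5)   # oversized move, clamped to one step
server.handle_input("bob", -1)
state = server.snapshot()
print(state)
```

Clients in this model never change positions directly; they only submit inputs and render whatever the latest snapshot says.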

Daemon (computing)

In multitasking computer operating systems, a daemon is a computer program that runs as a background process, rather than being under the direct control of an interactive user. Traditionally, the process names of a daemon end with the letter ''d'', for clarification that the process is in fact a daemon, and for differentiation between a daemon and a normal computer program. For example, syslogd is a daemon that implements the system logging facility, and sshd is a daemon that serves incoming SSH connections. In a Unix environment, the parent process of a daemon is often, but not always, the init process. A daemon is usually created either by a process forking a child process and then immediately exiting, thus causing init to adopt the child process, or by the init process directly launching the daemon. In addition, a daemon launched by forking and exiting typically must perform other operations, such as dissociating the process from any controlling terminal (tty). Such procedures ar ...
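The fork-and-exit technique described above can be sketched with Python's `os` module on a Unix-like system. This is a simplified illustration, not a complete daemonization routine: the function name `spawn_daemon` and the pipe used to report back are assumptions, and the calling process stays alive here, so an intermediate child plays the role of the process that forks and immediately exits.

```python
import os

def spawn_daemon(task):
    """Run task() in a detached grandchild and return the session id it reports."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Intermediate child: start a new session, which dissociates the
        # process from any controlling terminal.
        os.close(read_fd)
        os.setsid()
        if os.fork() == 0:
            # Grandchild: this is the daemon-like process.
            try:
                task()
                os.write(write_fd, str(os.getsid(0)).encode())
            finally:
                os._exit(0)
        # The intermediate child exits at once, orphaning the grandchild so
        # that init (or another reaper process) adopts it.
        os._exit(0)
    # Original process: reap the intermediate child, then read the report.
    os.close(write_fd)
    os.waitpid(pid, 0)
    with os.fdopen(read_fd) as reader:
        return int(reader.read())

daemon_sid = spawn_daemon(lambda: None)
print(daemon_sid != os.getsid(0))
```

A production daemon would typically also change its working directory, reset its umask, and redirect the standard streams; those steps are omitted to keep the sketch short.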

Jargon File

The Jargon File is a glossary and usage dictionary of slang used by computer programmers. The original Jargon File was a collection of terms from technical cultures such as the MIT AI Lab, the Stanford AI Lab (SAIL) and others of the old ARPANET AI/LISP/PDP-10 communities, including Bolt, Beranek and Newman, Carnegie Mellon University, and Worcester Polytechnic Institute. It was published in paperback form in 1983 as ''The Hacker's Dictionary'' (edited by Guy Steele), revised in 1991 as ''The New Hacker's Dictionary'' (ed. Eric S. Raymond; third edition published 1996). The concept of the file began with the Tech Model Railroad Club (TMRC) that came out of early TX-0 and PDP-1 hackers in the 1950s, where the term hacker, the ethic, the philosophies and some of the nomenclature emerged.

1975 to 1983: The Jargon File (referred to here as "Jargon-1" or "the File") was made by Raphael Finkel at Stanford in 1975. From that time until the plug was finally pulled on the ...

Host (network)

A network host is a computer or other device connected to a computer network. A host may work as a server offering information resources, services, and applications to users or other hosts on the network. Hosts are assigned at least one network address. A computer participating in networks that use the Internet protocol suite may also be called an IP host. Specifically, computers participating in the Internet are called Internet hosts. Internet hosts and other IP hosts have one or more IP addresses assigned to their network interfaces. The addresses are configured either manually by an administrator, automatically at startup by means of the Dynamic Host Configuration Protocol (DHCP), or by stateless address autoconfiguration methods. Network hosts that participate in applications that use the client–server model of computing are classified as server or client systems. Network hosts may also function as nodes in peer-to-peer applications, in which all nodes share and consume r ...

Internet

The Internet (or internet) is the global system of interconnected computer networks that uses the Internet protocol suite (TCP/IP) to communicate between networks and devices. It is a ''network of networks'' that consists of private, public, academic, business, and government networks of local to global scope, linked by a broad array of electronic, wireless, and optical networking technologies. The Internet carries a vast range of information resources and services, such as the inter-linked hypertext documents and applications of the World Wide Web (WWW), electronic mail, telephony, and file sharing. The origins of the Internet date back to the development of packet switching and research commissioned by the United States Department of Defense in the 1960s to enable time-sharing of computers. The primary precursor network, the ARPANET, initially served as a backbone for interconnection of regional academic and military networks in the 1970s to enable resource shari ...

ARPANET

The Advanced Research Projects Agency Network (ARPANET) was the first wide-area packet-switched network with distributed control and one of the first networks to implement the TCP/IP protocol suite. Both technologies became the technical foundation of the Internet. The ARPANET was established by the Advanced Research Projects Agency (ARPA) of the United States Department of Defense. Building on the ideas of J. C. R. Licklider, Bob Taylor initiated the ARPANET project in 1966 to enable access to remote computers. Taylor appointed Larry Roberts as program manager. Roberts made the key decisions about the network design. He incorporated Donald Davies' concepts and designs for packet switching, and sought input from Paul Baran. ARPA awarded the contract to build the network to Bolt Beranek & Newman, who developed the first protocol for the network. Roberts engaged Leonard Kleinrock at UCLA to develop mathematical methods for analyzing the packet network technology. The first ...

Internet Engineering Task Force

The Internet Engineering Task Force (IETF) is a standards organization for the Internet and is responsible for the technical standards that make up the Internet protocol suite (TCP/IP). It has no formal membership roster or requirements and all its participants are volunteers. Their work is usually funded by employers or other sponsors. The IETF was initially supported by the federal government of the United States but since 1993 has operated under the auspices of the Internet Society, an international non-profit organization.

Organization: The IETF is organized into a large number of working groups and birds of a feather informal discussion groups, each dealing with a specific topic. The IETF operates in a bottom-up task creation mode, largely driven by these working groups. Each working group has an appointed chairperson (or sometimes several co-chairs); a charter that describes its focus; and what it is expected to produce, and when. It is open to all who want to partic ...

Kendall's Notation

In queueing theory, a discipline within the mathematical theory of probability, Kendall's notation (or sometimes Kendall notation) is the standard system used to describe and classify a queueing node. In 1953, D. G. Kendall proposed describing queueing models using three factors written A/S/''c'', where A denotes the time between arrivals to the queue, S the service time distribution and ''c'' the number of service channels open at the node. It has since been extended to A/S/''c''/''K''/''N''/D where ''K'' is the capacity of the queue, ''N'' is the size of the population of jobs to be served, and D is the queueing discipline. When the final three parameters are not specified (e.g. M/M/1 queue), it is assumed ''K'' = ∞, ''N'' = ∞ and D = FIFO.

A: The arrival process. A code describing the arrival process. The codes used are:

S: The service time distribution. This gives the distribution of time of the service of a customer. Some common notations are ...
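The defaulting rule for the extended notation (unspecified ''K'', ''N'' and D become ∞, ∞ and FIFO) can be illustrated with a small parser. This is a minimal sketch, not a standard library facility: the names `KendallSpec`, `parse_kendall` and `_size` are hypothetical, and the parser does not check that the A and S codes are valid distribution codes.

```python
import math
from dataclasses import dataclass

@dataclass
class KendallSpec:
    arrival: str                    # A: arrival process code
    service: str                    # S: service time distribution code
    servers: int                    # c: number of service channels
    capacity: float = math.inf      # K: defaults to infinity
    population: float = math.inf    # N: defaults to infinity
    discipline: str = "FIFO"        # D: defaults to FIFO

def _size(token):
    # Accept an explicit infinity marker or a plain integer.
    return math.inf if token in ("∞", "inf") else int(token)

def parse_kendall(text):
    """Parse a string such as 'M/M/1' or 'M/D/2/10' into a KendallSpec."""
    parts = text.strip().split("/")
    if not 3 <= len(parts) <= 6:
        raise ValueError(f"expected 3 to 6 fields, got {len(parts)}")
    spec = KendallSpec(parts[0], parts[1], int(parts[2]))
    if len(parts) > 3:
        spec.capacity = _size(parts[3])
    if len(parts) > 4:
        spec.population = _size(parts[4])
    if len(parts) > 5:
        spec.discipline = parts[5]
    return spec

short = parse_kendall("M/M/1")
bounded = parse_kendall("M/D/2/10")
print(short)
print(bounded)
```

Parsing "M/M/1" leaves all three trailing fields at their defaults, while "M/D/2/10" overrides only the capacity.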

Queueing Theory

Queueing theory is the mathematical study of waiting lines, or queues. A queueing model is constructed so that queue lengths and waiting time can be predicted. Queueing theory is generally considered a branch of operations research because the results are often used when making business decisions about the resources needed to provide a service. Queueing theory has its origins in research by Agner Krarup Erlang when he created models to describe the system of Copenhagen Telephone Exchange company, a Danish company. The ideas have since seen applications including telecommunication, traffic engineering, computing and, particularly in industrial engineering, in the design of factories, shops, offices and hospitals, as well as in project management.

Spelling: The spelling "queueing" over "queuing" is typically encountered in the academic research field. In fact, one of the flagship journals of the field is ''Queueing Systems''.

Single queueing nodes: A queue, or queueing nod ...
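To make "queue lengths and waiting time can be predicted" concrete, the sketch below evaluates the standard steady-state results for the simplest model, a single-server M/M/1 queue. The model choice is an assumption for illustration (Poisson arrivals, exponential service times, one server, arrival rate below service rate), and the function name `mm1_metrics` is hypothetical.

```python
def mm1_metrics(arrival_rate, service_rate):
    """Steady-state averages for an M/M/1 queue (rates per unit time)."""
    if not 0 < arrival_rate < service_rate:
        raise ValueError("need 0 < arrival_rate < service_rate for stability")
    utilization = arrival_rate / service_rate            # rho = lambda / mu
    mean_in_system = utilization / (1 - utilization)     # L = rho / (1 - rho)
    mean_time_in_system = 1 / (service_rate - arrival_rate)  # W = 1 / (mu - lambda)
    return {
        "utilization": utilization,
        "mean_in_system": mean_in_system,
        "mean_time_in_system": mean_time_in_system,
    }

# Example: 2 arrivals per minute, server completes 4 jobs per minute.
metrics = mm1_metrics(2.0, 4.0)
print(metrics)
```

The two averages are tied together by Little's law, L = λW, which gives a quick consistency check on the numbers.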

Computing Cluster

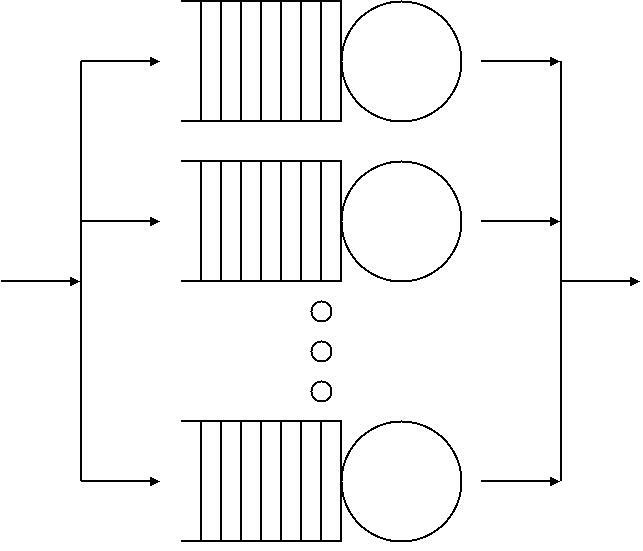

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The components of a cluster are usually connected to each other through fast local area networks, with each node (computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems can be used on each computer, or different hardware. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as a result of convergence of a number of computing trends including th ...

Personal Computer

A personal computer (PC) is a multi-purpose microcomputer whose size, capabilities, and price make it feasible for individual use. Personal computers are intended to be operated directly by an end user, rather than by a computer expert or technician. Unlike large, costly minicomputers and mainframes, time-sharing by many people at the same time is not used with personal computers. Primarily in the late 1970s and 1980s, the term home computer was also used. Institutional or corporate computer owners in the 1960s had to write their own programs to do any useful work with the machines. While personal computer users may develop their own applications, usually these systems run commercial software, free-of-charge software ("freeware"), which is most often proprietary, or free and open-source software, which is provided in "ready-to-run", or binary, form. Software for personal computers is typically developed and distributed independently from the hardware or operating system ...