
Geotargeting

In geomarketing and internet marketing, geotargeting is the method of delivering different content to visitors based on their geolocation. This includes country, region/state, city, metro code/ZIP code, organization, IP address, ISP, or other criteria. A common usage of geotargeting is found in online advertising, as well as internet television with sites such as iPlayer and Hulu. In these circumstances, content is often restricted to users geolocated in specific countries; this approach serves as a means of implementing digital rights management. Use of proxy servers and virtual private networks may give a false location.

Geographical information provided by the visitor: In geotargeting with geolocation software, the geolocation is based on geographical and other personal information that is provided by the visitor or others.

Content by choice: Some websites, for example FedEx and UPS, utilize geotargeting by giving users the choice to select their country location. The user is ...
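The country-based restriction described above can be sketched as a simple lookup. This is a minimal illustration, not any real site's logic: the `LICENSED_REGIONS` table, the title names, and the `can_view` function are all hypothetical, and the country code is assumed to come from some earlier geolocation step.

```python
from typing import Optional

# Hypothetical catalogue: the country codes allowed to view each title.
LICENSED_REGIONS = {
    "drama-series": {"GB"},
    "news-clip": {"GB", "US", "DE"},
}


def can_view(title: str, country_code: Optional[str]) -> bool:
    """Return True if a visitor geolocated to country_code may view title.

    A visitor whose location could not be determined (None) is refused,
    which is one possible policy among several.
    """
    if country_code is None:
        return False
    return country_code.upper() in LICENSED_REGIONS.get(title, set())
```

Because the decision depends only on the reported location, a visitor behind a proxy server or VPN in a licensed country would pass this check, which matches the caveat in the text.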

Online Advertising

Online advertising, also known as online marketing, Internet advertising, digital advertising or web advertising, is a form of marketing and advertising which uses the Internet to promote products and services to audiences and platform users. Online advertising includes email marketing, search engine marketing (SEM), social media marketing, many types of display advertising (including web banner advertising), and mobile advertising. Advertisements are increasingly being delivered via automated software systems operating across multiple websites, media services and platforms, known as programmatic advertising. Like other advertising media, online advertising frequently involves a publisher, who integrates advertisements into its online content, and an advertiser, who provides the advertisements to be displayed on the publisher's content. Other potential participants include advertising agencies who help generate and place the ad copy, an ad server which technologically ...

Geolocation Software

In computing, Internet geolocation is software capable of deducing the geographic position of a device connected to the Internet. For example, the device's IP address can be used to determine the country, city, or ZIP code, and thus its geographical location. Other methods include examination of Wi-Fi hotspots.

Data sources: An IP address is assigned to each device (e.g. computer, printer) participating in a computer network that uses the Internet Protocol for communication (DOD Standard Internet Protocol, January 1980). The protocol specifies that each IP packet must have a header which contains, among other things, the IP address of the sender. There are a number of free and paid subscription geolocation databases, ranging from country level to state or city (including ZIP/post code level), each with varying claims of accuracy (generally higher at the country level). These databases typically contain IP address data which may be used in firewalls, ad servers, routing ...
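A database lookup of this kind can be sketched with Python's standard `ipaddress` module. The table below is purely illustrative: it uses address ranges reserved for documentation, and the two-letter codes `AA`, `BB`, and `CC` are made-up placeholders, not real assignments. A real geolocation database would be far larger and would normally use an indexed search rather than a linear scan.

```python
import ipaddress
from typing import Optional

# Hypothetical geolocation table: (network, placeholder country code).
# The networks are ranges reserved for documentation, not real allocations.
GEO_TABLE = [
    (ipaddress.ip_network("192.0.2.0/24"), "AA"),
    (ipaddress.ip_network("198.51.100.0/24"), "BB"),
    (ipaddress.ip_network("2001:db8::/32"), "CC"),
]


def lookup_country(ip: str) -> Optional[str]:
    """Return the placeholder country code for ip, or None if unknown."""
    addr = ipaddress.ip_address(ip)
    for network, country in GEO_TABLE:
        # Compare only within the same IP version (IPv4 vs IPv6).
        if addr.version == network.version and addr in network:
            return country
    return None
```

Returning `None` for unmatched addresses keeps "unknown" distinct from any real answer, so the caller can decide on a fallback.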

Proxy Server

In computer networking, a proxy server is a server application that acts as an intermediary between a client requesting a resource and the server providing that resource. Instead of connecting directly to a server that can fulfill a request for a resource, such as a file or web page, the client directs the request to the proxy server, which evaluates the request and performs the required network transactions. This serves as a method to simplify or control the complexity of the request, or provide additional benefits such as load balancing, privacy, or security. Proxies were devised to add structure and encapsulation to distributed systems. A proxy server thus functions on behalf of the client when requesting service, potentially masking the true origin of the request to the resource server.

Types: A proxy server may reside on the user's local computer, or at any point between the user's computer and destination servers on the Internet. A proxy server that passes unmodifie ...

Wikimedia Donation Banners

The Wikimedia Foundation, Inc., or Wikimedia for short and abbreviated as WMF, is an American 501(c)(3) nonprofit organization headquartered in San Francisco, California and registered as a charitable foundation under local laws. Best known as the hosting platform for Wikipedia, a crowdsourced online encyclopedia, it also hosts other related projects and MediaWiki, a wiki software. The Wikimedia Foundation was established in 2003 in St. Petersburg, Florida, by Jimmy Wales as a nonprofit way to fund Wikipedia, Wiktionary, and other crowdsourced wiki projects that had until then been hosted by Bomis, Wales's for-profit company. The Foundation finances itself mainly through millions of small donations from Wikipedia readers, collected through email campaigns and annual fundraising banners placed on Wikipedia and its sister projects. These are complemented by grants from philanthropic organizations and ...

Web Crawler

A Web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and that is typically operated by search engines for the purpose of Web indexing (web spidering). Web search engines and some other websites use Web crawling or spidering software to update their web content or indices of other sites' web content. Web crawlers copy pages for processing by a search engine, which indexes the downloaded pages so that users can search more efficiently. Crawlers consume resources on visited systems and often visit sites unprompted. Issues of schedule, load, and "politeness" come into play when large collections of pages are accessed. Mechanisms exist for public sites not wishing to be crawled to make this known to the crawling agent. For example, including a robots.txt file can request bots to index only parts of a website, or nothing at all. The number of Internet pages is extremely larg ...
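As a small illustration of the robots.txt mechanism, the sketch below uses Python's standard `urllib.robotparser` to check whether a crawler may fetch a URL. The robots.txt content, the `ExampleBot` name, and the `example.com` URLs are assumptions made up for the example; the rules are parsed from a string, so no network access is needed.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking all bots to stay out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser without fetching anything."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A polite crawler would run this check before requesting each page.
allowed_public = parser.can_fetch("ExampleBot", "https://example.com/public/page")
allowed_private = parser.can_fetch("ExampleBot", "https://example.com/private/page")
```

Note that robots.txt is only a request: nothing in the protocol stops a crawler that chooses to ignore it.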

Online Locator Service

An online locator service (also known as location finder, store finder, or store locator, or similar) is a feature found on websites of businesses with multiple locations that allows visitors to the site to find locations of the business within proximity of an address or postal code or within a selected region (Henriette Martel-Lawso, 200 Marketing Ideas for Your Website, Marketing Cues, 2004, p. 183). Types of businesses that often have this feature include chain retailers, hotels, restaurants, and other businesses that can be found in multiple metropolitan areas. The locator also provides important information about each location, including its address, phone number, hours of operation, services provided, and sometimes directions to the location. On many sites, searches can be narrowed to locations providing certain services not provided at all locations (e.g. 24-hour operation, handicap accessibility, pharmacies). Location finders often operate in conjunction with a well-known onli ...
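A proximity search of this kind might be sketched as follows, assuming each location is stored with latitude and longitude and that the visitor's address has already been converted to coordinates. The store records, the `services` field, and the function names are hypothetical. Distances use the haversine great-circle formula with a mean Earth radius of 6371 km, which is an approximation.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; an approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def find_nearby(stores, lat, lon, radius_km, service=None):
    """Return names of stores within radius_km, nearest first.

    If service is given, keep only stores that offer it.
    """
    hits = []
    for store in stores:
        if service is not None and service not in store["services"]:
            continue
        dist = haversine_km(lat, lon, store["lat"], store["lon"])
        if dist <= radius_km:
            hits.append((dist, store["name"]))
    return [name for _, name in sorted(hits)]


# Hypothetical store records used only for illustration.
STORES = [
    {"name": "A", "lat": 0.0, "lon": 0.0, "services": {"pharmacy"}},
    {"name": "B", "lat": 0.0, "lon": 1.0, "services": {"24h"}},
    {"name": "C", "lat": 0.0, "lon": 10.0, "services": {"24h"}},
]
```

Filtering by service before computing distance mirrors the narrowing step described above, where a search is limited to locations offering a particular service.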

Traceroute

In computing, traceroute and tracert are computer network diagnostic commands for displaying possible routes (paths) and measuring transit delays of packets across an Internet Protocol (IP) network. The history of the route is recorded as the round-trip times of the packets received from each successive host (remote node) in the route (path); the sum of the mean times in each hop is a measure of the total time spent to establish the connection. Traceroute proceeds unless all (usually three) sent packets are lost more than twice; then the connection is lost and the route cannot be evaluated. Ping, on the other hand, only computes the final round-trip times from the destination point. For Internet Protocol Version 6 (IPv6) the tool sometimes has the name traceroute6 and tracert6.

Implementations: The command traceroute is available on many modern operating systems. On Unix-like systems such as FreeBSD, macOS, and Linux it is available as a command line tool. Traceroute is ...
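The per-hop bookkeeping described above (several probes per hop, a mean per hop, and a sum of those means) can be sketched as below. This only processes already-collected round-trip times and does not send any packets or parse real traceroute output. The function names and the use of `None` for a lost probe are assumptions for the example.

```python
from statistics import mean
from typing import List, Optional, Tuple


def hop_mean_ms(probes: List[Optional[float]]) -> Optional[float]:
    """Mean round-trip time for one hop, ignoring lost probes (None)."""
    answered = [rtt for rtt in probes if rtt is not None]
    return mean(answered) if answered else None


def summarize_route(
    hops: List[List[Optional[float]]],
) -> Tuple[List[Optional[float]], float]:
    """Return (per-hop means, sum of the means that could be computed)."""
    means = [hop_mean_ms(probes) for probes in hops]
    total = sum(m for m in means if m is not None)
    return means, total
```

For example, `summarize_route([[1.0, 2.0, 3.0], [10.0, None, 14.0]])` yields per-hop means of 2.0 and 12.0 and a total of 14.0; a hop where every probe was lost contributes `None` and is left out of the sum.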

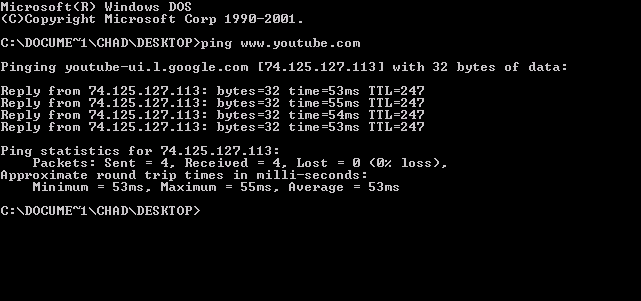

Ping (Networking Utility)

ping is a computer network administration software utility used to test the reachability of a host on an Internet Protocol (IP) network. It is available for virtually all operating systems that have networking capability, including most embedded network administration software. Ping measures the round-trip time for messages sent from the originating host to a destination computer that are echoed back to the source. The name comes from active sonar terminology that sends a pulse of sound and listens for the echo to detect objects under water. Ping operates by means of Internet Control Message Protocol (ICMP) packets. Pinging involves sending an ICMP echo request to the target host and waiting for an ICMP echo reply. The program reports errors, packet loss, and a statistical summary of the results, typically including the minimum, maximum, the mean round-trip times, and standard deviation of the mean. The command-line options of the ping utility and its output vary bet ...
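The statistical summary can be illustrated with a short sketch that works on round-trip times already measured, without sending ICMP packets. The function name and the returned dictionary keys are assumptions, and the spread is computed here as the population standard deviation of the samples, which may differ from what a particular ping implementation reports.

```python
from statistics import mean, pstdev
from typing import Dict, List, Optional


def summarize_ping(rtts_ms: List[float], sent: int) -> Dict[str, Optional[float]]:
    """Summarize echo replies: packet loss plus min/avg/max/stddev in ms.

    rtts_ms holds one round-trip time per reply received; sent is the
    number of echo requests that were sent.
    """
    if sent <= 0:
        raise ValueError("sent must be positive")
    loss_pct = 100.0 * (sent - len(rtts_ms)) / sent
    if not rtts_ms:
        # No replies at all: only the loss figure is meaningful.
        return {"loss_pct": loss_pct, "min": None, "avg": None,
                "max": None, "stddev": None}
    return {
        "loss_pct": loss_pct,
        "min": min(rtts_ms),
        "avg": mean(rtts_ms),
        "max": max(rtts_ms),
        "stddev": pstdev(rtts_ms),
    }
```

With three replies of 10, 20, and 30 ms out of four requests, the summary reports 25% packet loss, a minimum of 10 ms, a mean of 20 ms, and a maximum of 30 ms.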

Cloaking

Cloaking is a search engine optimization (SEO) technique in which the content presented to the search engine spider is different from that presented to the user's browser. This is done by delivering content based on the IP addresses or the User-Agent HTTP header of the user requesting the page. When a user is identified as a search engine spider, a server-side script delivers a different version of the web page, one that contains content not present on the visible page, or that is present but not searchable. The purpose of cloaking is sometimes to deceive search engines so they display the page when it would not otherwise be displayed (black hat SEO). However, it can also be a functional (though antiquated) technique for informing search engines of content they would not otherwise be able to locate because it is embedded in non-textual containers, such as video or certain Adobe Flash components. Since 2006, better methods of accessibility, including progressive enhancement, h ...

Search Engine Optimization

Search engine optimization (SEO) is the process of improving the quality and quantity of website traffic to a website or a web page from search engines. SEO targets unpaid traffic (known as "natural" or "organic" results) rather than direct traffic or paid traffic. Unpaid traffic may originate from different kinds of searches, including image search, video search, academic search, news search, and industry-specific vertical search engines. As an Internet marketing strategy, SEO considers how search engines work, the computer-programmed algorithms that dictate search engine behavior, what people search for, the actual search terms or keywords typed into search engines, and which search engines are preferred by their targeted audience. SEO is performed because a website will receive more visitors from a search engine when websites rank higher on the search engine results page (SERP). These visitors can then potentially be converted into customers.

History: Webmast ...

The Wall Street Journal

The Wall Street Journal is an American business-focused, international daily newspaper based in New York City, with international editions also available in Chinese and Japanese. The Journal, along with its Asian editions, is published six days a week by Dow Jones & Company, a division of News Corp. The newspaper is published in the broadsheet format and online. The Journal has been printed continuously since its inception on July 8, 1889, by Charles Dow, Edward Jones, and Charles Bergstresser. The Journal is regarded as a newspaper of record, particularly in terms of business and financial news. The newspaper has won 38 Pulitzer Prizes, the most recent in 2019. The Wall Street Journal is one of the largest newspapers in the United States by circulation, with a circulation of about 2.834 million copies (including nearly 1,829,000 digital sales) compared with USA Today's 1.7 million. The Journal publishes the luxury news and lifestyle magazi ...

User Agent

In computing, a user agent is any software, acting on behalf of a user, which "retrieves, renders and facilitates end-user interaction with Web content". A user agent is therefore a special kind of software agent. Some prominent examples of user agents are web browsers and email readers. Often, a user agent acts as the client in a client–server system. In some contexts, such as within the Session Initiation Protocol (SIP), the term user agent refers to both end points of a communications session.

User agent identification: When a software agent operates in a network protocol, it often identifies itself, its application type, operating system, device model, software vendor, or software revision, by submitting a characteristic identification string to its operating peer. In HTTP, SIP (RFC 3261, SIP: Session Initiation Protocol, IETF, The Internet Society, 2002), and NNTP protocols, this identification is transmitted in a header field User-Agent. Bots, such as W ...
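Reading the identification string on the receiving side might look like the sketch below. The `BOT_TOKENS` list and both function names are assumptions for illustration, and substring matching is only a rough heuristic: a client can send any User-Agent value it likes, so the result should not be treated as proof of identity.

```python
from typing import Mapping

# Hypothetical substrings that often appear in crawler identification strings.
BOT_TOKENS = ("bot", "crawler", "spider")


def get_user_agent(headers: Mapping[str, str]) -> str:
    """Return the User-Agent header value, or "" if it is absent.

    HTTP header names are case-insensitive, so names are compared in lowercase.
    """
    for name, value in headers.items():
        if name.lower() == "user-agent":
            return value
    return ""


def looks_like_bot(user_agent: str) -> bool:
    """Heuristic: True if the string contains a typical crawler token."""
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)
```

A server could call `looks_like_bot(get_user_agent(request_headers))`, where `request_headers` is whatever mapping its web framework provides, for example to keep crawler traffic out of visitor statistics.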
