|

Cache (computing)

In computing, a cache ( ) is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A ''cache hit'' occurs when the requested data can be found in a cache, while a ''cache miss'' occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective and to enable efficient use of data, caches must be relatively small. Nevertheless, caches have proven themselves in many areas of computing, because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested already, and spatial locality, where ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Solid-state Drive

A solid-state drive (SSD) is a solid-state storage device that uses integrated circuit assemblies to store data persistently, typically using flash memory, and functioning as secondary storage in the hierarchy of computer storage. It is also sometimes called a semiconductor storage device, a solid-state device or a solid-state disk, even though SSDs lack the physical spinning disks and movable read–write heads used in hard disk drives (HDDs) and floppy disks. SSD also has rich internal parallelism for data processing. In comparison to hard disk drives and similar electromechanical media which use moving parts, SSDs are typically more resistant to physical shock, run silently, and have higher input/output rates and lower latency. SSDs store data in semiconductor cells. cells can contain between 1 and 4 bits of data. SSD storage devices vary in their properties according to the number of bits stored in each cell, with single-bit cells ("Single Level Cells" or "SLC") ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Disk Storage

Disk storage (also sometimes called drive storage) is a general category of storage mechanisms where data is recorded by various electronic, magnetic, optical, or mechanical changes to a surface layer of one or more rotating disks. A disk drive is a device implementing such a storage mechanism. Notable types are the hard disk drive (HDD) containing a non-removable disk, the floppy disk drive (FDD) and its removable floppy disk, and various optical disc drives (ODD) and associated optical disc media. (The spelling ''disk'' and ''disc'' are used interchangeably except where trademarks preclude one usage, e.g. the Compact Disc logo. The choice of a particular form is frequently historical, as in IBM's usage of the ''disk'' form beginning in 1956 with the " IBM 350 disk storage unit".) Background Audio information was originally recorded by analog methods (see Sound recording and reproduction). Similarly the first video disc used an analog recording method. In the music ind ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Prefetch Input Queue

Fetching the instruction opcodes from program memory well in advance is known as prefetching and it is served by using prefetch input queue (PIQ).The pre-fetched instructions are stored in data structure - namely a queue. The fetching of opcodes well in advance, prior to their need for execution increases the overall efficiency of the processor boosting its speed. The processor no longer has to wait for the memory access operations for the subsequent instruction opcode to complete. This architecture was prominently used in the Intel 8086 microprocessor. Introduction Pipelining was brought to the forefront of computing architecture design during the 1960s due to the need for faster and more efficient computing. Pipelining is the broader concept and most modern processors load their instructions some clock cycles before they execute them. This is achieved by pre-loading machine code from memory into a ''prefetch input queue''. This behavior only applies to von Neumann compute ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Demand Paging

In computer operating systems, demand paging (as opposed to anticipatory paging) is a method of virtual memory management. In a system that uses demand paging, the operating system copies a disk page into physical memory only if an attempt is made to access it and that page is not already in memory (''i.e.'', if a page fault occurs). It follows that a process begins execution with none of its pages in physical memory, and many page faults will occur until most of a process's working set of pages are located in physical memory. This is an example of a lazy loading technique. Basic concept Demand paging follows that pages should only be brought into memory if the executing process demands them. This is often referred to as lazy evaluation as only those pages demanded by the process are swapped from secondary storage to main memory. Contrast this to pure swapping, where all memory for a process is swapped from secondary storage to main memory during the process startup. Common ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Write-back With Write-allocation

In computing, a cache ( ) is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A ''cache hit'' occurs when the requested data can be found in a cache, while a ''cache miss'' occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective and to enable efficient use of data, caches must be relatively small. Nevertheless, caches have proven themselves in many areas of computing, because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested already, and spatial locality, where ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Write-through With No-write-allocation

In computing, a cache ( ) is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A ''cache hit'' occurs when the requested data can be found in a cache, while a ''cache miss'' occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective and to enable efficient use of data, caches must be relatively small. Nevertheless, caches have proven themselves in many areas of computing, because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested already, and spatial locality, where ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Access Time

Access time is the time delay or latency between a request to an electronic system, and the access being completed or the requested data returned * In a computer, it is the time interval between the instant at which an instruction control unit initiates a call for data or a request to store data, and the instant at which delivery of the data is completed or the storage is started. See also * Memory latency * Mechanical latency * Rotational latency * Seek time Higher performance in hard disk drives comes from devices which have better performance characteristics. These performance characteristics can be grouped into two categories: access time and data transfer time (or rate). Access time The ''acces ... References Network access {{compu-stub ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache Algorithm

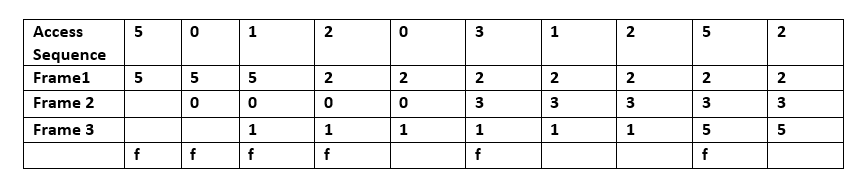

In computing, cache algorithms (also frequently called cache replacement algorithms or cache replacement policies) are optimizing instructions, or algorithms, that a computer program or a hardware-maintained structure can utilize in order to manage a cache of information stored on the computer. Caching improves performance by keeping recent or often-used data items in memory locations that are faster or computationally cheaper to access than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for the new ones. Overview The average memory reference time is : T = m \times T_m + T_h + E where : m = miss ratio = 1 - (hit ratio) : T_m = time to make a main memory access when there is a miss (or, with multi-level cache, average memory reference time for the next-lower cache) : T_h= the latency: the time to reference the cache (should be the same for hits and misses) : E = various secondary effects, such as queuing effects in mu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache Algorithm

In computing, cache algorithms (also frequently called cache replacement algorithms or cache replacement policies) are optimizing instructions, or algorithms, that a computer program or a hardware-maintained structure can utilize in order to manage a cache of information stored on the computer. Caching improves performance by keeping recent or often-used data items in memory locations that are faster or computationally cheaper to access than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for the new ones. Overview The average memory reference time is : T = m \times T_m + T_h + E where : m = miss ratio = 1 - (hit ratio) : T_m = time to make a main memory access when there is a miss (or, with multi-level cache, average memory reference time for the next-lower cache) : T_h= the latency: the time to reference the cache (should be the same for hits and misses) : E = various secondary effects, such as queuing effects in mu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Heuristic (computer Science)

In mathematical optimization and computer science, heuristic (from Greek εὑρίσκω "I find, discover") is a technique designed for solving a problem more quickly when classic methods are too slow for finding an approximate solution, or when classic methods fail to find any exact solution. This is achieved by trading optimality, completeness, accuracy, or precision for speed. In a way, it can be considered a shortcut. A heuristic function, also simply called a heuristic, is a function that ranks alternatives in search algorithms at each branching step based on available information to decide which branch to follow. For example, it may approximate the exact solution. Definition and motivation The objective of a heuristic is to produce a solution in a reasonable time frame that is good enough for solving the problem at hand. This solution may not be the best of all the solutions to this problem, or it may simply approximate the exact solution. But it is still valuabl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Operating System

An operating system (OS) is system software that manages computer hardware, software resources, and provides common daemon (computing), services for computer programs. Time-sharing operating systems scheduler (computing), schedule tasks for efficient use of the system and may also include accounting software for cost allocation of Scheduling (computing), processor time, mass storage, printing, and other resources. For hardware functions such as input and output and memory allocation, the operating system acts as an intermediary between programs and the computer hardware, although the application code is usually executed directly by the hardware and frequently makes system calls to an OS function or is interrupted by it. Operating systems are found on many devices that contain a computer from cellular phones and video game consoles to web servers and supercomputers. The dominant general-purpose personal computer operating system is Microsoft Windows with a market share of aroun ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |