The derivatives of scalars, vectors, and second-order tensors with respect to second-order tensors are of considerable use in continuum mechanics. These derivatives are used in the theories of nonlinear elasticity and plasticity, particularly in the design of algorithms for numerical simulations.

[J. C. Simo and T. J. R. Hughes, 1998, ''Computational Inelasticity'', Springer]

The directional derivative provides a systematic way of finding these derivatives.

[J. E. Marsden and T. J. R. Hughes, 2000, ''Mathematical Foundations of Elasticity'', Dover.]

Derivatives with respect to vectors and second-order tensors

The definitions of directional derivatives for various situations are given below. It is assumed that the functions are sufficiently smooth that derivatives can be taken.

Derivatives of scalar valued functions of vectors

Let ''f''(v) be a real valued function of the vector v. Then the derivative of ''f''(v) with respect to v (or at v) is the vector defined through its dot product with any vector u being

$$\frac{\partial f}{\partial \mathbf{v}}\cdot\mathbf{u} = Df(\mathbf{v})[\mathbf{u}] = \left[\frac{d}{d\alpha}~f(\mathbf{v} + \alpha~\mathbf{u})\right]_{\alpha=0}$$

for all vectors u. The above dot product yields a scalar, and if u is a unit vector it gives the directional derivative of ''f'' at v, in the direction u.

Properties:

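# If $f(\mathbf{v}) = f_1(\mathbf{v}) + f_2(\mathbf{v})$ then $\frac{\partial f}{\partial \mathbf{v}}\cdot\mathbf{u} = \left(\frac{\partial f_1}{\partial \mathbf{v}} + \frac{\partial f_2}{\partial \mathbf{v}}\right)\cdot\mathbf{u}$

# If $f(\mathbf{v}) = f_1(\mathbf{v})~f_2(\mathbf{v})$ then $\frac{\partial f}{\partial \mathbf{v}}\cdot\mathbf{u} = \left(\frac{\partial f_1}{\partial \mathbf{v}}\cdot\mathbf{u}\right)f_2(\mathbf{v}) + f_1(\mathbf{v})\left(\frac{\partial f_2}{\partial \mathbf{v}}\cdot\mathbf{u}\right)$

# If $f(\mathbf{v}) = f_1(f_2(\mathbf{v}))$ then $\frac{\partial f}{\partial \mathbf{v}}\cdot\mathbf{u} = \frac{\partial f_1}{\partial f_2}\left(\frac{\partial f_2}{\partial \mathbf{v}}\cdot\mathbf{u}\right)$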
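The definition of the derivative as a rate of change along the line v + αu can be checked numerically. The following is a minimal sketch in plain Python, assuming the illustrative function ''f''(v) = v · v (whose derivative is 2v); the helper names `directional_derivative`, `f`, and `grad_f` are hypothetical and chosen only for this example.

```python
def directional_derivative(f, v, u, h=1e-6):
    """Central-difference estimate of d/d(alpha) f(v + alpha*u) at alpha = 0."""
    plus = [vi + h * ui for vi, ui in zip(v, u)]
    minus = [vi - h * ui for vi, ui in zip(v, u)]
    return (f(plus) - f(minus)) / (2.0 * h)


def f(v):
    # Illustrative scalar function (an assumption): f(v) = v . v
    return sum(vi * vi for vi in v)


def grad_f(v):
    # Analytical derivative of f(v) = v . v, which is the vector 2 v
    return [2.0 * vi for vi in v]


v = [1.0, -2.0, 0.5]
u = [0.0, 0.6, 0.8]  # a unit vector, so the result is a directional derivative

numeric = directional_derivative(f, v, u)
analytic = sum(g * ui for g, ui in zip(grad_f(v), u))
print(numeric, analytic)
```

Both values should agree to within rounding error, because the dot product of the derivative with u and the derivative along the line are the same quantity by definition.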

Derivatives of vector valued functions of vectors

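Let f(v) be a vector valued function of the vector v. Then the derivative of f(v) with respect to v (or at v) is the second order tensor defined through its dot product with any vector u being

$$\frac{\partial \mathbf{f}}{\partial \mathbf{v}}\cdot\mathbf{u} = D\mathbf{f}(\mathbf{v})[\mathbf{u}] = \left[\frac{d}{d\alpha}~\mathbf{f}(\mathbf{v} + \alpha~\mathbf{u})\right]_{\alpha=0}$$

for all vectors u. The above dot product yields a vector, and if u is a unit vector it gives the directional derivative of f at v, in the direction u.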

Properties:

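# If $\mathbf{f}(\mathbf{v}) = \mathbf{f}_1(\mathbf{v}) + \mathbf{f}_2(\mathbf{v})$ then $\frac{\partial \mathbf{f}}{\partial \mathbf{v}}\cdot\mathbf{u} = \left(\frac{\partial \mathbf{f}_1}{\partial \mathbf{v}} + \frac{\partial \mathbf{f}_2}{\partial \mathbf{v}}\right)\cdot\mathbf{u}$

# If $\mathbf{f}(\mathbf{v}) = \mathbf{f}_1(\mathbf{v})\times\mathbf{f}_2(\mathbf{v})$ then $\frac{\partial \mathbf{f}}{\partial \mathbf{v}}\cdot\mathbf{u} = \left(\frac{\partial \mathbf{f}_1}{\partial \mathbf{v}}\cdot\mathbf{u}\right)\times\mathbf{f}_2(\mathbf{v}) + \mathbf{f}_1(\mathbf{v})\times\left(\frac{\partial \mathbf{f}_2}{\partial \mathbf{v}}\cdot\mathbf{u}\right)$

# If $\mathbf{f}(\mathbf{v}) = \mathbf{f}_1(\mathbf{f}_2(\mathbf{v}))$ then $\frac{\partial \mathbf{f}}{\partial \mathbf{v}}\cdot\mathbf{u} = \frac{\partial \mathbf{f}_1}{\partial \mathbf{f}_2}\cdot\left(\frac{\partial \mathbf{f}_2}{\partial \mathbf{v}}\cdot\mathbf{u}\right)$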

Derivatives of scalar valued functions of second-order tensors

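Let $f(\boldsymbol{S})$ be a real valued function of the second order tensor $\boldsymbol{S}$. Then the derivative of $f(\boldsymbol{S})$ with respect to $\boldsymbol{S}$ (or at $\boldsymbol{S}$) in the direction $\boldsymbol{T}$ is the second order tensor defined as

$$\frac{\partial f}{\partial \boldsymbol{S}}:\boldsymbol{T} = Df(\boldsymbol{S})[\boldsymbol{T}] = \left[\frac{d}{d\alpha}~f(\boldsymbol{S} + \alpha~\boldsymbol{T})\right]_{\alpha=0}$$

for all second order tensors $\boldsymbol{T}$.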

Properties:

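# If $f(\boldsymbol{S}) = f_1(\boldsymbol{S}) + f_2(\boldsymbol{S})$ then $\frac{\partial f}{\partial \boldsymbol{S}}:\boldsymbol{T} = \left(\frac{\partial f_1}{\partial \boldsymbol{S}} + \frac{\partial f_2}{\partial \boldsymbol{S}}\right):\boldsymbol{T}$

# If $f(\boldsymbol{S}) = f_1(\boldsymbol{S})~f_2(\boldsymbol{S})$ then $\frac{\partial f}{\partial \boldsymbol{S}}:\boldsymbol{T} = \left(\frac{\partial f_1}{\partial \boldsymbol{S}}:\boldsymbol{T}\right)f_2(\boldsymbol{S}) + f_1(\boldsymbol{S})\left(\frac{\partial f_2}{\partial \boldsymbol{S}}:\boldsymbol{T}\right)$

# If $f(\boldsymbol{S}) = f_1(f_2(\boldsymbol{S}))$ then $\frac{\partial f}{\partial \boldsymbol{S}}:\boldsymbol{T} = \frac{\partial f_1}{\partial f_2}\left(\frac{\partial f_2}{\partial \boldsymbol{S}}:\boldsymbol{T}\right)$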

Derivatives of tensor valued functions of second-order tensors

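Let $\boldsymbol{F}(\boldsymbol{S})$ be a second order tensor valued function of the second order tensor $\boldsymbol{S}$. Then the derivative of $\boldsymbol{F}(\boldsymbol{S})$ with respect to $\boldsymbol{S}$ (or at $\boldsymbol{S}$) in the direction $\boldsymbol{T}$ is the fourth order tensor defined as

$$\frac{\partial \boldsymbol{F}}{\partial \boldsymbol{S}}:\boldsymbol{T} = D\boldsymbol{F}(\boldsymbol{S})[\boldsymbol{T}] = \left[\frac{d}{d\alpha}~\boldsymbol{F}(\boldsymbol{S} + \alpha~\boldsymbol{T})\right]_{\alpha=0}$$

for all second order tensors $\boldsymbol{T}$.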

Properties:

# If

then

# If

then

# If

then

# If

then

Gradient of a tensor field

The gradient, $\boldsymbol{\nabla}\boldsymbol{T}$, of a tensor field $\boldsymbol{T}(\mathbf{x})$ in the direction of an arbitrary constant vector c is defined as:

$$\boldsymbol{\nabla}\boldsymbol{T}\cdot\mathbf{c} = \left[\frac{d}{d\alpha}~\boldsymbol{T}(\mathbf{x} + \alpha~\mathbf{c})\right]_{\alpha=0}$$

The gradient of a tensor field of order ''n'' is a tensor field of order ''n''+1.

Cartesian coordinates

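If $\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3$ are the basis vectors in a Cartesian coordinate system, with coordinates of points denoted by ($x_1, x_2, x_3$), then the gradient of the tensor field $\boldsymbol{T}$ is given by

$$\boldsymbol{\nabla}\boldsymbol{T} = \frac{\partial \boldsymbol{T}}{\partial x_i}\otimes\mathbf{e}_i$$

Since the basis vectors do not vary in a Cartesian coordinate system we have the following relations for the gradients of a scalar field $\phi$, a vector field v, and a second-order tensor field $\boldsymbol{S}$.

$$\begin{aligned}
\boldsymbol{\nabla}\phi &= \frac{\partial \phi}{\partial x_i}~\mathbf{e}_i = \phi_{,i}~\mathbf{e}_i \\
\boldsymbol{\nabla}\mathbf{v} &= \frac{\partial (v_j\mathbf{e}_j)}{\partial x_i}\otimes\mathbf{e}_i = \frac{\partial v_j}{\partial x_i}~\mathbf{e}_j\otimes\mathbf{e}_i = v_{j,i}~\mathbf{e}_j\otimes\mathbf{e}_i \\
\boldsymbol{\nabla}\boldsymbol{S} &= \frac{\partial (S_{jk}\,\mathbf{e}_j\otimes\mathbf{e}_k)}{\partial x_i}\otimes\mathbf{e}_i = \frac{\partial S_{jk}}{\partial x_i}~\mathbf{e}_j\otimes\mathbf{e}_k\otimes\mathbf{e}_i = S_{jk,i}~\mathbf{e}_j\otimes\mathbf{e}_k\otimes\mathbf{e}_i
\end{aligned}$$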
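As an illustration of the Cartesian component form of the gradient of a vector field, the sketch below approximates the matrix of components $v_{j,i}$ by central differences. It is a minimal example in plain Python; the field `v` and the helper `grad_vector` are hypothetical and are not taken from the cited references.

```python
def grad_vector(v, x, h=1e-6):
    """Return G with G[j][i] approximating dv_j/dx_i at the point x."""
    n = len(x)
    G = [[0.0] * n for _ in range(n)]
    for i in range(n):
        xp = list(x)
        xm = list(x)
        xp[i] += h
        xm[i] -= h
        vp, vm = v(xp), v(xm)
        for j in range(n):
            G[j][i] = (vp[j] - vm[j]) / (2.0 * h)
    return G


def v(x):
    # Illustrative field (an assumption): v = (x1*x2, x2**2, x3)
    return [x[0] * x[1], x[1] ** 2, x[2]]


G = grad_vector(v, [1.0, 2.0, 3.0])
for row in G:
    print(row)
```

Row ''j'' and column ''i'' of `G` hold the coefficient of the dyad formed from the ''j''-th and ''i''-th basis vectors, so the derivative index is the second (column) index in this convention.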

Curvilinear coordinates

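If $\mathbf{g}^1, \mathbf{g}^2, \mathbf{g}^3$ are the contravariant basis vectors in a curvilinear coordinate system, with coordinates of points denoted by ($\xi^1, \xi^2, \xi^3$), then the gradient of the tensor field $\boldsymbol{T}$ is given by (see [Ogden, R. W., 2000, Nonlinear Elastic Deformations, Dover.] for a proof.)

$$\boldsymbol{\nabla}\boldsymbol{T} = \frac{\partial \boldsymbol{T}}{\partial \xi^i}\otimes\mathbf{g}^i$$

From this definition we have the following relations for the gradients of a scalar field $\phi$, a vector field v, and a second-order tensor field $\boldsymbol{S}$.

$$\begin{aligned}
\boldsymbol{\nabla}\phi &= \frac{\partial \phi}{\partial \xi^i}~\mathbf{g}^i \\
\boldsymbol{\nabla}\mathbf{v} &= \frac{\partial (v^j\mathbf{g}_j)}{\partial \xi^i}\otimes\mathbf{g}^i = \left(\frac{\partial v^j}{\partial \xi^i} + v^k~\Gamma^j_{ik}\right)\mathbf{g}_j\otimes\mathbf{g}^i = \left(\frac{\partial v_j}{\partial \xi^i} - v_k~\Gamma^k_{ij}\right)\mathbf{g}^j\otimes\mathbf{g}^i \\
\boldsymbol{\nabla}\boldsymbol{S} &= \frac{\partial (S_{jk}\,\mathbf{g}^j\otimes\mathbf{g}^k)}{\partial \xi^i}\otimes\mathbf{g}^i = \left(\frac{\partial S_{jk}}{\partial \xi^i} - S_{lk}~\Gamma^l_{ij} - S_{jl}~\Gamma^l_{ik}\right)\mathbf{g}^j\otimes\mathbf{g}^k\otimes\mathbf{g}^i
\end{aligned}$$

where the Christoffel symbol $\Gamma^k_{ij}$ is defined using

$$\frac{\partial \mathbf{g}_i}{\partial \xi^j} = \Gamma^k_{ij}~\mathbf{g}_k \quad\implies\quad \frac{\partial \mathbf{g}_i}{\partial \xi^j}\cdot\mathbf{g}^k = \Gamma^k_{ij}~;\qquad \frac{\partial \mathbf{g}^i}{\partial \xi^j} = -\Gamma^i_{jk}~\mathbf{g}^k$$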

Cylindrical polar coordinates

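In cylindrical coordinates ($r, \theta, z$), the gradients of a scalar field $\phi$ and a vector field v are given by

$$\begin{aligned}
\boldsymbol{\nabla}\phi ={}& \frac{\partial \phi}{\partial r}~\mathbf{e}_r + \frac{1}{r}\frac{\partial \phi}{\partial \theta}~\mathbf{e}_\theta + \frac{\partial \phi}{\partial z}~\mathbf{e}_z \\
\boldsymbol{\nabla}\mathbf{v} ={}& \frac{\partial v_r}{\partial r}~\mathbf{e}_r\otimes\mathbf{e}_r + \frac{1}{r}\left(\frac{\partial v_r}{\partial \theta} - v_\theta\right)\mathbf{e}_r\otimes\mathbf{e}_\theta + \frac{\partial v_r}{\partial z}~\mathbf{e}_r\otimes\mathbf{e}_z \\
&+ \frac{\partial v_\theta}{\partial r}~\mathbf{e}_\theta\otimes\mathbf{e}_r + \frac{1}{r}\left(\frac{\partial v_\theta}{\partial \theta} + v_r\right)\mathbf{e}_\theta\otimes\mathbf{e}_\theta + \frac{\partial v_\theta}{\partial z}~\mathbf{e}_\theta\otimes\mathbf{e}_z \\
&+ \frac{\partial v_z}{\partial r}~\mathbf{e}_z\otimes\mathbf{e}_r + \frac{1}{r}\frac{\partial v_z}{\partial \theta}~\mathbf{e}_z\otimes\mathbf{e}_\theta + \frac{\partial v_z}{\partial z}~\mathbf{e}_z\otimes\mathbf{e}_z
\end{aligned}$$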

Divergence of a tensor field

The divergence of a tensor field $\boldsymbol{T}(\mathbf{x})$ is defined using the recursive relation

$$(\boldsymbol{\nabla}\cdot\boldsymbol{T})\cdot\mathbf{c} = \boldsymbol{\nabla}\cdot\left(\mathbf{c}\cdot\boldsymbol{T}^\textsf{T}\right)~;\qquad \boldsymbol{\nabla}\cdot\mathbf{v} = \text{tr}(\boldsymbol{\nabla}\mathbf{v})$$

where c is an arbitrary constant vector and v is a vector field. If $\boldsymbol{T}$ is a tensor field of order ''n'' > 1 then the divergence of the field is a tensor of order ''n'' − 1.

Cartesian coordinates

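In a Cartesian coordinate system we have the following relations for a vector field v and a second-order tensor field $\boldsymbol{S}$.

$$\begin{aligned}
\boldsymbol{\nabla}\cdot\mathbf{v} &= \frac{\partial v_i}{\partial x_i} = v_{i,i} \\
\boldsymbol{\nabla}\cdot\boldsymbol{S} &= \frac{\partial S_{ik}}{\partial x_i}~\mathbf{e}_k = S_{ik,i}~\mathbf{e}_k
\end{aligned}$$

where tensor index notation for partial derivatives is used in the rightmost expressions. Note that, in general,

$$\boldsymbol{\nabla}\cdot\boldsymbol{S} \neq \boldsymbol{\nabla}\cdot\boldsymbol{S}^\textsf{T}$$

For a symmetric second-order tensor, the divergence is also often written as

$$\boldsymbol{\nabla}\cdot\boldsymbol{S} = \frac{\partial S_{ki}}{\partial x_i}~\mathbf{e}_k = S_{ki,i}~\mathbf{e}_k$$

The above expression is sometimes used as the definition of $\boldsymbol{\nabla}\cdot\boldsymbol{S}$ in Cartesian component form (often also written as $\operatorname{div}\boldsymbol{S}$). Note that such a definition is not consistent with the rest of this article (see the section on curvilinear coordinates).

The difference stems from whether the differentiation is performed with respect to the rows or the columns of $\boldsymbol{S}$, and is a matter of convention. This is demonstrated by an example. In a Cartesian coordinate system the second order tensor (matrix) $\boldsymbol{S}$ is the gradient of a vector function v.

$$\begin{aligned}
\boldsymbol{\nabla}\cdot\left(\boldsymbol{\nabla}\mathbf{v}\right) &= \boldsymbol{\nabla}\cdot\left(v_{i,j}~\mathbf{e}_i\otimes\mathbf{e}_j\right) = v_{i,ji}~\mathbf{e}_j = \left(\boldsymbol{\nabla}\cdot\mathbf{v}\right)_{,j}~\mathbf{e}_j = \boldsymbol{\nabla}\left(\boldsymbol{\nabla}\cdot\mathbf{v}\right) \\
\boldsymbol{\nabla}\cdot\left[\left(\boldsymbol{\nabla}\mathbf{v}\right)^\textsf{T}\right] &= \boldsymbol{\nabla}\cdot\left(v_{j,i}~\mathbf{e}_i\otimes\mathbf{e}_j\right) = v_{j,ii}~\mathbf{e}_j = \boldsymbol{\nabla}^2 v_j~\mathbf{e}_j = \boldsymbol{\nabla}^2\mathbf{v}
\end{aligned}$$

The last equation is equivalent to the alternative definition / interpretation

$$\left(\boldsymbol{\nabla}\cdot\right)_\text{alt}\left(\boldsymbol{\nabla}\mathbf{v}\right) = \left(\boldsymbol{\nabla}\cdot\right)_\text{alt}\left(v_{i,j}~\mathbf{e}_i\otimes\mathbf{e}_j\right) = v_{i,jj}~\mathbf{e}_i = \boldsymbol{\nabla}^2 v_i~\mathbf{e}_i = \boldsymbol{\nabla}^2\mathbf{v}$$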
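A short worked example may help show that the two conventions really give different vectors. The field below is an arbitrary choice made for illustration, and the computation assumes the convention $(\boldsymbol{\nabla}\cdot\boldsymbol{S})_k = S_{ik,i}$ with $(\boldsymbol{\nabla}\mathbf{v})_{ik} = v_{i,k}$.

```latex
% Illustrative field (an assumption): only one nonzero component
\mathbf{v} = x_1 x_2^2~\mathbf{e}_1
\quad\implies\quad
v_{1,1} = x_2^2~,\qquad v_{1,2} = 2 x_1 x_2

% Divergence taken over the first index of the gradient
\boldsymbol{\nabla}\cdot(\boldsymbol{\nabla}\mathbf{v})
  = v_{i,ki}~\mathbf{e}_k
  = \boldsymbol{\nabla}(\boldsymbol{\nabla}\cdot\mathbf{v})
  = \boldsymbol{\nabla}(x_2^2)
  = 2 x_2~\mathbf{e}_2

% Divergence taken over the first index of the transposed gradient
\boldsymbol{\nabla}\cdot\left[(\boldsymbol{\nabla}\mathbf{v})^\textsf{T}\right]
  = v_{k,ii}~\mathbf{e}_k
  = \boldsymbol{\nabla}^2\mathbf{v}
  = (v_{1,11} + v_{1,22} + v_{1,33})~\mathbf{e}_1
  = 2 x_1~\mathbf{e}_1
```

The two results point along different basis vectors, which is why the row or column convention has to be fixed before component formulas are compared between texts.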

Curvilinear coordinates

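In curvilinear coordinates, the divergences of a vector field v and a second-order tensor field $\boldsymbol{S}$ are

$$\begin{aligned}
\boldsymbol{\nabla}\cdot\mathbf{v} &= \frac{\partial v^i}{\partial \xi^i} + v^k~\Gamma^i_{ik} \\
\boldsymbol{\nabla}\cdot\boldsymbol{S} &= \left(\frac{\partial S_{ij}}{\partial \xi^k} - \Gamma^l_{ki}~S_{lj} - \Gamma^l_{kj}~S_{il}\right)g^{ik}~\mathbf{g}^j
\end{aligned}$$

More generally, in terms of the contravariant and mixed components,

$$\begin{aligned}
\boldsymbol{\nabla}\cdot\boldsymbol{S} &= \left(\frac{\partial S^{ij}}{\partial \xi^i} + \Gamma^i_{il}~S^{lj} + \Gamma^j_{il}~S^{il}\right)\mathbf{g}_j \\
&= \left(\frac{\partial S^i_{~j}}{\partial \xi^i} + \Gamma^i_{il}~S^l_{~j} - \Gamma^l_{ij}~S^i_{~l}\right)\mathbf{g}^j \\
&= \left(\frac{\partial S_i^{~j}}{\partial \xi^k} - \Gamma^l_{ik}~S_l^{~j} + \Gamma^j_{kl}~S_i^{~l}\right)g^{ik}~\mathbf{g}_j
\end{aligned}$$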

Cylindrical polar coordinates

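In cylindrical polar coordinates ($r, \theta, z$), the divergences of a vector field v and a second-order tensor field $\boldsymbol{S}$ are

$$\begin{aligned}
\boldsymbol{\nabla}\cdot\mathbf{v} ={}& \frac{\partial v_r}{\partial r} + \frac{1}{r}\left(\frac{\partial v_\theta}{\partial \theta} + v_r\right) + \frac{\partial v_z}{\partial z} \\
\boldsymbol{\nabla}\cdot\boldsymbol{S} ={}& \left[\frac{\partial S_{rr}}{\partial r} + \frac{1}{r}\left(\frac{\partial S_{\theta r}}{\partial \theta} + S_{rr} - S_{\theta\theta}\right) + \frac{\partial S_{zr}}{\partial z}\right]\mathbf{e}_r \\
&+ \left[\frac{\partial S_{r\theta}}{\partial r} + \frac{1}{r}\left(\frac{\partial S_{\theta\theta}}{\partial \theta} + S_{r\theta} + S_{\theta r}\right) + \frac{\partial S_{z\theta}}{\partial z}\right]\mathbf{e}_\theta \\
&+ \left[\frac{\partial S_{rz}}{\partial r} + \frac{1}{r}\left(\frac{\partial S_{\theta z}}{\partial \theta} + S_{rz}\right) + \frac{\partial S_{zz}}{\partial z}\right]\mathbf{e}_z
\end{aligned}$$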

Curl of a tensor field

The curl of an order-''n'' > 1 tensor field $\boldsymbol{T}(\mathbf{x})$ is also defined using the recursive relation

$$(\boldsymbol{\nabla}\times\boldsymbol{T})\cdot\mathbf{c} = \boldsymbol{\nabla}\times(\mathbf{c}\cdot\boldsymbol{T})~;\qquad (\boldsymbol{\nabla}\times\mathbf{v})\cdot\mathbf{c} = \boldsymbol{\nabla}\cdot(\mathbf{v}\times\mathbf{c})$$

where c is an arbitrary constant vector and v is a vector field.

Curl of a first-order tensor (vector) field

Consider a vector field v and an arbitrary constant vector c. In index notation, the cross product is given by

$$\mathbf{v}\times\mathbf{c} = \varepsilon_{ijk}~v_j~c_k~\mathbf{e}_i$$

where $\varepsilon_{ijk}$ is the permutation symbol, otherwise known as the Levi-Civita symbol. Then,

$$\boldsymbol{\nabla}\cdot(\mathbf{v}\times\mathbf{c}) = \varepsilon_{ijk}~v_{j,i}~c_k = \left(\varepsilon_{ijk}~v_{j,i}~\mathbf{e}_k\right)\cdot\mathbf{c} = (\boldsymbol{\nabla}\times\mathbf{v})\cdot\mathbf{c}$$

Therefore,

$$\boldsymbol{\nabla}\times\mathbf{v} = \varepsilon_{ijk}~v_{j,i}~\mathbf{e}_k$$
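The component formula for the curl, in which the permutation symbol is contracted with the gradient components $v_{j,i}$, can be evaluated directly. The following is a minimal sketch in plain Python with zero-based indices; the helper names and the example field (a rigid rotation about the third axis) are assumptions made for illustration.

```python
def levi_civita(i, j, k):
    """Permutation symbol for indices taken from {0, 1, 2}."""
    return (i - j) * (j - k) * (k - i) // 2


def curl_from_gradient(G):
    """Given G[j][i] = dv_j/dx_i, return the components eps_ijk * v_{j,i}."""
    return [
        sum(levi_civita(i, j, k) * G[j][i] for i in range(3) for j in range(3))
        for k in range(3)
    ]


# Gradient of the illustrative field v = (-x2, x1, 0):
# dv_1/dx_2 = -1 and dv_2/dx_1 = +1, all other components vanish.
G = [
    [0.0, -1.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0],
]

curl = curl_from_gradient(G)
print(curl)
```

For this field the only nonzero component is along the third axis and equals 2, twice the angular velocity of the rotation.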

Curl of a second-order tensor field

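For a second-order tensor $\boldsymbol{S}$

$$\mathbf{c}\cdot\boldsymbol{S} = c_m~S_{mj}~\mathbf{e}_j$$

Hence, using the definition of the curl of a first-order tensor field,

$$\boldsymbol{\nabla}\times(\mathbf{c}\cdot\boldsymbol{S}) = \varepsilon_{ijk}~c_m~S_{mj,i}~\mathbf{e}_k = \left(\varepsilon_{ijk}~S_{mj,i}~\mathbf{e}_k\otimes\mathbf{e}_m\right)\cdot\mathbf{c} = (\boldsymbol{\nabla}\times\boldsymbol{S})\cdot\mathbf{c}$$

Therefore, we have

$$\boldsymbol{\nabla}\times\boldsymbol{S} = \varepsilon_{ijk}~S_{mj,i}~\mathbf{e}_k\otimes\mathbf{e}_m$$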

Identities involving the curl of a tensor field

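The most commonly used identity involving the curl of a tensor field, $\boldsymbol{T}$, is

$$\boldsymbol{\nabla}\times(\boldsymbol{\nabla}\boldsymbol{T}) = \boldsymbol{0}$$

This identity holds for tensor fields of all orders. For the important case of a second-order tensor, $\boldsymbol{S}$, this identity implies that

$$\boldsymbol{\nabla}\times\boldsymbol{S} = \boldsymbol{0} \quad\implies\quad S_{mi,j} - S_{mj,i} = 0$$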

Derivative of the determinant of a second-order tensor

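The derivative of the determinant of a second order tensor $\boldsymbol{A}$ is given by

$$\frac{\partial}{\partial \boldsymbol{A}}\det(\boldsymbol{A}) = \det(\boldsymbol{A})~\left[\boldsymbol{A}^{-1}\right]^\textsf{T}$$

In an orthonormal basis, the components of $\boldsymbol{A}$ can be written as a matrix A. In that case, the right hand side corresponds to the cofactors of the matrix.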
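The statement that the derivative of the determinant is the matrix of cofactors can be checked numerically. The sketch below is a minimal plain-Python example for 3 × 3 matrices; the helper names and the test matrix are assumptions chosen for illustration.

```python
def det3(A):
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


def cofactor3(A):
    """Cofactor matrix C with C[i][j] = (-1)**(i+j) * minor(i, j)."""
    C = [[0.0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            r = [a for a in range(3) if a != i]
            c = [b for b in range(3) if b != j]
            minor = A[r[0]][c[0]] * A[r[1]][c[1]] - A[r[0]][c[1]] * A[r[1]][c[0]]
            C[i][j] = (-1) ** (i + j) * minor
    return C


def ddet_numeric(A, h=1e-6):
    """Central difference of det(A) with respect to each component A[i][j]."""
    D = [[0.0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            Ap = [row[:] for row in A]
            Am = [row[:] for row in A]
            Ap[i][j] += h
            Am[i][j] -= h
            D[i][j] = (det3(Ap) - det3(Am)) / (2.0 * h)
    return D


A = [[2.0, 1.0, 0.0], [0.0, 3.0, 1.0], [1.0, 0.0, 4.0]]  # arbitrary example
numeric = ddet_numeric(A)
analytic = cofactor3(A)  # equals det(A) times the inverse transpose of A
max_error = max(abs(numeric[i][j] - analytic[i][j])
                for i in range(3) for j in range(3))
print(max_error)
```

Because the determinant is linear in each individual component, the central difference is exact up to rounding, so the reported error should be very small.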

Derivatives of the invariants of a second-order tensor

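The principal invariants of a second order tensor are

$$\begin{aligned}
I_1(\boldsymbol{A}) &= \text{tr}\,\boldsymbol{A} \\
I_2(\boldsymbol{A}) &= \tfrac{1}{2}\left[(\text{tr}\,\boldsymbol{A})^2 - \text{tr}\,\boldsymbol{A}^2\right] \\
I_3(\boldsymbol{A}) &= \det(\boldsymbol{A})
\end{aligned}$$

The derivatives of these three invariants with respect to $\boldsymbol{A}$ are

$$\begin{aligned}
\frac{\partial I_1}{\partial \boldsymbol{A}} &= \boldsymbol{1} \\
\frac{\partial I_2}{\partial \boldsymbol{A}} &= I_1~\boldsymbol{1} - \boldsymbol{A}^\textsf{T} \\
\frac{\partial I_3}{\partial \boldsymbol{A}} &= \det(\boldsymbol{A})~\left[\boldsymbol{A}^{-1}\right]^\textsf{T} = I_2~\boldsymbol{1} - \boldsymbol{A}^\textsf{T}\cdot\left(I_1~\boldsymbol{1} - \boldsymbol{A}^\textsf{T}\right) = \left(\boldsymbol{A}^2 - I_1~\boldsymbol{A} + I_2~\boldsymbol{1}\right)^\textsf{T}
\end{aligned}$$

From the derivative of the determinant we know that

$$\frac{\partial I_3}{\partial \boldsymbol{A}} = \det(\boldsymbol{A})~\left[\boldsymbol{A}^{-1}\right]^\textsf{T}~.$$

For the derivatives of the other two invariants, let us go back to the characteristic equation

$$\det(\lambda~\boldsymbol{1} + \boldsymbol{A}) = \lambda^3 + I_1~\lambda^2 + I_2~\lambda + I_3$$

Using the same approach as for the determinant of a tensor, we can show that

$$\frac{\partial}{\partial \boldsymbol{A}}\det(\lambda~\boldsymbol{1} + \boldsymbol{A}) = \det(\lambda~\boldsymbol{1} + \boldsymbol{A})~\left[(\lambda~\boldsymbol{1} + \boldsymbol{A})^{-1}\right]^\textsf{T}$$

Now the left hand side can be expanded as

$$\frac{\partial}{\partial \boldsymbol{A}}\det(\lambda~\boldsymbol{1} + \boldsymbol{A}) = \frac{\partial}{\partial \boldsymbol{A}}\left[\lambda^3 + I_1~\lambda^2 + I_2~\lambda + I_3\right] = \frac{\partial I_1}{\partial \boldsymbol{A}}~\lambda^2 + \frac{\partial I_2}{\partial \boldsymbol{A}}~\lambda + \frac{\partial I_3}{\partial \boldsymbol{A}}$$

Hence

$$\frac{\partial I_1}{\partial \boldsymbol{A}}~\lambda^2 + \frac{\partial I_2}{\partial \boldsymbol{A}}~\lambda + \frac{\partial I_3}{\partial \boldsymbol{A}} = \det(\lambda~\boldsymbol{1} + \boldsymbol{A})~\left[(\lambda~\boldsymbol{1} + \boldsymbol{A})^{-1}\right]^\textsf{T}$$

or,

$$(\lambda~\boldsymbol{1} + \boldsymbol{A})^\textsf{T}\cdot\left[\frac{\partial I_1}{\partial \boldsymbol{A}}~\lambda^2 + \frac{\partial I_2}{\partial \boldsymbol{A}}~\lambda + \frac{\partial I_3}{\partial \boldsymbol{A}}\right] = \det(\lambda~\boldsymbol{1} + \boldsymbol{A})~\boldsymbol{1}$$

Expanding the right hand side and separating terms on the left hand side gives

$$(\lambda~\boldsymbol{1} + \boldsymbol{A}^\textsf{T})\cdot\left[\frac{\partial I_1}{\partial \boldsymbol{A}}~\lambda^2 + \frac{\partial I_2}{\partial \boldsymbol{A}}~\lambda + \frac{\partial I_3}{\partial \boldsymbol{A}}\right] = \left[\lambda^3 + I_1~\lambda^2 + I_2~\lambda + I_3\right]\boldsymbol{1}$$

or,

$$\left[\frac{\partial I_1}{\partial \boldsymbol{A}}~\lambda^3 + \frac{\partial I_2}{\partial \boldsymbol{A}}~\lambda^2 + \frac{\partial I_3}{\partial \boldsymbol{A}}~\lambda\right] + \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_1}{\partial \boldsymbol{A}}~\lambda^2 + \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_2}{\partial \boldsymbol{A}}~\lambda + \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_3}{\partial \boldsymbol{A}} = \left[\lambda^3 + I_1~\lambda^2 + I_2~\lambda + I_3\right]\boldsymbol{1}$$

If we define $I_0 := 1$ and $I_4 := 0$, we can write the above as

$$\left[\frac{\partial I_1}{\partial \boldsymbol{A}}~\lambda^3 + \frac{\partial I_2}{\partial \boldsymbol{A}}~\lambda^2 + \frac{\partial I_3}{\partial \boldsymbol{A}}~\lambda + \frac{\partial I_4}{\partial \boldsymbol{A}}\right] + \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_0}{\partial \boldsymbol{A}}~\lambda^3 + \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_1}{\partial \boldsymbol{A}}~\lambda^2 + \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_2}{\partial \boldsymbol{A}}~\lambda + \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_3}{\partial \boldsymbol{A}} = \left[I_0~\lambda^3 + I_1~\lambda^2 + I_2~\lambda + I_3\right]\boldsymbol{1}$$

Collecting terms containing various powers of λ, we get

$$\begin{aligned}
&\lambda^3\left(I_0~\boldsymbol{1} - \frac{\partial I_1}{\partial \boldsymbol{A}} - \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_0}{\partial \boldsymbol{A}}\right) + \lambda^2\left(I_1~\boldsymbol{1} - \frac{\partial I_2}{\partial \boldsymbol{A}} - \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_1}{\partial \boldsymbol{A}}\right) \\
&\quad + \lambda\left(I_2~\boldsymbol{1} - \frac{\partial I_3}{\partial \boldsymbol{A}} - \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_2}{\partial \boldsymbol{A}}\right) + \left(I_3~\boldsymbol{1} - \frac{\partial I_4}{\partial \boldsymbol{A}} - \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_3}{\partial \boldsymbol{A}}\right) = \boldsymbol{0}
\end{aligned}$$

Then, invoking the arbitrariness of λ, we have

$$\begin{aligned}
I_0~\boldsymbol{1} - \frac{\partial I_1}{\partial \boldsymbol{A}} - \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_0}{\partial \boldsymbol{A}} &= \boldsymbol{0} \\
I_1~\boldsymbol{1} - \frac{\partial I_2}{\partial \boldsymbol{A}} - \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_1}{\partial \boldsymbol{A}} &= \boldsymbol{0} \\
I_2~\boldsymbol{1} - \frac{\partial I_3}{\partial \boldsymbol{A}} - \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_2}{\partial \boldsymbol{A}} &= \boldsymbol{0} \\
I_3~\boldsymbol{1} - \frac{\partial I_4}{\partial \boldsymbol{A}} - \boldsymbol{A}^\textsf{T}\cdot\frac{\partial I_3}{\partial \boldsymbol{A}} &= \boldsymbol{0}
\end{aligned}$$

This implies that

$$\begin{aligned}
\frac{\partial I_1}{\partial \boldsymbol{A}} &= \boldsymbol{1} \\
\frac{\partial I_2}{\partial \boldsymbol{A}} &= I_1~\boldsymbol{1} - \boldsymbol{A}^\textsf{T} \\
\frac{\partial I_3}{\partial \boldsymbol{A}} &= I_2~\boldsymbol{1} - \boldsymbol{A}^\textsf{T}\cdot\left(I_1~\boldsymbol{1} - \boldsymbol{A}^\textsf{T}\right) = \left(\boldsymbol{A}^2 - I_1~\boldsymbol{A} + I_2~\boldsymbol{1}\right)^\textsf{T}
\end{aligned}$$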
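The two expressions for the derivative of the third invariant, one through the inverse transpose and one as a polynomial in the tensor, can be compared on a concrete matrix. The sketch below is a minimal plain-Python check using integer arithmetic so that the comparison is exact; the matrix and the helper names are assumptions made for illustration.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def trace(A):
    return A[0][0] + A[1][1] + A[2][2]


def cofactor(A):
    """Cofactor matrix, equal to det(A) * inverse(A)^T for invertible A."""
    C = [[0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            r = [a for a in range(3) if a != i]
            c = [b for b in range(3) if b != j]
            minor = A[r[0]][c[0]] * A[r[1]][c[1]] - A[r[0]][c[1]] * A[r[1]][c[0]]
            C[i][j] = (-1) ** (i + j) * minor
    return C


A = [[2, 1, 0], [0, 3, 1], [1, 0, 4]]  # arbitrary example matrix
A2 = matmul(A, A)
I1 = trace(A)
I2 = (I1 * I1 - trace(A2)) // 2  # the numerator is always even for integer A

# dI2/dA = I1 * 1 - A^T
dI2 = [[I1 * (i == j) - A[j][i] for j in range(3)] for i in range(3)]

# dI3/dA written as (A^2 - I1*A + I2*1)^T
dI3_poly = [[A2[j][i] - I1 * A[j][i] + I2 * (i == j) for j in range(3)]
            for i in range(3)]

# dI3/dA written as det(A) * A^{-T}, which is the cofactor matrix
dI3_cof = cofactor(A)

print(dI3_poly == dI3_cof)
```

Agreement of the two forms is a restatement of the Cayley-Hamilton theorem, so the check should succeed for any 3 × 3 matrix, not only the one used here.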

Derivative of the second-order identity tensor

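Let $\boldsymbol{1}$ be the second order identity tensor. Then the derivative of this tensor with respect to a second order tensor $\boldsymbol{A}$ is given by

$$\frac{\partial \boldsymbol{1}}{\partial \boldsymbol{A}}:\boldsymbol{T} = \boldsymbol{\mathsf{0}}:\boldsymbol{T} = \boldsymbol{0}$$

where $\boldsymbol{\mathsf{0}}$ is the fourth order zero tensor. This is because $\boldsymbol{1}$ is independent of $\boldsymbol{A}$.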

Derivative of a second-order tensor with respect to itself

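Let $\boldsymbol{A}$ be a second order tensor. Then

$$\frac{\partial \boldsymbol{A}}{\partial \boldsymbol{A}}:\boldsymbol{T} = \left[\frac{\partial}{\partial \alpha}(\boldsymbol{A} + \alpha~\boldsymbol{T})\right]_{\alpha=0} = \boldsymbol{T} = \boldsymbol{\mathsf{I}}:\boldsymbol{T}$$

Therefore,

$$\frac{\partial \boldsymbol{A}}{\partial \boldsymbol{A}} = \boldsymbol{\mathsf{I}}$$

Here $\boldsymbol{\mathsf{I}}$ is the fourth order identity tensor. In index notation with respect to an orthonormal basis

$$\boldsymbol{\mathsf{I}} = \delta_{ik}~\delta_{jl}~\mathbf{e}_i\otimes\mathbf{e}_j\otimes\mathbf{e}_k\otimes\mathbf{e}_l$$

This result implies that

$$\frac{\partial \boldsymbol{A}^\textsf{T}}{\partial \boldsymbol{A}}:\boldsymbol{T} = \boldsymbol{\mathsf{I}}^\textsf{T}:\boldsymbol{T} = \boldsymbol{T}^\textsf{T}$$

where

$$\boldsymbol{\mathsf{I}}^\textsf{T} = \delta_{jk}~\delta_{il}~\mathbf{e}_i\otimes\mathbf{e}_j\otimes\mathbf{e}_k\otimes\mathbf{e}_l$$

Therefore, if the tensor $\boldsymbol{A}$ is symmetric, then the derivative is also symmetric and we get

$$\frac{\partial \boldsymbol{A}}{\partial \boldsymbol{A}} = \boldsymbol{\mathsf{I}}^{(s)} = \frac{1}{2}\left(\boldsymbol{\mathsf{I}} + \boldsymbol{\mathsf{I}}^\textsf{T}\right)$$

where the symmetric fourth order identity tensor is

$$\boldsymbol{\mathsf{I}}^{(s)} = \frac{1}{2}\left(\delta_{ik}~\delta_{jl} + \delta_{il}~\delta_{jk}\right)\mathbf{e}_i\otimes\mathbf{e}_j\otimes\mathbf{e}_k\otimes\mathbf{e}_l$$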
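The action of the symmetric fourth order identity tensor is easiest to see on components: contracting it with an arbitrary second order tensor returns the symmetric part of that tensor. The following is a minimal sketch in plain Python, assuming the component form built from Kronecker deltas with equal weights of one half; the function names are hypothetical.

```python
def delta(i, j):
    """Kronecker delta."""
    return 1.0 if i == j else 0.0


def sym_identity(i, j, k, l):
    """Components of the symmetric fourth order identity tensor."""
    return 0.5 * (delta(i, k) * delta(j, l) + delta(i, l) * delta(j, k))


def double_contract(I4, T):
    """Return the second order tensor with components I4(i, j, k, l) * T[k][l]."""
    n = len(T)
    return [[sum(I4(i, j, k, l) * T[k][l] for k in range(n) for l in range(n))
             for j in range(n)] for i in range(n)]


T = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]  # not symmetric
sym_T = double_contract(sym_identity, T)
for row in sym_T:
    print(row)
```

The result equals half the sum of `T` and its transpose, which is why this tensor replaces the ordinary fourth order identity when derivatives are taken with respect to symmetric tensors.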

Derivative of the inverse of a second-order tensor

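Let $\boldsymbol{A}$ and $\boldsymbol{T}$ be two second order tensors, then

$$\frac{\partial}{\partial \boldsymbol{A}}\left(\boldsymbol{A}^{-1}\right):\boldsymbol{T} = -\boldsymbol{A}^{-1}\cdot\boldsymbol{T}\cdot\boldsymbol{A}^{-1}$$

In index notation with respect to an orthonormal basis

$$\frac{\partial A^{-1}_{ij}}{\partial A_{kl}}~T_{kl} = -A^{-1}_{ik}~T_{kl}~A^{-1}_{lj} \quad\implies\quad \frac{\partial A^{-1}_{ij}}{\partial A_{kl}} = -A^{-1}_{ik}~A^{-1}_{lj}$$

We also have

$$\frac{\partial}{\partial \boldsymbol{A}}\left(\boldsymbol{A}^{-\textsf{T}}\right):\boldsymbol{T} = -\boldsymbol{A}^{-\textsf{T}}\cdot\boldsymbol{T}^\textsf{T}\cdot\boldsymbol{A}^{-\textsf{T}}$$

In index notation

$$\frac{\partial A^{-1}_{ji}}{\partial A_{kl}}~T_{kl} = -A^{-1}_{jk}~T_{kl}~A^{-1}_{li} \quad\implies\quad \frac{\partial A^{-1}_{ji}}{\partial A_{kl}} = -A^{-1}_{li}~A^{-1}_{jk}$$

If the tensor $\boldsymbol{A}$ is symmetric then

$$\frac{\partial A^{-1}_{ij}}{\partial A_{kl}} = -\frac{1}{2}\left(A^{-1}_{ik}~A^{-1}_{jl} + A^{-1}_{il}~A^{-1}_{jk}\right)$$

To prove the first relation, recall that

$$\frac{\partial \boldsymbol{1}}{\partial \boldsymbol{A}}:\boldsymbol{T} = \boldsymbol{0}$$

Since $\boldsymbol{A}^{-1}\cdot\boldsymbol{A} = \boldsymbol{1}$, we can write

$$\frac{\partial}{\partial \boldsymbol{A}}\left(\boldsymbol{A}^{-1}\cdot\boldsymbol{A}\right):\boldsymbol{T} = \boldsymbol{0}$$

Using the product rule for second order tensors

$$\frac{\partial}{\partial \boldsymbol{S}}\left[\boldsymbol{F}_1(\boldsymbol{S})\cdot\boldsymbol{F}_2(\boldsymbol{S})\right]:\boldsymbol{T} = \left(\frac{\partial \boldsymbol{F}_1}{\partial \boldsymbol{S}}:\boldsymbol{T}\right)\cdot\boldsymbol{F}_2 + \boldsymbol{F}_1\cdot\left(\frac{\partial \boldsymbol{F}_2}{\partial \boldsymbol{S}}:\boldsymbol{T}\right)$$

we get

$$\frac{\partial}{\partial \boldsymbol{A}}\left(\boldsymbol{A}^{-1}\cdot\boldsymbol{A}\right):\boldsymbol{T} = \left(\frac{\partial \boldsymbol{A}^{-1}}{\partial \boldsymbol{A}}:\boldsymbol{T}\right)\cdot\boldsymbol{A} + \boldsymbol{A}^{-1}\cdot\left(\frac{\partial \boldsymbol{A}}{\partial \boldsymbol{A}}:\boldsymbol{T}\right) = \boldsymbol{0}$$

or,

$$\left(\frac{\partial \boldsymbol{A}^{-1}}{\partial \boldsymbol{A}}:\boldsymbol{T}\right)\cdot\boldsymbol{A} = -\boldsymbol{A}^{-1}\cdot\boldsymbol{T}$$

Therefore,

$$\frac{\partial}{\partial \boldsymbol{A}}\left(\boldsymbol{A}^{-1}\right):\boldsymbol{T} = -\boldsymbol{A}^{-1}\cdot\boldsymbol{T}\cdot\boldsymbol{A}^{-1}$$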
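The result that the derivative of the inverse in a direction T equals minus the inverse times T times the inverse can be verified by finite differences. The sketch below is a minimal plain-Python check; it uses 2 × 2 matrices only to keep the inverse short (the formula does not depend on the dimension), and the matrices and helper names are assumptions made for illustration.

```python
def inv2(A):
    """Inverse of a 2 x 2 matrix given as nested lists."""
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matmul2(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


A = [[2.0, 1.0], [1.0, 3.0]]     # invertible example matrix
T = [[0.5, -1.0], [2.0, 0.25]]   # arbitrary direction
h = 1e-6

# Central difference of the inverse along A + alpha*T at alpha = 0
Ap = [[A[i][j] + h * T[i][j] for j in range(2)] for i in range(2)]
Am = [[A[i][j] - h * T[i][j] for j in range(2)] for i in range(2)]
inv_p, inv_m = inv2(Ap), inv2(Am)
numeric = [[(inv_p[i][j] - inv_m[i][j]) / (2.0 * h) for j in range(2)]
           for i in range(2)]

# Closed form: -A^{-1} . T . A^{-1}
Ainv = inv2(A)
product = matmul2(matmul2(Ainv, T), Ainv)
analytic = [[-product[i][j] for j in range(2)] for i in range(2)]

max_error = max(abs(numeric[i][j] - analytic[i][j])
                for i in range(2) for j in range(2))
print(max_error)
```

The order of the factors matters because matrix products do not commute; swapping T with one of the inverses would generally break the agreement.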

Integration by parts

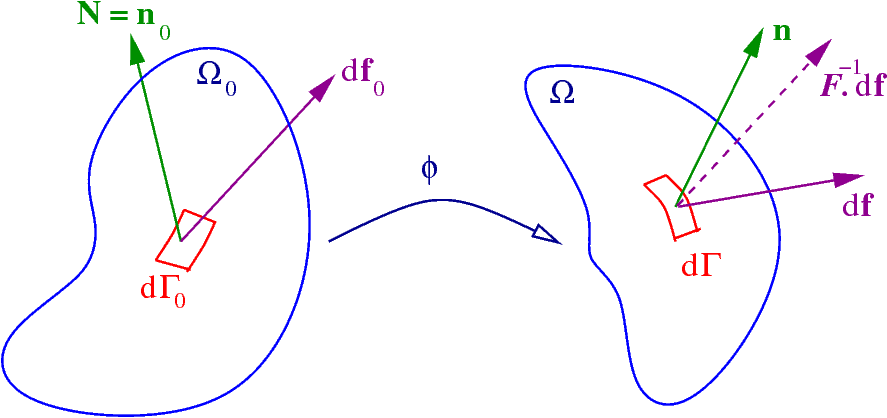

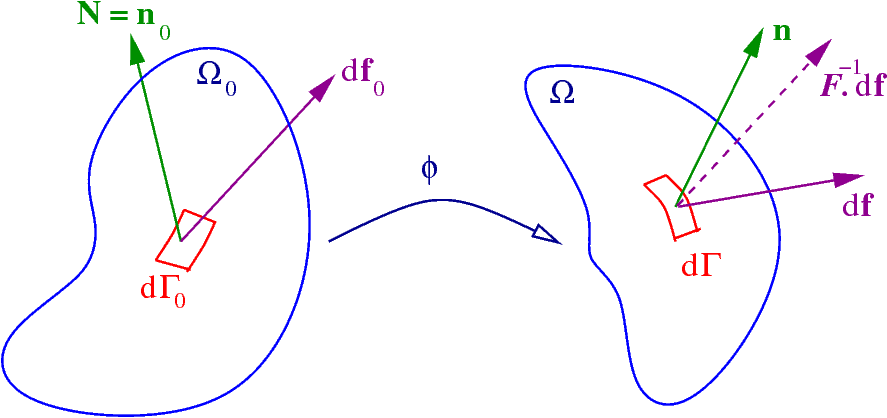

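Another important operation related to tensor derivatives in continuum mechanics is integration by parts. The formula for integration by parts can be written as

$$\int_\Omega \boldsymbol{F}\otimes\boldsymbol{\nabla}\boldsymbol{G}~d\Omega = \int_\Gamma \mathbf{n}\otimes(\boldsymbol{F}\otimes\boldsymbol{G})~d\Gamma - \int_\Omega \boldsymbol{G}\otimes\boldsymbol{\nabla}\boldsymbol{F}~d\Omega$$

where $\boldsymbol{F}$ and $\boldsymbol{G}$ are differentiable tensor fields of arbitrary order, $\mathbf{n}$ is the unit outward normal to the domain $\Omega$ (with boundary $\Gamma$) over which the tensor fields are defined, $\otimes$ represents a generalized tensor product operator, and $\boldsymbol{\nabla}$ is a generalized gradient operator. When $\boldsymbol{F}$ is equal to the identity tensor, we get the divergence theorem

$$\int_\Omega \boldsymbol{\nabla}\boldsymbol{G}~d\Omega = \int_\Gamma \mathbf{n}\otimes\boldsymbol{G}~d\Gamma$$

We can express the formula for integration by parts in Cartesian index notation as

$$\int_\Omega F_{ijk\dots}~G_{lmn\dots,p}~d\Omega = \int_\Gamma n_p~F_{ijk\dots}~G_{lmn\dots}~d\Gamma - \int_\Omega G_{lmn\dots}~F_{ijk\dots,p}~d\Omega$$

For the special case where the tensor product operation is a contraction of one index and the gradient operation is a divergence, and both $\boldsymbol{F}$ and $\boldsymbol{G}$ are second order tensors, we have

$$\int_\Omega \boldsymbol{F}\cdot(\boldsymbol{\nabla}\cdot\boldsymbol{G})~d\Omega = \int_\Gamma \mathbf{n}\cdot\left(\boldsymbol{G}\cdot\boldsymbol{F}^\textsf{T}\right)~d\Gamma - \int_\Omega (\boldsymbol{\nabla}\boldsymbol{F}):\boldsymbol{G}^\textsf{T}~d\Omega$$

In index notation,

$$\int_\Omega F_{ij}~G_{pj,p}~d\Omega = \int_\Gamma n_p~F_{ij}~G_{pj}~d\Gamma - \int_\Omega G_{pj}~F_{ij,p}~d\Omega$$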

See also

* Covariant derivative

* Ricci calculus
