Szemerédi Regularity Lemma on:

[Wikipedia]

[Google]

[Amazon]

In

In

We call a pair of parts -regular if, whenever you take a large subset of each part, their edge density isn't too far off the edge density of the pair of parts. Formally,

We call a pair of parts -regular if, whenever you take a large subset of each part, their edge density isn't too far off the edge density of the pair of parts. Formally,

We shall find an ε-regular partition for a given graph following an algorithm:

# Start with a partition

# While the partition isn't ε-regular:

#*Find the subsets which witness ε-irregularity for each irregular pair.

#Refine the partition using all the witnessing subsets.

We apply a technique called the energy increment argument to show that this process stops after a bounded number of steps. To do this, we define a measure which must increase by a certain amount in each step, but it's bounded above and thus cannot increase indefinitely. This measure is called 'energy' as it's an quantity.

We shall find an ε-regular partition for a given graph following an algorithm:

# Start with a partition

# While the partition isn't ε-regular:

#*Find the subsets which witness ε-irregularity for each irregular pair.

#Refine the partition using all the witnessing subsets.

We apply a technique called the energy increment argument to show that this process stops after a bounded number of steps. To do this, we define a measure which must increase by a certain amount in each step, but it's bounded above and thus cannot increase indefinitely. This measure is called 'energy' as it's an quantity.

In

In extremal graph theory

Extremal graph theory is a branch of combinatorics, itself an area of mathematics, that lies at the intersection of extremal combinatorics and graph theory. In essence, extremal graph theory studies how global properties of a graph influence loca ...

, Szemerédi’s regularity lemma states that a graph

Graph may refer to:

Mathematics

*Graph (discrete mathematics), a structure made of vertices and edges

**Graph theory, the study of such graphs and their properties

*Graph (topology), a topological space resembling a graph in the sense of discret ...

can be partitioned into a bounded number of parts so that the edges between parts are regular (in the sense defined below). The lemma shows that certain properties of random graphs

In mathematics, random graph is the general term to refer to probability distributions over Graph (discrete mathematics), graphs. Random graphs may be described simply by a probability distribution, or by a random process which generates them. ...

can be applied to dense graphs like counting the copies of a given subgraph within graphs. Endre Szemerédi

Endre Szemerédi (; born August 21, 1940) is a Hungarian-American mathematician and computer scientist, working in the field of combinatorics and theoretical computer science. He has been the State of New Jersey Professor of computer science a ...

proved the lemma over bipartite graphs

In the mathematical field of graph theory, a bipartite graph (or bigraph) is a graph whose vertices can be divided into two disjoint and independent sets U and V, that is, every edge connects a vertex in U to one in V. Vertex sets U and V a ...

for his theorem on arithmetic progressions in 1975 and for general graphs in 1978. Variants of the lemma use different notions of regularity and apply to other mathematical objects like hypergraphs

In mathematics, a hypergraph is a generalization of a graph in which an edge can join any number of vertices. In contrast, in an ordinary graph, an edge connects exactly two vertices.

Formally, a directed hypergraph is a pair (X,E), where X is ...

.

Statement

To state Szemerédi's regularity lemma formally, we must formalize what the edge distribution between parts behaving 'almost randomly' really means. By 'almost random', we're referring to a notion called -regularity. To understand what this means, we first state some definitions. In what follows is a graph with vertex set .Definition 1. Let be disjoint subsets of . The edge density of the pair is defined as: : where denotes the set of edges having one end vertex in and one in .

We call a pair of parts -regular if, whenever you take a large subset of each part, their edge density isn't too far off the edge density of the pair of parts. Formally,

We call a pair of parts -regular if, whenever you take a large subset of each part, their edge density isn't too far off the edge density of the pair of parts. Formally,

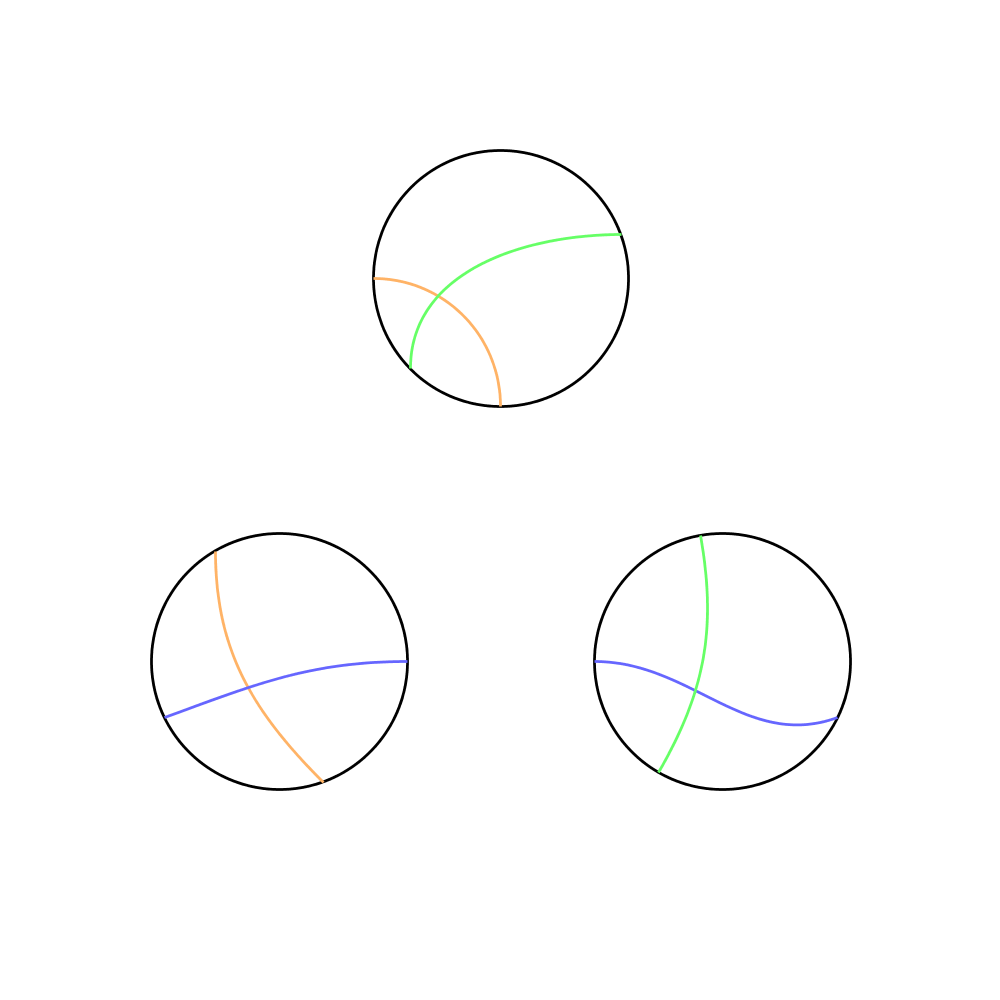

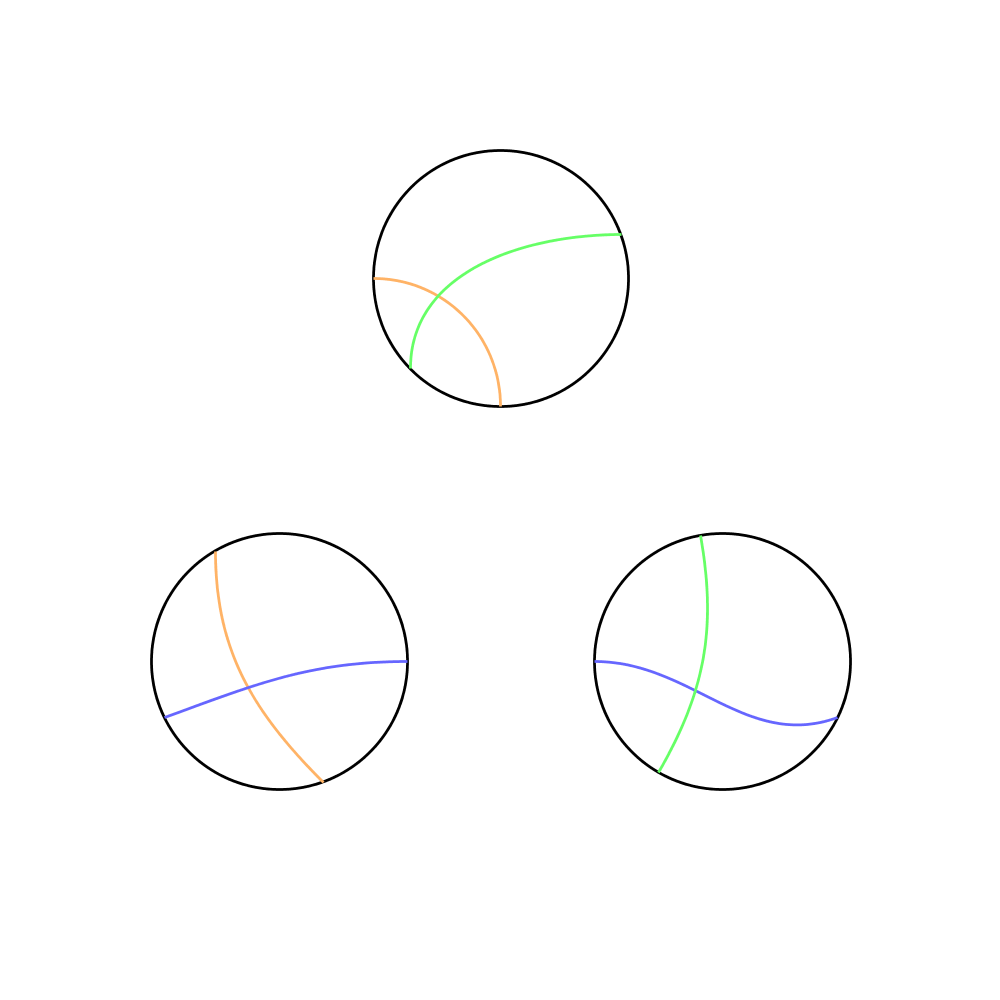

Definition 2. For , a pair of vertex sets and is called -regular, if for all subsets , satisfying , , we have :The natural way to define an -regular partition should be one where each pair of parts is -regular. However, some graphs, such as the half graphs, require many pairs of partitions (but a small fraction of all pairs) to be irregular. So we shall define -regular partitions to be one where most pairs of parts are -regular.

Definition 3. A partition of into sets is called an -regular partition if :Now we can state the lemma:

Szemerédi's regularity Lemma. For every and positiveThe bound for the number of parts in the partition of the graph given by the proofs of Szemeredi's regularity lemma is very large, given by a -level iterated exponential of . At one time it was hoped that the true bound was much smaller, which would have had several useful applications. However found examples of graphs for which does indeed grow very fast and is at least as large as a -level iterated exponential of .integer An integer is the number zero (0), a positive natural number (1, 2, 3, ...), or the negation of a positive natural number (−1, −2, −3, ...). The negations or additive inverses of the positive natural numbers are referred to as negative in ...there exists an integer such that if is a graph with at least vertices, there exists an integer in the range and an -regular partition of the vertex set of into sets.

Proof

We shall find an ε-regular partition for a given graph following an algorithm:

# Start with a partition

# While the partition isn't ε-regular:

#*Find the subsets which witness ε-irregularity for each irregular pair.

#Refine the partition using all the witnessing subsets.

We apply a technique called the energy increment argument to show that this process stops after a bounded number of steps. To do this, we define a measure which must increase by a certain amount in each step, but it's bounded above and thus cannot increase indefinitely. This measure is called 'energy' as it's an quantity.

We shall find an ε-regular partition for a given graph following an algorithm:

# Start with a partition

# While the partition isn't ε-regular:

#*Find the subsets which witness ε-irregularity for each irregular pair.

#Refine the partition using all the witnessing subsets.

We apply a technique called the energy increment argument to show that this process stops after a bounded number of steps. To do this, we define a measure which must increase by a certain amount in each step, but it's bounded above and thus cannot increase indefinitely. This measure is called 'energy' as it's an quantity.

Definition 4. Let be subsets of . Set . The energy of the pair is defined as: : For partitions of and of , we define the energy to be the sum of the energies between each pair of parts: : Finally, for a partition of , define the energy of to be . Specifically, :Note that energy is between 0 and 1 because edge density is bounded above by 1: : Now, we start by proving that energy does not decrease upon refinement.

Lemma 1. (Energy is nondecreasing under partitioning) For any partitions and of vertex sets and , .

Proof: Let and . Choose vertices uniformly from and uniformly from , with in part and in part . Then define the random variable . Let us look at properties of . The expectation is : The second moment is : By convexity, . Rearranging, we get that for all .If each part of is further partitioned, the new partition is called a refinement of . Now, if , applying Lemma 1 to each pair proves that for every refinement of , . Thus the refinement step in the algorithm doesn't lose any energy.

Lemma 2. (Energy boost lemma) If is not -regular as witnessed by , then, :

Proof: Define as above. Then, : But observe that with probability (corresponding to and ), so :Now we can prove the energy increment argument, which shows that energy increases substantially in each iteration of the algorithm.

Lemma 3 (Energy increment lemma) If a partition of is not -regular, then there exists a refinement of where every is partitioned into at most parts such that :

Proof: For all such that is not -regular, find and that witness irregularity (do this simultaneously for all irregular pairs). Let be a common refinement of by 's. Each is partitioned into at most parts as desired. Then, : Where is the partition of given by . By Lemma 1, the above quantity is at least : Since is cut by when creating , so is a refinement of . By lemma 2, the above sum is at least : But the second sum is at least since is not -regular, so we deduce the desired inequality.Now, starting from any partition, we can keep applying Lemma 3 as long as the resulting partition isn't -regular. But in each step energy increases by , and it's bounded above by 1. Then this process can be repeated at most times, before it terminates and we must have an -regular partition.

Applications

Graph counting lemma

If we have enough information about the regularity of a graph, we can count the number of copies of a specific subgraph within the graph up to small error.Graph Counting Lemma. Let be a graph with , and let . Let be an -vertex graph with vertex sets such that is -regular whenever . Then, the number of labeled copies of in is within of :This can be combined with Szemerédi's regularity lemma to prove the

Graph removal lemma

In graph theory, the graph removal lemma states that when a graph contains few copies of a given Subgraph (graph theory), subgraph, then all of the copies can be eliminated by removing a small number of edges. The special case in which the subgraph ...

. The graph removal lemma can be used to prove Roth's Theorem on Arithmetic Progressions, and a generalization of it, the hypergraph removal lemma

In graph theory, the hypergraph removal lemma states that when a hypergraph contains few copies of a given sub-hypergraph, then all of the copies can be eliminated by removing a small number of hyperedges. It is a generalization of the graph remova ...

, can be used to prove Szemerédi's theorem

In arithmetic combinatorics, Szemerédi's theorem is a result concerning arithmetic progressions in subsets of the integers. In 1936, Erdős and Turán conjectured that every set of integers ''A'' with positive natural density contains a ''k''- ...

.

The graph removal lemma generalizes to induced subgraph

In graph theory, an induced subgraph of a graph is another graph, formed from a subset of the vertices of the graph and ''all'' of the edges, from the original graph, connecting pairs of vertices in that subset.

Definition

Formally, let G=(V,E) ...

s, by considering edge edits instead of only edge deletions. This was proved by Alon, Fischer, Krivelevich, and Szegedy in 2000. However, this required a stronger variation of the regularity lemma.

Szemerédi's regularity lemma does not provide meaningful results in sparse graph

In mathematics, a dense graph is a Graph (discrete mathematics), graph in which the number of edges is close to the maximal number of edges (where every pair of Vertex (graph theory), vertices is connected by one edge). The opposite, a graph with ...

s. Since sparse graphs have subconstant edge densities, -regularity is trivially satisfied. Even though the result seems purely theoretical, some attempts have been made to use the regularity method as compression technique for large graphs.

Frieze-Kannan regularity

A different notion of regularity was introduced by Frieze and Kannan, known as the weak regularity lemma. This lemma defines a weaker notion of regularity than that of Szemerédi which uses better bounds and can be used in efficient algorithms. Given a graph , a partition of its vertices is said to be ''Frieze-Kannan'' -regular if for any pair of sets : : The weak regularity lemma for graphs states that every graph has a weak -regular partition into at most parts. This notion can be extended to graphons by defining a stepping operator. Given a graphon and a partition of