Runtime Verification on:

[Wikipedia]

[Google]

[Amazon]

Runtime verification is a computing system analysis and execution approach based on extracting information from a running system and using it to detect and possibly react to observed behaviors satisfying or violating certain properties. Some very particular properties, such as datarace and

The broad field of runtime verification methods can be classified by three dimensions:

* The system can be monitored during the execution itself (online) or after the execution e.g. in form of

The broad field of runtime verification methods can be classified by three dimensions:

* The system can be monitored during the execution itself (online) or after the execution e.g. in form of

The Jav

The Jav

Iterator

interface requires that the

The figure to the right shows a finite-state machine that defines a possible monitor for checking and enforcing this property with runtime verification. From the ''unknown'' state, it is always an error to call the

Th

Th

Vector

class in Java has two means for iterating over its elements. One may use the Iterator interface, as seen in the previous example, or one may use th

interface. Besides the addition of a remove method for the Iterator interface, the main difference is that Iterator is "fail fast" while Enumeration is not. What this means is that if one modifies the Vector (other than by using the Iterator remove method) when one is iterating over the Vector using an Iterator,

is thrown. However, when using an Enumeration this is not a case, as mentioned. This can result in non-deterministic results from a program because the Vector is left in an inconsistent state from the perspective of the Enumeration. For legacy programs that still use the Enumeration interface, one may wish to enforce that Enumerations are not used when their underlying Vector is modified. The following parametric regular pattern can be used to enforce this behavior: :∀ Vector ''v'', Enumeration ''e'': (''e'' = ''v''.elements()) (''e''.nextElement())* ''v''.update() ''e''.nextElement() This pattern is parametric in both the Enumeration and the Vector. Intuitively, and as above runtime verification systems need not implement their parametric monitors this way, one may think of the parametric monitor for this property as creating and keeping track of a non-parametric monitor instance for each possible pair of Vector and Enumeration. Some events may concern several monitors at the same time, such as

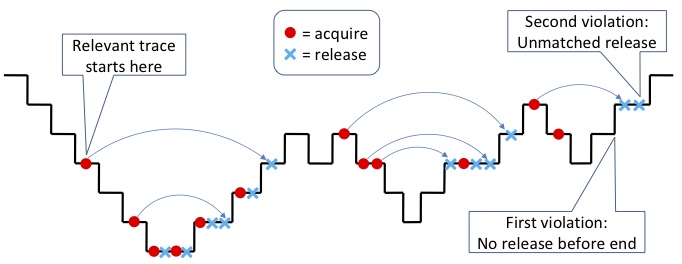

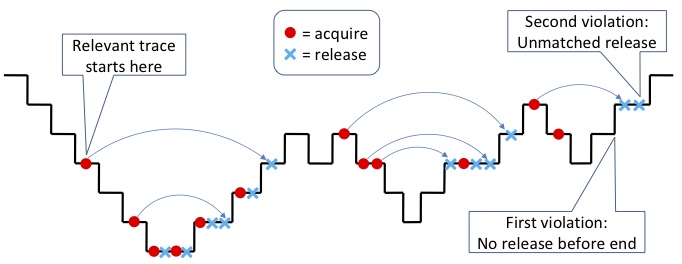

The pattern specifies balanced sequences of nested begin/end and acquire/release pairs for each Thread and Lock ( is the empty sequence). Here begin and end refer to the begin and end of every method in the program (except the calls to acquire and release themselves). They are parametric in the Thread because it is necessary to associate the beginning and end of methods if and only if they belong to the same Thread. The acquire and release events are also parametric in the Thread for the same reason. They are, additionally, parametric in Lock because we do not wish to associate the releases of one Lock with the acquires of another. In the extreme, it is possible that there will be an instance of the property, i.e., a copy of the context-free parsing mechanism, for each possible combination of Thread with Lock; this happens, again, intuitively, because runtime verification systems may implement the same functionality differently. For example, if a system has Threads , , and with Locks and , then it is possible to have to maintain property instances for the pairs <,>, <,>, <,>, <,>, <,>, and <,>. This property should be monitored for failures to match the pattern because the pattern specified correct behavior. The figure to the right shows a trace that produces two violations of this property. The steps down in the figure represent the beginning of a method, while the steps up are the end. The grey arrows in the figure show the matching between given acquires and releases of the same Lock. For simplicity, the trace shows only one Thread and one Lock.

The pattern specifies balanced sequences of nested begin/end and acquire/release pairs for each Thread and Lock ( is the empty sequence). Here begin and end refer to the begin and end of every method in the program (except the calls to acquire and release themselves). They are parametric in the Thread because it is necessary to associate the beginning and end of methods if and only if they belong to the same Thread. The acquire and release events are also parametric in the Thread for the same reason. They are, additionally, parametric in Lock because we do not wish to associate the releases of one Lock with the acquires of another. In the extreme, it is possible that there will be an instance of the property, i.e., a copy of the context-free parsing mechanism, for each possible combination of Thread with Lock; this happens, again, intuitively, because runtime verification systems may implement the same functionality differently. For example, if a system has Threads , , and with Locks and , then it is possible to have to maintain property instances for the pairs <,>, <,>, <,>, <,>, <,>, and <,>. This property should be monitored for failures to match the pattern because the pattern specified correct behavior. The figure to the right shows a trace that produces two violations of this property. The steps down in the figure represent the beginning of a method, while the steps up are the end. The grey arrows in the figure show the matching between given acquires and releases of the same Lock. For simplicity, the trace shows only one Thread and one Lock.

deadlock

Deadlock commonly refers to:

* Deadlock (computer science), a situation where two processes are each waiting for the other to finish

* Deadlock (locksmithing) or deadbolt, a physical door locking mechanism

* Political deadlock or gridlock, a si ...

freedom, are typically desired to be satisfied by all systems and may be best implemented algorithmically. Other properties can be more conveniently captured as formal specification

In computer science, formal specifications are mathematically based techniques whose purpose is to help with the implementation of systems and software. They are used to describe a system, to analyze its behavior, and to aid in its design by verify ...

s. Runtime verification specifications are typically expressed in trace predicate formalisms, such as finite-state machine

A finite-state machine (FSM) or finite-state automaton (FSA, plural: ''automata''), finite automaton, or simply a state machine, is a mathematical model of computation. It is an abstract machine that can be in exactly one of a finite number o ...

s, regular expression

A regular expression (shortened as regex or regexp), sometimes referred to as rational expression, is a sequence of characters that specifies a match pattern in text. Usually such patterns are used by string-searching algorithms for "find" ...

s, context-free patterns, linear temporal logic

In logic, linear temporal logic or linear-time temporal logic (LTL) is a modal logic, modal temporal logic with modalities referring to time. In LTL, one can encode formula (logic), formulae about the future of path (graph theory), paths, e.g., a c ...

s, etc., or extensions of these. This allows for a less ad-hoc approach than normal testing. However, any mechanism for monitoring an executing system is considered runtime verification, including verifying against test oracles and reference implementations . When formal requirements specifications are provided, monitors are synthesized from them and infused within the system by means of instrumentation. Runtime verification can be used for many purposes, such as security or safety policy monitoring Policy monitoring comes a range of activities describing and analyzing the development and implementation of policies, identifying potential gaps in the process, outlining areas for improvement, and holding policy implementers accountable for their ...

, debugging, testing, verification, validation, profiling, fault protection, behavior modification (e.g., recovery), etc. Runtime verification avoids the complexity of traditional formal verification

In the context of hardware and software systems, formal verification is the act of proving or disproving the correctness of a system with respect to a certain formal specification or property, using formal methods of mathematics.

Formal ver ...

techniques, such as model checking

In computer science, model checking or property checking is a method for checking whether a finite-state model of a system meets a given specification (also known as correctness). This is typically associated with hardware or software syst ...

and theorem proving, by analyzing only one or a few execution traces and by working directly with the actual system, thus scaling up relatively well and giving more confidence in the results of the analysis (because it avoids the tedious and error-prone step of formally modelling the system), at the expense of less coverage. Moreover, through its reflective capabilities runtime verification can be made an integral part of the target system, monitoring and guiding its execution during deployment.

History and context

Checking formally or informally specified properties against executing systems or programs is an old topic (notable examples aredynamic typing

In computer programming, a type system is a logical system comprising a set of rules that assigns a property called a ''type'' (for example, integer, floating point, string) to every '' term'' (a word, phrase, or other set of symbols). Usu ...

in software, or fail-safe devices or watchdog timers in hardware), whose precise roots are hard to identify. The terminology ''runtime verification'' was formally introduced as the name of a 2001 workshop aimed at addressing problems at the boundary between formal verification and testing. For large code bases, manually writing test cases turns out to be very time consuming. In addition, not all errors can be detected during development. Early contributions to automated verification were made at the NASA Ames Research Center by Klaus Havelund and Grigore Rosu to archive high safety standards in spacecraft, rovers and avionics technology.Klaus Havelund and Grigore Rosu. 2004. An Overview of the Runtime Verification Tool Java PathExplorer. Formal Methods in System Design, 24(2), March 2004. They proposed a tool to verify specifications in temporal logic and to detect race condition

A race condition or race hazard is the condition of an electronics, software, or other system where the system's substantive behavior is dependent on the sequence or timing of other uncontrollable events, leading to unexpected or inconsistent ...

s and deadlocks in Java

Java is one of the Greater Sunda Islands in Indonesia. It is bordered by the Indian Ocean to the south and the Java Sea (a part of Pacific Ocean) to the north. With a population of 156.9 million people (including Madura) in mid 2024, proje ...

programs by analyzing single execution paths.

Currently, runtime verification techniques are often presented with various alternative names, such as runtime monitoring, runtime checking, runtime reflection, runtime analysis, dynamic analysis, runtime/dynamic symbolic analysis, trace analysis, log file analysis, etc., all referring to instances of the same high-level concept applied either to different areas or by scholars from different communities. Runtime verification is intimately related to other well-established areas, such as testing (particularly model-based testing) when used before deployment and fault-tolerant system

Fault tolerance is the ability of a system to maintain proper operation despite failures or faults in one or more of its components. This capability is essential for high-availability, mission-critical, or even life-critical systems.

Fault to ...

s when used during deployment.

Within the broad area of runtime verification, one can distinguish several categories, such as:

* "specification-less" monitoring that targets a fixed set of mostly concurrency-related properties such as atomicity. The pioneering work in this area is by Savage ''et al.'' with the Eraser algorithmStefan Savage, Michael Burrows, Greg Nelson, Patrick Sobalvarro, and Thomas Anderson. 1997. Eraser: a Dynamic Data Race Detector for Multithreaded Programs. ACM Trans. Comput. Syst. 15(4), November 1997, pp. 391-411.

* monitoring with respect to temporal logic specifications; early contributions in this direction has been made by Lee, Kannan, and their collaborators,Insup Lee, Sampath Kannan, Moonjoo Kim, Oleg Sokolsky, Mahesh Viswanathan, Runtime Assurance Based On Formal Specifications, Proceedings of International Conference on Parallel and Distributed Processing Techniques and Applications, June 1999. and Havelund and Rosu,.Klaus Havelund, Using Runtime Analysis to Guide Model Checking of Java Programs, 7th International SPIN Workshop, August 2000.Klaus Havelund and Grigore Rosu, Monitoring Programs using Rewriting, Automated Software Engineering (ASE'01), November 2001.

Basic approaches

log analysis

In computer log management and intelligence, log analysis (or ''system and network log analysis'') is an art and science seeking to make sense of computer-generated records (also called log or audit trail records). The process of creating such reco ...

(offline).

* The verifying code is integrated into the system (as done in Aspect-oriented Programming

In computing, aspect-oriented programming (AOP) is a programming paradigm that aims to increase modularity by allowing the separation of cross-cutting concerns. It does so by adding behavior to existing code (an advice) ''without'' modifying t ...

) or is provided as an external entity.

* The monitor can report violation or validation of the desired specification.

Nevertheless, the basic process in runtime verification remains similar:Yliès Falcone, Klaus Havelund and Giles, A Tutorial on Runtime Verification, 2013

# A monitor is created from some formal specification. This process usually can be done automatically if there are equivalent automata

An automaton (; : automata or automatons) is a relatively self-operating machine, or control mechanism designed to automatically follow a sequence of operations, or respond to predetermined instructions. Some automata, such as bellstrikers i ...

for the formulas of the formal language

In logic, mathematics, computer science, and linguistics, a formal language is a set of strings whose symbols are taken from a set called "alphabet".

The alphabet of a formal language consists of symbols that concatenate into strings (also c ...

the property is specified in. To transform a regular expression

A regular expression (shortened as regex or regexp), sometimes referred to as rational expression, is a sequence of characters that specifies a match pattern in text. Usually such patterns are used by string-searching algorithms for "find" ...

, a finite-state machine

A finite-state machine (FSM) or finite-state automaton (FSA, plural: ''automata''), finite automaton, or simply a state machine, is a mathematical model of computation. It is an abstract machine that can be in exactly one of a finite number o ...

can be used; a property in linear temporal logic

In logic, linear temporal logic or linear-time temporal logic (LTL) is a modal logic, modal temporal logic with modalities referring to time. In LTL, one can encode formula (logic), formulae about the future of path (graph theory), paths, e.g., a c ...

can be transformed into a Büchi automaton

In computer science and automata theory, a deterministic Büchi automaton is a theoretical machine which either accepts or rejects infinite inputs. Such a machine has a set of states and a transition function, which determines which state the mach ...

(see also Linear temporal logic to Büchi automaton

In mathematics, the term ''linear'' is used in two distinct senses for two different properties:

* linearity of a ''function'' (or '' mapping'');

* linearity of a ''polynomial''.

An example of a linear function is the function defined by f(x)=( ...

).

# The system is instrumented to send events concerning its execution state to the monitor.

# The system is executed and gets verified by the monitor.

# The monitor verifies the received event trace and produces a verdict whether the specification is satisfied. Additionally, the monitor sends feedback to the system to possibly correct false behaviour. When using offline monitoring the system of cause cannot receive any feedback, as the verification is done at a later point in time.

Examples

The examples below discuss some simple properties that have been considered, possibly with small variations, by several runtime verification groups by the time of this writing (April 2011). To make them more interesting, each property below uses a different specification formalism and all of them are parametric. Parametric properties are properties about traces formed with parametric events, which are events that bind data to parameters. Here a parametric property has the form , where is a specification in some appropriate formalism referring to generic (uninstantiated) parametric events. The intuition for such parametric properties is that the property expressed by must hold for all parameter instances encountered (through parametric events) in the observed trace. None of the following examples are specific to any particular runtime verification system, though support for parameters is obviously needed. In the following examples Java syntax is assumed, thus "" is logical equality, while "=" is assignment. Some methods (e.g.,update() in the UnsafeEnumExample) are dummy methods, which are not part of the Java API, that are used for clarity.

HasNext

The Jav

The JavIterator

interface requires that the

hasNext() method be called and return true before the next() method is called. If this

does not occur, it is very possible that a user will iterate "off the end of" The figure to the right shows a finite-state machine that defines a possible monitor for checking and enforcing this property with runtime verification. From the ''unknown'' state, it is always an error to call the

next() method because such an operation could be unsafe. If hasNext() is called and returns true, it is safe to call next(), so the monitor enters the ''more'' state. If, however, the hasNext() method returns false, there are no more elements, and the monitor enters the ''none'' state. In the ''more'' and ''none'' states, calling the hasNext() method provides no new information. It is safe to call the next() method from the ''more'' state, but it becomes unknown if more elements exist, so the monitor reenters the initial ''unknown'' state. Finally, calling the next() method from the ''none'' state results in entering the ''error'' state. What follows is a representation of this property using parametric past time linear temporal logic

In logic, linear temporal logic or linear-time temporal logic (LTL) is a modal logic, modal temporal logic with modalities referring to time. In LTL, one can encode formula (logic), formulae about the future of path (graph theory), paths, e.g., a c ...

.

This formula says that any call to the next() method must be immediately preceded by a call to hasNext() method that returns true. The property here is parametric in the Iterator i. Conceptually, this means that there will be one copy of the monitor for each possible Iterator in a test program, although runtime verification systems need not implement their parametric monitors this way. The monitor for this property would be set to trigger a handler when the formula is violated (equivalently when the finite-state machine enters the ''error'' state), which will occur when either next() is called without first calling hasNext(), or when hasNext() is called before next(), but returned false.

UnsafeEnum

Th

ThVector

class in Java has two means for iterating over its elements. One may use the Iterator interface, as seen in the previous example, or one may use th

interface. Besides the addition of a remove method for the Iterator interface, the main difference is that Iterator is "fail fast" while Enumeration is not. What this means is that if one modifies the Vector (other than by using the Iterator remove method) when one is iterating over the Vector using an Iterator,

is thrown. However, when using an Enumeration this is not a case, as mentioned. This can result in non-deterministic results from a program because the Vector is left in an inconsistent state from the perspective of the Enumeration. For legacy programs that still use the Enumeration interface, one may wish to enforce that Enumerations are not used when their underlying Vector is modified. The following parametric regular pattern can be used to enforce this behavior: :∀ Vector ''v'', Enumeration ''e'': (''e'' = ''v''.elements()) (''e''.nextElement())* ''v''.update() ''e''.nextElement() This pattern is parametric in both the Enumeration and the Vector. Intuitively, and as above runtime verification systems need not implement their parametric monitors this way, one may think of the parametric monitor for this property as creating and keeping track of a non-parametric monitor instance for each possible pair of Vector and Enumeration. Some events may concern several monitors at the same time, such as

v.update(), so the runtime verification system must (again conceptually) dispatch them to all interested monitors. Here the property is specified so that it states the bad behaviors of the program. This property, then, must be monitored for the match of the pattern. The figure to the right shows Java code that matches this pattern, thus violating the property. The Vector, v, is updated after the Enumeration, e, is created, and e is then used.

SafeLock

The previous two examples show finite state properties, but properties used in runtime verification may be much more complex. The SafeLock property enforces the policy that the number of acquires and releases of a (reentrant) Lock class are matched within a given method call. This, of course, disallows release of Locks in methods other than the ones that acquire them, but this is very possibly a desirable goal for the tested system to achieve. Below is a specification of this property using a parametric context-free pattern: :∀ Thread ''t'', Lock ''l'': ''S''→ε , ''S'' begin(''t'') ''S'' end(''t'') , ''S'' ''l''.acquire(''t'') ''S'' ''l''.release(''t'') The pattern specifies balanced sequences of nested begin/end and acquire/release pairs for each Thread and Lock ( is the empty sequence). Here begin and end refer to the begin and end of every method in the program (except the calls to acquire and release themselves). They are parametric in the Thread because it is necessary to associate the beginning and end of methods if and only if they belong to the same Thread. The acquire and release events are also parametric in the Thread for the same reason. They are, additionally, parametric in Lock because we do not wish to associate the releases of one Lock with the acquires of another. In the extreme, it is possible that there will be an instance of the property, i.e., a copy of the context-free parsing mechanism, for each possible combination of Thread with Lock; this happens, again, intuitively, because runtime verification systems may implement the same functionality differently. For example, if a system has Threads , , and with Locks and , then it is possible to have to maintain property instances for the pairs <,>, <,>, <,>, <,>, <,>, and <,>. This property should be monitored for failures to match the pattern because the pattern specified correct behavior. The figure to the right shows a trace that produces two violations of this property. The steps down in the figure represent the beginning of a method, while the steps up are the end. The grey arrows in the figure show the matching between given acquires and releases of the same Lock. For simplicity, the trace shows only one Thread and one Lock.

The pattern specifies balanced sequences of nested begin/end and acquire/release pairs for each Thread and Lock ( is the empty sequence). Here begin and end refer to the begin and end of every method in the program (except the calls to acquire and release themselves). They are parametric in the Thread because it is necessary to associate the beginning and end of methods if and only if they belong to the same Thread. The acquire and release events are also parametric in the Thread for the same reason. They are, additionally, parametric in Lock because we do not wish to associate the releases of one Lock with the acquires of another. In the extreme, it is possible that there will be an instance of the property, i.e., a copy of the context-free parsing mechanism, for each possible combination of Thread with Lock; this happens, again, intuitively, because runtime verification systems may implement the same functionality differently. For example, if a system has Threads , , and with Locks and , then it is possible to have to maintain property instances for the pairs <,>, <,>, <,>, <,>, <,>, and <,>. This property should be monitored for failures to match the pattern because the pattern specified correct behavior. The figure to the right shows a trace that produces two violations of this property. The steps down in the figure represent the beginning of a method, while the steps up are the end. The grey arrows in the figure show the matching between given acquires and releases of the same Lock. For simplicity, the trace shows only one Thread and one Lock.

Research challenges and applications

Most of the runtime verification research addresses one or more of the topics listed below.Reducing runtime overhead

Observing an executing system typically incurs some runtime overhead (hardware monitors may make an exception). It is important to reduce the overhead of runtime verification tools as much as possible, particularly when the generated monitors are deployed with the system. Runtime overhead reducing techniques include: * ''Improved instrumentation''. Extracting events from the executing system and sending them to monitors can generate a large runtime overhead if done naively. Good system instrumentation is critical for any runtime verification tool, unless the tool explicitly targets existing execution logs. There are many instrumentation approaches in current use, each with its advantages and disadvantages, ranging from custom or manual instrumentation, to specialized libraries, to compilation into aspect-oriented languages, to augmenting the virtual machine, to building upon hardware support. * ''Combination with static analysis''. A common combination of static and dynamic analyses, particularly encountered in compilers, is to monitor all the requirements that cannot be discharged statically. A dual and ultimately equivalent approach tends to become the norm in runtime verification, namely to usestatic analysis

Static analysis, static projection, or static scoring is a simplified analysis wherein the effect of an immediate change to a system is calculated without regard to the longer-term response of the system to that change. If the short-term effect i ...

to reduce the amount of otherwise exhaustive monitoring. Static analysis can be performed both on the property to monitor and on the system to be monitored. Static analysis of the property to monitor can reveal that certain events are unnecessary to monitor, that the creation of certain monitors can be delayed, and that certain existing monitors will never trigger and thus can be garbage collected. Static analysis of the system to monitor can detect code that can never influence the monitors. For example, when monitoring the HasNext property above, one needs not instrument portions of code where each call i.next() is immediately preceded on any path by a call i.hasnext() that returns true (visible on the control-flow graph).

* ''Efficient monitor generation and management''. When monitoring parametric properties like the ones in the examples above, the monitoring system needs to keep track of the status of the monitored property with respect to each parameter instance. The number of such instances is theoretically unbounded and tends to be enormous in practice. An important research challenge is how to efficiently dispatch observed events to precisely those instances that need them. A related challenge is how to keep the number of such instances small (so that dispatching is faster), or in other words, how to avoid creating unnecessary instances for as long as possible and, dually, how to remove already created instances as soon as they become unnecessary. Finally, parametric monitoring algorithms typically generalize similar algorithms for generating non-parametric monitors. Thus, the quality of the generated non-parametric monitors dictates the quality of the resulting parametric monitors. However, unlike in other verification approaches (e.g., model checking), the number of states or the size of the generated monitor is less important in runtime verification; in fact, some monitors can have infinitely many states, such as the one for the SafeLock property above, although at any point in time only a finite number of states may have occurred. What is important is how efficiently the monitor transits from a state to its next state when it receives an event from the executing system.

Specifying properties

One of the major practical impediments of all formal approaches is that their users are reluctant to, or don't know and don't want to learn how to read or write specifications. In some cases the specifications are implicit, such as those for deadlocks and data-races, but in most cases they need to be produced. An additional inconvenience, particularly in the context of runtime verification, is that many existing specification languages are not expressive enough to capture the intended properties. * ''Better formalisms.'' A significant amount of work in the runtime verification community has been put into designing specification formalisms that fit the desired application domains for runtime verification better than the conventional specification formalisms. Some of these consist of slight or no syntactic changes to the conventional formalisms, but only of changes to their semantics (e.g., finite trace versus infinite trace semantics, etc.) and to their implementation (optimized finite-state machines instead of Büchi automata, etc.). Others extend existing formalisms with features that are amenable for runtime verification but may not easily be for other verification approaches, such as adding parameters, as seen in the examples above. Finally, there are specification formalisms that have been designed specifically for runtime verification, attempting to achieve their best for this domain and caring little about other application domains. Designing universally better or domain-specifically better specification formalisms for runtime verification is and will continue to be one of its major research challenges. * ''Quantitative properties.'' Compared to other verification approaches, runtime verification is able to operate on concrete values of system state variables, which makes it possible to collect statistical information about the program execution and use this information to assess complex quantitative properties. More expressive property languages that will allow us to fully utilize this capability are needed. * ''Better interfaces.'' Reading and writing property specifications is not easy for non-experts. Even experts often stare for minutes at relatively small temporal logic formulae (particularly when they have nested "until" operators). An important research area is to develop powerful user interfaces for various specification formalisms that would allow users to more easily understand, write and maybe even visualize properties. * ''Mining specifications.'' No matter what tool support is available to help users produce specifications, they will almost always be more pleased to have to write no specifications at all, particularly when they are trivial. Fortunately, there are plenty of programs out there making supposedly correct use of the actions/events that one wants to have properties about. If that is the case, then it is conceivable that one would like to make use of those correct programs by automatically learning from them the desired properties. Even if the overall quality of the automatically mined specifications is expected to be lower than that of manually produced specifications, they can serve as a start point for the latter or as the basis for automatic runtime verification tools aimed specifically at finding bugs (where a poor specification turns into false positives or negatives, often acceptable during testing).Execution models and predictive analysis

The capability of a runtime verifier to detect errors strictly depends on its capability to analyze execution traces. When the monitors are deployed with the system, instrumentation is typically minimal and the execution traces are as simple as possible to keep the runtime overhead low. When runtime verification is used for testing, one can afford more comprehensive instrumentations that augment events with important system information that can be used by the monitors to construct and therefore analyze more refined models of the executing system. For example, augmenting events withVector clock

A vector clock is a data structure used for determining the partial ordering of events in a distributed system and detecting causality violations. Just as in Lamport timestamps, inter-process messages contain the state of the sending process's ...

information and with data and control flow information allows the monitors to construct a ''causal model'' of the running system in which the observed execution was only one possible instance. Any other permutation of events that is consistent with the model is a feasible execution of the system, which could happen under a different thread interleaving. Detecting property violations in such inferred executions (by monitoring them) makes the monitor ''predict'' errors that did not happen in the observed execution, but which can happen in another execution of the same system. An important research challenge is to extract models from execution traces that comprise as many other execution traces as possible.

Behavior modification

Unlike testing or exhaustive verification, runtime verification holds the promise to allow the system to recover from detected violations, through reconfiguration, micro-resets, or through finer intervention mechanisms sometimes referred to as tuning or steering. Implementation of these techniques within the rigorous framework of runtime verification gives rise to additional challenges. * ''Specification of actions.'' One needs to specify the modification to be performed in an abstract enough fashion that does not require the user to know irrelevant implementation details. In addition, when such a modification can take place needs to be specified in order to maintain the integrity of the system. * ''Reasoning about intervention effects.'' It is important to know that an intervention improves the situation, or at least does not make the situation worse. * ''Action interfaces.'' Similar to the instrumentation for monitoring, we need to enable the system to receive action invocations. Invocation mechanisms are by necessity going to be dependent on the implementation details of the system. However, at the specification level, we need to provide the user with a declarative way of providing feedback to the system by specifying what actions should be applied when under what conditions.Related work

Aspect-oriented programming

Researchers in Runtime Verification recognized the potential for usingAspect-oriented Programming

In computing, aspect-oriented programming (AOP) is a programming paradigm that aims to increase modularity by allowing the separation of cross-cutting concerns. It does so by adding behavior to existing code (an advice) ''without'' modifying t ...

as a technique for defining program instrumentation in a modular way. Aspect-oriented programming (AOP) generally promotes the modularization of crosscutting concerns. Runtime Verification naturally is one such concern and can hence benefit from certain properties of AOP. Aspect-oriented monitor definitions are largely declarative, and hence tend to be simpler to reason about than instrumentation expressed through a program transformation

A program transformation is any operation that takes a computer program and generates another program. In many cases the transformed program is required to be semantically equivalent to the original, relative to a particular Formal semantics of p ...

written in an imperative programming language. Further, static analyses can reason about monitoring aspects more easily than about other forms of program instrumentation, as all instrumentation is contained within a single aspect. Many current runtime verification tools are hence built in the form of specification compilers, that take an expressive high-level specification as input and produce as output code written in some Aspect-oriented programming language (such as AspectJ

AspectJ is an aspect-oriented programming (AOP) extension for the Java programming language, created at PARC. It is available in Eclipse Foundation open-source projects, both stand-alone and integrated into Eclipse. AspectJ has become a widely ...

).

Combination with formal verification

Runtime verification, if used in combination with provably correct recovery code, can provide an invaluable infrastructure for program verification, which can significantly lower the latter's complexity. For example, formally verifying heap-sort algorithm is very challenging. One less challenging technique to verify it is to monitor its output to be sorted (a linear complexity monitor) and, if not sorted, then sort it using some easily verifiable procedure, say insertion sort. The resulting sorting program is now more easily verifiable, the only thing being required from heap-sort is that it does not destroy the original elements regarded as a multiset, which is much easier to prove. Looking at from the other direction, one can use formal verification to reduce the overhead of runtime verification, as already mentioned above for static analysis instead of formal verification. Indeed, one can start with a fully runtime verified, but probably slow program. Then one can use formal verification (or static analysis) to discharge monitors, same way a compiler uses static analysis to discharge runtime checks of type correctness ormemory safety

Memory safety is the state of being protected from various software bugs and security vulnerabilities when dealing with memory access, such as buffer overflows and dangling pointers. For example, Java is said to be memory-safe because its ru ...

.

Increasing coverage

Compared to the more traditional verification approaches, an immediate disadvantage of runtime verification is its reduced coverage. This is not problematic when the runtime monitors are deployed with the system (together with appropriate recovery code to be executed when the property is violated), but it may limit the effectiveness of runtime verification when used to find errors in systems. Techniques to increase the coverage of runtime verification for error detection purposes include: * ''Input generation.'' It is well known that generating a good set of inputs (program input variable values, system call values, thread schedules, etc.) can enormously increase the effectiveness of testing. That holds true for runtime verification used for error detection, too, but in addition to using the program code to drive the input generation process, in runtime verification one can also use the property specifications, when available, and can also use monitoring techniques to induce desired behaviors. This use of runtime verification makes it closely related to model-based testing, although the runtime verification specifications are typically general purpose, not necessarily crafted for testing reasons. Consider, for example, that one wants to test the general-purpose ''UnsafeEnum'' property above. Instead of just generating the above-mentioned monitor to passively observe the system execution, one can generate a smarter monitor that freezes the thread attempting to generate the second ''e.nextElement()'' event (right before it generates it), letting the other threads execute in a hope that one of them may generate a ''v.update()'' event, in which case an error has been found. * ''Dynamic symbolic execution.'' In symbolic execution programs are executed and monitored symbolically, that is, without concrete inputs. One symbolic execution of the system may cover a large set of concrete inputs. Off-the-shelf constraint solving or satisfiability checking techniques are often used to drive symbolic executions or to systematically explore their space. When the underlying satisfiability checkers cannot handle a choice point, then a concrete input can be generated to pass that point; this combination of ''conc''rete and symb''olic'' execution is also referred to as concolic execution.See also

*Dynamic program analysis

Dynamics (from Greek δυναμικός ''dynamikos'' "powerful", from δύναμις ''dynamis'' " power") or dynamic may refer to:

Physics and engineering

* Dynamics (mechanics), the study of forces and their effect on motion

Brands and en ...

* Profiling (computer programming)

In software engineering, profiling (program profiling, software profiling) is a form of dynamic program analysis that measures, for example, the space (memory) or time complexity of a program, the usage of particular instructions, or the freque ...

* Runtime error detection

* Runtime application self-protection

Runtime application self-protection (RASP) is a security technology that uses runtime instrumentation to detect and block computer attacks by taking advantage of information from inside the running software. The technology differs from perimeter- ...

(RASP)

References

{{Reflist Formal methods Logic in computer science