''Robust Regression and Outlier Detection'' is a book on robust statistics, particularly focusing on the breakdown point of methods for robust regression. It was written by Peter Rousseeuw and Annick M. Leroy, and published in 1987 by Wiley.

Background

Linear regression

In statistics, linear regression is a linear approach for modelling the relationship between a scalar response and one or more explanatory variables (also known as dependent and independent variables). The case of one explanatory variable is call ...

is the problem of inferring a linear functional relationship between a dependent variable

Dependent and independent variables are variables in mathematical modeling, statistical modeling and experimental sciences. Dependent variables receive this name because, in an experiment, their values are studied under the supposition or demand ...

and one or more independent variables

Dependent and independent variables are variables in mathematical modeling, statistical modeling and experimental sciences. Dependent variables receive this name because, in an experiment, their values are studied under the supposition or demand ...

, from data sets where that relation has been obscured by noise. Ordinary least squares

In statistics, ordinary least squares (OLS) is a type of linear least squares method for choosing the unknown parameters in a linear regression model (with fixed level-one effects of a linear function of a set of explanatory variables) by the prin ...

assumes that the data all lie near the fit line or plane, but depart from it by the addition of normally distributed residual values. In contrast, robust regression methods work even when some of the data points are outlier

In statistics, an outlier is a data point that differs significantly from other observations. An outlier may be due to a variability in the measurement, an indication of novel data, or it may be the result of experimental error; the latter are ...

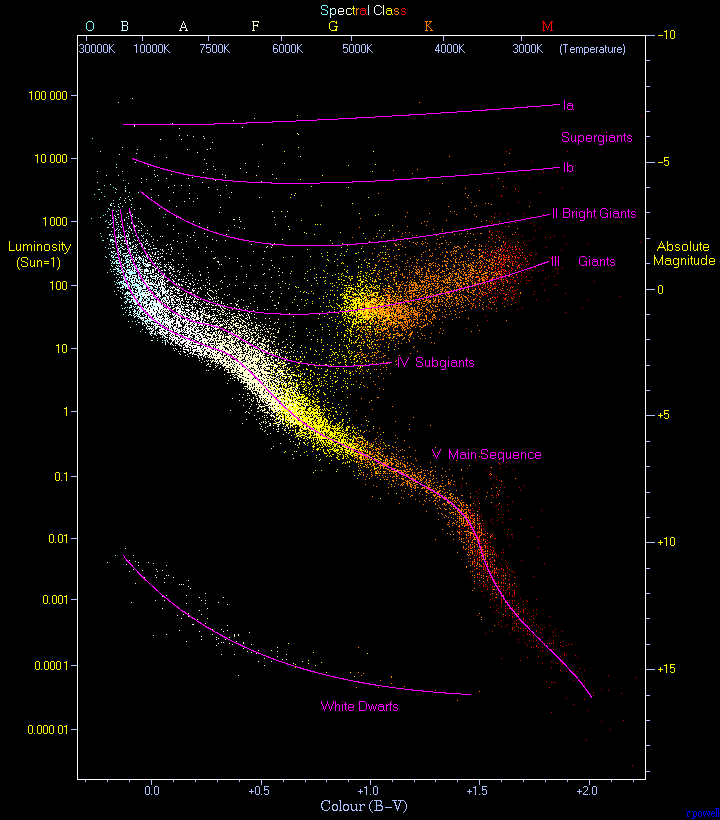

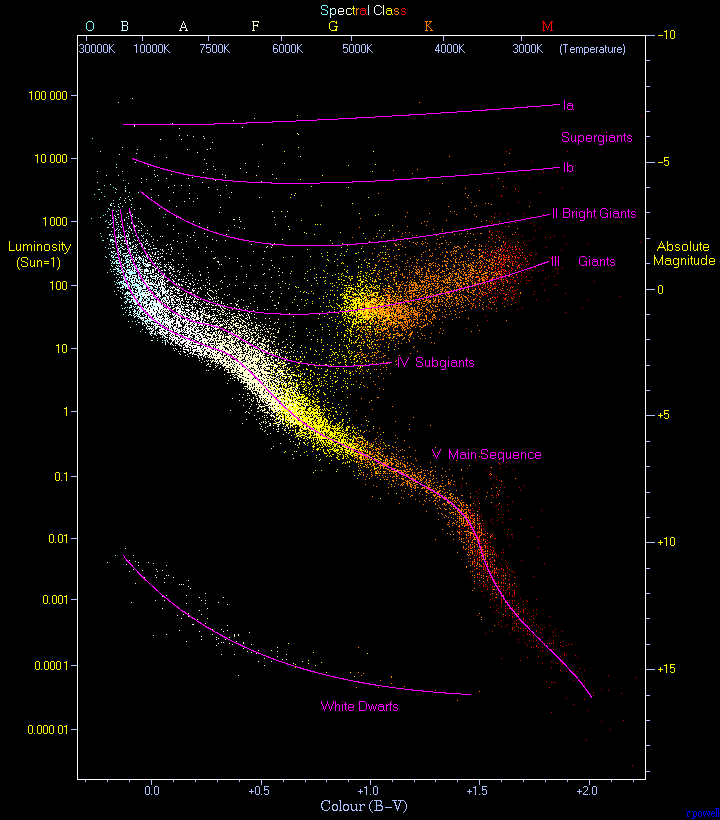

s that bear no relation to the fit line or plane, possibly because the data draws from a mixture of sources or possibly because an adversarial agent is trying to corrupt the data to cause the regression method to produce an inaccurate result. A typical application, discussed in the book, involves the Hertzsprung–Russell diagram

The Hertzsprung–Russell diagram, abbreviated as H–R diagram, HR diagram or HRD, is a scatter plot of stars showing the relationship between the stars' absolute magnitudes or luminosity, luminosities versus their stellar classifications or eff ...

of star types, in which one wishes to fit a curve through the main sequence

In astronomy, the main sequence is a continuous and distinctive band of stars that appears on plots of stellar color versus brightness. These color-magnitude plots are known as Hertzsprung–Russell diagrams after their co-developers, Ejnar Her ...

of stars without the fit being thrown off by the outlying giant star

A giant star is a star with substantially larger radius and luminosity than a main sequence, main-sequence (or ''dwarf'') star of the same effective temperature, surface temperature.Giant star, entry in ''Astronomy Encyclopedia'', ed. Patrick Moo ...

s and white dwarf

A white dwarf is a stellar core remnant composed mostly of electron-degenerate matter. A white dwarf is very dense: its mass is comparable to the Sun's, while its volume is comparable to the Earth's. A white dwarf's faint luminosity comes fro ...

s. The ''breakdown point'' of a robust regression method is the fraction of outlying data that it can tolerate while remaining accurate. For this style of analysis, higher breakdown points are better. The breakdown point for ordinary least squares is near zero (a single outlier can make the fit become arbitrarily far from the remaining uncorrupted data) while some other methods have breakdown points as high as 50%. Although these methods require few assumptions about the data, and work well for data whose noise is not well understood, they may have somewhat lower efficiency than ordinary least squares (requiring more data for a given accuracy of fit) and their implementation may be complex and slow.
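The claim that ordinary least squares has a breakdown point near zero can be illustrated with a small numerical sketch. The data, the line y = 2x + 1, and the helper name `ols_slope` are assumptions made up for this illustration; they are not taken from the book.

```python
import numpy as np

def ols_slope(x, y):
    """Return the ordinary least squares slope of y regressed on x."""
    slope, _intercept = np.polyfit(x, y, deg=1)
    return slope

# Illustrative clean data lying exactly on the line y = 2x + 1.
x = np.arange(20, dtype=float)
y = 2.0 * x + 1.0

# Corrupt only the last observation, by larger and larger amounts.
shifts = [0.0, 1e2, 1e4, 1e6]
slopes = []
for shift in shifts:
    y_bad = y.copy()
    y_bad[-1] += shift
    slopes.append(ols_slope(x, y_bad))

for shift, slope in zip(shifts, slopes):
    print(f"outlier shift {shift:>9.0f} -> OLS slope {slope:.3f}")
```

Because the fitted slope is a linear function of each response value, moving one point far enough moves the slope as far as desired, even though the other 19 points still lie exactly on the original line.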

Topics

The book has seven chapters. The first is introductory; it describes simple linear regression (in which there is only one independent variable), discusses the possibility of outliers that corrupt either the dependent or the independent variable, provides examples in which outliers produce misleading results, defines the breakdown point, and briefly introduces several methods for robust simple regression, including repeated median regression. The second and third chapters analyze in more detail the least median of squares method for regression (in which one seeks a fit that minimizes the median of the squared residuals) and the least trimmed squares method (in which one seeks to minimize the sum of the squared residuals that are below the median). These two methods both have breakdown point 50% and can be applied for both simple regression (chapter two) and multivariate regression (chapter three). Although the least median has an appealing geometric description (as finding a strip of minimum height containing half the data), its low efficiency leads to the recommendation that the least trimmed squares be used instead; least trimmed squares can also be interpreted as using the least median method to find and eliminate outliers and then using simple regression for the remaining data, and approaches simple regression in its efficiency. As well as describing these methods and analyzing their statistical properties, these chapters also describe how to use the authors' software for implementing these methods. The third chapter also includes descriptions of some alternative estimators with high breakdown points.
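The two criteria described above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not the authors' software: `lms_line` only approximates the least median of squares fit by trying every line through two data points, and `trimmed_refit` performs a single least squares refit on the `h` points with the smallest residuals (with `h` chosen here as just over half the sample) rather than searching over all subsets. The function names, the synthetic data, and the choice of `h` are all hypothetical.

```python
import itertools
import numpy as np

def lms_line(x, y):
    """Approximate least median of squares fit for simple regression.

    Tries every line through two data points and keeps the one whose
    squared residuals have the smallest median.
    """
    best = None
    for i, j in itertools.combinations(range(len(x)), 2):
        if x[i] == x[j]:
            continue  # a vertical line cannot be written as y = a*x + b
        slope = (y[j] - y[i]) / (x[j] - x[i])
        intercept = y[i] - slope * x[i]
        crit = np.median((y - (slope * x + intercept)) ** 2)
        if best is None or crit < best[0]:
            best = (crit, slope, intercept)
    return best[1], best[2]

def trimmed_refit(x, y, slope, intercept):
    """Least squares on the h points closest to a given starting line."""
    h = len(x) // 2 + 1  # assumed coverage: just over half the data
    resid2 = (y - (slope * x + intercept)) ** 2
    keep = np.argsort(resid2)[:h]
    new_slope, new_intercept = np.polyfit(x[keep], y[keep], deg=1)
    return new_slope, new_intercept

# Synthetic data: 28 points near y = 2x + 1, plus 12 outliers (30%).
rng = np.random.default_rng(0)
x = np.linspace(0.0, 10.0, 40)
y = 2.0 * x + 1.0 + rng.normal(scale=0.2, size=x.size)
y[-12:] = -5.0 + rng.normal(scale=0.2, size=12)

ols_slope, ols_intercept = np.polyfit(x, y, deg=1)
lms_slope, lms_intercept = lms_line(x, y)
lts_slope, lts_intercept = trimmed_refit(x, y, lms_slope, lms_intercept)

print(f"OLS slope:           {ols_slope:.3f}")
print(f"LMS slope (approx.): {lms_slope:.3f}")
print(f"trimmed refit slope: {lts_slope:.3f}")
```

The refit step mirrors the interpretation given above: the least median fit is used to set aside the points with large residuals, and ordinary least squares is then applied to what remains.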

The fourth chapter describes one-dimensional estimation of a location parameter or central tendency and its software implementation, and the fifth chapter goes into more detail about the algorithms used by the software to compute these estimates efficiently. The sixth chapter concerns outlier detection, comparing methods for identifying data points as outliers based on robust statistics with other widely used methods, and the final chapter concerns higher-dimensional location problems as well as time series analysis and problems of fitting an ellipsoid or covariance matrix to data. As well as using the breakdown point to compare statistical methods, the book also looks at their equivariance: for which families of data transformations does the fit for transformed data equal the transformed version of the fit for the original data?
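To make the contrast between robust and classical outlier detection concrete in the one-dimensional location setting, the following sketch flags points by their standardized distance from a center. The classical rule uses the mean and standard deviation; the robust rule uses the median and the median absolute deviation (MAD). The specific estimators, the cutoff of 2.5, the function names, and the sample values are assumptions chosen for illustration and are not claimed to be the book's procedure; the factor 1.4826 is the usual constant that makes the MAD consistent with the standard deviation for normal data.

```python
import numpy as np

def classical_flags(values, cutoff=2.5):
    """Flag points more than `cutoff` standard deviations from the mean."""
    values = np.asarray(values, dtype=float)
    z = (values - values.mean()) / values.std()
    return np.abs(z) > cutoff

def robust_flags(values, cutoff=2.5):
    """Flag points more than `cutoff` scaled MADs from the median."""
    values = np.asarray(values, dtype=float)
    center = np.median(values)
    mad = np.median(np.abs(values - center))
    scale = 1.4826 * mad  # consistency factor for normal data
    return np.abs(values - center) / scale > cutoff

# Eight readings near 10 and two gross errors (made-up values).
sample = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.7, 10.3, 50.0, 52.0]

classical = classical_flags(sample)
robust = robust_flags(sample)

print("classical rule flags indices:", np.flatnonzero(classical).tolist())
print("robust rule flags indices:   ", np.flatnonzero(robust).tolist())
```

In this sample the two gross errors inflate the mean and standard deviation enough to hide themselves from the classical rule, while the median and MAD are barely affected by them.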

In keeping with the book's focus on applications, it features many examples of analyses done using robust methods, comparing the resulting estimates with the estimates obtained by standard non-robust methods. Theoretical material is included, but set aside so that it can be easily skipped over by less theoretically inclined readers. The authors take the position that robust methods can be used both to check the applicability of ordinary regression (when the results of both methods agree) and to supplant it in cases where the results disagree.

Audience and reception

The book is aimed at applied statisticians, with the goal of convincing them to use the robust methods that it describes. Unlike previous work in robust statistics, it makes robust methods both understandable by and (through its associated software) available to practitioners. No prior knowledge of robust statistics is required, although some background in basic statistical techniques is assumed. The book could also be used as a textbook, although reviewer P. J. Laycock calls the possibility of such a use "bold and progressive" and reviewers Seheult and Green point out that such a course would be unlikely to fit into British statistical curricula.

Reviewers Seheult and Green complain that too much of the book acts as a user guide to the authors' software, and should have been trimmed. However, reviewer Gregory F. Piepel writes that "the presentation is very good", and he recommends the book to any user of statistical methods. While suggesting the reordering of some material, Karen Kafadar strongly recommends the book as a textbook for graduate students and a reference for professionals. Reviewer A. C. Atkinson concisely summarizes the book as "interesting and important".

Related books

There have been multiple previous books on robust regression and outlier detection, including:

*''Identification of Outliers'' by D. M. Hawkins (1980)
*''Robust Statistics'' by Peter J. Huber (1981)
*''Introduction to Robust and Quasi-Robust Statistical Methods'' by W. J. J. Rey (1983)
*''Understanding Robust and Exploratory Data Analysis'' by David C. Hoaglin, Frederick Mosteller, and John Tukey (1983)
*''Robust Statistics'' by Hampel, Ronchetti, Rousseeuw, and Stahel (1986)

In comparison, ''Robust Regression and Outlier Detection'' combines both robustness and the detection of outliers. It is less theoretical, more focused on data and software, and more focused on the breakdown point than on other measures of robustness. Additionally, it is the first to highlight the importance of "leverage", the phenomenon that samples with outlying values of the independent variable can have a stronger influence on the fit than samples where the independent variable has a central value.
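The leverage phenomenon can be shown numerically. One classical way to quantify it (used here as an assumption for illustration, not as the book's own diagnostic) is the diagonal of the hat matrix, which for simple regression with an intercept equals 1/n + (x_i - mean(x))^2 / sum_j (x_j - mean(x))^2. The data and the helper names `leverages` and `slope_change` are hypothetical.

```python
import numpy as np

def leverages(x):
    """Hat-matrix diagonal for simple regression with an intercept."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    return 1.0 / x.size + centered**2 / np.sum(centered**2)

def slope_change(x, y, index, shift):
    """Absolute change in the OLS slope when y[index] is moved by `shift`."""
    y_shifted = y.copy()
    y_shifted[index] += shift
    before = np.polyfit(x, y, deg=1)[0]
    after = np.polyfit(x, y_shifted, deg=1)[0]
    return abs(after - before)

# Five clustered x-values and one far from the rest (made-up data).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 20.0])
y = 0.5 * x + 1.0

h = leverages(x)
change_mid = slope_change(x, y, index=3, shift=5.0)  # central x-value
change_far = slope_change(x, y, index=5, shift=5.0)  # outlying x-value

print("leverages:", np.round(h, 3).tolist())
print(f"slope change, central point:  {change_mid:.4f}")
print(f"slope change, outlying point: {change_far:.4f}")
```

The same vertical perturbation changes the fitted slope far more when it is applied at the point whose independent variable lies far from the others.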

References

*Atkinson, A. C. (June 1988), "Review of ''Robust Statistics'' and ''Robust Regression and Outlier Detection''", ''Biometrics'', 44 (2): 626–627, doi:10.2307/2531877, JSTOR 2531877
*Kafadar, Karen (June 1989), "Review of ''Robust Regression and Outlier Detection''", ''Journal of the American Statistical Association'', 84 (406): 617–618, doi:10.2307/2289958, JSTOR 2289958
*Laycock, P. J. (1989), "Review of ''Robust Regression and Outlier Detection''", ''Journal of the Royal Statistical Society, Series D (The Statistician)'', 38 (2): 138, doi:10.2307/2348319, JSTOR 2348319
*Piepel, Gregory F. (May 1989), "Review of ''Robust Regression and Outlier Detection''", ''Technometrics'', 31 (2): 260–261, doi:10.2307/1268828, JSTOR 1268828
*Seheult, A. H.; Green, P. J. (1989), "Review of ''Robust Regression and Outlier Detection''", ''Journal of the Royal Statistical Society, Series A (Statistics in Society)'', 152 (1): 133–134, doi:10.2307/2982847, JSTOR 2982847
*Sonnberger, Harold (July–September 1989), "Review of ''Robust Regression and Outlier Detection''", ''Journal of Applied Econometrics'', 4 (3): 309–311, JSTOR 2096530
*Weisberg, Stanford (July–August 1989), "Review of ''Robust Regression and Outlier Detection''", ''American Scientist'', 77 (4): 402–403, JSTOR 27855903
*Yohai, V. J. (1989), "Review of ''Robust Regression and Outlier Detection''", ''Mathematical Reviews'' and ''zbMATH'', MR 0914792, Zbl 0711.62030