Q-value (statistics)

In statistical hypothesis testing, specifically multiple hypothesis testing, the ''q''-value in the Storey-Tibshirani procedure provides a means to control the positive false discovery rate (pFDR). Just as the ''p''-value gives the expected false positive rate obtained by rejecting the null hypothesis for any result with an equal or smaller ''p''-value, the ''q''-value gives the expected pFDR obtained by rejecting the null hypothesis for any result with an equal or smaller ''q''-value.

History

In statistics, testing multiple hypotheses simultaneously using methods appropriate for testing single hypotheses tends to yield many false positives: the so-called multiple comparisons problem. For example, assume that one were to test 1,000 null hypotheses, all of which are true, and (as is conventional in single hypothesis testing) to reject null hypotheses with a significance level of 0.05; due to random chance, one would expect 5% of the results to appear significant (''P'' < 0.05), yielding 50 false positives (rejections of the null hypothesis).

Since the 1950s, statisticians had been developing methods for multiple comparisons that reduced the number of false positives, such as controlling the family-wise error rate (FWER) using the Bonferroni correction, but these methods also increased the number of false negatives (i.e. reduced the statistical power). In 1995, Yoav Benjamini and Yosef Hochberg proposed controlling the false discovery rate (FDR) as a more statistically powerful alternative to controlling the FWER in multiple hypothesis testing. The pFDR and the ''q''-value were introduced by John D. Storey in 2002 in order to improve upon a limitation of the FDR, namely that the FDR is not defined when there are no positive results.
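The 1,000-test arithmetic in the example above can be checked with a short simulation. This is a minimal Python sketch, assuming independent tests whose ''p''-values are exactly Uniform(0, 1) under a true null (as holds for continuous test statistics); the function name `simulate_true_nulls` and the seed are illustrative choices, not part of any library. It also computes the Bonferroni family-wise error rate, which stays just under 0.05 for independent tests.

```python
import random


def simulate_true_nulls(m=1000, alpha=0.05, seed=0):
    """Count rejections among m true null hypotheses.

    Assumes independent tests with continuous statistics, so each p-value
    is Uniform(0, 1) and can be drawn directly.
    """
    rng = random.Random(seed)
    pvalues = [rng.random() for _ in range(m)]
    uncorrected = sum(1 for p in pvalues if p < alpha)     # expected m * alpha
    bonferroni = sum(1 for p in pvalues if p < alpha / m)  # FWER-controlling threshold
    return uncorrected, bonferroni


m, alpha = 1000, 0.05
expected_false_positives = m * alpha           # 50 false positives on average
bonferroni_fwer = 1 - (1 - alpha / m) ** m     # about 0.049 for independent tests
print(expected_false_positives, bonferroni_fwer)
print(simulate_true_nulls(m, alpha, seed=1))
```

Each run gives a random count near 50 for the uncorrected rule, while the Bonferroni threshold of 0.05/1,000 almost always rejects nothing.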

Definition

Let there be a null hypothesis $H_0$ and an alternative hypothesis $H_1$. Perform $m$ hypothesis tests; let the test statistics $T_1, \ldots, T_m$ be i.i.d. random variables such that $T_i \mid H_i \sim (1 - H_i) \cdot F_0 + H_i \cdot F_1$. That is, if $H_0$ is true for test $i$ ($H_i = 0$), then $T_i$ follows the null distribution $F_0$; while if $H_1$ is true ($H_i = 1$), then $T_i$ follows the alternative distribution $F_1$. Let $H_i \sim \mathrm{Bernoulli}(\pi_1)$, that is, for each test, $H_1$ is true with probability $\pi_1$ and $H_0$ is true with probability $\pi_0 = 1 - \pi_1$. Denote the critical region (the values of $T_i$ for which $H_0$ is rejected) at significance level $\alpha$ by $\Gamma_\alpha$. Let an experiment yield a value $t$ for the test statistic. The ''q''-value of $t$ is formally defined as

$$q(t) = \inf_{\{\Gamma_\alpha :\, t \in \Gamma_\alpha\}} \mathrm{pFDR}(\Gamma_\alpha)$$

That is, the ''q''-value is the infimum of the pFDR if $H_0$ is rejected for test statistics with values $\geq t$. Equivalently, the ''q''-value equals

$$q(t) = \inf_{\{\Gamma_\alpha :\, t \in \Gamma_\alpha\}} \Pr(H = 0 \mid T \in \Gamma_\alpha)$$

which is the infimum of the probability that $H_0$ is true given that $H_0$ is rejected (the false discovery rate).
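To make the definition concrete, the following minimal Python sketch computes the theoretical ''q''-value under hypothetical choices that are not in the text: null distribution $F_0 = N(0, 1)$, alternative $F_1 = N(\mu_1, 1)$ with $\mu_1 = 2$, $\pi_0 = 0.9$, and upper-tail critical regions $[c, \infty)$. Under the mixture model the pFDR of a region is $\Pr(H = 0 \mid T \geq c)$, obtained from Bayes' rule; the function names are illustrative only.

```python
import math


def normal_sf(x, mean=0.0, sd=1.0):
    """Upper-tail probability P(X >= x) for X ~ Normal(mean, sd^2)."""
    return 0.5 * math.erfc((x - mean) / (sd * math.sqrt(2.0)))


def pfdr_upper_tail(c, pi0, mu1):
    """pFDR of the region [c, inf) under the assumed two-normal mixture.

    Pr(H = 0 | T >= c) = pi0*S0(c) / (pi0*S0(c) + pi1*S1(c)),
    where S0 and S1 are the null and alternative upper-tail probabilities.
    """
    null_part = pi0 * normal_sf(c)
    alt_part = (1.0 - pi0) * normal_sf(c, mean=mu1)
    return null_part / (null_part + alt_part)


def q_value(t, pi0, mu1, lower=-10.0, steps=2000):
    """Approximate q(t) = inf of pFDR over regions [c, inf) that contain t.

    A region [c, inf) contains t exactly when c <= t, so thresholds c are
    scanned on a grid from `lower` up to t and the smallest pFDR is kept.
    """
    grid = [lower + (t - lower) * k / steps for k in range(steps + 1)]
    return min(pfdr_upper_tail(c, pi0, mu1) for c in grid)


print(q_value(2.0, pi0=0.9, mu1=2.0))  # about 0.29
print(q_value(3.0, pi0=0.9, mu1=2.0))  # about 0.07
```

For a normal location shift the pFDR of $[c, \infty)$ decreases as $c$ grows, so the infimum is attained at $c = t$; the grid scan is kept only to mirror the "infimum over all regions containing $t$" in the definition.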

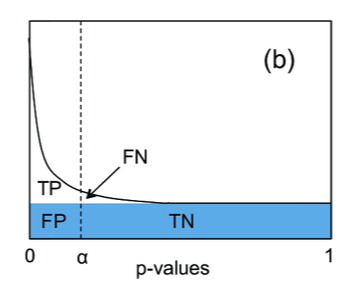

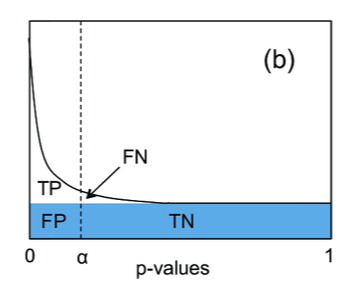

Relationship to the ''p''-value

The ''p''-value is defined as

$$p(t) = \inf_{\{\Gamma_\alpha :\, t \in \Gamma_\alpha\}} \Pr(T \in \Gamma_\alpha \mid H = 0),$$

the infimum of the probability that $H_0$ is rejected given that $H_0$ is true (the false positive rate). Comparing the definitions of the ''p''- and ''q''-values, it can be seen that the ''q''-value is the minimum posterior probability that $H_0$ is true.
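Bayes' rule makes the link explicit. The derivation below is a sketch for a single critical region $\Gamma_\alpha$, assuming the mixture model with prior $\Pr(H = 0) = \pi_0$ and $\pi_1 = 1 - \pi_0$; it rewrites the posterior probability that appears inside the ''q''-value in terms of the false positive probability that appears inside the ''p''-value:

```latex
\begin{align*}
\Pr(H = 0 \mid T \in \Gamma_\alpha)
  &= \frac{\Pr(T \in \Gamma_\alpha \mid H = 0)\,\Pr(H = 0)}{\Pr(T \in \Gamma_\alpha)} \\
  &= \frac{\pi_0 \Pr(T \in \Gamma_\alpha \mid H = 0)}
          {\pi_0 \Pr(T \in \Gamma_\alpha \mid H = 0) + \pi_1 \Pr(T \in \Gamma_\alpha \mid H = 1)}
\end{align*}
```

The numerator contains the same conditional probability whose infimum defines the ''p''-value; the ''q''-value additionally accounts for the prior proportion of true nulls $\pi_0$ and for the power $\Pr(T \in \Gamma_\alpha \mid H = 1)$ of the region.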

Interpretation

The ''q''-value can be interpreted as the false discovery rate (FDR): the proportion of false positives among all positive results. Given a set of test statistics and their associated ''q''-values, rejecting the null hypothesis for all tests whose ''q''-value is less than or equal to some threshold $\alpha$ keeps the expected proportion of false positives among the rejected tests at or below $\alpha$.

Applications

Biology

Gene expression

Genome-wide analyses of differential gene expression involve simultaneously testing the expression of thousands of genes. Controlling the FWER (usually at 0.05) avoids excessive false positives (i.e. detecting differential expression in a gene that is not differentially expressed) but imposes a strict threshold for the ''p''-value that results in many false negatives (many differentially expressed genes are overlooked). Controlling the pFDR instead, by selecting genes whose ''q''-values fall below a chosen threshold, lowers the number of false negatives (increases the statistical power) while keeping the expected proportion of false positives among all positive results low (e.g. 5%).

For example, suppose that among 10,000 genes tested, 1,000 are actually differentially expressed and 9,000 are not:

* If we consider every gene with a ''p''-value of less than 0.05 to be differentially expressed, we expect that 450 (5%) of the 9,000 genes that are not differentially expressed will appear to be differentially expressed (450 false positives).

* If we control the FWER to 0.05, there is only a 5% probability of obtaining at least one false positive. However, this very strict criterion will reduce the power such that few of the 1,000 genes that are actually differentially expressed will appear to be differentially expressed (many false negatives).

* If we control the pFDR to 0.05 by considering all genes with a ''q''-value of less than 0.05 to be differentially expressed, then we expect 5% of the positive results to be false positives (e.g. 900 true positives, 45 false positives, 100 false negatives, 8,955 true negatives). This strategy enables one to obtain relatively low numbers of both false positives and false negatives.
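The three strategies in the list can be compared on simulated data. This minimal Python sketch assumes hypothetical one-sided z-tests in which non-differentially expressed genes have $z \sim N(0, 1)$ and differentially expressed genes have $z \sim N(3.5, 1)$; the effect size, seed, and function names are illustrative, so the counts will not match the example figures above exactly. The Benjamini-Hochberg step-up rule stands in for ''q''-value thresholding with $\pi_0$ fixed at 1, which is slightly conservative.

```python
import math
import random


def one_sided_p(z):
    """Upper-tail p-value of a z statistic under a standard normal null."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def bh_reject(pvalues, level):
    """Benjamini-Hochberg step-up procedure.

    Rejects the k smallest p-values, where k is the largest rank with
    p_(k) <= k * level / m. This matches rejecting q-values <= level when
    the proportion of true nulls (pi0) is taken to be 1.
    """
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    k = 0
    for rank, i in enumerate(order, start=1):
        if pvalues[i] <= rank * level / m:
            k = rank
    return set(order[:k])


def simulate(seed=0, n_null=9000, n_diff=1000, effect=3.5, level=0.05):
    """Return (true positives, false positives) for three rejection rules."""
    rng = random.Random(seed)
    # Hypothetical z statistics: the first n_null genes are true nulls.
    z = [rng.gauss(0.0, 1.0) for _ in range(n_null)]
    z += [rng.gauss(effect, 1.0) for _ in range(n_diff)]
    p = [one_sided_p(x) for x in z]
    m = len(p)
    rules = {
        "uncorrected": {i for i in range(m) if p[i] < level},
        "bonferroni": {i for i in range(m) if p[i] < level / m},
        "fdr": bh_reject(p, level),
    }
    results = {}
    for name, rejected in rules.items():
        false_pos = sum(1 for i in rejected if i < n_null)
        results[name] = (len(rejected) - false_pos, false_pos)
    return results


for rule, (tp, fp) in simulate(seed=1).items():
    print(f"{rule:12s} true positives={tp:4d} false positives={fp:4d}")
```

Under these assumptions the uncorrected rule produces roughly 450 false positives, Bonferroni finds only a minority of the 1,000 differentially expressed genes, and the FDR rule recovers most of them with a small share of false positives.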

Implementations

Note: the following is an incomplete list.

R

* The qvalue package in R estimates ''q''-values from a list of ''p''-values.
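The package's own procedure (including how it tunes the estimate of $\pi_0$) is not reproduced here. As a minimal sketch of the general idea in Python, assuming a single fixed tuning value $\lambda = 0.5$ for estimating the proportion of true nulls, ''q''-values can be estimated as the running minimum of $\hat\pi_0 \, m \, p_{(j)} / j$ over all ''p''-values at least as large; with $\hat\pi_0 = 1$ this reduces to Benjamini-Hochberg adjusted ''p''-values. The function names are hypothetical.

```python
def estimate_pi0(pvalues, lam=0.5):
    """Estimate the proportion of true nulls from p-values above lam.

    Null p-values are uniform, so about pi0 * m * (1 - lam) of them should
    exceed lam. The estimate is capped at 1.
    """
    m = len(pvalues)
    above = sum(1 for p in pvalues if p > lam)
    return min(1.0, above / (m * (1.0 - lam)))


def q_values(pvalues, pi0=None, lam=0.5):
    """Estimate q-values: q_i = min over p_j >= p_i of pi0 * m * p_j / rank_j."""
    m = len(pvalues)
    if pi0 is None:
        pi0 = estimate_pi0(pvalues, lam)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices by ascending p
    q = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, so each q-value is the smallest
    # estimated pFDR among all thresholds at least as large as its p-value.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pi0 * m * pvalues[i] / rank)
        q[i] = running_min
    return q


pvals = [0.01, 0.02, 0.03, 0.04, 0.8, 0.9]
qs = q_values(pvals)
print(qs)
print([i for i, q in enumerate(qs) if q <= 0.05])  # tests rejected at q <= 0.05
```

Here two of six ''p''-values exceed 0.5, so $\hat\pi_0 = 2/3$, and the four smallest ''p''-values receive ''q''-values of about 0.04.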
