Pseudo-R-squared

Pseudo-R-squared values are used when the outcome variable is nominal or ordinal, so that the coefficient of determination $R^2$ cannot be applied as a measure of goodness of fit, and when a likelihood function is used to fit a model.

In linear regression, the squared multiple correlation, $R^2$, is used to assess goodness of fit, as it represents the proportion of variance in the criterion that is explained by the predictors. In logistic regression analysis, there is no agreed-upon analogous measure, but there are several competing measures, each with limitations.

Four of the most commonly used indices and one less commonly used one are examined in this article:

* Likelihood ratio $R^2_\text{L}$

* Cox and Snell $R^2_\text{CS}$

* Nagelkerke $R^2_\text{N}$

* McFadden $R^2_\text{McF}$

* Tjur $R^2_\text{T}$

$R^2_\text{L}$ by Cohen

$R^2_\text{L}$ is given by Cohen:

$$R^2_\text{L} = \frac{D_\text{null} - D_\text{fitted}}{D_\text{null}}$$

where $D_\text{null}$ and $D_\text{fitted}$ are the deviances of the null model and the fitted model, respectively. This is the most analogous index to the squared multiple correlation in linear regression. It represents the proportional reduction in the deviance, where the deviance is treated as a measure of variation analogous but not identical to the variance in linear regression analysis. One limitation of the likelihood ratio $R^2$ is that it is not monotonically related to the odds ratio, meaning that it does not necessarily increase as the odds ratio increases and does not necessarily decrease as the odds ratio decreases.
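As a minimal sketch of the proportional reduction in deviance described above, assuming the index takes the form (null deviance minus fitted deviance) divided by null deviance, the calculation is a single expression. The function name and the deviance values below are hypothetical and chosen only for illustration.

```python
def likelihood_ratio_r2(deviance_null: float, deviance_fitted: float) -> float:
    """Proportional reduction in deviance relative to the null model."""
    if deviance_null <= 0:
        raise ValueError("null deviance must be positive")
    return (deviance_null - deviance_fitted) / deviance_null


# Hypothetical deviances, not taken from any real data set.
r2_l = likelihood_ratio_r2(deviance_null=120.0, deviance_fitted=90.0)
print(r2_l)  # 0.25
```

A fitted model that does not reduce the deviance at all yields 0, and a model with zero deviance yields 1.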

$R^2_\text{CS}$ by Cox and Snell

$R^2_\text{CS}$ is an alternative index of goodness of fit related to the $R^2$ value from linear regression. It is given by:

$$R^2_\text{CS} = 1 - \left(\frac{L_0}{L_M}\right)^{2/n}$$

where $L_M$ and $L_0$ are the likelihoods for the model being fitted and the null model, respectively, and $n$ is the sample size. The Cox and Snell index is problematic as its maximum value is $1 - L_0^{2/n}$. The highest this upper bound can be is 0.75, but it can easily be as low as 0.48 when the marginal proportion of cases is small.

$R^2_\text{N}$ by Nagelkerke

$R^2_\text{N}$, proposed by Nico Nagelkerke in a highly cited Biometrika paper, provides a correction to the Cox and Snell $R^2$ so that the maximum value is equal to 1. Nevertheless, the Cox and Snell and likelihood ratio $R^2$s show greater agreement with each other than either does with the Nagelkerke $R^2$. Of course, this might not be the case for values exceeding 0.75, as the Cox and Snell index is capped at this value. The likelihood ratio $R^2$ is often preferred to the alternatives as it is most analogous to $R^2$ in linear regression, is independent of the base rate (both the Cox and Snell and Nagelkerke $R^2$s increase as the proportion of cases increases from 0 to 0.5) and varies between 0 and 1.
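The upper bound of the Cox and Snell index, and the effect of Nagelkerke's correction, can be checked numerically. The sketch below assumes the Cox and Snell index has the form 1 - (L_0 / L_M)^(2/n), and that the correction divides it by its maximum 1 - L_0^(2/n), which is the commonly stated form of Nagelkerke's index. All function names are hypothetical, and the code works with log-likelihoods to avoid underflow.

```python
import math


def cox_snell_r2(ll_model: float, ll_null: float, n: int) -> float:
    """1 - (L_0 / L_M)^(2/n), computed from log-likelihoods."""
    return 1.0 - math.exp(2.0 * (ll_null - ll_model) / n)


def cox_snell_max(ll_null: float, n: int) -> float:
    """Upper bound 1 - L_0^(2/n), reached when the model likelihood is 1."""
    return 1.0 - math.exp(2.0 * ll_null / n)


def nagelkerke_r2(ll_model: float, ll_null: float, n: int) -> float:
    """Cox and Snell index rescaled so that its maximum is 1."""
    return cox_snell_r2(ll_model, ll_null, n) / cox_snell_max(ll_null, n)


def null_log_likelihood(n: int, p: float) -> float:
    """Log-likelihood of an intercept-only model when a proportion p of n cases are events."""
    return n * (p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


n = 200  # hypothetical sample size
for p in (0.5, 0.1):
    print(p, round(cox_snell_max(null_log_likelihood(n, p), n), 2))
# 0.5 0.75
# 0.1 0.48
```

With half of the cases being events the bound is 0.75, and with a marginal proportion of 0.1 it drops to about 0.48, in line with the figures quoted for the Cox and Snell index.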

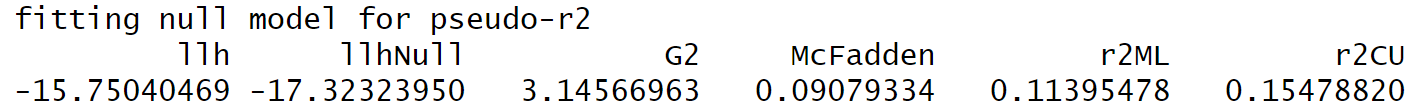

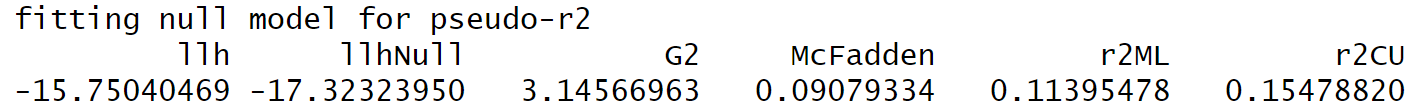

$R^2_\text{McF}$ by McFadden

The pseudo $R^2$ by McFadden (sometimes called the likelihood ratio index) is defined as

$$R^2_\text{McF} = 1 - \frac{\ln L_M}{\ln L_0}$$

and is preferred over $R^2_\text{CS}$ by Allison. The two expressions $R^2_\text{McF}$ and $R^2_\text{CS}$ are then related respectively by

$$R^2_\text{CS} = 1 - L_0^{\,2 R^2_\text{McF} / n}, \qquad R^2_\text{McF} = \frac{n}{2} \cdot \frac{\ln\left(1 - R^2_\text{CS}\right)}{\ln L_0}$$
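A short numerical check can make the link between the McFadden and Cox and Snell indices concrete. The sketch below assumes the McFadden index is 1 - ln(L_M) / ln(L_0) and the Cox and Snell index is 1 - (L_0 / L_M)^(2/n). The conversion functions follow algebraically from those two definitions. The function names and log-likelihood values are hypothetical.

```python
import math


def mcfadden_r2(ll_model: float, ll_null: float) -> float:
    """1 - ln(L_M) / ln(L_0), from the two log-likelihoods."""
    return 1.0 - ll_model / ll_null


def cox_snell_from_mcfadden(r2_mcf: float, ll_null: float, n: int) -> float:
    """R2_CS = 1 - L_0^(2 * R2_McF / n), written with ln(L_0)."""
    return 1.0 - math.exp(2.0 * r2_mcf * ll_null / n)


def mcfadden_from_cox_snell(r2_cs: float, ll_null: float, n: int) -> float:
    """R2_McF = (n / 2) * ln(1 - R2_CS) / ln(L_0)."""
    return (n / 2.0) * math.log(1.0 - r2_cs) / ll_null


# Hypothetical log-likelihoods for a sample of 100 cases.
n, ll_null, ll_model = 100, -69.0, -55.2

r2_mcf = mcfadden_r2(ll_model, ll_null)
r2_cs = cox_snell_from_mcfadden(r2_mcf, ll_null, n)

print(round(r2_mcf, 3))  # 0.2
# Converting back recovers the McFadden value up to rounding error.
print(abs(mcfadden_from_cox_snell(r2_cs, ll_null, n) - r2_mcf) < 1e-12)
```

Both conversions need the null log-likelihood and the sample size, so neither index can be obtained from the other alone.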

$R^2_\text{T}$ by Tjur

However, Allison now prefers $R^2_\text{T}$, which is a relatively new measure developed by Tjur. It can be calculated in two steps:

1. For each level of the dependent variable, find the mean of the predicted probabilities of an event.

2. Take the absolute value of the difference between these means.

Interpretation

A word of caution is in order when interpreting pseudo-$R^2$ statistics. The reason these indices of fit are referred to as pseudo $R^2$ is that they do not represent the proportionate reduction in error as the $R^2$ in linear regression does. Linear regression assumes homoscedasticity, that is, that the error variance is the same for all values of the criterion. Logistic regression will always be heteroscedastic: the error variances differ for each value of the predicted score. For each value of the predicted score there would be a different value of the proportionate reduction in error. Therefore, it is inappropriate to think of $R^2$ as a proportionate reduction in error in a universal sense in logistic regression.
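Tjur's two-step calculation described earlier in this article is easy to reproduce by hand, and a small sketch shows how little machinery it needs. The code assumes a binary outcome coded 0/1 and a list of predicted event probabilities from an already fitted model. The function name and the data are hypothetical.

```python
def tjur_r2(y: list[int], p_hat: list[float]) -> float:
    """Absolute difference between the mean predicted probability
    among events (y == 1) and among non-events (y == 0)."""
    events = [p for yi, p in zip(y, p_hat) if yi == 1]
    non_events = [p for yi, p in zip(y, p_hat) if yi == 0]
    if not events or not non_events:
        raise ValueError("both outcome levels must be present")
    # Step 1: mean predicted probability for each level of the outcome.
    mean_events = sum(events) / len(events)
    mean_non_events = sum(non_events) / len(non_events)
    # Step 2: absolute value of the difference between the two means.
    return abs(mean_events - mean_non_events)


# Hypothetical outcomes and predicted probabilities.
y = [1, 1, 1, 0, 0, 0]
p_hat = [0.9, 0.7, 0.8, 0.2, 0.4, 0.3]
print(round(tjur_r2(y, p_hat), 3))  # 0.5
```

A model that assigns the same average probability to both groups scores 0, while one that predicts 1 for every event and 0 for every non-event scores 1.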
