Principle Of Optimality on:

[Wikipedia]

[Google]

[Amazon]

A Bellman equation, named after

A Bellman equation, named after

Meyn & Tweedie

.

A Bellman equation, named after

A Bellman equation, named after Richard E. Bellman

Richard Ernest Bellman (August 26, 1920 – March 19, 1984) was an American applied mathematician, who introduced dynamic programming in 1953, and made important contributions in other fields of mathematics, such as biomathematics. He founde ...

, is a necessary condition

In logic and mathematics, necessity and sufficiency are terms used to describe a conditional or implicational relationship between two statements. For example, in the conditional statement: "If then ", is necessary for , because the truth of ...

for optimality associated with the mathematical optimization

Mathematical optimization (alternatively spelled ''optimisation'') or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfi ...

method known as dynamic programming

Dynamic programming is both a mathematical optimization method and a computer programming method. The method was developed by Richard Bellman in the 1950s and has found applications in numerous fields, from aerospace engineering to economics.

I ...

. It writes the "value" of a decision problem at a certain point in time in terms of the payoff from some initial choices and the "value" of the remaining decision problem that results from those initial choices. This breaks a dynamic optimization problem into a sequence

In mathematics, a sequence is an enumerated collection of objects in which repetitions are allowed and order matters. Like a set, it contains members (also called ''elements'', or ''terms''). The number of elements (possibly infinite) is calle ...

of simpler subproblems, as Bellman's “principle of optimality" prescribes. The equation applies to algebraic structures with a total ordering; for algebraic structures with a partial ordering, the generic Bellman's equation can be used.

The Bellman equation was first applied to engineering control theory

Control theory is a field of mathematics that deals with the control of dynamical systems in engineered processes and machines. The objective is to develop a model or algorithm governing the application of system inputs to drive the system to a ...

and to other topics in applied mathematics, and subsequently became an important tool in economic theory

Economics () is the social science that studies the production, distribution, and consumption of goods and services.

Economics focuses on the behaviour and interactions of economic agents and how economies work. Microeconomics analyzes ...

; though the basic concepts of dynamic programming are prefigured in John von Neumann

John von Neumann (; hu, Neumann János Lajos, ; December 28, 1903 – February 8, 1957) was a Hungarian-American mathematician, physicist, computer scientist, engineer and polymath. He was regarded as having perhaps the widest cove ...

and Oskar Morgenstern

Oskar Morgenstern (January 24, 1902 – July 26, 1977) was an Austrian-American economist. In collaboration with mathematician John von Neumann, he founded the mathematical field of game theory as applied to the social sciences and strategic decis ...

's ''Theory of Games and Economic Behavior

''Theory of Games and Economic Behavior'', published in 1944 by Princeton University Press, is a book by mathematician John von Neumann and economist Oskar Morgenstern which is considered the groundbreaking text that created the interdisciplinar ...

'' and Abraham Wald

Abraham Wald (; hu, Wald Ábrahám, yi, אברהם וואַלד; – ) was a Jewish Hungarian mathematician who contributed to decision theory, geometry, and econometrics and founded the field of statistical sequential analysis. One of ...

's ''sequential analysis

In statistics, sequential analysis or sequential hypothesis testing is statistical analysis where the sample size is not fixed in advance. Instead data are evaluated as they are collected, and further sampling is stopped in accordance with a pre- ...

''. The term 'Bellman equation' usually refers to the dynamic programming equation associated with discrete-time

In mathematical dynamics, discrete time and continuous time are two alternative frameworks within which variables that evolve over time are modeled.

Discrete time

Discrete time views values of variables as occurring at distinct, separate "po ...

optimization problems. In continuous-time optimization problems, the analogous equation is a partial differential equation

In mathematics, a partial differential equation (PDE) is an equation which imposes relations between the various partial derivatives of a Multivariable calculus, multivariable function.

The function is often thought of as an "unknown" to be sol ...

that is called the Hamilton–Jacobi–Bellman equation In optimal control theory, the Hamilton-Jacobi-Bellman (HJB) equation gives a necessary and sufficient condition for optimality of a control with respect to a loss function. It is, in general, a nonlinear partial differential equation in the value ...

.

In discrete time any multi-stage optimization problem can be solved by analyzing the appropriate Bellman equation. The appropriate Bellman equation can be found by introducing new state variables (state augmentation). However, the resulting augmented-state multi-stage optimization problem has a higher dimensional state space than the original multi-stage optimization problem - an issue that can potentially render the augmented problem intractable due to the “curse of dimensionality

The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience. The ...

”. Alternatively, it has been shown that if the cost function of the multi-stage optimization problem satisfies a "backward separable" structure, then the appropriate Bellman equation can be found without state augmentation.

Analytical concepts in dynamic programming

To understand the Bellman equation, several underlying concepts must be understood. First, any optimization problem has some objective: minimizing travel time, minimizing cost, maximizing profits, maximizing utility, etc. The mathematical function that describes this objective is called the ''objective function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cos ...

''.

Dynamic programming breaks a multi-period planning problem into simpler steps at different points in time. Therefore, it requires keeping track of how the decision situation is evolving over time. The information about the current situation that is needed to make a correct decision is called the "state". For example, to decide how much to consume and spend at each point in time, people would need to know (among other things) their initial wealth. Therefore, wealth would be one of their ''state variable

A state variable is one of the set of variables that are used to describe the mathematical "state" of a dynamical system. Intuitively, the state of a system describes enough about the system to determine its future behaviour in the absence of a ...

s'', but there would probably be others.

The variables chosen at any given point in time are often called the '' control variables''. For instance, given their current wealth, people might decide how much to consume now. Choosing the control variables now may be equivalent to choosing the next state; more generally, the next state is affected by other factors in addition to the current control. For example, in the simplest case, today's wealth (the state) and consumption (the control) might exactly determine tomorrow's wealth (the new state), though typically other factors will affect tomorrow's wealth too.

The dynamic programming approach describes the optimal plan by finding a rule that tells what the controls should be, given any possible value of the state. For example, if consumption (''c'') depends ''only'' on wealth (''W''), we would seek a rule that gives consumption as a function of wealth. Such a rule, determining the controls as a function of the states, is called a ''policy function'' (See Bellman, 1957, Ch. III.2).

Finally, by definition, the optimal decision rule is the one that achieves the best possible value of the objective. For example, if someone chooses consumption, given wealth, in order to maximize happiness (assuming happiness ''H'' can be represented by a mathematical function, such as a utility

As a topic of economics, utility is used to model worth or value. Its usage has evolved significantly over time. The term was introduced initially as a measure of pleasure or happiness as part of the theory of utilitarianism by moral philosopher ...

function and is something defined by wealth), then each level of wealth will be associated with some highest possible level of happiness, . The best possible value of the objective, written as a function of the state, is called the ''value function''.

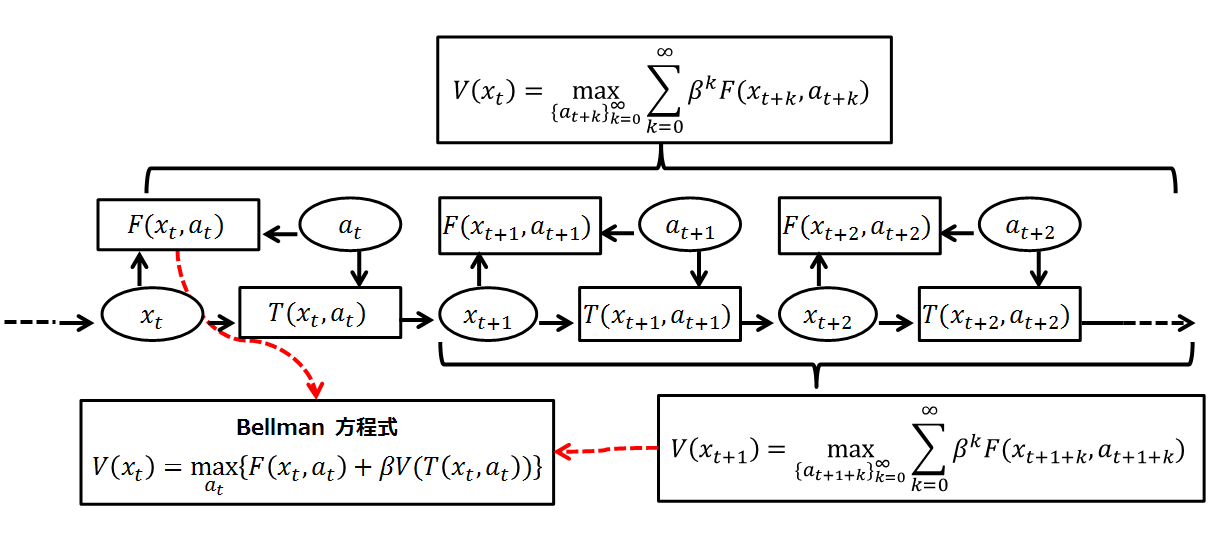

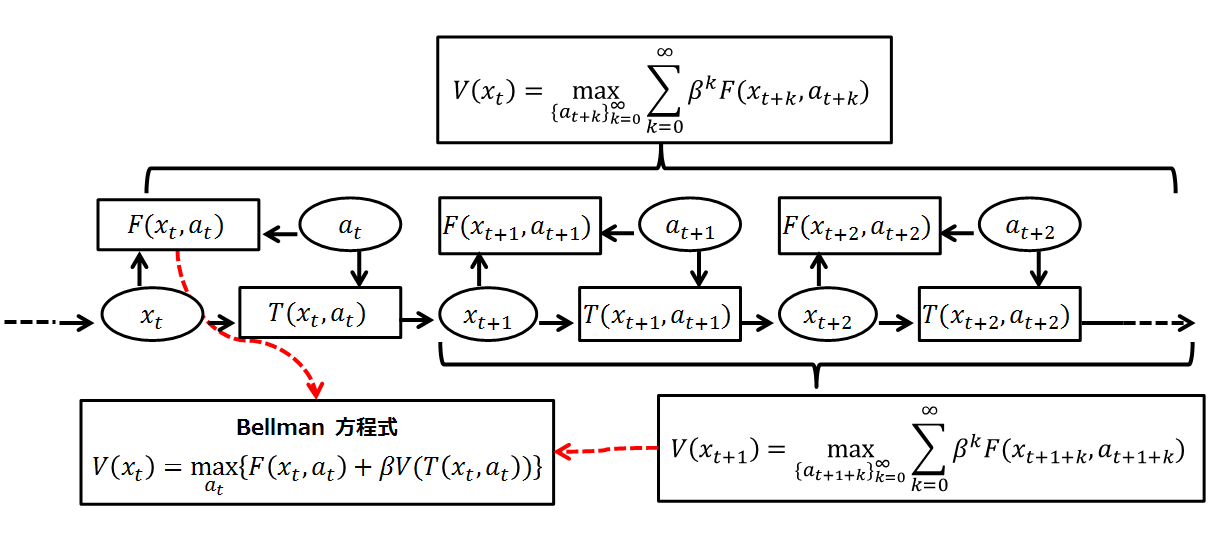

Bellman showed that a dynamic optimization

Mathematical optimization (alternatively spelled ''optimisation'') or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfi ...

problem in discrete time

In mathematical dynamics, discrete time and continuous time are two alternative frameworks within which variables that evolve over time are modeled.

Discrete time

Discrete time views values of variables as occurring at distinct, separate "po ...

can be stated in a recursive

Recursion (adjective: ''recursive'') occurs when a thing is defined in terms of itself or of its type. Recursion is used in a variety of disciplines ranging from linguistics to logic. The most common application of recursion is in mathematics ...

, step-by-step form known as backward induction

Backward induction is the process of reasoning backwards in time, from the end of a problem or situation, to determine a sequence of optimal actions. It proceeds by examining the last point at which a decision is to be made and then identifying wha ...

by writing down the relationship between the value function in one period and the value function in the next period. The relationship between these two value functions is called the "Bellman equation". In this approach, the optimal policy in the last time period is specified in advance as a function of the state variable's value at that time, and the resulting optimal value of the objective function is thus expressed in terms of that value of the state variable. Next, the next-to-last period's optimization involves maximizing the sum of that period's period-specific objective function and the optimal value of the future objective function, giving that period's optimal policy contingent upon the value of the state variable as of the next-to-last period decision. This logic continues recursively back in time, until the first period decision rule is derived, as a function of the initial state variable value, by optimizing the sum of the first-period-specific objective function and the value of the second period's value function, which gives the value for all the future periods. Thus, each period's decision is made by explicitly acknowledging that all future decisions will be optimally made.

Derivation

A dynamic decision problem

Let the state at time be . For a decision that begins at time 0, we take as given the initial state . At any time, the set of possible actions depends on the current state; we can write this as , where the action represents one or more control variables. We also assume that the state changes from to a new state when action is taken, and that the current payoff from taking action in state is . Finally, we assume impatience, represented by adiscount factor

Discounting is a financial mechanism in which a debtor obtains the right to delay payments to a creditor, for a defined period of time, in exchange for a charge or fee.See "Time Value", "Discount", "Discount Yield", "Compound Interest", "Efficient ...

.

Under these assumptions, an infinite-horizon decision problem takes the following form:

:

subject to the constraints

:

Notice that we have defined notation to denote the optimal value that can be obtained by maximizing this objective function subject to the assumed constraints. This function is the ''value function''. It is a function of the initial state variable , since the best value obtainable depends on the initial situation.

Bellman's principle of optimality

The dynamic programming method breaks this decision problem into smaller subproblems. Bellman's ''principle of optimality'' describes how to do this:Principle of Optimality: An optimal policy has the property that whatever the initial state and initial decision are, the remaining decisions must constitute an optimal policy with regard to the state resulting from the first decision. (See Bellman, 1957, Chap. III.3.)In computer science, a problem that can be broken apart like this is said to have

optimal substructure

In computer science, a problem is said to have optimal substructure if an optimal solution can be constructed from optimal solutions of its subproblems. This property is used to determine the usefulness of greedy algorithms for a problem.{{cite boo ...

. In the context of dynamic game theory

Game theory is the study of mathematical models of strategic interactions among rational agents. Myerson, Roger B. (1991). ''Game Theory: Analysis of Conflict,'' Harvard University Press, p.&nbs1 Chapter-preview links, ppvii–xi It has appli ...

, this principle is analogous to the concept of subgame perfect equilibrium

In game theory, a subgame perfect equilibrium (or subgame perfect Nash equilibrium) is a refinement of a Nash equilibrium used in dynamic games. A strategy profile is a subgame perfect equilibrium if it represents a Nash equilibrium of every ...

, although what constitutes an optimal policy in this case is conditioned on the decision-maker's opponents choosing similarly optimal policies from their points of view.

As suggested by the ''principle of optimality'', we will consider the first decision separately, setting aside all future decisions (we will start afresh from time 1 with the new state ). Collecting the future decisions in brackets on the right, the above infinite-horizon decision problem is equivalent to:

:

subject to the constraints

:

Here we are choosing , knowing that our choice will cause the time 1 state to be . That new state will then affect the decision problem from time 1 on. The whole future decision problem appears inside the square brackets on the right.

The Bellman equation

So far it seems we have only made the problem uglier by separating today's decision from future decisions. But we can simplify by noticing that what is inside the square brackets on the right is ''the value'' of the time 1 decision problem, starting from state . Therefore, we can rewrite the problem as arecursive

Recursion (adjective: ''recursive'') occurs when a thing is defined in terms of itself or of its type. Recursion is used in a variety of disciplines ranging from linguistics to logic. The most common application of recursion is in mathematics ...

definition of the value function:

:, subject to the constraints:

This is the Bellman equation. It can be simplified even further if we drop time subscripts and plug in the value of the next state:

:

The Bellman equation is classified as a functional equation

In mathematics, a functional equation

is, in the broadest meaning, an equation in which one or several functions appear as unknowns. So, differential equations and integral equations are functional equations. However, a more restricted meaning ...

, because solving it means finding the unknown function , which is the ''value function''. Recall that the value function describes the best possible value of the objective, as a function of the state . By calculating the value function, we will also find the function that describes the optimal action as a function of the state; this is called the ''policy function''.

In a stochastic problem

In the deterministic setting, other techniques besides dynamic programming can be used to tackle the aboveoptimal control

Optimal control theory is a branch of mathematical optimization that deals with finding a control for a dynamical system over a period of time such that an objective function is optimized. It has numerous applications in science, engineering and ...

problem. However, the Bellman Equation is often the most convenient method of solving ''stochastic'' optimal control problems.

For a specific example from economics, consider an infinitely-lived consumer with initial wealth endowment at period . They have an instantaneous utility function

As a topic of economics, utility is used to model worth or value. Its usage has evolved significantly over time. The term was introduced initially as a measure of pleasure or happiness as part of the theory of utilitarianism by moral philosopher ...

where denotes consumption and discounts the next period utility at a rate of . Assume that what is not consumed in period carries over to the next period with interest rate . Then the consumer's utility maximization problem is to choose a consumption plan that solves

:

subject to

:

and

:

The first constraint is the capital accumulation/law of motion specified by the problem, while the second constraint is a transversality condition In optimal control theory, a transversality condition is a Boundary value problem, boundary condition for the terminal values of the costate equation, costate variables. They are one of the necessary conditions for optimality infinite-horizon optima ...

that the consumer does not carry debt at the end of their life. The Bellman equation is

:

Alternatively, one can treat the sequence problem directly using, for example, the Hamiltonian equations.

Now, if the interest rate varies from period to period, the consumer is faced with a stochastic optimization problem. Let the interest ''r'' follow a Markov process

A Markov chain or Markov process is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happe ...

with probability transition function where denotes the probability measure

In mathematics, a probability measure is a real-valued function defined on a set of events in a probability space that satisfies measure properties such as ''countable additivity''. The difference between a probability measure and the more gener ...

governing the distribution of interest rate next period if current interest rate is . In this model the consumer decides their current period consumption after the current period interest rate is announced.

Rather than simply choosing a single sequence , the consumer now must choose a sequence for each possible realization of a in such a way that their lifetime expected utility is maximized:

:

The expectation is taken with respect to the appropriate probability measure given by ''Q'' on the sequences of ''r''s. Because ''r'' is governed by a Markov process, dynamic programming simplifies the problem significantly. Then the Bellman equation is simply:

:

Under some reasonable assumption, the resulting optimal policy function ''g''(''a'',''r'') is measurable

In mathematics, the concept of a measure is a generalization and formalization of Geometry#Length, area, and volume, geometrical measures (length, area, volume) and other common notions, such as mass and probability of events. These seemingly ...

.

For a general stochastic sequential optimization problem with Markovian shocks and where the agent is faced with their decision ''ex-post

References

Notes

References

Further reading

*

*

{{Latin phrases

Lists of Latin phrases, E ...

'', the Bellman equation takes a very similar form

:

Solution methods

* Themethod of undetermined coefficients

In mathematics, the method of undetermined coefficients is an approach to finding a particular solution to certain nonhomogeneous ordinary differential equations and recurrence relations. It is closely related to the annihilator method, but inste ...

, also known as 'guess and verify', can be used to solve some infinite-horizon, autonomous

In developmental psychology and moral, political, and bioethical philosophy, autonomy, from , ''autonomos'', from αὐτο- ''auto-'' "self" and νόμος ''nomos'', "law", hence when combined understood to mean "one who gives oneself one's ow ...

Bellman equations.

* The Bellman equation can be solved by backwards induction, either analytically in a few special cases, or numerically on a computer. Numerical backwards induction is applicable to a wide variety of problems, but may be infeasible when there are many state variables, due to the curse of dimensionality

The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience. The ...

. Approximate dynamic programming has been introduced by D. P. Bertsekas and J. N. Tsitsiklis with the use of artificial neural network

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains.

An ANN is based on a collection of connected unit ...

s (multilayer perceptron

A multilayer perceptron (MLP) is a fully connected class of feedforward artificial neural network (ANN). The term MLP is used ambiguously, sometimes loosely to mean ''any'' feedforward ANN, sometimes strictly to refer to networks composed of mu ...

s) for approximating the Bellman function. This is an effective mitigation strategy for reducing the impact of dimensionality by replacing the memorization of the complete function mapping for the whole space domain with the memorization of the sole neural network parameters. In particular, for continuous-time systems, an approximate dynamic programming approach that combines both policy iterations with neural networks was introduced. In discrete-time, an approach to solve the HJB equation combining value iterations and neural networks was introduced.

* By calculating the first-order conditions associated with the Bellman equation, and then using the envelope theorem

In mathematics and economics, the envelope theorem is a major result about the differentiability properties of the value function of a parameterized optimization problem. As we change parameters of the objective, the envelope theorem shows that, i ...

to eliminate the derivatives of the value function, it is possible to obtain a system of difference equation

In mathematics, a recurrence relation is an equation according to which the nth term of a sequence of numbers is equal to some combination of the previous terms. Often, only k previous terms of the sequence appear in the equation, for a parameter ...

s or differential equation

In mathematics, a differential equation is an equation that relates one or more unknown functions and their derivatives. In applications, the functions generally represent physical quantities, the derivatives represent their rates of change, an ...

s called the 'Euler equation

200px, Leonhard Euler (1707–1783)

In mathematics and physics, many topics are named in honor of Swiss mathematician Leonhard Euler (1707–1783), who made many important discoveries and innovations. Many of these items named after Euler include ...

s'. Standard techniques for the solution of difference or differential equations can then be used to calculate the dynamics of the state variables and the control variables of the optimization problem.

Applications in economics

The first known application of a Bellman equation in economics is due to Martin Beckmann andRichard Muth

Richard Ferris Muth (May 14, 1927 – April 10, 2018) was an American economist, who is considered to be one of the founders of urban economics (along with William Alonso and Edwin Mills (economist), Edwin Mills).

Muth obtained his Ph.D. from th ...

. Martin Beckmann also wrote extensively on consumption theory using the Bellman equation in 1959. His work influenced Edmund S. Phelps

Edmund Strother Phelps (born July 26, 1933) is an American economist and the recipient of the 2006 Nobel Memorial Prize in Economic Sciences.

Early in his career, he became known for his research at Yale's Cowles Foundation in the first half of ...

, among others.

A celebrated economic application of a Bellman equation is Robert C. Merton

Robert Cox Merton (born July 31, 1944) is an American economist, Nobel Memorial Prize in Economic Sciences laureate, and professor at the MIT Sloan School of Management, known for his pioneering contributions to continuous-time finance, especia ...

's seminal 1973 article on the intertemporal capital asset pricing model. (See also Merton's portfolio problem

Merton's portfolio problem is a well known problem in continuous-time finance and in particular intertemporal portfolio choice. An investor must choose how much to consume and must allocate their wealth between stocks and a risk-free asset so as ...

).The solution to Merton's theoretical model, one in which investors chose between income today and future income or capital gains, is a form of Bellman's equation. Because economic applications of dynamic programming usually result in a Bellman equation that is a difference equation

In mathematics, a recurrence relation is an equation according to which the nth term of a sequence of numbers is equal to some combination of the previous terms. Often, only k previous terms of the sequence appear in the equation, for a parameter ...

, economists refer to dynamic programming as a "recursive method" and a subfield of recursive economics Recursive economics is a branch of modern economics based on a paradigm of individuals making a series of two-period optimization decisions over time.

Differences between recursive and neoclassical paradigms

The neoclassical model assumes a one-per ...

is now recognized within economics.

Nancy Stokey

Nancy Laura Stokey (born May 8, 1950) has been the Frederick Henry Prince Distinguished Service Professor of Economics at the University of Chicago since 1990 and focuses particularly on mathematical economics while recently conducting research a ...

, Robert E. Lucas

Robert Emerson Lucas Jr. (born September 15, 1937) is an American economist at the University of Chicago, where he is currently the John Dewey Distinguished Service Professor Emeritus in Economics and the College. Widely regarded as the central ...

, and Edward Prescott

Edward Christian Prescott (December 26, 1940 – November 6, 2022) was an American economist. He received the Nobel Memorial Prize in Economics in 2004, sharing the award with Finn E. Kydland, "for their contributions to dynamic macroeconomics ...

describe stochastic and nonstochastic dynamic programming in considerable detail, and develop theorems for the existence of solutions to problems meeting certain conditions. They also describe many examples of modeling theoretical problems in economics using recursive methods. This book led to dynamic programming being employed to solve a wide range of theoretical problems in economics, including optimal economic growth

Economic growth can be defined as the increase or improvement in the inflation-adjusted market value of the goods and services produced by an economy in a financial year. Statisticians conventionally measure such growth as the percent rate of ...

, resource extraction

Extractivism is the process of extracting natural resources from the Earth to sell on the world market. It exists in an economy that depends primarily on the extraction or removal of natural resources that are considered valuable for exportation w ...

, principal–agent problem

The principal–agent problem refers to the conflict in interests and priorities that arises when one person or entity (the "agent") takes actions on behalf of another person or entity (the " principal"). The problem worsens when there is a gre ...

s, public finance

Public finance is the study of the role of the government in the economy. It is the branch of economics that assesses the government revenue and government expenditure of the public authorities and the adjustment of one or the other to achie ...

, business investment

Investment is the dedication of money to purchase of an asset to attain an increase in value over a period of time. Investment requires a sacrifice of some present asset, such as time, money, or effort.

In finance, the purpose of investing i ...

, asset pricing

In financial economics, asset pricing refers to a formal treatment and development of two main Price, pricing principles, outlined below, together with the resultant models.

There have been many models developed for different situations, but cor ...

, factor

Factor, a Latin word meaning "who/which acts", may refer to:

Commerce

* Factor (agent), a person who acts for, notably a mercantile and colonial agent

* Factor (Scotland), a person or firm managing a Scottish estate

* Factors of production, suc ...

supply, and industrial organization

In economics, industrial organization is a field that builds on the theory of the firm by examining the structure of (and, therefore, the boundaries between) firms and market (economics), markets. Industrial organization adds real-world complic ...

. Lars Ljungqvist

Lars Ljungqvist (born May 12, 1959) is a Swedish economistLjungqvist's faculty page

probably best known a ...

and probably best known a ...

Thomas Sargent

Thomas John Sargent (born July 19, 1943) is an American economist and the W.R. Berkley Professor of Economics and Business at New York University. He specializes in the fields of macroeconomics, monetary economics, and time series econometrics ...

apply dynamic programming to study a variety of theoretical questions in monetary policy

Monetary policy is the policy adopted by the monetary authority of a nation to control either the interest rate payable for very short-term borrowing (borrowing by banks from each other to meet their short-term needs) or the money supply, often a ...

, fiscal policy

In economics and political science, fiscal policy is the use of government revenue collection (taxes or tax cuts) and expenditure to influence a country's economy. The use of government revenue expenditures to influence macroeconomic variables ...

, taxation

A tax is a compulsory financial charge or some other type of levy imposed on a taxpayer (an individual or legal person, legal entity) by a governmental organization in order to fund government spending and various public expenditures (regiona ...

, economic growth

Economic growth can be defined as the increase or improvement in the inflation-adjusted market value of the goods and services produced by an economy in a financial year. Statisticians conventionally measure such growth as the percent rate of ...

, search theory

In microeconomics, search theory studies buyers or sellers who cannot instantly find a trading partner, and must therefore search for a partner prior to transacting.

Search theory clarifies how buyers and sellers choose when to acknowledge a coo ...

, and labor economics. Avinash Dixit

Avinash Kamalakar Dixit (born 6 August 1944) is an Indian-American economist. He is the John J. F. Sherrerd '52 University Professor of Economics Emeritus at Princeton University, and has been Distinguished Adjunct Professor of Economics at Li ...

and Robert Pindyck showed the value of the method for thinking about capital budgeting

Capital budgeting in corporate finance is the planning process used to determine whether an organization's long term capital investments such as new machinery, replacement of machinery, new plants, new products, and research development project ...

. Anderson adapted the technique to business valuation, including privately held businesses.

Using dynamic programming to solve concrete problems is complicated by informational difficulties, such as choosing the unobservable discount rate. There are also computational issues, the main one being the curse of dimensionality

The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience. The ...

arising from the vast number of possible actions and potential state variables that must be considered before an optimal strategy can be selected. For an extensive discussion of computational issues, see Miranda and Fackler, and Meyn 2007. Appendix contains abridgeMeyn & Tweedie

.

Example

In Markov decision processes, a Bellman equation is arecursion

Recursion (adjective: ''recursive'') occurs when a thing is defined in terms of itself or of its type. Recursion is used in a variety of disciplines ranging from linguistics to logic. The most common application of recursion is in mathematics ...

for expected rewards. For example, the expected reward for being in a particular state ''s'' and following some fixed policy has the Bellman equation:

:

This equation describes the expected reward for taking the action prescribed by some policy .

The equation for the optimal policy is referred to as the ''Bellman optimality equation'':

:

where is the optimal policy and refers to the value function of the optimal policy. The equation above describes the reward for taking the action giving the highest expected return.

See also

* * * * * * * *References

{{DEFAULTSORT:Bellman Equation Equations Dynamic programming Control theory