
In the fields of databases and transaction processing (transaction management), a schedule (or history) of a system is an abstract model to describe the order of executions in a set of transactions running in the system. Often it is a ''list'' of operations (actions) ordered by time, performed by a set of transactions that are executed together in the system. If the order in time between certain operations is not determined by the system, then a ''partial order'' is used. Examples of such operations are requesting a read operation, reading, writing, aborting, committing, requesting a lock, locking, etc. Often, only a subset of the transaction operation types are included in a schedule.

Schedules are fundamental concepts in database concurrency control theory. In practice, most general purpose database systems employ conflict-serializable and strict recoverable schedules.

Notation

Grid notation:

* Columns: the different transactions in the schedule.
* Rows: the time order of operations (a.k.a. actions).

Operations (a.k.a. actions):

* R(X): the corresponding transaction "reads" object X (i.e., it retrieves the data stored at X). This is done so that it can modify the data (e.g., X=X+4) during a "write" operation rather than merely overwrite it. When the schedule is represented as a list rather than a grid, the action is written Ri(X), where i is a number corresponding to a specific transaction.
* W(X): the corresponding transaction "writes" to object X (i.e., it modifies the data stored at X). When the schedule is represented as a list rather than a grid, the action is written Wi(X), where i is a number corresponding to a specific transaction.
* Com.: a "commit" operation, in which the corresponding transaction has successfully completed its preceding actions and has made all its changes permanent in the database.

Alternatively, a schedule can be represented with a ''directed acyclic graph'' (or DAG) in which there is an arc (i.e., directed edge) between each ''ordered pair'' of operations.
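To make the list notation concrete, here is a minimal Python sketch that parses it into (kind, transaction, object) records. The names `Op` and `parse_schedule` are hypothetical, and so is the `Aborti` token: the notation above gives no list symbol for aborts, so one is assumed here. The usage line parses the list form of schedule D from the example that follows.

```python
import re
from typing import List, NamedTuple, Optional

class Op(NamedTuple):
    kind: str            # "R", "W", "Com", or "Abort" (the last is an assumed extension)
    txn: int             # the transaction number i in Ri(X), Wi(X), Comi
    obj: Optional[str]   # the object for R/W actions; None for Com/Abort

# One alternative per action form: Ri(X) / Wi(X), or Comi / Aborti (hypothetical token).
_ACTION = re.compile(r"([RW])(\d+)\((\w+)\)|(Com|Abort)(\d+)")

def parse_schedule(text: str) -> List[Op]:
    """Turn list notation such as 'R1(X) W1(X) Com1' into a list of Op values.

    Text that matches no action form (spaces, commas) is skipped.
    """
    ops = []
    for m in _ACTION.finditer(text):
        if m.group(1):                      # a read or write of an object
            ops.append(Op(m.group(1), int(m.group(2)), m.group(3)))
        else:                               # a commit or abort
            ops.append(Op(m.group(4), int(m.group(5)), None))
    return ops

D = parse_schedule("R1(X) W1(X) Com1 R2(Y) W2(Y) Com2 R3(Z) W3(Z) Com3")
print(D[0], D[2])   # Op(kind='R', txn=1, obj='X') Op(kind='Com', txn=1, obj=None)
```

Keeping the transaction number and the object as separate fields is what makes the later definitions (conflicts, reads-from, final writes) easy to state as simple comparisons.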

Example

The following is an example of a schedule. In its grid form, the columns represent the different transactions in the schedule D. Schedule D consists of three transactions T1, T2, T3. First T1 reads and writes to object X, and then commits. Then T2 reads and writes to object Y and commits, and finally, T3 reads and writes to object Z and commits. The schedule D can be represented as a list in the following way:

D = R1(X) W1(X) Com1 R2(Y) W2(Y) Com2 R3(Z) W3(Z) Com3

Duration and order of actions

Usually, for the purpose of reasoning about concurrency control in databases, an operation is modelled as ''atomic'', occurring at a point in time, without duration. Real executed operations always have some duration. Operations of transactions in a schedule can interleave (i.e., transactions can be executed concurrently), but the time order between the operations of each individual transaction must remain unchanged. The schedule is in ''partial order'' when the operations of transactions in a schedule interleave (i.e., when the schedule is not serial). The schedule is in ''total order'' when the operations of transactions in a schedule do not interleave (i.e., when the schedule is serial).
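Whether a schedule interleaves, and whether an interleaving keeps each transaction's own order, can both be checked mechanically. The Python sketch below uses a hypothetical `parse` helper for the list notation (with an assumed `Aborti` token) and hypothetical `is_serial` and `per_transaction` functions: a schedule is non-interleaved when each transaction's actions form one contiguous run, and an interleaving is admissible only if every transaction's projection is unchanged. The interleaved schedule `D_mixed` is an illustration, not an example from the text.

```python
import re
from itertools import groupby

def parse(text):
    """Hypothetical helper: 'R1(X) Com1' -> [('R', 1, 'X'), ('Com', 1, None)]."""
    return [(k or c, int(i or j), o or None)
            for k, i, o, c, j in re.findall(r"([RW])(\d+)\((\w+)\)|(Com|Abort)(\d+)", text)]

def is_serial(schedule):
    """True if the transactions do not interleave: each forms a single contiguous run."""
    runs = [txn for txn, _ in groupby(schedule, key=lambda op: op[1])]
    return len(runs) == len(set(runs))

def per_transaction(schedule):
    """Each transaction's own actions, in the order they appear in the schedule."""
    projection = {}
    for op in schedule:
        projection.setdefault(op[1], []).append(op)
    return projection

D = parse("R1(X) W1(X) Com1 R2(Y) W2(Y) Com2 R3(Z) W3(Z) Com3")
# An interleaving of D's transactions (illustrative only):
D_mixed = parse("R1(X) R2(Y) W1(X) W2(Y) Com1 Com2 R3(Z) W3(Z) Com3")

print(is_serial(D), is_serial(D_mixed))                 # True False
print(per_transaction(D) == per_transaction(D_mixed))   # True: each transaction keeps its order
```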

Types of schedule

A complete schedule is one that contains either an abort (a.k.a. rollback) or commit action for each of its transactions. A transaction's last action is either to commit or abort. To maintain atomicity, a transaction must undo all its actions if it is aborted.

Serial

A schedule is serial if the executed transactions are non-interleaved (i.e., a serial schedule is one in which no transaction starts until a running transaction has ended). Schedule D is an example of a serial schedule.

Serializable

A schedule is serializable if it is equivalent (in its outcome) to a serial schedule. In schedule E, the order in which the actions of the transactions are executed is not the same as in D, but in the end, E gives the same result as D. Serializability is used to keep the data items in a consistent state. It is the major criterion for the correctness of concurrent transactions' schedules, and is thus supported in all general purpose database systems. Schedules that are not serializable are likely to generate erroneous outcomes, which can be extremely harmful (e.g., when dealing with money within banks).

Philip A. Bernstein, Vassos Hadzilacos, Nathan Goodman (1987): ''Concurrency Control and Recovery in Database Systems'' (free PDF download), Addison Wesley Publishing Company.

Gerhard Weikum, Gottfried Vossen (2001): ''Transactional Information Systems'', Elsevier.

Maurice Herlihy and J. Eliot B. Moss (1993): ''Transactional memory: architectural support for lock-free data structures.'' Proceedings of the 20th annual international symposium on Computer architecture (ISCA '93), Volume 21, Issue 2, May 1993.

If any specific order between some transactions is requested by an application, then it is enforced independently of the underlying serializability mechanisms. These mechanisms are typically indifferent to any specific order, and generate some unpredictable partial order that is typically compatible with multiple serial orders of these transactions.

Conflicting actions

Two actions are said to be in conflict (conflicting pair) if and only if all of the three following conditions are satisfied:

# The actions belong to different transactions.
# At least one of the actions is a write operation.
# The actions access the same object (read or write).

Equivalently, two actions are conflicting if and only if they are noncommutative, that is, if and only if they form a read-write, write-read, or write-write conflict. The following set of actions is conflicting:

* R1(X), W2(X), W3(X) (3 conflicting pairs)

While the following sets of actions are not conflicting:

* R1(X), R2(X), R3(X)
* R1(X), W2(Y), R3(X)

Reducing conflicts, such as through commutativity, enhances performance because conflicts are the fundamental cause of delays and aborts.

The conflict is materialized if the requested conflicting operation is actually executed. In many cases a conflicting operation requested by a transaction is delayed, or even never executed, typically because of a lock on the operation's object held by another transaction, or because the transaction writes to its own temporary private workspace and materializes the write (copies it to the database itself) only upon commit. As long as a requested conflicting operation has not been executed on the database itself, the conflict is non-materialized; non-materialized conflicts are not represented by an edge in the precedence graph.
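The three conditions translate directly into a predicate over pairs of actions. In this minimal Python sketch, `parse`, `conflicts` and `conflicting_pairs` are hypothetical names; it checks requested conflicts only and says nothing about whether a conflict is materialized. The usage loop reproduces the three example sets above.

```python
import re
from itertools import combinations

def parse(text):
    """Hypothetical helper: 'R1(X), W2(X)' -> [('R', 1, 'X'), ('W', 2, 'X')]."""
    return [(k or c, int(i or j), o or None)
            for k, i, o, c, j in re.findall(r"([RW])(\d+)\((\w+)\)|(Com|Abort)(\d+)", text)]

def conflicts(a, b):
    """The three conditions: different transactions, same object, at least one write."""
    (kind_a, txn_a, obj_a), (kind_b, txn_b, obj_b) = a, b
    return (txn_a != txn_b
            and obj_a is not None and obj_a == obj_b
            and "W" in (kind_a, kind_b))

def conflicting_pairs(schedule):
    """Every conflicting pair (p, q) such that p occurs before q in the schedule."""
    return [(p, q) for p, q in combinations(schedule, 2) if conflicts(p, q)]

for text in ["R1(X), W2(X), W3(X)", "R1(X), R2(X), R3(X)", "R1(X), W2(Y), R3(X)"]:
    print(text, "->", len(conflicting_pairs(parse(text))))
# R1(X), W2(X), W3(X) -> 3
# R1(X), R2(X), R3(X) -> 0
# R1(X), W2(Y), R3(X) -> 0
```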

Conflict equivalence

The schedules S1 and S2 are said to be conflict-equivalent if and only if both of the following two conditions are satisfied:

# Both schedules S1 and S2 involve the same set of transactions such that each transaction has the same actions in the same order.
# Both schedules have the same set of conflicting pairs (such that the actions in each conflicting pair are in the same order).

This is equivalent to requiring that all conflicting operations (i.e., operations in any conflicting pair) are in the same order in both schedules. Equivalently, two schedules are conflict-equivalent if and only if one can be transformed into the other by swapping pairs of non-conflicting operations (whether adjacent or not) while maintaining the order of actions for each transaction. Equivalently, two schedules are conflict-equivalent if and only if one can be transformed into the other by swapping pairs of non-conflicting adjacent operations of different transactions.

Conflict-serializable

A schedule is said to be conflict-serializable when the schedule is conflict-equivalent to one or more serial schedules. Equivalently, a schedule is conflict-serializable if and only if its precedence graph (also named conflict graph or serializability graph) is acyclic when only committed transactions are considered. Note that if the graph is defined to also include uncommitted transactions, then cycles involving uncommitted transactions may occur without conflict serializability violation.
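The two conditions of conflict equivalence can be compared directly. This Python sketch is a minimal illustration under stated assumptions: `parse`, `tag`, `projections`, `ordered_conflicts` and `conflict_equivalent` are hypothetical names, and each action is identified by its transaction and its position within that transaction so that repeated identical actions stay distinct. It is run on the schedules S1, S2 and S3 from the view-equivalence discussion later in this article.

```python
import re
from itertools import combinations

def parse(text):
    """Hypothetical helper: 'R1(X) Com1' -> [('R', 1, 'X'), ('Com', 1, None)]."""
    return [(k or c, int(i or j), o or None)
            for k, i, o, c, j in re.findall(r"([RW])(\d+)\((\w+)\)|(Com|Abort)(\d+)", text)]

def tag(schedule):
    """Label each action with (transaction, position within that transaction)."""
    seen, tagged = {}, []
    for kind, txn, obj in schedule:
        pos = seen.get(txn, 0)
        seen[txn] = pos + 1
        tagged.append(((txn, pos), kind, obj))
    return tagged

def projections(schedule):
    """Condition 1: each transaction's sequence of actions."""
    proj = {}
    for kind, txn, obj in schedule:
        proj.setdefault(txn, []).append((kind, obj))
    return proj

def ordered_conflicts(schedule):
    """Condition 2: the set of conflicting pairs, each recorded in schedule order."""
    pairs = set()
    for (id_a, kind_a, obj_a), (id_b, kind_b, obj_b) in combinations(tag(schedule), 2):
        if (id_a[0] != id_b[0] and obj_a is not None and obj_a == obj_b
                and "W" in (kind_a, kind_b)):
            pairs.add((id_a, id_b))
    return pairs

def conflict_equivalent(s1, s2):
    return (projections(s1) == projections(s2)
            and ordered_conflicts(s1) == ordered_conflicts(s2))

S1 = parse("R1(A) W1(A) R1(B) W1(B) Com1 R2(A) W2(A) R2(B) W2(B) Com2")   # serial
S2 = parse("R1(A) W1(A) R2(A) W2(A) R1(B) W1(B) Com1 R2(B) W2(B) Com2")
S3 = parse("R1(A) W1(A) R2(A) W2(A) R2(B) W2(B) R1(B) W1(B) Com1 Com2")
print(conflict_equivalent(S1, S2), conflict_equivalent(S1, S3))   # True False
```

Since S1 is serial, S2 being conflict-equivalent to it makes S2 conflict-serializable; S3 reverses the order of the conflicts on B.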

The schedule K is conflict-equivalent to a serial schedule, and is therefore conflict-serializable. Conflict serializability can be enforced by restarting any transaction within the cycle in the precedence graph, or by implementing two-phase locking, timestamp ordering, or serializable snapshot isolation.

Michael J. Cahill, Uwe Röhm, Alan D. Fekete (2008): "Serializable isolation for snapshot databases", ''Proceedings of the 2008 ACM SIGMOD international conference on Management of data'', pp. 729-738, Vancouver, Canada, June 2008 (SIGMOD 2008 best paper award).
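A precedence-graph test can be sketched as follows in Python. The names `parse`, `precedence_graph` and `serial_orders` are hypothetical. Only committed transactions become nodes, matching the definition above. An acyclic graph is exactly one that admits a topological order, so instead of running a cycle-detection algorithm the sketch enumerates all orders by brute force; that is exponential and only meant for tiny examples. The schedule `M` is illustrative and shows one schedule compatible with several serial orders.

```python
import re
from itertools import combinations, permutations

def parse(text):
    """Hypothetical helper: 'R1(X) Com1' -> [('R', 1, 'X'), ('Com', 1, None)]."""
    return [(k or c, int(i or j), o or None)
            for k, i, o, c, j in re.findall(r"([RW])(\d+)\((\w+)\)|(Com|Abort)(\d+)", text)]

def precedence_graph(schedule):
    """Nodes: committed transactions. Edge (Ti, Tj): an action of Ti conflicts with a later one of Tj."""
    committed = {txn for kind, txn, _ in schedule if kind == "Com"}
    ops = [op for op in schedule if op[1] in committed and op[2] is not None]
    edges = {(ta, tb)
             for (ka, ta, xa), (kb, tb, xb) in combinations(ops, 2)
             if ta != tb and xa == xb and "W" in (ka, kb)}
    return committed, edges

def serial_orders(schedule):
    """All serial orders of the committed transactions consistent with every edge.

    These are the topological orders of the graph, so the list is empty
    if and only if the graph has a cycle (the schedule is not conflict-serializable).
    """
    nodes, edges = precedence_graph(schedule)
    orders = []
    for order in permutations(sorted(nodes)):
        position = {txn: i for i, txn in enumerate(order)}
        if all(position[a] < position[b] for a, b in edges):
            orders.append(list(order))
    return orders

S2 = parse("R1(A) W1(A) R2(A) W2(A) R1(B) W1(B) Com1 R2(B) W2(B) Com2")
S3 = parse("R1(A) W1(A) R2(A) W2(A) R2(B) W2(B) R1(B) W1(B) Com1 Com2")
M = parse("R1(X) W2(Y) W1(X) W3(X) Com1 Com2 Com3")   # illustrative only
print(serial_orders(S2))   # [[1, 2]]
print(serial_orders(S3))   # []  (T1 -> T2 on A and T2 -> T1 on B form a cycle)
print(serial_orders(M))    # [[1, 2, 3], [1, 3, 2], [2, 1, 3]]
```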

View equivalence

Two schedules S1 and S2 are said to be view-equivalent when the following conditions are satisfied:

# If a transaction reads the initial value of object X in S1, the same transaction also reads the initial value of X in S2.
# If a transaction Ti reads, in S1, a value of object X written by transaction Tj, then Ti also reads the value of X written by Tj in S2.
# If a transaction does the final write of object X in S1, the same transaction also does the final write of X in S2.

Additionally, two view-equivalent schedules must involve the same set of transactions such that each transaction has the same actions in the same order.

In the example below, the schedules S1 and S2 are view-equivalent, but neither S1 nor S2 is view-equivalent to the schedule S3. S3 fails the conditions for view equivalence to S1 and S2 for the following reasons:

# It fails the first condition because T1 reads the initial value of B in S1 and S2, but T2 reads the initial value of B in S3.
# It fails the second condition because T2 reads the value of B written by T1 in S1 and S2, but T1 reads the value of B written by T2 in S3.
# It fails the third condition because T2 does the final write of B in S1 and S2, but T1 does the final write of B in S3.

To quickly analyze whether two schedules are view-equivalent, write both schedules as a list in which each action is annotated (here in brackets) with the view-equivalence condition it matches. The schedules are view-equivalent if and only if every action has the same annotation (or lack thereof) in both schedules:

* S1: R1(A) [initial read], W1(A), R1(B) [initial read], W1(B), Com1, R2(A) [written by T1], W2(A) [final write], R2(B) [written by T1], W2(B) [final write], Com2
* S2: R1(A) [initial read], W1(A), R2(A) [written by T1], W2(A) [final write], R1(B) [initial read], W1(B), Com1, R2(B) [written by T1], W2(B) [final write], Com2
* S3: R1(A) [initial read], W1(A), R2(A) [written by T1], W2(A) [final write], R2(B) [initial read], W2(B), R1(B) [written by T2], W1(B) [final write], Com1, Com2

View-serializable

A schedule is view-serializable if it is view-equivalent to some serial schedule. Every conflict-serializable schedule is also view-serializable. Notice that the above example (which is the same as the example in the discussion of conflict-serializable) is both view-serializable and conflict-serializable at the same time. There are, however, view-serializable schedules that are not conflict-serializable; every such schedule contains a blind write, that is, a write by a transaction to an object it has not read.

Such a schedule is not conflict-serializable, but it is view-serializable since it has a view-equivalent serial schedule. Since determining whether a schedule is view-serializable is NP-complete, view-serializability has little practical interest.
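The annotation technique above can be automated: record, for each read, whose write it sees (or the initial value), and for each object, who writes it last. This Python sketch assumes every transaction commits and writes each object at most once, as in the examples; `parse`, `view`, `per_transaction`, `view_equivalent` and `view_serial_orders` are hypothetical names. The search over all n! serial orders is brute force and only illustrates the definition; it says nothing about how the NP-completeness result is proved. `G` is an illustrative blind-write schedule, not one from the text: R1(X) before W2(X) and W2(X) before W1(X) give a precedence cycle, so it is not conflict-serializable.

```python
import re
from itertools import permutations

def parse(text):
    """Hypothetical helper: 'R1(X) Com1' -> [('R', 1, 'X'), ('Com', 1, None)]."""
    return [(k or c, int(i or j), o or None)
            for k, i, o, c, j in re.findall(r"([RW])(\d+)\((\w+)\)|(Com|Abort)(\d+)", text)]

def view(schedule):
    """Which transaction's write each read sees (None = initial value), and the final writers."""
    last_writer, reads_from, read_count = {}, {}, {}
    for kind, txn, obj in schedule:
        if kind == "R":
            n = read_count.get((txn, obj), 0)        # distinguishes repeated reads
            read_count[(txn, obj)] = n + 1
            reads_from[(txn, obj, n)] = last_writer.get(obj)
        elif kind == "W":
            last_writer[obj] = txn
    return reads_from, last_writer                   # last_writer now holds the final writes

def per_transaction(schedule):
    proj = {}
    for op in schedule:
        proj.setdefault(op[1], []).append(op)
    return proj

def view_equivalent(s1, s2):
    return per_transaction(s1) == per_transaction(s2) and view(s1) == view(s2)

def view_serial_orders(schedule):
    """Serial orders view-equivalent to the schedule (brute force over n! orders)."""
    found = []
    for order in permutations(sorted(per_transaction(schedule))):
        serial = [op for txn in order for op in schedule if op[1] == txn]
        if view_equivalent(schedule, serial):
            found.append(list(order))
    return found

S1 = parse("R1(A) W1(A) R1(B) W1(B) Com1 R2(A) W2(A) R2(B) W2(B) Com2")
S2 = parse("R1(A) W1(A) R2(A) W2(A) R1(B) W1(B) Com1 R2(B) W2(B) Com2")
S3 = parse("R1(A) W1(A) R2(A) W2(A) R2(B) W2(B) R1(B) W1(B) Com1 Com2")
G = parse("R1(X) W2(X) W1(X) W3(X) Com1 Com2 Com3")   # illustrative blind writes by T2, T3
print(view_equivalent(S1, S2), view_equivalent(S1, S3))   # True False
print(view_serial_orders(G))                              # [[1, 2, 3]]
```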

Recoverable

In a recoverable schedule, transactions only commit after all transactions whose changes they read have committed. A schedule becomes unrecoverable if a transaction reads and relies on changes from another transaction, and then the reading transaction commits while the other transaction aborts.

Schedules F and F2 are recoverable. The schedule F is recoverable because T1 commits before T2, which makes the value read by T2 correct; T2 can then commit itself. In the F2 schedule, if T1 aborts, T2 has to abort as well, because the value of A it read is incorrect. In both cases, the database is left in a consistent state.

Schedule J is unrecoverable because T2 committed before T1 despite previously reading the value written by T1. Because T1 aborted after T2 committed, the value read by T2 is wrong. Because a transaction cannot be rolled back after it commits, the schedule is unrecoverable.

Cascadeless

Cascadeless schedules (a.k.a. "Avoiding Cascading Aborts (ACA) schedules") are schedules which avoid cascading aborts by disallowing dirty reads. Cascading aborts occur when one transaction's abort causes another transaction to abort because it read and relied on the first transaction's changes to an object. A dirty read occurs when a transaction reads data written by another transaction that has not yet committed.

The following examples are the same as the ones in the discussion on recoverable schedules. In this example, although F2 is recoverable, it does not avoid cascading aborts: if T1 aborts, T2 will have to be aborted too in order to maintain the correctness of the schedule, as T2 has already read the uncommitted value written by T1.

The following is a recoverable schedule which avoids cascading aborts. Note, however, that the update of A by T1 is always lost (since T1 is aborted). Note also that this schedule would not be serializable if T1 were committed. Avoiding cascading aborts is sufficient but not necessary for a schedule to be recoverable.

Strict

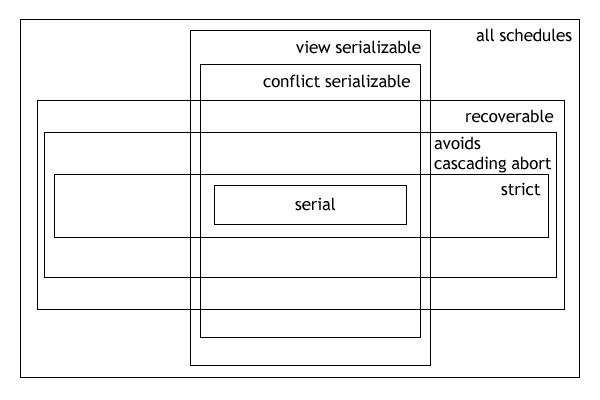

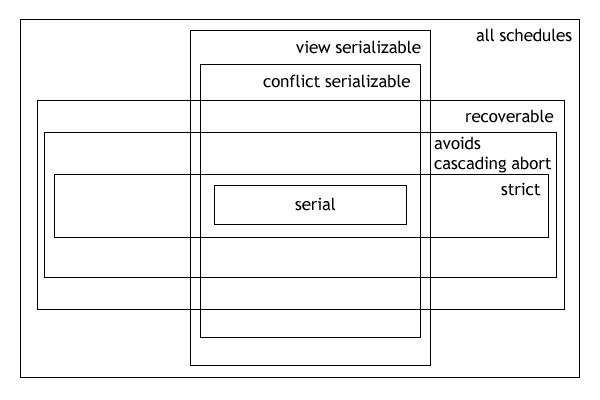

A schedule is strict if for any two transactions T1, T2, if a write operation of T1 precedes a ''conflicting'' operation of T2 (either read or write), then the commit or abort event of T1 also precedes that conflicting operation of T2. For example, the schedule F3 above is strict. Any strict schedule is cascadeless, but not the converse. Strictness allows efficient recovery of databases from failure.

Serializability class relationships

The following expressions illustrate the hierarchical (containment) relationships between serializability and recoverability classes:

* Serial ⊂ conflict-serializable ⊂ view-serializable ⊂ all schedules

* Serial ⊂ strict ⊂ cascadeless (ACA) ⊂ recoverable ⊂ all schedules

The Venn diagram (below) illustrates the above clauses graphically.
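The recoverability classes can be checked in a single pass over a schedule. This Python sketch is a minimal illustration under a simplifying assumption: an abort undoes that transaction's writes, so a later read sees the most recent write by a transaction that has not aborted. The names `parse` and `classify`, and the `Aborti` token, are hypothetical. The sample schedules are also hypothetical, loosely modelled on the prose descriptions of J, F2 and F; the original schedules are not given here in list form.

```python
import re

def parse(text):
    """Hypothetical helper: 'W1(A) Abort1' -> [('W', 1, 'A'), ('Abort', 1, None)]."""
    return [(k or c, int(i or j), o or None)
            for k, i, o, c, j in re.findall(r"([RW])(\d+)\((\w+)\)|(Com|Abort)(\d+)", text)]

def classify(schedule):
    """Return (recoverable, cascadeless, strict) for a schedule of R/W/Com/Abort actions."""
    writers = {}          # object -> transactions whose writes are still in effect, oldest first
    committed, finished = set(), set()
    reads_from = set()    # (reader, writer) pairs with reader != writer
    recoverable = cascadeless = strict = True
    for kind, txn, obj in schedule:
        if kind in ("R", "W"):
            history = writers.setdefault(obj, [])
            # Strict: every earlier writer of obj must already have committed or aborted.
            if any(w != txn and w not in finished for w in history):
                strict = False
            if kind == "R" and history and history[-1] != txn:
                source = history[-1]
                if source not in committed:
                    cascadeless = False                  # a dirty read
                reads_from.add((txn, source))
            elif kind == "W":
                history.append(txn)
        elif kind == "Com":
            # Recoverable: every transaction this one read from must already be committed.
            if any(r == txn and w not in committed for r, w in reads_from):
                recoverable = False
            committed.add(txn)
            finished.add(txn)
        elif kind == "Abort":
            finished.add(txn)
            for history in writers.values():           # undo the aborted transaction's writes
                history[:] = [w for w in history if w != txn]
    return recoverable, cascadeless, strict

print(classify(parse("W1(A) R2(A) Com2 Abort1")))       # (False, False, False), like J
print(classify(parse("W1(A) R2(A) Abort1 Abort2")))     # (True, False, False), like F2
print(classify(parse("W1(A) R2(A) Com1 Com2")))         # (True, False, False), like F
print(classify(parse("W1(A) W2(A) Com1 Com2")))         # (True, True, False)
print(classify(parse("W1(A) Com1 R2(A) W2(A) Com2")))   # (True, True, True)
```

In every sample, strict implies cascadeless and cascadeless implies recoverable, consistent with the containment chain above; the fourth sample shows a cascadeless schedule that is not strict.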

See also

* Schedule (project management)

* Strong strict two-phase locking (SS2PL or Rigorousness).

* Making snapshot isolation serializable in Snapshot isolation.

* Global serializability, where the ''Global serializability problem'' and its proposed solutions are described.

* Linearizability, a more general concept in concurrent computing.
