Method of averaging

In mathematics, more specifically in dynamical systems, the method of averaging (also called averaging theory) exploits systems containing a separation of time scales: a ''fast oscillation'' versus a ''slow drift''. It suggests averaging over a given amount of time in order to iron out the fast oscillations and observe the qualitative behavior of the resulting dynamics. The approximate solution holds over a finite time that is inversely proportional to the parameter denoting the slow time scale. This leads to the customary trade-off between how good the approximate solution is and how long it stays close to the original solution.

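More precisely, the system has the form

$$\dot{x} = \varepsilon f(x, t, \varepsilon), \qquad 0 \leq \varepsilon \ll 1,$$

for a phase space variable $x$. The ''fast oscillation'' is given by $f$, versus a ''slow drift'' of $\dot{x}$. With $f$ periodic in $t$ with period $T$, the averaging method yields an autonomous dynamical system

$$\dot{y} = \varepsilon \frac{1}{T} \int_0^T f(y, s, 0)\, ds =: \varepsilon \bar{f}(y),$$

which approximates the solution curves of $\dot{x}$ inside a connected and compact region of the phase space over times of order $1/\varepsilon$.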
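As a minimal sketch of the averaging formula, the Python helper below (the name `averaged_field` is hypothetical) approximates $\bar{f}(y)$ with the equally spaced rectangle rule, which is very accurate for smooth integrands that are periodic in $s$. It assumes $f(y, s, 0)$ is supplied as a Python function of the state (a sequence) and the time, returning a list. The sample field `logistic_forced`, also hypothetical, is $y(1 - y) + \sin s$, whose average should be $y(1 - y)$.

```python
import math
from typing import Callable, List, Sequence


def averaged_field(
    f: Callable[[Sequence[float], float], List[float]],
    y: Sequence[float],
    period: float,
    n: int = 256,
) -> List[float]:
    """Approximate f_bar(y) = (1/T) * integral_0^T f(y, s) ds.

    Equally spaced rectangle rule: exact (up to rounding) for trigonometric
    polynomials in s of degree below n, and very accurate for smooth
    periodic integrands.
    """
    h = period / n
    total = [0.0] * len(y)
    for k in range(n):
        for i, value in enumerate(f(y, k * h)):
            total[i] += value
    return [v / n for v in total]


def logistic_forced(y: Sequence[float], s: float) -> List[float]:
    """Hypothetical sample field f(y, s, 0) = y(1 - y) + sin(s)."""
    return [y[0] * (1.0 - y[0]) + math.sin(s)]


# The sin(s) term averages out, leaving y(1 - y), i.e. about 0.21 at y = 0.3.
print(averaged_field(logistic_forced, [0.3], 2 * math.pi))
```

The rectangle rule is used instead of a general-purpose quadrature because, for periodic integrands, equally spaced samples over one full period already converge very quickly.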

Under the validity of this averaging technique, the asymptotic behavior of the original system is captured by the dynamical equation for $y$. In this way, qualitative methods for autonomous dynamical systems may be employed to analyze the equilibria and more complex structures, such as slow manifolds and invariant manifolds, as well as their stability in the phase space of the averaged system.

In addition, in a physical application it might be reasonable or natural to replace a mathematical model, given in the form of the differential equation for $\dot{x}$, with the corresponding averaged system for $\dot{y}$, in order to use the averaged system to make a prediction and then test that prediction against the results of a physical experiment.

The averaging method has a long history, deeply rooted in perturbation problems that arose in celestial mechanics.

First example

Consider a perturbed logistic growth

$$\dot{x} = \varepsilon \bigl(x(1 - x) + \sin t\bigr), \qquad x \in \mathbb{R}, \quad 0 \leq \varepsilon \ll 1,$$

and the averaged equation

$$\dot{y} = \varepsilon\, y(1 - y), \qquad y \in \mathbb{R}.$$

The purpose of the method of averaging is to tell us the qualitative behavior of the vector field when we average it over a period of time. It guarantees that the solution $y(t)$ approximates $x(t)$ for times $t = \mathcal{O}(1/\varepsilon)$. Exceptionally, in this example the approximation is even better: it is valid for all times. This is discussed in a section below.
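A quick numerical check of the $\mathcal{O}(\varepsilon)$ accuracy on the time scale $1/\varepsilon$ is sketched below. It assumes the perturbed logistic equation $\dot{x} = \varepsilon(x(1 - x) + \sin t)$, the averaged equation $\dot{y} = \varepsilon y(1 - y)$ and the arbitrarily chosen initial value $x(0) = y(0) = 0.5$. The helper names `rk4` and `max_averaging_error` are hypothetical, and a hand-written classical Runge-Kutta scheme stands in for a production ODE solver.

```python
import math
from typing import Callable, List, Tuple


def rk4(rhs: Callable[[float, float], float], x0: float,
        t_end: float, dt: float) -> List[Tuple[float, float]]:
    """Classical 4th-order Runge-Kutta for a scalar ODE x' = rhs(t, x).

    Returns the (t, x) samples on a uniform grid from 0 to t_end.
    """
    n = int(round(t_end / dt))
    x = x0
    out = [(0.0, x)]
    for i in range(n):
        t = i * dt
        k1 = rhs(t, x)
        k2 = rhs(t + dt / 2, x + dt / 2 * k1)
        k3 = rhs(t + dt / 2, x + dt / 2 * k2)
        k4 = rhs(t + dt, x + dt * k3)
        x += dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        out.append(((i + 1) * dt, x))
    return out


def max_averaging_error(eps: float, x0: float = 0.5, dt: float = 0.01) -> float:
    """Largest |x(t) - y(t)| on 0 <= t <= 1/eps for the perturbed logistic example."""
    def original(t: float, x: float) -> float:
        return eps * (x * (1.0 - x) + math.sin(t))

    def averaged(t: float, y: float) -> float:
        return eps * y * (1.0 - y)

    t_end = 1.0 / eps
    xs = rk4(original, x0, t_end, dt)
    ys = rk4(averaged, x0, t_end, dt)
    return max(abs(x - y) for (_, x), (_, y) in zip(xs, ys))


for eps in (0.02, 0.01):
    print(f"eps = {eps}: max |x - y| = {max_averaging_error(eps):.4f}")
```

Halving $\varepsilon$ should roughly halve the maximal deviation, consistent with an error of order $\varepsilon$.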

Definitions

We assume the vector field $f : \mathbb{R}^n \times \mathbb{R} \times \mathbb{R}^+ \to \mathbb{R}^n$ to be of differentiability class $C^r$ with $r \geq 2$ (or we will simply say smooth), which we denote $f \in C^r(\mathbb{R}^n \times \mathbb{R} \times \mathbb{R}^+; \mathbb{R}^n)$. We expand this time-dependent vector field in a Taylor series in powers of $\varepsilon$ with remainder $f^{[k+1]}(x, t, \varepsilon)$. We introduce the following notation:

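$$f(x, t, \varepsilon) = f^0(x, t) + \varepsilon f^1(x, t) + \dots + \varepsilon^k f^k(x, t) + \varepsilon^{k+1} f^{[k+1]}(x, t, \varepsilon),$$

where $f^j = \dfrac{1}{j!} \dfrac{\partial^j f}{\partial \varepsilon^j}(x, t, 0)$ is the $j$-th Taylor coefficient, $0 \leq j \leq k$. As we are concerned with averaging problems, $f^0(x, t)$ is in general zero, so we will be interested in vector fields given by

$$f(x, t, \varepsilon) = \varepsilon f^{[1]}(x, t, \varepsilon) = \varepsilon f^1(x, t) + \varepsilon^2 f^{[2]}(x, t, \varepsilon).$$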

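Furthermore, we say that the following initial value problem is in standard form:

$$\dot{x} = \varepsilon f^1(x, t) + \varepsilon^2 f^{[2]}(x, t, \varepsilon), \qquad x(0, \varepsilon) =: x_0 \in D \subseteq \mathbb{R}^n, \quad 0 \leq \varepsilon \ll 1.$$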

Theorem: averaging in the periodic case

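Let $D \subset \mathbb{R}^n$ be connected and bounded, and let $\varepsilon_0 > 0$. Suppose $f^1 \in C^r(D \times \mathbb{R}; \mathbb{R}^n)$ is periodic in $t$ with period $T$ and $f^{[2]} \in C^r(D \times \mathbb{R} \times \mathbb{R}^+; \mathbb{R}^n)$, with $r \geq 2$, both bounded on bounded sets. Let $x(t, \varepsilon)$ be the solution of the original system (a non-autonomous dynamical system)

$$\dot{x} = \varepsilon f^1(x, t) + \varepsilon^2 f^{[2]}(x, t, \varepsilon), \qquad x(0, \varepsilon) = x_0 \in D, \quad 0 \leq \varepsilon \ll 1,$$

and let $y(t, \varepsilon)$ be the solution of the ''averaged system'' (an autonomous dynamical system)

$$\dot{y} = \varepsilon \frac{1}{T} \int_0^T f^1(y, s)\, ds =: \varepsilon \bar{f}^1(y), \qquad y(0, \varepsilon) = x_0.$$

Then there exist constants $L > 0$ and $c > 0$ such that

$$\| x(t, \varepsilon) - y(t, \varepsilon) \| < c\, \varepsilon \qquad \text{for } 0 \leq \varepsilon \leq \varepsilon_0 \text{ and } 0 \leq t \leq L/\varepsilon.$$

Remarks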

* There are two approximations in what is called the ''first approximation'' estimate: reduction to the average of the vector field, and neglect of the $\mathcal{O}(\varepsilon^2)$ terms.
* Uniformity with respect to the initial condition $x_0$: varying $x_0$ affects the estimates of $L$ and $c$. The proof and a discussion of this can be found in J. Murdock's book.
* Reduction of regularity: there is a more general form of this theorem which requires only $f^1$ to be Lipschitz and $f^{[2]}$ to be continuous. It is a more recent proof and can be found in Sanders ''et al.''. The theorem statement presented here is due to the proof framework proposed by Krylov and Bogoliubov, which is based on introducing a near-identity transformation. The advantage of this method is that it extends to more general settings such as infinite-dimensional systems, for example partial differential equations or delay differential equations.
* J. Hale presents generalizations to almost periodic vector fields.

Strategy of the proof

Krylov and Bogoliubov realized that the slow dynamics of the system determine the leading order of the asymptotic solution. To prove it, they proposed a ''near-identity transformation'', a change of coordinates with its own time scale that transforms the original system into the averaged one.

Sketch of the proof

# Determination of a near-identity transformation: the smooth mapping $U(y, t)$, where $U$ is assumed to be regular enough and $T$-periodic in $t$. The proposed change of coordinates is $x = y + \varepsilon U(y, t)$.
# Choose an appropriate $U$ solving the homological equation of averaging theory: $\dfrac{\partial U}{\partial t} = f^1(y, t) - \bar{f}^1(y)$.
# The change of coordinates carries the original system to $\dot{y} = \varepsilon \bar{f}^1(y) + \varepsilon^2 f^{[2]}_{\star}(y, t, \varepsilon)$.
# Estimate the error due to truncation and compare with the original variable.

Non-autonomous class of systems: more examples

Throughout the history of the averaging technique, one class of systems has been studied extensively; it provides the meaningful examples discussed below. The class is given by

$$\ddot{z} + z = \varepsilon g(z, \dot{z}, t),$$

where $g$ is smooth. Writing $z_1 = z$ and $z_2 = \dot{z}$, this is a linear system with a small nonlinear perturbation $\begin{pmatrix} 0 \\ \varepsilon g(z_1, z_2, t) \end{pmatrix}$:

$$\begin{pmatrix} \dot{z}_1 \\ \dot{z}_2 \end{pmatrix} = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix} \begin{pmatrix} z_1 \\ z_2 \end{pmatrix} + \begin{pmatrix} 0 \\ \varepsilon g(z_1, z_2, t) \end{pmatrix},$$

which differs from the standard form. Hence a transformation is needed to bring it explicitly into standard form. We can change coordinates using the variation of constants method. The unperturbed system, i.e. $\varepsilon = 0$, is

$$\dot{z} = A z, \qquad A = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix},$$

which has the fundamental solution

$$\Phi(t) = e^{At} = \begin{pmatrix} \cos t & \sin t \\ -\sin t & \cos t \end{pmatrix},$$

corresponding to a rotation. The time-dependent change of coordinates is then $z(t) = \Phi(t) x$, where $x$ denotes the coordinates of the standard form.

Taking the time derivative of both sides and inverting the fundamental matrix, we obtain

$$\dot{x} = \varepsilon\, e^{-At} \begin{pmatrix} 0 \\ g\bigl(e^{At} x, t\bigr) \end{pmatrix}.$$
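The change of coordinates can be sketched in a few lines of Python, assuming $g$ is given as a function `g(z, zdot, t)`; the helper names `fundamental`, `matvec`, `standard_form_rhs` and `sample_g` are hypothetical. Because $\Phi(t)$ is a rotation, its inverse is simply $\Phi(-t)$. The closing lines check, for the sample nonlinearity $g = (1 - z^2)\dot{z}$ and arbitrary test values, that differentiating $z = \Phi(t)x$ recovers $\dot{z}_1 = z_2$ and $\dot{z}_2 = -z_1 + \varepsilon g$.

```python
import math
from typing import Callable, Tuple

Vec = Tuple[float, float]
Mat = Tuple[Vec, Vec]


def fundamental(t: float) -> Mat:
    """Phi(t) = exp(A t) for A = [[0, 1], [-1, 0]] (a rotation matrix)."""
    c, s = math.cos(t), math.sin(t)
    return ((c, s), (-s, c))


def matvec(m: Mat, v: Vec) -> Vec:
    return (m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1])


def standard_form_rhs(x: Vec, t: float, eps: float,
                      g: Callable[[float, float, float], float]) -> Vec:
    """x' = eps * exp(-A t) (0, g(exp(A t) x, t)) for z'' + z = eps * g(z, z', t)."""
    z1, z2 = matvec(fundamental(t), x)  # original coordinates z = Phi(t) x
    return matvec(fundamental(-t), (0.0, eps * g(z1, z2, t)))  # Phi(t)^-1 = Phi(-t)


def sample_g(z: float, zdot: float, t: float) -> float:
    """Hypothetical sample nonlinearity g(z, z', t) = (1 - z^2) z'."""
    return (1.0 - z * z) * zdot


# Consistency check at arbitrary test values: d/dt [Phi(t) x] = A Phi(t) x + Phi(t) x'.
x, t, eps = (0.3, -1.2), 0.7, 0.1
z = matvec(fundamental(t), x)
phi_xdot = matvec(fundamental(t), standard_form_rhs(x, t, eps, sample_g))
zdot = (z[1] + phi_xdot[0], -z[0] + phi_xdot[1])  # A z = (z2, -z1)
print(zdot, (z[1], -z[0] + eps * sample_g(z[0], z[1], t)))
```

The two printed pairs should agree up to rounding, since $\Phi(t)\dot{x} = (0, \varepsilon g)$ by construction.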

Remarks

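* The same can be done for time-dependent linear parts. Although the fundamental solution may be non-trivial to write down explicitly, the procedure is similar. See Sanders ''et al.'' for further details.
* If the eigenvalues of $A$ are not all purely imaginary, this is called the hyperbolicity condition. In this case the perturbation equation may present serious problems even if $g$ is bounded, since the solution grows exponentially fast. Qualitatively, however, we may still be able to determine the asymptotic solution, for example through Hartman-Grobman type results.
* Occasionally, to obtain standard forms that are easier to work with, we may choose a rotating reference frame, namely polar coordinates, given by

$$z = r \sin(t - \phi), \qquad \dot{z} = r \cos(t - \phi),$$

which also determines the initial condition and defines the system

$$\dot{r} = \varepsilon \cos(t - \phi)\, g\bigl(r \sin(t - \phi), r \cos(t - \phi), t\bigr), \qquad \dot{\phi} = \frac{\varepsilon}{r} \sin(t - \phi)\, g\bigl(r \sin(t - \phi), r \cos(t - \phi), t\bigr).$$

Averaging it (with $g$ assumed $2\pi$-periodic in $t$), as long as a neighborhood of the origin is excluded since polar coordinates fail there, yields

$$\dot{\bar{r}} = \varepsilon \bar{f}_1(\bar{r}, \bar{\phi}), \qquad \dot{\bar{\phi}} = \varepsilon \bar{f}_2(\bar{r}, \bar{\phi}),$$

where the averaged vector field is

$$\bar{f}_1(\bar{r}, \bar{\phi}) = \frac{1}{2\pi} \int_0^{2\pi} \cos(s - \bar{\phi})\, g\bigl(\bar{r} \sin(s - \bar{\phi}), \bar{r} \cos(s - \bar{\phi}), s\bigr)\, ds,$$

$$\bar{f}_2(\bar{r}, \bar{\phi}) = \frac{1}{2\pi \bar{r}} \int_0^{2\pi} \sin(s - \bar{\phi})\, g\bigl(\bar{r} \sin(s - \bar{\phi}), \bar{r} \cos(s - \bar{\phi}), s\bigr)\, ds.$$

Example: Misleading averaging results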

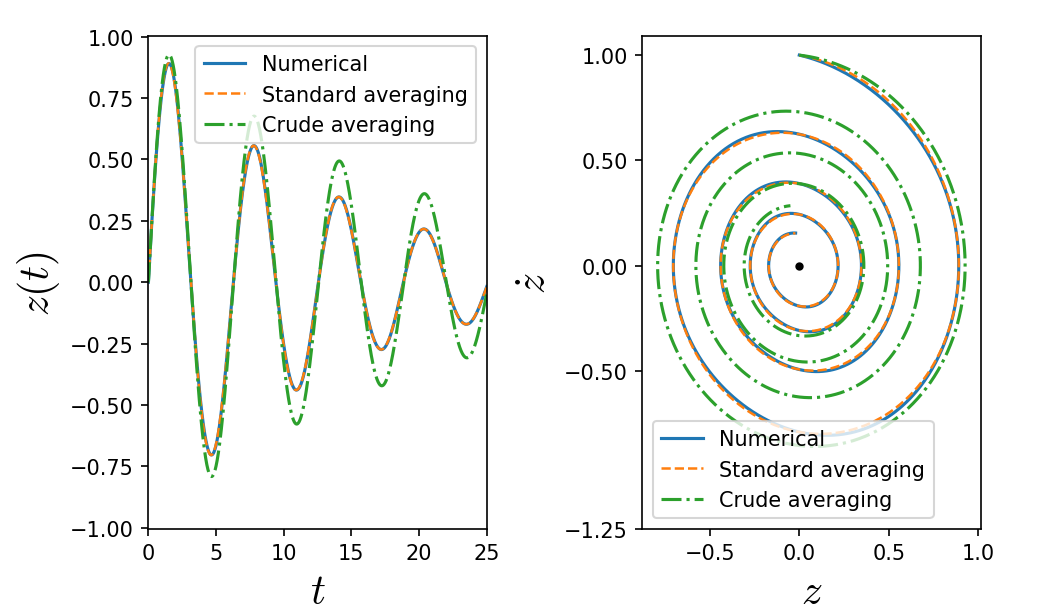

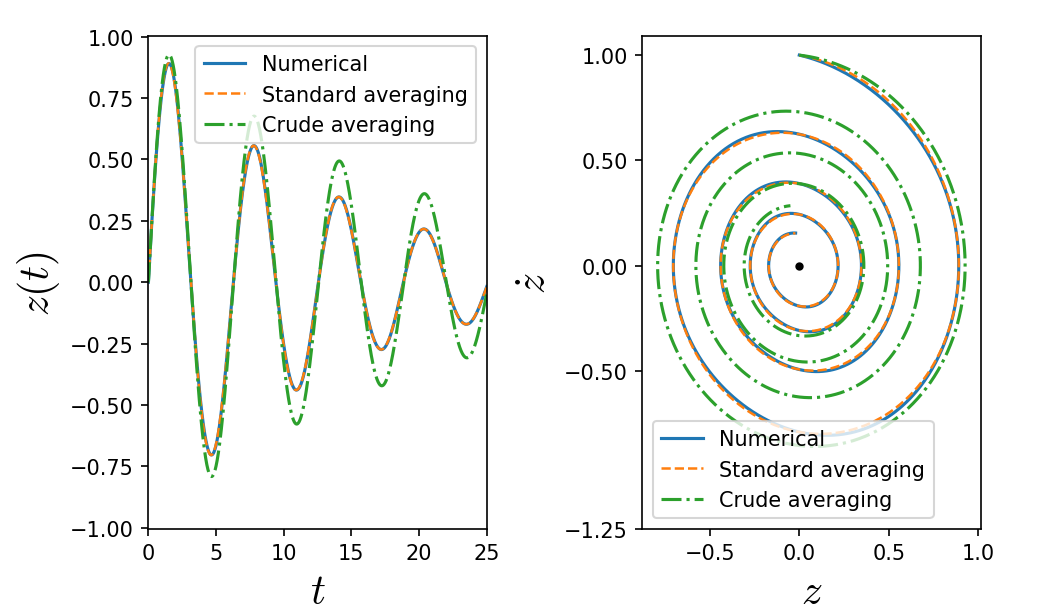

The method relies on some assumptions and restrictions. These limitations play an important role when one averages an original equation that is not in standard form, as the following counterexample shows. It is meant to discourage such hurried averaging:

$$\ddot{z} + 4 \varepsilon \cos^2(t)\, \dot{z} + z = 0, \qquad z(0) = 0, \quad \dot{z}(0) = 1,$$

where we put $g(z, \dot{z}, t) = -4 \cos^2(t)\, \dot{z}$ following the previous notation.

This system corresponds to a damped harmonic oscillator where the damping term oscillates between $0$ and $4\varepsilon$. Averaging the friction term over one cycle of $2\pi$ yields the equation:

$$\ddot{\bar{z}} + 2 \varepsilon \dot{\bar{z}} + \bar{z} = 0, \qquad \bar{z}(0) = 0, \quad \dot{\bar{z}}(0) = 1.$$

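The solution is

$$\bar{z}(t) = \frac{1}{(1 - \varepsilon^2)^{1/2}}\, e^{-\varepsilon t} \sin\bigl((1 - \varepsilon^2)^{1/2}\, t\bigr),$$

whose rate of convergence to the origin is $\varepsilon$. The averaged system obtained from the standard form (in polar coordinates) yields:

$$\dot{\bar{r}} = -\tfrac{1}{2} \varepsilon \bar{r} \bigl(2 + \cos(2\bar{\phi})\bigr), \qquad \dot{\bar{\phi}} = \tfrac{1}{2} \varepsilon \sin(2\bar{\phi}), \qquad \bar{r}(0) = 1, \quad \bar{\phi}(0) = 0,$$

which, written in rectangular coordinates, shows explicitly that the rate of convergence to the origin is in fact $\tfrac{3}{2}\varepsilon$, differing from the previous crude averaged system:

$$y(t) = e^{-\frac{3}{2}\varepsilon t} \sin t.$$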
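The difference between the two decay rates can be observed numerically. The sketch below, with hypothetical helper names, integrates the original oscillator $\ddot{z} + 4\varepsilon\cos^2(t)\dot{z} + z = 0$ with a hand-written Runge-Kutta scheme up to $t = 2/\varepsilon$ and estimates the exponential rate of $r = \sqrt{z^2 + \dot{z}^2}$. The choice $\varepsilon = 0.01$ and the step size are assumptions made for illustration.

```python
import math
from typing import Callable, List


def rk4_final(rhs: Callable[[float, List[float]], List[float]],
              state: List[float], t_end: float, dt: float) -> List[float]:
    """Classical 4th-order Runge-Kutta for a first-order system; returns the final state."""
    n = int(round(t_end / dt))
    s = list(state)
    for i in range(n):
        t = i * dt
        k1 = rhs(t, s)
        k2 = rhs(t + dt / 2, [a + dt / 2 * b for a, b in zip(s, k1)])
        k3 = rhs(t + dt / 2, [a + dt / 2 * b for a, b in zip(s, k2)])
        k4 = rhs(t + dt, [a + dt * b for a, b in zip(s, k3)])
        s = [a + dt / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
             for a, b1, b2, b3, b4 in zip(s, k1, k2, k3, k4)]
    return s


def observed_decay_rate(eps: float, dt: float = 0.01) -> float:
    """Rate lam in r(t) ~ exp(-lam t) for z'' + 4 eps cos^2(t) z' + z = 0,
    with z(0) = 0, z'(0) = 1 (so r(0) = 1), estimated at t = 2/eps."""
    def rhs(t: float, s: List[float]) -> List[float]:
        z, zdot = s
        return [zdot, -4.0 * eps * math.cos(t) ** 2 * zdot - z]

    t_end = 2.0 / eps
    z, zdot = rk4_final(rhs, [0.0, 1.0], t_end, dt)
    return -math.log(math.hypot(z, zdot)) / t_end


eps = 0.01
print(observed_decay_rate(eps) / eps)  # near 1.5 (standard form), not 1.0 (crude average)
```

The estimated rate divided by $\varepsilon$ should come out near $3/2$ rather than $1$, matching the averaged system obtained from the standard form instead of the crude averaged equation.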

Example: Van der Pol Equation

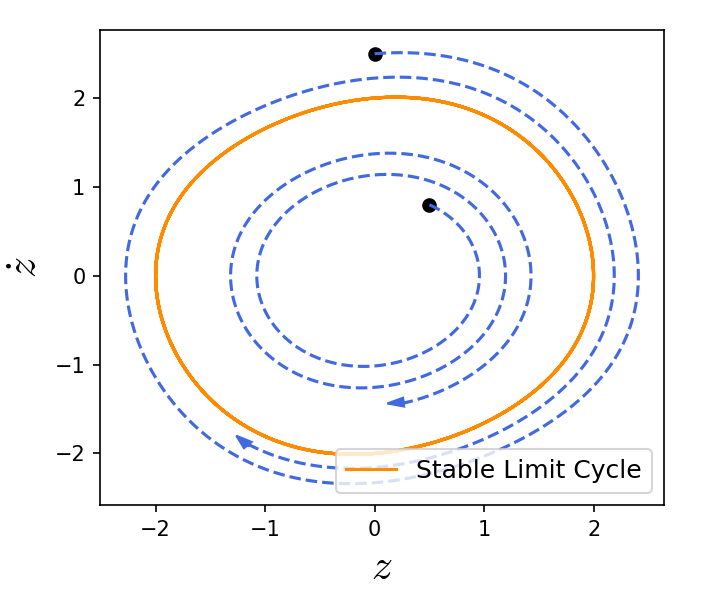

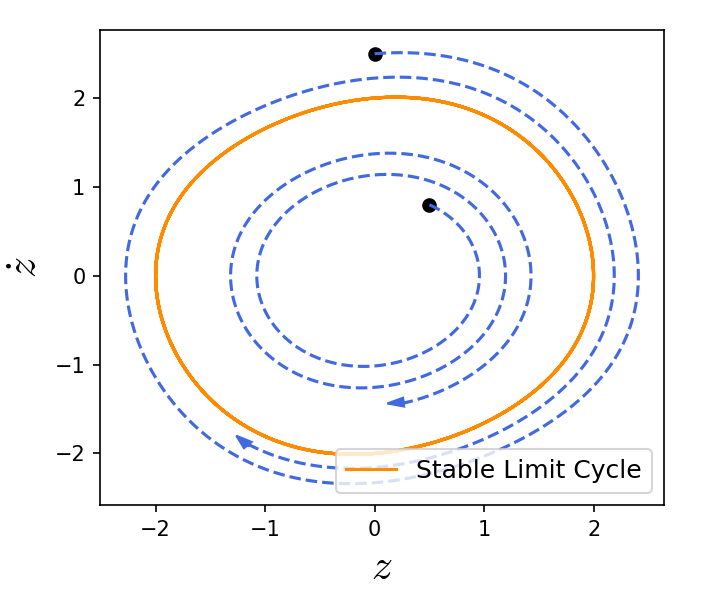

Van der Pol was concerned with obtaining approximate solutions for equations of the type

$$\ddot{z} + \varepsilon (z^2 - 1) \dot{z} + z = 0,$$

where $g(z, \dot{z}, t) = (1 - z^2) \dot{z}$ following the previous notation. This system is named the Van der Pol oscillator. Applying periodic averaging to this nonlinear oscillator gives qualitative knowledge of the phase space without solving the system explicitly.

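The averaged system is

$$\dot{\bar{r}} = \tfrac{1}{2} \varepsilon \bar{r} \left(1 - \tfrac{1}{4} \bar{r}^2\right), \qquad \dot{\bar{\phi}} = 0,$$

and we can analyze the fixed points and their stability. There is an unstable fixed point at the origin and a stable limit cycle represented by $\bar{r} = 2$.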
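The averaged right-hand side can also be evaluated numerically from the polar-coordinate averages $\bar{f}_1 = \frac{1}{2\pi}\int_0^{2\pi}\cos(s - \bar{\phi})\, g\, ds$ and $\bar{f}_2 = \frac{1}{2\pi\bar{r}}\int_0^{2\pi}\sin(s - \bar{\phi})\, g\, ds$, with $g$ evaluated at $(\bar{r}\sin(s - \bar{\phi}), \bar{r}\cos(s - \bar{\phi}), s)$. The Python sketch below, with the hypothetical helper `averaged_polar_field`, assumes $g$ is $2\pi$-periodic in $t$ and $\bar{r} > 0$; for the Van der Pol nonlinearity it should reproduce $\bar{f}_1 = \tfrac{1}{2}\bar{r}(1 - \tfrac{1}{4}\bar{r}^2)$ and $\bar{f}_2 = 0$.

```python
import math
from typing import Callable, Tuple


def averaged_polar_field(g: Callable[[float, float, float], float],
                         r: float, phi: float, n: int = 256) -> Tuple[float, float]:
    """Rectangle-rule approximation of (f1_bar, f2_bar) for z'' + z = eps * g(z, z', t).

    Assumes g is 2*pi-periodic in t and r > 0 (polar coordinates fail at r = 0).
    """
    f1 = f2 = 0.0
    for k in range(n):
        s = 2 * math.pi * k / n
        theta = s - phi
        gv = g(r * math.sin(theta), r * math.cos(theta), s)
        f1 += math.cos(theta) * gv
        f2 += math.sin(theta) * gv
    return f1 / n, f2 / (n * r)


def vdp_g(z: float, zdot: float, t: float) -> float:
    """Van der Pol nonlinearity g(z, z', t) = (1 - z^2) z'."""
    return (1.0 - z * z) * zdot


for r in (1.0, 2.0, 3.0):
    f1, f2 = averaged_polar_field(vdp_g, r, phi=0.4)
    print(r, f1, 0.5 * r * (1 - r * r / 4), f2)  # numerical f1, closed form, f2
```

The sign change of $\bar{f}_1$ at $\bar{r} = 2$ (positive inside, negative outside) is what makes the limit cycle attracting, while the positive slope at $\bar{r} = 0$ makes the origin unstable.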

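The existence of such a stable limit cycle can be stated as a theorem.

Theorem (Existence of a periodic orbit): If $p_0$ is a hyperbolic fixed point of $\dot{y} = \varepsilon \bar{f}^1(y)$, then there exists $\varepsilon_0 > 0$ such that for all $0 < \varepsilon < \varepsilon_0$, the system $\dot{x} = \varepsilon f^1(x, t) + \varepsilon^2 f^{[2]}(x, t, \varepsilon)$ has a unique hyperbolic periodic orbit $\gamma_\varepsilon(t) = p_0 + \mathcal{O}(\varepsilon)$ of the same stability type as $p_0$. The proof can be found in Guckenheimer and Holmes, in Sanders ''et al.'', and for the angle case in Chicone.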
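For the Van der Pol example, the periodic orbit associated with $\bar{r} = 2$ can be observed directly. The sketch below, a hypothetical helper with a hand-written Runge-Kutta step, integrates $\ddot{z} + \varepsilon(z^2 - 1)\dot{z} + z = 0$ for $\varepsilon = 0.1$ from $z(0) = 0.5$, $\dot{z}(0) = 0$ and reports the largest $|z|$ over the final $2\pi$ time units. The parameter values are assumptions chosen for illustration.

```python
import math
from typing import Tuple


def vdp_late_amplitude(eps: float, z0: float = 0.5, dt: float = 0.01,
                       t_end: float = 200.0) -> float:
    """Integrate z'' + eps (z^2 - 1) z' + z = 0 with RK4 and return max |z|
    over the last 2*pi time units (roughly one period of the orbit)."""
    def rhs(s: Tuple[float, float]) -> Tuple[float, float]:
        z, v = s
        return (v, -eps * (z * z - 1.0) * v - z)

    s = (z0, 0.0)
    n = int(round(t_end / dt))
    window = int(round(2 * math.pi / dt))
    peak = 0.0
    for i in range(n):
        k1 = rhs(s)
        k2 = rhs((s[0] + dt / 2 * k1[0], s[1] + dt / 2 * k1[1]))
        k3 = rhs((s[0] + dt / 2 * k2[0], s[1] + dt / 2 * k2[1]))
        k4 = rhs((s[0] + dt * k3[0], s[1] + dt * k3[1]))
        s = (s[0] + dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
             s[1] + dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))
        if i >= n - window:
            peak = max(peak, abs(s[0]))
    return peak


print(vdp_late_amplitude(0.1))  # close to the averaged prediction r = 2
```

Starting outside the cycle (for example `z0=3.0`) should give the same late amplitude, since $\bar{r} = 2$ attracts from both sides.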

Example: Restricting the time interval

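The averaging theorem assumes the existence of a connected and bounded region $D \subset \mathbb{R}^n$, which affects the constant $L$ in the time interval $0 \leq t \leq L/\varepsilon$ on which the result is valid. The following example points this out. Consider

$$\ddot{z} + z = 8 \varepsilon \cos(t)\, \dot{z}^2, \qquad z(0) = 0, \quad \dot{z}(0) = 1,$$

where $0 \leq \varepsilon \ll 1$. The averaged system consists of

$$\dot{\bar{r}} = 3 \varepsilon \bar{r}^2 \cos(\bar{\phi}), \qquad \dot{\bar{\phi}} = -\varepsilon \bar{r} \sin(\bar{\phi}), \qquad \bar{r}(0) = 1, \quad \bar{\phi}(0) = 0,$$

which under this initial condition indicates that the original solution behaves like

$$z(t) = \frac{\sin t}{1 - 3 \varepsilon t} + \mathcal{O}(\varepsilon),$$

where this holds on a bounded region over $0 \leq \varepsilon t \leq L < \tfrac{1}{3}$.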

Damped Pendulum

Consider a damped pendulum whose point of suspension is vibrated vertically by a small-amplitude, high-frequency signal (this is usually known as ''dithering''). The equation of motion for such a pendulum, with mass $m$, length $l$ and gravitational acceleration $g$, is given by

$$m\bigl(l \ddot{\theta} - a \omega^2 \sin(\omega t) \sin\theta\bigr) = -m g \sin\theta - k\bigl(l \dot{\theta} + a \omega \cos(\omega t) \sin\theta\bigr),$$

where $a \sin(\omega t)$ describes the motion of the suspension point, $k$ describes the damping of the pendulum, and $\theta$ is the angle made by the pendulum with the vertical.

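The phase space form of this equation is given by

$$\dot{\theta} = p, \qquad \dot{p} = \frac{1}{m l}\Bigl(m a \omega^2 \sin(\omega t) \sin\theta - m g \sin\theta - k\bigl(l p + a \omega \cos(\omega t) \sin\theta\bigr)\Bigr),$$

where we have introduced the variable $p$ and written the system as a first-order system in $(\theta, p)$-space. The system is non-autonomous, since the forcing depends explicitly on $t$.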

Suppose that the angular frequency of the vertical vibrations, $\omega$, is much greater than the natural frequency of the pendulum, $\sqrt{g/l}$. Suppose also that the amplitude of the vertical vibrations, $a$, is much less than the length $l$ of the pendulum. The pendulum's trajectory in phase space will then trace out a spiral around a curve $C$, moving along $C$ at the slow rate $\sqrt{g/l}$ but around it at the fast rate $\omega$. The radius of the spiral around $C$ will be small and proportional to $a$. Over a timescale much larger than $2\pi/\omega$, the average behaviour of the trajectory will be to follow the curve $C$.

Extension error estimates

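The averaging technique for initial value problems has so far been treated with error estimates valid on time intervals of order $1/\varepsilon$. However, there are circumstances where the estimates can be extended to longer times, even to all times. Below we deal with a system containing an asymptotically stable fixed point. This situation recapitulates what is illustrated in Figure 1.

Theorem (Eckhaus/Sanchez-Palencia): Consider the initial value problem

$$\dot{x} = \varepsilon f^1(x, t), \qquad x(0) = x_0 \in D \subseteq \mathbb{R}^n, \quad 0 \leq \varepsilon \ll 1.$$

Suppose

$$\dot{y} = \varepsilon \bar{f}^1(y), \qquad y(0) = x_0,$$

exists and contains an asymptotically stable fixed point $y = 0$ in the linear approximation. Moreover, suppose $\bar{f}^1$ is continuously differentiable with respect to $y$ in $D$ and has a domain of attraction $D^0 \subset D$. Then for any compact $K \subset D^0$ and for all $x_0 \in K$,

$$\| x(t) - y(t) \| = \mathcal{O}(\delta(\varepsilon)), \qquad 0 \leq t < \infty,$$

with $\delta(\varepsilon) = o(1)$ in the general case and $\delta(\varepsilon) = \mathcal{O}(\varepsilon)$ in the periodic case.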
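For the perturbed logistic example $\dot{x} = \varepsilon(x(1 - x) + \sin t)$, the fixed point $y = 1$ of the averaged equation $\dot{y} = \varepsilon y(1 - y)$ is asymptotically stable (it plays the role of $y = 0$ after the shift $y \mapsto y - 1$), so this theorem predicts an $\mathcal{O}(\varepsilon)$ error for all times. The sketch below, a hypothetical helper with a hand-written Runge-Kutta step and the illustrative choices $\varepsilon = 0.02$ and $x(0) = y(0) = 0.5$, integrates both equations far beyond the time scale $1/\varepsilon$ and reports the largest deviation over the whole run and over its last quarter.

```python
import math
from typing import Callable, Tuple


def logistic_long_time_errors(eps: float, x0: float = 0.5, dt: float = 0.05,
                              horizon: float = 20.0) -> Tuple[float, float]:
    """Integrate x' = eps (x(1 - x) + sin t) and y' = eps y(1 - y) up to t = horizon/eps.

    Returns (max |x - y| over the whole run, max |x - y| over the last quarter).
    """
    def step(rhs: Callable[[float, float], float], t: float, u: float) -> float:
        # One classical 4th-order Runge-Kutta step of size dt.
        k1 = rhs(t, u)
        k2 = rhs(t + dt / 2, u + dt / 2 * k1)
        k3 = rhs(t + dt / 2, u + dt / 2 * k2)
        k4 = rhs(t + dt, u + dt * k3)
        return u + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)

    def original(t: float, x: float) -> float:
        return eps * (x * (1.0 - x) + math.sin(t))

    def averaged(t: float, y: float) -> float:
        return eps * y * (1.0 - y)

    n = int(round(horizon / eps / dt))
    x = y = x0
    overall = late = 0.0
    for i in range(n):
        t = i * dt
        x, y = step(original, t, x), step(averaged, t, y)
        err = abs(x - y)
        overall = max(overall, err)
        if i >= 3 * n // 4:
            late = max(late, err)
    return overall, late


print(logistic_long_time_errors(0.02))
```

The deviation should stay of order $\varepsilon$ throughout, rather than growing once $t$ exceeds $1/\varepsilon$.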
