In computing, load balancing is the process of distributing a set of tasks over a set of resources (computing units), with the aim of making their overall processing more efficient. Load balancing can optimize the response time and avoid unevenly overloading some compute nodes while other compute nodes are left idle.

Load balancing is the subject of research in the field of parallel computers. Two main approaches exist: static algorithms, which do not take into account the state of the different machines, and dynamic algorithms, which are usually more general and more efficient but require exchanges of information between the different computing units, at the risk of a loss of efficiency.

Problem overview

A load-balancing algorithm always tries to answer a specific problem. Among other things, the nature of the tasks, the algorithmic complexity, the hardware architecture on which the algorithms will run, and the required error tolerance must be taken into account. A compromise must therefore be found to best meet application-specific requirements.

Nature of tasks

The efficiency of load balancing algorithms critically depends on the nature of the tasks. Therefore, the more information about the tasks is available at the time of decision making, the greater the potential for optimization.

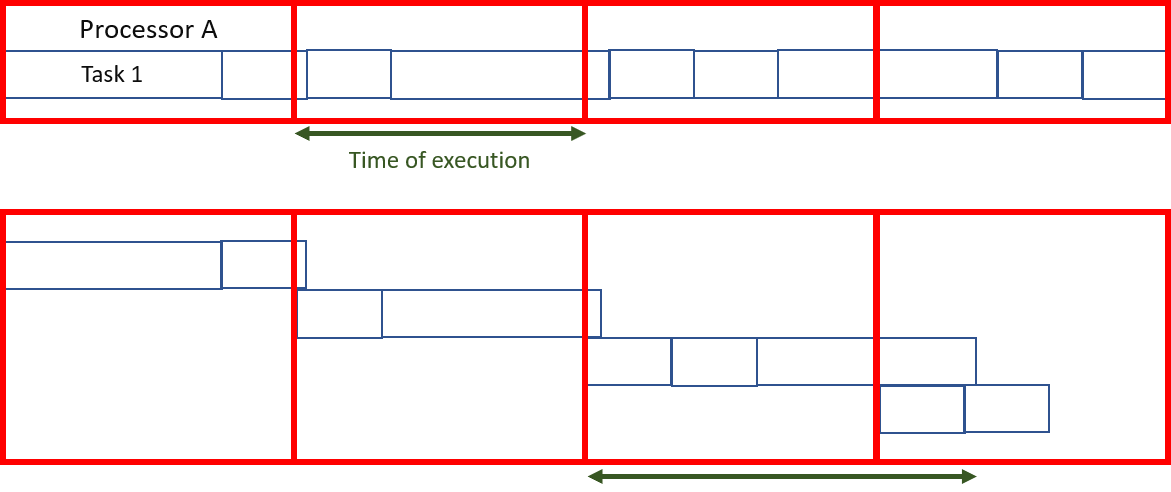

Size of tasks

Perfect knowledge of the execution time of each of the tasks makes it possible to reach an optimal load distribution (see the prefix sum algorithm).

Unfortunately, this is an idealized case. Knowing the exact execution time of each task is an extremely rare situation.

For this reason, there are several techniques to get an idea of the different execution times. First of all, in the fortunate scenario of having tasks of relatively homogeneous size, it is possible to consider that each of them will require approximately the average execution time. If, on the other hand, the execution time is very irregular, more sophisticated techniques must be used. One technique is to add some metadata to each task. Depending on the previous execution time for similar metadata, it is possible to make inferences for a future task based on statistics.
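As a minimal sketch of the metadata idea, the Python snippet below keeps a history of observed run times per metadata tag and predicts a new task's duration as the mean of past runs with the same tag. The class name `RuntimeEstimator`, the tags, and the fallback default are hypothetical choices made for illustration; a real system might use richer statistics.

```python
from collections import defaultdict
from statistics import mean

class RuntimeEstimator:
    """Predict a task's execution time from past runs with the same metadata tag."""

    def __init__(self, default_seconds=1.0):
        self.default_seconds = default_seconds  # used when a tag was never seen
        self.history = defaultdict(list)        # tag -> observed run times

    def record(self, tag, seconds):
        self.history[tag].append(seconds)

    def estimate(self, tag):
        samples = self.history.get(tag)
        return mean(samples) if samples else self.default_seconds

estimator = RuntimeEstimator()
for tag, seconds in [("resize", 2.0), ("resize", 4.0), ("thumbnail", 0.5)]:
    estimator.record(tag, seconds)
```

Here `estimator.estimate("resize")` averages the two recorded samples, while an unseen tag falls back to the default.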

Dependencies

In some cases, tasks depend on each other. These interdependencies can be illustrated by a directed acyclic graph. Intuitively, some tasks cannot begin until others are completed.

Assuming that the required time for each of the tasks is known in advance, an optimal execution order must minimize the total execution time. This is an NP-hard problem and can therefore be difficult to solve exactly. There are, however, algorithms, such as those used by job schedulers, that compute good (though not necessarily optimal) task distributions using metaheuristic methods.
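To make the dependency constraint concrete, the sketch below walks a small directed acyclic graph in topological order and computes the earliest finish time of each task. It assumes an unlimited number of processors, so it only yields the critical-path lower bound on the total execution time; it does not solve the NP-hard scheduling problem for a fixed number of processors. The task names and durations are invented for the example, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter

def earliest_finish_times(durations, depends_on):
    """Return the earliest finish time of every task, assuming unlimited processors."""
    finish = {}
    # static_order() yields each task only after all of its predecessors.
    for task in TopologicalSorter(depends_on).static_order():
        start = max((finish[d] for d in depends_on.get(task, ())), default=0)
        finish[task] = start + durations[task]
    return finish

# Hypothetical workload: b and c both wait for a, and d waits for b and c.
durations = {"a": 3, "b": 2, "c": 4, "d": 1}
depends_on = {"a": set(), "b": {"a"}, "c": {"a"}, "d": {"b", "c"}}
finish = earliest_finish_times(durations, depends_on)
makespan = max(finish.values())  # length of the critical path a -> c -> d
```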

Segregation of tasks

Another feature of the tasks critical for the design of a load balancing algorithm is their ability to be broken down into subtasks during execution. The "Tree-Shaped Computation" algorithm presented later takes great advantage of this specificity.

Static and dynamic algorithms

Static

A load balancing algorithm is "static" when it does not take into account the state of the system for the distribution of tasks. Here, the system state includes measures such as the load level (and sometimes even overload) of certain processors. Instead, assumptions about the overall system are made beforehand, such as the arrival times and resource requirements of incoming tasks. In addition, the number of processors, their respective power and communication speeds are known. Therefore, static load balancing aims to associate a known set of tasks with the available processors in order to minimize a certain performance function. The trick lies in the design of this performance function.

Static load balancing techniques are commonly centralized around a router, or master, which distributes the loads and optimizes the performance function. This minimization can take into account information related to the tasks to be distributed, and derive an expected execution time.

The advantage of static algorithms is that they are easy to set up and extremely efficient in the case of fairly regular tasks (such as processing HTTP requests from a website). However, there is still some statistical variance in the assignment of tasks which can lead to the overloading of some computing units.

Dynamic

Unlike static load distribution algorithms, dynamic algorithms take into account the current load of each of the computing units (also called nodes) in the system. In this approach, tasks can be moved dynamically from an overloaded node to an underloaded node in order to receive faster processing. While these algorithms are much more complicated to design, they can produce excellent results, in particular, when the execution time varies greatly from one task to another.

Dynamic load balancing architecture can be more modular since it is not mandatory to have a specific node dedicated to the distribution of work. When tasks are uniquely assigned to a processor according to its state at a given moment, it is a unique assignment. If, on the other hand, the tasks can be permanently redistributed according to the state of the system and its evolution, this is called dynamic assignment. Obviously, a load balancing algorithm that requires too much communication in order to reach its decisions runs the risk of slowing down the resolution of the overall problem.

Hardware architecture

Heterogeneous machines

Parallel computing infrastructures are often composed of units of different computing power, which should be taken into account for the load distribution.

For example, lower-powered units may receive requests that require a smaller amount of computation, or, in the case of homogeneous or unknown request sizes, receive fewer requests than larger units.

Shared and distributed memory

Parallel computers are often divided into two broad categories: those where all processors share a single common memory on which they read and write in parallel (PRAM model), and those where each computing unit has its own memory (distributed memory model), and where information is exchanged by messages.

For shared-memory computers, managing write conflicts greatly slows down the speed of individual execution of each computing unit. However, they can work perfectly well in parallel. Conversely, in the case of message exchange, each of the processors can work at full speed. On the other hand, when it comes to collective message exchange, all processors are forced to wait for the slowest processors to start the communication phase.

In reality, few systems fall into exactly one of the categories. In general, the processors each have an internal memory to store the data needed for the next calculations and are organized in successive clusters. Often, these processing elements are then coordinated through distributed memory and message passing. Therefore, the load balancing algorithm should be uniquely adapted to a parallel architecture. Otherwise, there is a risk that the efficiency of parallel problem solving will be greatly reduced.

Hierarchy

Adapting to the hardware structures seen above, there are two main categories of load balancing algorithms. On the one hand, there are algorithms in which tasks are assigned by a “master” and executed by “workers”, who keep the master informed of the progress of their work; the master can then take charge of assigning or reassigning the workload in the case of a dynamic algorithm. The literature refers to this as the "Master-Worker" architecture. On the other hand, the control can be distributed between the different nodes. The load balancing algorithm is then executed on each of them and the responsibility for assigning tasks (as well as re-assigning and splitting as appropriate) is shared. The last category assumes a dynamic load balancing algorithm.

Since the design of each load balancing algorithm is unique, the previous distinction must be qualified. Thus, it is also possible to have an intermediate strategy, with, for example, "master" nodes for each sub-cluster, which are themselves subject to a global "master". There are also multi-level organizations, with an alternation between master-slave and distributed control strategies. The latter strategies quickly become complex and are rarely encountered. Designers prefer algorithms that are easier to control.

Adaptation to larger architectures (scalability)

In the context of algorithms that run over the very long term (servers, cloud...), the computer architecture evolves over time. However, it is preferable not to have to design a new algorithm each time.

An extremely important parameter of a load balancing algorithm is therefore its ability to adapt to scalable hardware architecture. This is called the scalability of the algorithm. An algorithm is called scalable for an input parameter when its performance remains relatively independent of the size of that parameter.

When the algorithm is capable of adapting to a varying number of computing units, but the number of computing units must be fixed before execution, it is called moldable. If, on the other hand, the algorithm is capable of dealing with a fluctuating number of processors during its execution, the algorithm is said to be malleable. Most load balancing algorithms are at least moldable.

Fault tolerance

Especially in large-scale computing clusters, it is not tolerable to execute a parallel algorithm that cannot withstand the failure of a single component. Therefore, fault-tolerant algorithms are being developed which can detect outages of processors and recover the computation.

Approaches

Static distribution with full knowledge of the tasks: prefix sum

If the tasks are independent of each other, and if their respective execution times are known and the tasks can be subdivided, there is a simple and optimal algorithm.

By dividing the tasks in such a way as to give the same amount of computation to each processor, all that remains to be done is to group the results together. Using a prefix sum algorithm, this division can be calculated in logarithmic time with respect to the number of processors.

If, however, the tasks cannot be subdivided (i.e., they are atomic), although optimizing task assignment is a difficult problem, it is still possible to approximate a relatively fair distribution of tasks, provided that the size of each of them is much smaller than the total computation performed by each of the nodes.

Most of the time, the execution time of a task is unknown and only rough approximations are available. This algorithm, although particularly efficient, is not viable for these scenarios.
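The division described in this section can be sketched as follows. The prefix sums place every task on a common timeline, and each processor takes an equal slice of that timeline, cutting tasks where a slice boundary falls. This is a sequential illustration only: the scan is computed with `itertools.accumulate` rather than a parallel scan, so it does not demonstrate the logarithmic running time. The function name `split_evenly` and the sample durations are assumptions made for the example.

```python
from itertools import accumulate

def split_evenly(task_times, processors):
    """Cut divisible tasks so every processor receives total/processors work."""
    prefix = [0.0, *accumulate(task_times)]   # prefix[i] = work before task i
    share = prefix[-1] / processors
    plan = [[] for _ in range(processors)]
    for i, _ in enumerate(task_times):
        begin, end = prefix[i], prefix[i + 1]
        for p in range(processors):
            # Overlap of task i's interval with processor p's slice of the timeline.
            lo, hi = max(begin, p * share), min(end, (p + 1) * share)
            if hi > lo:
                plan[p].append((i, hi - lo))
    return plan

# Three tasks of known length shared among three processors.
plan = split_evenly([4, 2, 6], processors=3)
```

Each entry of `plan` lists `(task index, amount of work)` pairs; the third task is cut between the second and third processors so that all three receive the same total.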

Static load distribution without prior knowledge

Even if the execution time is not known in advance at all, static load distribution is always possible.

Round-robin scheduling

In a round-robin algorithm, the first request is sent to the first server, then the next to the second, and so on down to the last. Then it is started again, assigning the next request to the first server, and so on.

This algorithm can be weighted such that the most powerful units receive the largest number of requests and receive them first.
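A minimal sketch of both variants is shown below. The weighted version simply repeats each server in the rotation as many times as its weight, most powerful first, which is one straightforward way to realize the weighting; production balancers often interleave the repetitions instead. Server names and weights are hypothetical.

```python
from itertools import cycle, islice

def round_robin(servers):
    """Yield servers in a fixed rotating order, one per incoming request."""
    return cycle(servers)

def weighted_round_robin(weights):
    """Yield each server `weight` times per rotation, heaviest servers first."""
    ordered = sorted(weights, key=weights.get, reverse=True)
    return cycle([name for name in ordered for _ in range(weights[name])])

first_five = list(islice(round_robin(["s1", "s2", "s3"]), 5))
weighted = list(islice(weighted_round_robin({"small": 1, "big": 3}), 8))
```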

Randomized static

Randomized static load balancing is simply a matter of randomly assigning tasks to the different servers. This method works quite well. If, on the other hand, the number of tasks is known in advance, it is even more efficient to calculate a random permutation in advance. This avoids communication costs for each assignment. There is no longer a need for a distribution master because every processor knows what task is assigned to it. Even if the number of tasks is unknown, it is still possible to avoid communication with a pseudo-random assignment generation known to all processors.

The performance of this strategy (measured in total execution time for a given fixed set of tasks) decreases with the maximum size of the tasks.
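The communication-free variant can be sketched with a seeded pseudo-random generator: if every processor runs the same function with the same seed, each one derives the identical permutation locally and can look up its own tasks without consulting a master. The function name and the seed value are assumptions for illustration.

```python
import random

def shared_assignment(num_tasks, num_processors, seed):
    """Every processor calls this with the same seed and gets the same mapping."""
    rng = random.Random(seed)
    order = list(range(num_tasks))
    rng.shuffle(order)                       # random permutation of the tasks
    # Deal the shuffled tasks out like cards: processor p takes every p-th one.
    return {p: order[p::num_processors] for p in range(num_processors)}

# Two processors computing the plan independently arrive at the same result.
view_a = shared_assignment(10, 3, seed=42)
view_b = shared_assignment(10, 3, seed=42)
```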

Others

Of course, there are other methods of assignment as well:

* Less work: assign more tasks to the servers that are performing less work (the method can also be weighted).

* Hash: allocates queries according to a hash table.

* Power of Two Choices: pick two servers at random and choose the better of the two options.
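The last item in the list above can be sketched in a few lines. Here "better" is taken to mean "currently holding less load", which is an assumption of this example, and the load figures are simple task counters rather than real measurements.

```python
import random

def pick_two_choices(loads, rng=random):
    """Sample two distinct servers and return the one with the lower load."""
    a, b = rng.sample(list(loads), 2)
    return a if loads[a] <= loads[b] else b

rng = random.Random(0)
loads = {f"s{i}": 0 for i in range(10)}
for _ in range(1000):
    loads[pick_two_choices(loads, rng)] += 1   # each task adds one unit of load
```

Comparing only two candidates keeps the decision cheap while still steering work away from the most heavily loaded servers.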

Master-Worker Scheme

Master-Worker schemes are among the simplest dynamic load balancing algorithms. A master distributes the workload to all workers (also sometimes referred to as "slaves"). Initially, all workers are idle and report this to the master. The master answers worker requests and distributes the tasks to them. When it has no more tasks to give, it informs the workers so that they stop asking for tasks.

The advantage of this system is that it distributes the burden very fairly. In fact, if one does not take into account the time needed for the assignment, the execution time would be comparable to the prefix sum seen above.

The problem with this algorithm is that it has difficulty adapting to a large number of processors because of the high amount of necessary communications. This lack of scalability makes it quickly inoperable in very large servers or very large parallel computers. The master acts as a bottleneck.

However, the quality of the algorithm can be greatly improved by replacing the master with a task list that can be used by different processors. Although this algorithm is a little more difficult to implement, it promises much better scalability, although still insufficient for very large computing centers.
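The shared task list variant might look like the following sketch, in which Python threads stand in for processors and a thread-safe `queue.Queue` plays the role of the task list. Each worker keeps pulling the next task until the list is empty, so faster workers naturally take on more tasks. The helper name `run_with_shared_queue` and the squaring workload are hypothetical.

```python
import queue
import threading

def run_with_shared_queue(tasks, num_workers, work):
    """Workers pull tasks from one shared queue until it is empty."""
    pending = queue.Queue()
    for task in tasks:
        pending.put(task)
    results, lock = [], threading.Lock()

    def worker():
        while True:
            try:
                task = pending.get_nowait()   # ask for the next task
            except queue.Empty:
                return                        # nothing left: stop asking
            outcome = work(task)
            with lock:
                results.append(outcome)

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results

squares = run_with_shared_queue(range(20), num_workers=4, work=lambda n: n * n)
```

The results arrive in completion order, not submission order, so callers that need ordering should sort or tag them.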

Non-hierarchical architecture, without knowledge of the system: work stealing

Another technique to overcome scalability problems when the time needed for task completion is unknown is work stealing.

The approach consists of assigning to each processor a certain number of tasks in a random or predefined manner, then allowing inactive processors to "steal" work from active or overloaded processors. Several implementations of this concept exist, defined by a task division model and by the rules determining the exchange between processors. While this technique can be particularly effective, it is difficult to implement because it is necessary to ensure that communication does not become the primary occupation of the processors instead of solving the problem.
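The idea can be illustrated with a small single-threaded simulation, offered as a sketch rather than a real concurrent implementation. All tasks take one time step, every processor owns a double-ended queue, and an idle processor picks a random victim and takes one task from the opposite end of the victim's queue. The victim selection rule and the "steal only if the victim has more than one task" condition are assumptions of this example.

```python
import random
from collections import deque

def simulate_work_stealing(initial_queues, seed=0):
    """Run unit-time tasks in lockstep; idle processors steal from a random victim."""
    rng = random.Random(seed)
    queues = [deque(q) for q in initial_queues]
    done = [0] * len(queues)
    steps = 0
    while any(queues):
        steps += 1
        for me, own in enumerate(queues):
            if own:
                own.pop()                      # owner works from its own end
                done[me] += 1
            else:
                victim = queues[rng.randrange(len(queues))]
                if len(victim) > 1:
                    own.append(victim.popleft())  # thief takes from the other end
    return steps, done

# One processor starts with all twelve tasks; the other three start idle.
steps, done = simulate_work_stealing([list(range(12)), [], [], []])
```

`done` records how many tasks each processor ended up executing, and `steps` is the number of lockstep rounds needed, which cannot exceed the twelve rounds a single processor would need.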

In the case of atomic tasks, two main strategies can be distinguished: those where the processors with low load offer their computing capacity to those with the highest load, and those where the most loaded units wish to lighten the workload assigned to them. It has been shown that when the network is heavily loaded, it is more efficient for the least loaded units to offer their availability, and when the network is lightly loaded, it is the overloaded processors that require support from the most inactive ones. This rule of thumb limits the number of exchanged messages.

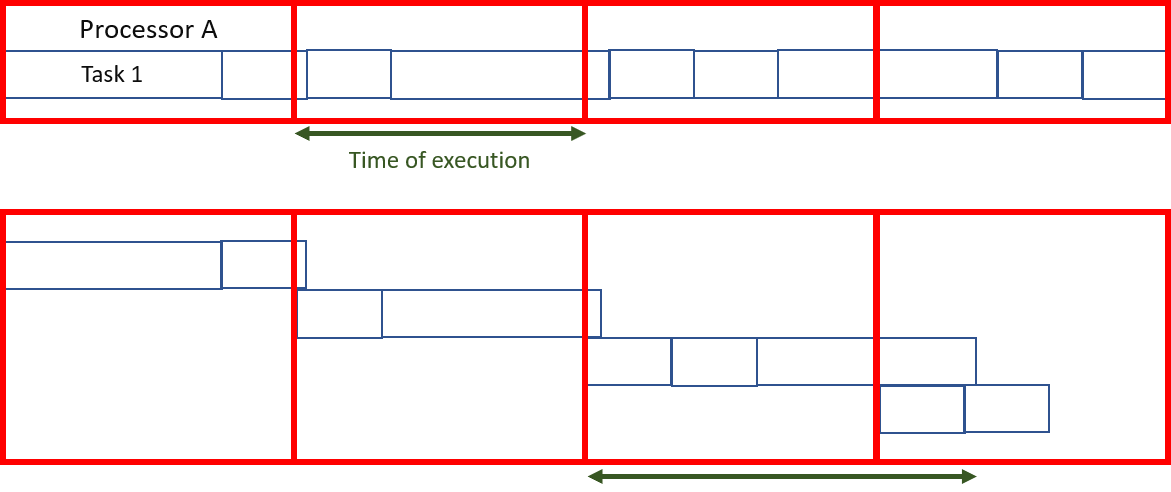

In the case where one starts from a single large task that cannot be divided beyond an atomic level, there is a very efficient algorithm "Tree-Shaped computation", where the parent task is distributed in a work tree.

Principle

Initially, many processors have an empty task, except one that works sequentially on it. Idle processors issue requests randomly to other processors (not necessarily active). If the latter is able to subdivide the task it is working on, it does so by sending part of its work to the node making the request. Otherwise, it returns an empty task. This induces a tree structure. It is then necessary to send a termination signal to the parent processor when the subtask is completed so that it, in turn, sends the message to its parent until it reaches the root of the tree. When the first processor, i.e. the root, has finished, a global termination message can be broadcast. In the end, it is necessary to assemble the results by going back up the tree.

Efficiency

The efficiency of such an algorithm is close to the prefix sum when the job cutting and communication time is not too high compared to the work to be done. To avoid too high communication costs, it is possible to imagine a list of jobs on shared memory. Therefore, a request is simply reading from a certain position on this shared memory at the request of the master processor.

Use cases

In addition to efficient problem solving through parallel computations, load balancing algorithms are widely used in HTTP request management where a site with a large audience must be able to handle a large number of requests per second.

Internet-based services

One of the most commonly used applications of load balancing is to provide a single Internet service from multiple servers, sometimes known as a server farm. Commonly load-balanced systems include popular web sites, large Internet Relay Chat networks, high-bandwidth File Transfer Protocol (FTP) sites, Network News Transfer Protocol (NNTP) servers, Domain Name System (DNS) servers, and databases.

Round-robin DNS

Round-robin DNS is an alternate method of load balancing that does not require a dedicated software or hardware node. In this technique, multiple IP addresses are associated with a single domain name; clients are given an IP address in a round-robin fashion. The IP address is assigned to clients with a short expiration so the client is more likely to use a different IP address the next time they access the Internet service being requested.

DNS delegation

Another more effective technique for load-balancing using DNS is to delegate www.example.org as a sub-domain whose zone is served by each of the same servers that are serving the website. This technique works particularly well where individual servers are spread geographically on the Internet. For example:

one.example.org A 192.0.2.1

two.example.org A 203.0.113.2

www.example.org NS one.example.org

www.example.org NS two.example.org

However, the zone file for www.example.org on each server is different such that each server resolves its own IP address as the A-record. On server one the zone file for www.example.org reports:

@ in a 192.0.2.1

On server two the same zone file contains:

@ in a 203.0.113.2

This way, when a server is down, its DNS will not respond and the web service does not receive any traffic. If the line to one server is congested, the unreliability of DNS ensures less HTTP traffic reaches that server. Furthermore, the quickest DNS response to the resolver is nearly always the one from the network's closest server, ensuring geo-sensitive load-balancing. A short TTL on the A-record helps to ensure traffic is quickly diverted when a server goes down. Consideration must be given to the possibility that this technique may cause individual clients to switch between individual servers in mid-session.

Client-side random load balancing

Another approach to load balancing is to deliver a list of server IPs to the client, and then to have the client randomly select the IP from the list on each connection (see "MMOG Server-Side Architecture. Front-End Servers and Client-Side Random Load Balancing"). This essentially relies on all clients generating similar loads, and the Law of Large Numbers ... fault tolerance.
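A client following this approach could be sketched as below. The random selection comes from the description above; the retry behavior, in which the client moves on to another randomly chosen server when one does not answer, is an assumption added here to show how such a client might tolerate a failed server. The addresses are hypothetical values from the documentation ranges, and `try_connect` is a placeholder for a real connection attempt.

```python
import random

def connect_to_random_server(servers, try_connect, rng=random):
    """Try servers in a random order and return the first one that accepts."""
    for server in rng.sample(servers, len(servers)):
        if try_connect(server):
            return server
    return None                                # every server refused

# Hypothetical addresses from the documentation ranges; one of them is "down".
servers = ["192.0.2.1", "203.0.113.2", "198.51.100.3"]
is_up = lambda ip: ip != "203.0.113.2"
chosen = connect_to_random_server(servers, is_up)
```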

Server-side load balancers

For Internet services, a server-side load balancer is usually a software program that is listening on the port where external clients connect to access services. The load balancer forwards requests to one of the "backend" servers, which usually replies to the load balancer. This allows the load balancer to reply to the client without the client ever knowing about the internal separation of functions. It also prevents clients from contacting back-end servers directly, which may have security benefits by hiding the structure of the internal network and preventing attacks on the kernel's network stack or unrelated services running on other ports.

Some load balancers provide a mechanism for doing something special in the event that all backend servers are unavailable. This might include forwarding to a backup load balancer or displaying a message regarding the outage.

It is also important that the load balancer itself does not become a single point of failure. Usually, load balancers are implemented in high-availability pairs which may also replicate session persistence data if required by the specific application. Certain applications are programmed with immunity to this problem, by offsetting the load balancing point over differential sharing platforms beyond the defined network. The sequential algorithms paired to these functions are defined by flexible parameters unique to the specific database.

=Scheduling algorithms=

Numerous scheduling algorithms, also called load-balancing methods, are used by load balancers to determine which backend server to send a request to.

Simple algorithms include random choice, round robin, or least connections.
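The three simple methods named above can be sketched in a few lines. This is a minimal illustration rather than any particular product's implementation; the class names `RoundRobin` and `LeastConnections`, the `pick`/`release` methods, and the function `random_choice` are hypothetical names chosen for this example.

```python
import itertools
import random


class RoundRobin:
    """Cycle through the backends in a fixed order."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(list(backends))

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the backend with the fewest open connections."""

    def __init__(self, backends):
        # backend -> number of connections currently open (tracked by us)
        self.active = {b: 0 for b in backends}

    def pick(self):
        # min() returns the first minimum, so ties go to the earliest backend.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call when a connection to `backend` is closed.
        self.active[backend] -= 1


def random_choice(backends, rng=random):
    """Pick a backend uniformly at random."""
    return rng.choice(list(backends))
```

Round robin needs no feedback from the servers, whereas least connections only works if the balancer is told when a connection ends, which is why the sketch exposes `release`.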

=Persistence=

An important issue when operating a load-balanced service is how to handle information that must be kept across the multiple requests in a user's session. If this information is stored locally on one backend server, then subsequent requests going to different backend servers would not be able to find it. This might be cached information that can be recomputed, in which case load-balancing a request to a different backend server just introduces a performance issue. Such information can instead be kept in a shared in-memory store such as Memcached.

One basic solution to the session data issue is to send all requests in a user session consistently to the same backend server. This is known as "persistence" or "stickiness". A significant downside to this technique is its lack of automatic failover: if a backend server goes down, its per-session information becomes inaccessible, and any sessions depending on it are lost. The same problem is usually relevant to central database servers; even if web servers are "stateless" and not "sticky", the central database is (see below).

Assignment to a particular server might be based on a username or client IP address, or be random. Because the client's perceived address can change as a result of DHCP, network address translation, and web proxies, assignment by address may be unreliable. Random assignments must be remembered by the load balancer, which creates a burden on storage. If the load balancer is replaced or fails, this information may be lost, and assignments may need to be deleted after a timeout period or during periods of high load to avoid exceeding the space available for the assignment table. The random assignment method also requires that clients maintain some state, which can be a problem, for example when a web browser has disabled the storage of cookies. Sophisticated load balancers use multiple persistence techniques to avoid some of the shortcomings of any one method.
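The two styles of assignment described above can be contrasted in a short sketch. It makes several assumptions: `backend_for_client` and `StickyTable` are hypothetical names, the stateless variant hashes the client address with SHA-256, and the table hands new sessions to backends in rotation (rather than at random) so that the behaviour is reproducible.

```python
import hashlib
import time


def backend_for_client(client_ip, backends):
    """Stateless persistence: hash the client address onto a backend."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


class StickyTable:
    """Stateful persistence: remember session -> backend, with expiry."""

    def __init__(self, backends, ttl_seconds=300.0, clock=time.monotonic):
        self.backends = list(backends)
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # session_id -> (backend, expires_at)
        self._next = 0      # rotation counter for new sessions (assumption)

    def lookup(self, session_id):
        now = self.clock()
        entry = self._entries.get(session_id)
        if entry is not None and entry[1] > now:
            backend = entry[0]
        else:
            backend = self.backends[self._next % len(self.backends)]
            self._next += 1
        # Every lookup refreshes the timeout for this session.
        self._entries[session_id] = (backend, now + self.ttl)
        return backend

    def purge_expired(self):
        """Drop timed-out assignments and return how many were removed."""
        now = self.clock()
        expired = [s for s, (_, exp) in self._entries.items() if exp <= now]
        for s in expired:
            del self._entries[s]
        return len(expired)
```

The hashed variant needs no storage but changes its answer whenever the client's address does; the table survives address changes but grows with the number of sessions, which is why it needs `purge_expired`.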

Another solution is to keep the per-session data in a database. This is generally bad for performance because it increases the load on the database: the database is best used to store information less transient than per-session data. To prevent a database from becoming a single point of failure, and to improve scalability, the database is often replicated across multiple machines, and load balancing is used to spread the query load across those replicas. Microsoft's ASP.NET State Server technology is an example of a session database. All servers in a web farm store their session data on State Server and any server in the farm can retrieve the data.

In the very common case where the client is a web browser, a simple but efficient approach is to store the per-session data in the browser itself. One way to achieve this is to use a browser cookie, suitably time-stamped and encrypted. Another is URL rewriting. Storing session data on the client is generally the preferred solution: then the load balancer is free to pick any backend server to handle a request. However, this method of state-data handling is poorly suited to some complex business logic scenarios, where the session state payload is large and recomputing it with every request on a server is not feasible. URL rewriting has major security issues because the end user can easily alter the submitted URL and thus change session streams.
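A time-stamped cookie that any backend can validate might look like the sketch below. It is deliberately limited: it signs the payload with an HMAC so that tampering is detected, but it does not encrypt it, so the contents remain readable by the client. The function names `encode_session` and `decode_session`, the JSON layout, and the one-hour default lifetime are assumptions made for this example.

```python
import base64
import hashlib
import hmac
import json
import time


def encode_session(data, secret, now=None):
    """Serialize session data with a timestamp and an HMAC-SHA256 tag."""
    issued = int(time.time() if now is None else now)
    payload = json.dumps({"data": data, "ts": issued}, sort_keys=True)
    body = base64.urlsafe_b64encode(payload.encode("utf-8"))
    tag = hmac.new(secret, body, hashlib.sha256).hexdigest().encode("ascii")
    return (body + b"." + tag).decode("ascii")


def decode_session(cookie, secret, max_age=3600, now=None):
    """Return the session data, or None if the cookie is forged or stale."""
    body, _, tag = cookie.encode("utf-8").rpartition(b".")
    expected = hmac.new(secret, body, hashlib.sha256).hexdigest().encode("ascii")
    if not hmac.compare_digest(tag, expected):
        return None  # altered by the client or signed with another key
    payload = json.loads(base64.urlsafe_b64decode(body))
    current = time.time() if now is None else now
    if current - payload["ts"] > max_age:
        return None  # too old
    return payload["data"]
```

Because every backend that shares `secret` can verify the cookie, the balancer is free to send each request to any server.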

Yet another solution to storing persistent data is to associate a name with each block of data, and use a distributed hash table to pseudo-randomly assign that name to one of the available servers, and then store that block of data in the assigned server.
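The name-to-server mapping can be illustrated with consistent hashing, a placement technique used by many distributed hash tables. The sketch below is not a full DHT (there is no routing or replication between nodes); `HashRing`, `server_for`, and the `replicas` parameter are hypothetical names for this example.

```python
import bisect
import hashlib


def _point(key):
    """Map a string to a position on the ring (a 64-bit integer)."""
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


class HashRing:
    def __init__(self, servers, replicas=64):
        # Each server is placed at `replicas` pseudo-random ring positions
        # so that names spread more evenly across servers.
        self._ring = sorted(
            (_point(f"{server}#{i}"), server)
            for server in servers
            for i in range(replicas)
        )
        self._points = [p for p, _ in self._ring]

    def server_for(self, name):
        """Return the server owning the first ring position after `name`."""
        if not self._ring:
            raise ValueError("no servers")
        idx = bisect.bisect_right(self._points, _point(name)) % len(self._ring)
        return self._ring[idx][1]
```

With this placement, removing a server only relocates the names that server owned; names assigned to the remaining servers stay where they were.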

=Load balancer features=

Hardware and software load balancers may have a variety of special features. The fundamental feature of a load balancer is to be able to distribute incoming requests over a number of backend servers in the cluster according to a scheduling algorithm. Most of the following features are vendor specific:

; Asymmetric load

: A ratio can be manually assigned to cause some backend servers to get a greater share of the workload than others. This is sometimes used as a crude way to account for some servers having more capacity than others and may not always work as desired.

; Priority activation

: When the number of available servers drops below a certain number, or the load gets too high, standby servers can be brought online.

; TLS Offload and Acceleration

: TLS (or its predecessor SSL) acceleration is a technique of offloading cryptographic protocol calculations onto specialized hardware. Depending on the workload, processing the encryption and authentication requirements of a TLS request can become a major part of the demand on the Web Server's CPU; as the demand increases, users will see slower response times, as the TLS overhead is distributed among Web servers. To remove this demand on Web servers, a balancer can terminate TLS connections, passing HTTPS requests as HTTP requests to the Web servers. If the balancer itself is not overloaded, this does not noticeably degrade the performance perceived by end-users. The downside of this approach is that all of the TLS processing is concentrated on a single device (the balancer) which can become a new bottleneck. Some load balancer appliances include specialized hardware to process TLS. Instead of upgrading the load balancer, which is quite expensive dedicated hardware, it may be cheaper to forgo TLS offload and add a few web servers. Also, some server vendors such as Oracle/Sun now incorporate cryptographic acceleration hardware into their CPUs such as the T2000. F5 Networks incorporates a dedicated TLS acceleration hardware card in their local traffic manager (LTM) which is used for encrypting and decrypting TLS traffic. One clear benefit to TLS offloading in the balancer is that it enables it to do balancing or content switching based on data in the HTTPS request.

; Distributed Denial of Service (DDoS) attack protection

: Load balancers can provide features such as SYN cookies and delayed-binding (the backend servers don't see the client until it finishes its TCP handshake) to mitigate SYN flood attacks and generally offload work from the servers to a more efficient platform.

; HTTP compression

: HTTP compression reduces the amount of data to be transferred for HTTP objects by utilising gzip compression available in all modern web browsers. The larger the response and the further away the client is, the more this feature can improve response times. The trade-off is that this feature puts additional CPU demand on the load balancer and could be done by web servers instead.

; TCP offload

: Different vendors use different terms for this, but the idea is that normally each HTTP request from each client is a different TCP connection. This feature utilises HTTP/1.1 to consolidate multiple HTTP requests from multiple clients into a single TCP socket to the back-end servers.

; TCP buffering

: The load balancer can buffer responses from the server and spoon-feed the data out to slow clients, allowing the web server to free a thread for other tasks faster than it would if it had to send the entire response to the client directly.

; Direct Server Return

: An option for asymmetrical load distribution, where request and reply have different network paths.

; Health checking

: The balancer polls servers for application layer health and removes failed servers from the pool.

; HTTP caching

: The balancer stores static content so that some requests can be handled without contacting the servers.

; Content filtering

: Some balancers can arbitrarily modify traffic on the way through.

; HTTP security

: Some balancers can hide HTTP error pages, remove server identification headers from HTTP responses, and encrypt cookies so that end users cannot manipulate them.

; Priority queuing

: Also known as rate shaping, the ability to give different priorities to different traffic.

; Content-aware switching

: Most load balancers can send requests to different servers based on the URL being requested, assuming the request is not encrypted (HTTP) or if it is encrypted (via HTTPS) that the HTTPS request is terminated (decrypted) at the load balancer.

; Client authentication

: Authenticate users against a variety of authentication sources before allowing them access to a website.

; Programmatic traffic manipulation

: At least one balancer allows the use of a scripting language to allow custom balancing methods, arbitrary traffic manipulations, and more.

; Firewall

: Firewalls can prevent direct connections to backend servers, for network security reasons.

; Intrusion prevention system

: Intrusion prevention systems offer application layer security in addition to the network/transport layer offered by firewall security.
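Two of the features listed above, asymmetric load and the removal of failed servers after health checking, can be combined in one small sketch. It uses a weighted rotation (often called smooth weighted round-robin) as one possible way to honour a manually assigned ratio; `WeightedPool`, `mark`, and `pick` are hypothetical names, and real products differ in the details.

```python
class WeightedPool:
    """Distribute picks according to manual ratios, skipping failed servers."""

    def __init__(self, weights):
        self.weights = dict(weights)          # backend -> integer ratio
        self.healthy = set(self.weights)      # backends currently in the pool
        self._current = {b: 0 for b in self.weights}

    def mark(self, backend, is_healthy):
        """Record the outcome of a health check for `backend`."""
        if is_healthy:
            self.healthy.add(backend)
        else:
            self.healthy.discard(backend)

    def pick(self):
        candidates = [b for b in self.weights if b in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy backend available")
        total = sum(self.weights[b] for b in candidates)
        # Each candidate earns credit equal to its weight; the one with the
        # most credit is chosen and pays back the total.
        for b in candidates:
            self._current[b] += self.weights[b]
        chosen = max(candidates, key=self._current.get)
        self._current[chosen] -= total
        return chosen
```

With weights of 3 and 1, three of every four requests go to the larger server, and the picks are interleaved rather than sent in bursts.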

Telecommunications

Load balancing can be useful in applications with redundant communications links. For example, a company may have multiple Internet connections ensuring network access if one of the connections fails. A failover arrangement would mean that one link is designated for normal use, while the second link is used only if the primary link fails.

Using load balancing, both links can be in use all the time. A device or program monitors the availability of all links and selects the path for sending packets. The use of multiple links simultaneously increases the available bandwidth.

Shortest Path Bridging

TRILL (TRansparent Interconnection of Lots of Links) allows an Ethernet network to have an arbitrary topology, and enables per-flow pair-wise load splitting by way of Dijkstra's algorithm, without configuration and user intervention. The catalyst for TRILL was an event at Beth Israel Deaconess Medical Center which began on 13 November 2002. The concept of Rbridges was first proposed to the Institute of Electrical and Electronics Engineers in 2004, which in 2005 rejected what came to be known as TRILL, and between 2006 and 2012 devised an incompatible variation known as Shortest Path Bridging.

The IEEE approved the IEEE 802.1aq standard, also known as Shortest Path Bridging (SPB), in May 2012. SPB allows all links to be active through multiple equal-cost paths, provides faster convergence times to reduce downtime, and simplifies the use of load balancing in mesh network topologies (partially connected and/or fully connected) by allowing traffic to load share across all paths of a network. SPB is designed to virtually eliminate human error during configuration and preserves the plug-and-play nature that established Ethernet as the de facto protocol at Layer 2.

Routing

Many telecommunications companies have multiple routes through their networks or to external networks. They use sophisticated load balancing to shift traffic from one path to another to avoid network congestion on any particular link, and sometimes to minimize the cost of transit across external networks or improve network reliability.

Another way of using load balancing is in network monitoring activities. Load balancers can be used to split huge data flows into several sub-flows and use several network analyzers, each reading a part of the original data. This is very useful for monitoring fast networks like 10GbE or STM64, where complex processing of the data may not be possible at wire speed.[Mohammad Noormohammadpour, Cauligi S. Raghavendra, "Minimizing Flow Completion Times using Adaptive Routing over Inter-Datacenter Wide Area Networks", ''IEEE INFOCOM 2018 Poster Sessions'', DOI:10.13140/RG.2.2.36009.90720, 6 January 2019]

Data center networks

Load balancing is widely used in data center networks to distribute traffic across many existing paths between any two servers.[M. Noormohammadpour, C. S. Raghavendra, "Datacenter Traffic Control: Understanding Techniques and Trade-offs," ''IEEE Communications Surveys & Tutorials'', vol. PP, no. 99, pp. 1-1] It allows more efficient use of network bandwidth and reduces provisioning costs. In general, load balancing in datacenter networks can be classified as either static or dynamic.

Static load balancing distributes traffic by computing a hash of the source and destination addresses and port numbers of traffic flows and using it to determine how flows are assigned to one of the existing paths. Dynamic load balancing assigns traffic flows to paths by monitoring bandwidth use on different paths. Dynamic assignments can also be proactive or reactive. In the former case, the assignment is fixed once made, while in the latter the network logic keeps monitoring available paths and shifts flows across them as network utilization changes (with arrival of new flows or completion of existing ones). A comprehensive overview of load balancing in datacenter networks has been made available.
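The difference between the static and dynamic approaches can be shown in a few lines. This is only a sketch: `path_for_flow` and `least_utilized_path` are hypothetical names, SHA-256 stands in for whatever hash a real switch would use, and the utilization figures would come from monitoring that is not modelled here.

```python
import hashlib


def path_for_flow(src_ip, dst_ip, src_port, dst_port, paths):
    """Static: hash the flow's addresses and ports onto one of the paths."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return paths[int.from_bytes(digest[:4], "big") % len(paths)]


def least_utilized_path(utilization):
    """Dynamic: choose the path with the lowest measured bandwidth use.

    `utilization` maps each path to its current load (e.g. a 0..1 fraction).
    """
    return min(utilization, key=utilization.get)
```

The static function always sends packets of the same flow down the same path regardless of load, while the dynamic function's answer changes as the measurements do.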

Failovers

Load balancing is often used to implement failover, the continuation of service after the failure of one or more of its components. The components are monitored continually (e.g., web servers may be monitored by fetching known pages), and when one becomes unresponsive, the load balancer is informed and no longer sends traffic to it. When a component comes back online, the load balancer starts rerouting traffic to it. For this to work, there must be at least one component in excess of the service's capacity (N+1 redundancy). This can be much less expensive and more flexible than failover approaches where every single live component is paired with a single backup component that takes over in the event of a failure (dual modular redundancy). Some RAID systems can also utilize a hot spare for a similar effect.[Failover and load balancing, ''IBM'', 6 January 2019]
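The monitoring loop described above can be sketched as follows. The `probe` callable stands in for a real check such as fetching a known page; `FailoverPool`, `run_checks`, and `has_capacity` are hypothetical names for this example, and scheduling the checks is left to the caller.

```python
class FailoverPool:
    """Track which components currently receive traffic."""

    def __init__(self, components, probe):
        self.components = list(components)
        self.probe = probe                    # callable: component -> bool
        self.in_rotation = set(self.components)

    def run_checks(self):
        """Probe every component once and update the rotation."""
        for component in self.components:
            if self.probe(component):
                self.in_rotation.add(component)      # back online
            else:
                self.in_rotation.discard(component)  # unresponsive
        return sorted(self.in_rotation)

    def has_capacity(self, required):
        """True while at least `required` components are in rotation."""
        return len(self.in_rotation) >= required
```

With N+1 redundancy, a service that needs two servers would run three, so `has_capacity(2)` stays true after any single failure.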

See also

* Affinity mask

* Application Delivery Controller

* Autoscaling

* Cloud computing

* Cloud load balancing

* Common Address Redundancy Protocol

* Edge computing

* InterPlanetary File System

* Network Load Balancing

* SRV record

References

External links

Server routing for load balancing with full auto failure recovery


If, however, the tasks cannot be subdivided (i.e., they are atomic), although optimizing task assignment is a difficult problem, it is still possible to approximate a relatively fair distribution of tasks, provided that the size of each of them is much smaller than the total computation performed by each of the nodes.

Most of the time, the execution time of a task is unknown and only rough approximations are available. This algorithm, although particularly efficient, is not viable for these scenarios.

However, the quality of the algorithm can be greatly improved by replacing the master with a task list that can be used by different processors. Although this algorithm is a little more difficult to implement, it promises much better scalability, although still insufficient for very large computing centers.
