
HPCC (High-Performance Computing Cluster), also known as DAS (Data Analytics Supercomputer), is an open source, data-intensive computing system platform developed by LexisNexis Risk Solutions. The HPCC platform incorporates a software architecture implemented on commodity computing clusters to provide high-performance, data-parallel processing for applications utilizing big data. The HPCC platform includes system configurations to support both parallel batch data processing (Thor) and high-performance online query applications using indexed data files (Roxie). The HPCC platform also includes a data-centric declarative programming language for parallel data processing called ECL.

The public release of HPCC was announced in 2011, after ten years of in-house development (according to LexisNexis). It is an alternative to Hadoop and other big data platforms.

System architecture

The HPCC system architecture includes two distinct cluster processing environments, Thor and Roxie, each of which can be optimized independently for its parallel data processing purpose.

The first of these platforms is called Thor, a data refinery whose overall purpose is the general processing of massive volumes of raw data of any type for any purpose. It is typically used for data cleansing and hygiene, ETL (extract, transform, load) processing of the raw data, record linking and entity resolution, large-scale ad-hoc complex analytics, and creation of keyed data and indexes to support high-performance structured queries and data warehouse applications. The data refinery name Thor is a reference to the mythical Norse god of thunder, whose large hammer is symbolic of crushing large amounts of raw data into useful information. A Thor cluster is similar in its function, execution environment, filesystem, and capabilities to the Google and Hadoop MapReduce platforms.

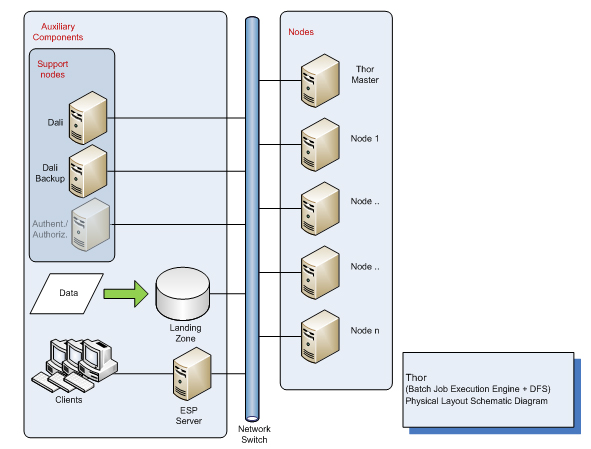

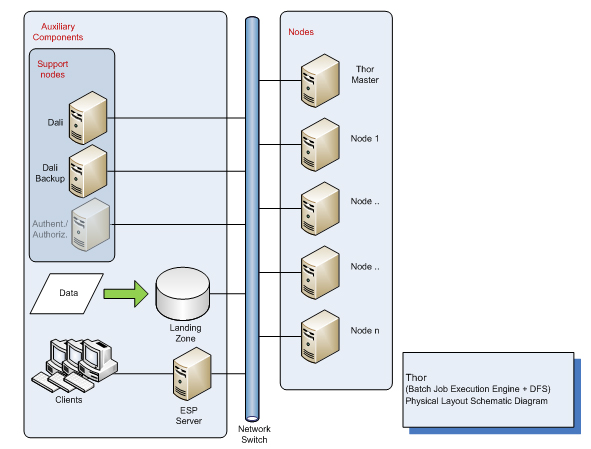

Figure 2 shows a representation of a physical Thor processing cluster which functions as a batch job execution engine for scalable data-intensive computing applications. In addition to the Thor master and slave nodes, additional auxiliary and common components are needed to implement a complete HPCC processing environment.
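The batch refinement role described above can be illustrated with a small Python sketch. It is not ECL and does not use any HPCC API; the record layout and the function names (`cleanse`, `partition`, `refine_partition`, `refine`) are hypothetical, and a thread pool on one machine stands in for the worker nodes of a cluster. The sketch only shows the general shape of a partitioned cleanse-and-deduplicate job coordinated by a master step.

```python
from concurrent.futures import ThreadPoolExecutor


def cleanse(record):
    """Normalize one raw record: collapse whitespace, fix case."""
    return {
        "name": " ".join(record["name"].split()).title(),
        "email": record["email"].strip().lower(),
    }


def partition(records, n_workers):
    """Split records into n_workers partitions by a deterministic key hash.

    Records with the same normalized email always land in the same
    partition, so each worker can deduplicate independently.
    """
    parts = [[] for _ in range(n_workers)]
    for rec in records:
        key = rec["email"].strip().lower()
        parts[sum(key.encode()) % n_workers].append(rec)
    return parts


def refine_partition(records):
    """Worker step: cleanse records, then keep the first record per email."""
    unique = {}
    for rec in map(cleanse, records):
        unique.setdefault(rec["email"], rec)
    return list(unique.values())


def refine(records, n_workers=3):
    """Master step: partition, run the workers, merge into sorted output."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = list(pool.map(refine_partition, partition(records, n_workers)))
    merged = [rec for part in results for rec in part]
    return sorted(merged, key=lambda r: r["email"])


raw = [
    {"name": "  ada   lovelace ", "email": "Ada@Example.com "},
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "alan turing", "email": "ALAN@example.com"},
]
refined = refine(raw)
```

Partitioning on the deduplication key is the design choice that matters here: because duplicates can never be split across workers, no cross-worker communication is needed before the final merge.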

The second of the parallel data processing platforms is called Roxie and functions as a rapid data delivery engine. It is designed as an online, high-performance structured query and analysis platform, or data warehouse, that meets the parallel data access requirements of online applications through Web services interfaces, supporting thousands of simultaneous queries and users with sub-second response times. Roxie uses a distributed indexed filesystem to provide parallel processing of queries, with an execution environment and filesystem optimized for high-performance online processing. A Roxie cluster is similar in its function and capabilities to ElasticSearch and to Hadoop with HBase and Hive capabilities added, and provides near-real-time, predictable query latencies. Both Thor and Roxie clusters use the ECL programming language for implementing applications, increasing continuity and programmer productivity.

Figure 3 shows a representation of a physical Roxie processing cluster which functions as an online query execution engine for high-performance query and data warehousing applications. A Roxie cluster includes multiple nodes with server and worker processes for processing queries; an additional auxiliary component called an ESP server which provides interfaces for external client access to the cluster; and additional common components which are shared with a Thor cluster in an HPCC environment. Although a Thor processing cluster can be implemented and used without a Roxie cluster, an HPCC environment which includes a Roxie cluster should also include a Thor cluster. The Thor cluster is used to build the distributed index files used by the Roxie cluster and to develop online queries which will be deployed with the index files to the Roxie cluster.
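The division of labour between the two clusters, with a batch step building index files and an online step serving keyed queries from them, can be sketched in Python as follows. This is an illustration under stated assumptions, not HPCC's implementation: `part_for`, `build_index_parts`, `QueryNode`, and `query` are hypothetical names, in-memory lists stand in for distributed index files, and the simple byte-sum hash is chosen only to keep the example deterministic.

```python
from bisect import bisect_left


def part_for(key_value, n_parts):
    """Deterministically map a key to the index part that holds it."""
    return sum(str(key_value).encode()) % n_parts


def build_index_parts(records, key, n_parts):
    """Batch step (the Thor role here): build sorted, key-partitioned parts."""
    parts = [[] for _ in range(n_parts)]
    for rec in records:
        parts[part_for(rec[key], n_parts)].append((rec[key], rec))
    for part in parts:
        part.sort(key=lambda entry: entry[0])  # stable sort on the key only
    return parts


class QueryNode:
    """Online step (the Roxie role here): serve lookups from one index part."""

    def __init__(self, index_part):
        self.keys = [k for k, _ in index_part]
        self.rows = [r for _, r in index_part]

    def lookup(self, key_value):
        # Binary search over the sorted keys, then collect every match.
        i = bisect_left(self.keys, key_value)
        matches = []
        while i < len(self.keys) and self.keys[i] == key_value:
            matches.append(self.rows[i])
            i += 1
        return matches


def query(nodes, key_value):
    """Route the query to the single node whose part can contain the key."""
    return nodes[part_for(key_value, len(nodes))].lookup(key_value)


people = [
    {"id": 17, "name": "Ada"},
    {"id": 42, "name": "Alan"},
    {"id": 42, "name": "Alan T."},
    {"id": 99, "name": "Grace"},
]
# "Deploy" the index parts built by the batch step to the query nodes.
nodes = [QueryNode(part) for part in build_index_parts(people, "id", n_parts=3)]
hits = query(nodes, 42)
```

Because the index is built ahead of time and each lookup touches only one sorted part, the cost of a query stays small and predictable, which is the property the online cluster is described as providing.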

Software architecture

The HPCC software architecture incorporates the Thor and Roxie clusters as well as common middleware components, an external communications layer, client interfaces which provide both end-user services and system management tools, and auxiliary components to support monitoring and to facilitate loading and storing of filesystem data from external sources. Usually an HPCC environment includes only Thor clusters, or both Thor and Roxie clusters, although Roxie is occasionally used to build its own indexes. The overall HPCC software architecture is shown in Figure 4.

HPCC Systems

HPCC Systems (High Performance Computing Cluster) is part of LexisNexis Risk Solutions and was formed to promote and sell the HPCC software. In June 2011, it announced the offering of the software under an open source dual license model. HPCC Systems offers both a Community Edition and an Enterprise Edition. The Community Edition is free to download, includes the source code, and is released under the Apache License 2.0. The Enterprise Edition is available under a paid commercial license and includes training, support, indemnification, and additional modules. In November 2011, HPCC Systems announced the availability of its Thor Data Refinery Cluster on Amazon Web Services.

In January 2012, HPCC Systems announced distributed machine learning algorithms.

See also

* Apache Hadoop

* Apache Spark

* Aster Data Systems

* ECL (data-centric programming language)

* ElasticSearch

* Sector/Sphere

* Machine learning

* MapReduce


External links

* Sandia sees data management challenges spiral

* Sandia National Laboratories Leverages the Data Analytics Supercomputer (DAS) by LexisNexis Risk & Information Analytics Group, Which Offers Breakthrough High Performance Computing to Address Data Management and Analysis Challenges

* Programming models for the LexisNexis High Performance Computing Cluster

* LexisNexis Data Analytics Supercomputer

* LexisNexis HPCC Systems

* Reference to the term BORPS (Billions of Records Per Second)

* http://catalog.kennesaw.edu/preview_program.php?catoid=25&poid=3023&returnto=2119 High Performance Computing Clusters (HPCC) and Big Data Analytics Certificate - Stand-Alone

* FAU Receives National Science Foundation Rapid Response Grant to Develop Innovative Computer Model for Ebola Spread

* CPL Online delivers added value for clients through its Big Data Platform

* HPCC Systems
