DFA Minimization on:

[Wikipedia]

[Google]

[Amazon]

In

In

let reachable_states :=

let new_states :=

do while (new_states ŌēĀ the empty set)

unreachable_states := Q \ reachable_states

Assuming an efficient implementation of the state sets (e.g.

P :=

W :=

while (W is not empty) do

choose and remove a set A from W

for each c in ╬Ż do

let X be the set of states for which a transition on c leads to a state in A

for each set Y in P for which X Ōł® Y is nonempty and Y \ X is nonempty do

replace Y in P by the two sets X Ōł® Y and Y \ X

if Y is in W

replace Y in W by the same two sets

else

if , X Ōł® Y, <= , Y \ X,

add X Ōł® Y to W

else

add Y \ X to W

The algorithm starts with a partition that is too coarse: every pair of states that are equivalent according to the Nerode congruence belong to the same set in the partition, but pairs that are inequivalent might also belong to the same set. It gradually refines the partition into a larger number of smaller sets, at each step splitting sets of states into pairs of subsets that are necessarily inequivalent.

The initial partition is a separation of the states into two subsets of states that clearly do not have the same behavior as each other: the accepting states and the rejecting states. The algorithm then repeatedly chooses a set from the current partition and an input symbol , and splits each of the sets of the partition into two (possibly empty) subsets: the subset of states that lead to on input symbol , and the subset of states that do not lead to . Since is already known to have different behavior than the other sets of the partition, the subsets that lead to also have different behavior than the subsets that do not lead to . When no more splits of this type can be found, the algorithm terminates.

Lemma. Given a fixed character ''c'' and an equivalence class ''Y'' that splits into equivalence classes ''B'' and ''C'', only one of ''B'' or ''C'' is necessary to refine the whole partition.

Example: Suppose we have an equivalence class ''Y'' that splits into equivalence classes ''B'' and ''C''. Suppose we also have classes ''D'', ''E'', and ''F''; ''D'' and ''E'' have states with transitions into ''B'' on character ''c'', while ''F'' has transitions into ''C'' on character ''c''. By the Lemma, we can choose either ''B'' or ''C'' as the distinguisher, let's say ''B''. Then the states of ''D'' and ''E'' are split by their transitions into ''B''. But ''F'', which doesn't point into ''B'', simply doesn't split during the current iteration of the algorithm; it will be refined by other distinguisher(s).

Observation. All of ''B'' or ''C'' is necessary to split referring classes like ''D'', ''E'', and ''F'' correctlyŌĆösubsets won't do.

The purpose of the outermost

' ; thus, at least one word is accepted from each state. to the language of ,Language of a state is the set of words accepted from that state. which couldn't possibly be present in , since this word is unique to (no other state accepts it). We see that this holds for each pair of powerstates, and thus each powerstate is distinguishable from every other powerstate. Therefore, after determinization of , we have a DFA with no indistinguishable or unreachable states; hence, the minimal DFA for the original .

If is already deterministic, then it suffices to trim it,Trim = remove unreachable and dead states. reverse it, determinize it, and then reverse it again. This could be thought of as starting with in the process above (assuming it has already been trimmed), since the input FA is already deterministic (but keep in mind it's actually not a reversal). We reverse and determinize to obtain , which is the minimal DFA for the ''reversal'' of the language of (since we did only one reversal so far). Now all that's left to do is to reverse to obtain the minimal DFA for the original language.

Technical Report STAN-CS-71-190

Stanford University, Computer Science Department, January 1971. * *. *. *. *. * *. *

DFA minimization using the MyhillŌĆōNerode theorem

{{DEFAULTSORT:Dfa Minimization Finite automata Articles with example pseudocode

In

In automata theory

Automata theory is the study of abstract machines and automata, as well as the computational problems that can be solved using them. It is a theory in theoretical computer science. The word ''automata'' comes from the Greek word ╬▒ßĮÉŽäŽī╬╝╬▒Žä╬┐Ž ...

(a branch of theoretical computer science

Theoretical computer science (TCS) is a subset of general computer science and mathematics that focuses on mathematical aspects of computer science such as the theory of computation, lambda calculus, and type theory.

It is difficult to circumsc ...

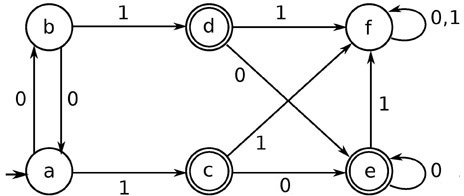

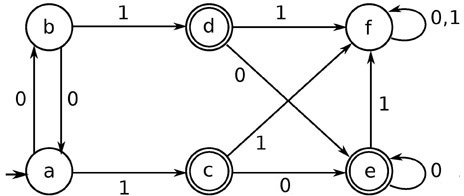

), DFA minimization is the task of transforming a given deterministic finite automaton

In the theory of computation, a branch of theoretical computer science, a deterministic finite automaton (DFA)ŌĆöalso known as deterministic finite acceptor (DFA), deterministic finite-state machine (DFSM), or deterministic finite-state automa ...

(DFA) into an equivalent DFA that has a minimum number of states. Here, two DFAs are called equivalent if they recognize the same regular language

In theoretical computer science and formal language theory, a regular language (also called a rational language) is a formal language that can be defined by a regular expression, in the strict sense in theoretical computer science (as opposed to ...

. Several different algorithms accomplishing this task are known and described in standard textbooks on automata theory.

Minimal DFA

For each regular language, there also exists a minimal automaton that accepts it, that is, a DFA with a minimum number of states and this DFA is unique (except that states can be given different names). The minimal DFA ensures minimal computational cost for tasks such aspattern matching

In computer science, pattern matching is the act of checking a given sequence of tokens for the presence of the constituents of some pattern. In contrast to pattern recognition, the match usually has to be exact: "either it will or will not be ...

.

There are two classes of states that can be removed or merged from the original DFA without affecting the language it accepts.

* Unreachable states are the states that are not reachable from the initial state of the DFA, for any input string. These states can be removed.

* Dead states are the states from which no final state is reachable. These states can be removed unless the automaton is required to be complete

Complete may refer to:

Logic

* Completeness (logic)

* Completeness of a theory, the property of a theory that every formula in the theory's language or its negation is provable

Mathematics

* The completeness of the real numbers, which implies t ...

.

* Nondistinguishable states are those that cannot be distinguished from one another for any input string. These states can be merged.

DFA minimization is usually done in three steps:

# remove dead and unreachable states (this will accelerate the following step),

# merge nondistinguishable states,

# optionally, re-create a single dead state ("sink" state) if the resulting DFA is required to be complete.

Unreachable states

The state of a deterministic finite automaton is unreachable if no string in exists for which . In this definition, is the set of states, is the set of input symbols, is the transition function (mapping a state and an input symbol to a set of states), is its extension to strings (also known as extended transition function), is the initial state, and is the set of accepting (also known as final) states. Reachable states can be obtained with the following algorithm:new_states) and operations on them (such as adding a state or checking whether it is present), this algorithm can be implemented with time complexity , where is the number of states and is the number of transitions of the input automaton.

Unreachable states can be removed from the DFA without affecting the language that it accepts.

Nondistinguishable states

The following algorithms present various approaches to merging nondistinguishable states.Hopcroft's algorithm

One algorithm for merging the nondistinguishable states of a DFA, due to , is based onpartition refinement In the design of algorithms, partition refinement is a technique for representing a partition of a set as a data structure that allows the partition to be refined by splitting its sets into a larger number of smaller sets. In that sense it is dual t ...

, partitioning the DFA states into groups by their behavior. These groups represent equivalence class

In mathematics, when the elements of some set S have a notion of equivalence (formalized as an equivalence relation), then one may naturally split the set S into equivalence classes. These equivalence classes are constructed so that elements a ...

es of the Nerode congruence, whereby every two states are equivalent if they have the same behavior for every input sequence. That is, for every two states and that belong to the same block of the partition , and every input word , the transitions determined by should always take states and to either states that both accept or states that both reject. It should not be possible for to take to an accepting state and to a rejecting state or vice versa.

The following pseudocode

In computer science, pseudocode is a plain language description of the steps in an algorithm or another system. Pseudocode often uses structural conventions of a normal programming language, but is intended for human reading rather than machine re ...

describes the form of the algorithm as given by Xu. Alternative forms have also been presented..

if statement (if Y is in W) is to patch up ''W'', the set of distinguishers. We see in the previous statement in the algorithm that ''Y'' has just been split. If ''Y'' is in ''W'', it has just become obsolete as a means to split classes in future iterations. So ''Y'' must be replaced by both splits because of the Observation above. If ''Y'' is not in ''W'', however, only one of the two splits, not both, needs to be added to ''W'' because of the Lemma above. Choosing the smaller of the two splits guarantees that the new addition to ''W'' is no more than half the size of ''Y''; this is the core of the Hopcroft algorithm: how it gets its speed, as explained in the next paragraph.

The worst case

In computer science, best, worst, and average cases of a given algorithm express what the resource usage is ''at least'', ''at most'' and ''on average'', respectively. Usually the resource being considered is running time, i.e. time complexity, b ...

running time of this algorithm is , where is the number of states and is the size of the alphabet. This bound follows from the fact that, for each of the transitions of the automaton, the sets drawn from that contain the target state of the transition have sizes that decrease relative to each other by a factor of two or more, so each transition participates in of the splitting steps in the algorithm. The partition refinement In the design of algorithms, partition refinement is a technique for representing a partition of a set as a data structure that allows the partition to be refined by splitting its sets into a larger number of smaller sets. In that sense it is dual t ...

data structure allows each splitting step to be performed in time proportional to the number of transitions that participate in it. This remains the most efficient algorithm known for solving the problem, and for certain distributions of inputs its average-case complexity

In computational complexity theory, the average-case complexity of an algorithm is the amount of some computational resource (typically time) used by the algorithm, averaged over all possible inputs. It is frequently contrasted with worst-case comp ...

is even better, ..

Once Hopcroft's algorithm has been used to group the states of the input DFA into equivalence classes, the minimum DFA can be constructed by forming one state for each equivalence class. If is a set of states in , is a state in , and is an input character, then the transition in the minimum DFA from the state for , on input , goes to the set containing the state that the input automaton would go to from state on input . The initial state of the minimum DFA is the one containing the initial state of the input DFA, and the accepting states of the minimum DFA are the ones whose members are accepting states of the input DFA.

Moore's algorithm

Moore's algorithm for DFA minimization is due to . Like Hopcroft's algorithm, it maintains a partition that starts off separating the accepting from the rejecting states, and repeatedly refines the partition until no more refinements can be made. At each step, it replaces the current partition with the coarsest common refinement of partitions, one of which is the current one and the rest of which are the preimages of the current partition under the transition functions for each of the input symbols. The algorithm terminates when this replacement does not change the current partition. Its worst-case time complexity is : each step of the algorithm may be performed in time using a variant ofradix sort

In computer science, radix sort is a non-comparative sorting algorithm. It avoids comparison by creating and distributing elements into buckets according to their radix. For elements with more than one significant digit, this bucketing process i ...

to reorder the states so that states in the same set of the new partition are consecutive in the ordering, and there are at most steps since each one but the last increases the number of sets in the partition. The instances of the DFA minimization problem that cause the worst-case behavior are the same as for Hopcroft's algorithm. The number of steps that the algorithm performs can be much smaller than , so on average (for constant ) its performance is or even depending on the random distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon ...

on automata chosen to model the algorithm's average-case behavior.

Brzozowski's algorithm

Reversing the transitions of anon-deterministic finite automaton

In automata theory, a finite-state machine is called a deterministic finite automaton (DFA), if

* each of its transitions is ''uniquely'' determined by its source state and input symbol, and

* reading an input symbol is required for each state tr ...

(NFA) and switching initial and final statesIn case there are several final states in ''M'', we either must allow multiple initial states in the reversal of ''M''; or add an extra state with ╬Ą-transitions to all the initial states, and make only this new state initial. produces an NFA for the reversal of the original language. Converting this NFA to a DFA using the standard powerset construction

In the theory of computation and automata theory, the powerset construction or subset construction is a standard method for converting a nondeterministic finite automaton (NFA) into a deterministic finite automaton (DFA) which recognizes the sa ...

(keeping only the reachable states of the converted DFA) leads to a DFA for the same reversed language. As observed, repeating this reversal and determinization a second time, again keeping only reachable states, produces the minimal DFA for the original language.

The intuition behind the algorithm is this: determinizing the reverse automaton merges states that are nondistinguishable in the original automaton, but may merge also states that should ''not'' be merged (i.e., are not merged in the minimal DFA). In such case, after we reverse the automaton for the second time, it may not be deterministic. That is why we need to determinize it again, obtaining the minimal DFA.

Proof of correctness

After we determinize to obtain , we reverse this to obtain . Now recognises the same language as , but there's one important difference: there are no two states in from which we can accept the same word. This follows from being deterministic, viz. there are no two states in that we can reach from the initial state through the same word. The determinization of then creates powerstates (sets of states of ), where every two powerstates differ ŌĆÆ naturally ŌĆÆ in at least one state of . Assume and ; then contributes at least one wordRecall there are no dead states in ''M''Complexity

The worst-case complexity of Brzozowski's algorithm is exponential in the number of states of the input automaton. This holds regardless of whether the input is a NFA or a DFA. In the case of DFA, the exponential explosion can happen during determinization of the reversal of the input automaton;For instance, the language of binary strings whose th symbol is a one requires only states, but its reversal requires states. provides a ternary -state DFA whose reversal requires states, the maximum possible. For additional examples and the observation of the connection between these examples and the worst-case analysis of Brzozowski's algorithm, see . in the case of NFA, it can also happen during the initial determinization of the input automaton.Exponential explosion will happen at most once, not in both determinizations. That is, the algorithm is at worst exponential, not doubly-exponential. However, the algorithm frequently performs better than this worst case would suggest.NFA minimization

While the above procedures work for DFAs, the method of partitioning does not work for non-deterministic finite automata (NFAs)., Section 4.4, Figure labeled "Minimizing the States of an NFA", p. 163. While an exhaustive search may minimize an NFA, there is nopolynomial-time algorithm

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by t ...

to minimize general NFAs unless P=PSPACE

In computational complexity theory, PSPACE is the set of all decision problems that can be solved by a Turing machine using a polynomial amount of space.

Formal definition

If we denote by SPACE(''t''(''n'')), the set of all problems that can b ...

, an unsolved conjecture in computational complexity theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by ...

that is widely believed to be false. However, there are methods of NFA minimization

In automata theory (a branch of theoretical computer science), NFA minimization is the task of transforming a given nondeterministic finite automaton (NFA) into an equivalent NFA that has a minimum number of states, transitions, or both. While effi ...

that may be more efficient than brute force search.

See also

*State encoding for low power State encoding assigns a unique pattern of ones and zeros to each defined state of a finite-state machine (FSM). Traditionally, design criteria for FSM synthesis were speed, area or both. Following Moore's law, with technology advancement, density a ...

Notes

References

*. * *. *. *. *. See also preliminary versionTechnical Report STAN-CS-71-190

Stanford University, Computer Science Department, January 1971. * *. *. *. *. * *. *

External links

DFA minimization using the MyhillŌĆōNerode theorem

{{DEFAULTSORT:Dfa Minimization Finite automata Articles with example pseudocode