The sensitivity index or discriminability index or detectability index is a dimensionless statistic used in signal detection theory. A higher index indicates that the signal can be more readily detected.

Definition

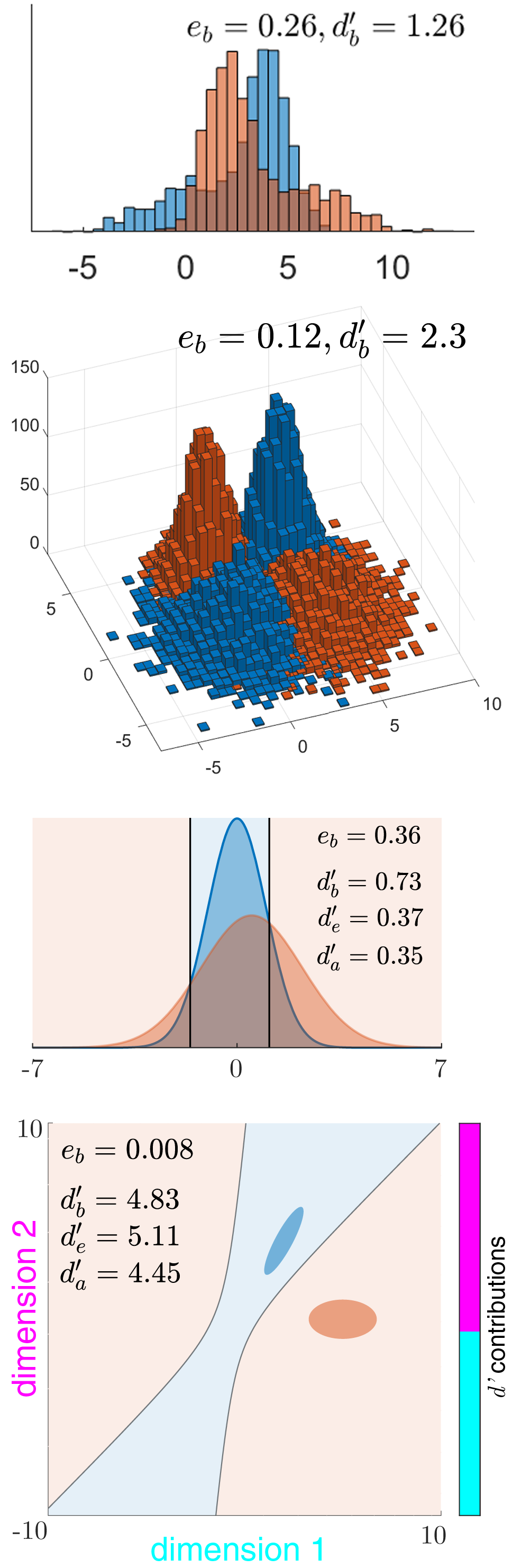

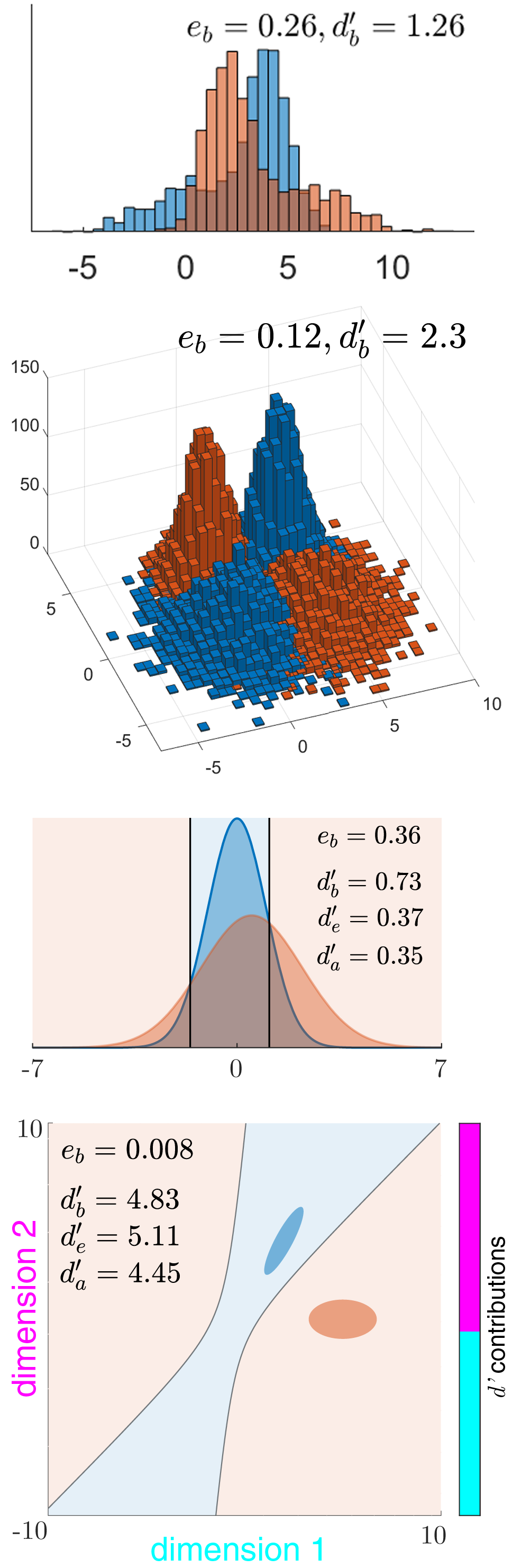

The discriminability index is the separation between the means of two distributions (typically the signal and the noise distributions), in units of the standard deviation.

Equal variances/covariances

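For two univariate distributions $a$ and $b$ with the same standard deviation $\sigma$, it is denoted by $d'$ ('dee-prime'):

$$d' = \frac{|\mu_a - \mu_b|}{\sigma}.$$

In higher dimensions, i.e. with two multivariate distributions with the same variance-covariance matrix $\boldsymbol{\Sigma}$ (whose symmetric square root, the standard deviation matrix, is $\mathbf{S}$), this generalizes to the Mahalanobis distance between the two distributions:

$$d' = \sqrt{(\boldsymbol{\mu}_a - \boldsymbol{\mu}_b)^\top \boldsymbol{\Sigma}^{-1} (\boldsymbol{\mu}_a - \boldsymbol{\mu}_b)} = \lVert \mathbf{S}^{-1} (\boldsymbol{\mu}_a - \boldsymbol{\mu}_b) \rVert = \frac{\lVert \boldsymbol{\mu}_a - \boldsymbol{\mu}_b \rVert}{\sigma_{\boldsymbol{\mu}}},$$

where $\sigma_{\boldsymbol{\mu}} = 1 / \lVert \mathbf{S}^{-1} \hat{\boldsymbol{\mu}} \rVert$ is the 1d slice of the sd along the unit vector $\hat{\boldsymbol{\mu}} = (\boldsymbol{\mu}_a - \boldsymbol{\mu}_b) / \lVert \boldsymbol{\mu}_a - \boldsymbol{\mu}_b \rVert$ through the means, i.e. the multivariate $d'$ equals the $d'$ along the 1d slice through the means.

This is also estimated as $Z(\text{hit rate}) - Z(\text{false alarm rate})$, where $Z$ is the inverse cumulative distribution function of the standard normal.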
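The equal-variance definition and the rate-based estimate can be sketched in a few lines of Python. This is a minimal illustration, assuming only the standard library; the function names `dprime_from_params` and `dprime_from_rates` and the example numbers are hypothetical and do not come from the article.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1


def dprime_from_params(mu_signal: float, mu_noise: float, sigma: float) -> float:
    """d' for two distributions that share the standard deviation sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return abs(mu_signal - mu_noise) / sigma


def dprime_from_rates(hit_rate: float, false_alarm_rate: float) -> float:
    """Estimate d' as Z(hit rate) - Z(false alarm rate).

    Both rates must lie strictly between 0 and 1, because the inverse
    standard normal CDF is unbounded at 0 and 1.
    """
    z = _STD_NORMAL.inv_cdf
    return z(hit_rate) - z(false_alarm_rate)


# Hypothetical observer: noise ~ N(0, 1), signal ~ N(2, 1), criterion at 1.
criterion = 1.0
hit = 1.0 - NormalDist(mu=2.0, sigma=1.0).cdf(criterion)  # P("signal" | signal)
fa = 1.0 - NormalDist(mu=0.0, sigma=1.0).cdf(criterion)   # P("signal" | noise)

print(dprime_from_params(2.0, 0.0, 1.0))
print(dprime_from_rates(hit, fa))
```

With exact rates the two routes agree. With empirical rates of exactly 0 or 1 the estimate is undefined, so some correction is needed before calling `dprime_from_rates`.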

Unequal variances/covariances

When the two distributions have different standard deviations (or in general dimensions, different covariance matrices), there exist several contending indices, all of which reduce to $d'$ for equal variance/covariance.

Bayes discriminability index

This is the maximum (Bayes-optimal) discriminability index for two distributions, based on the amount of their overlap, i.e. the optimal (Bayes) error of classification $e_b$ by an ideal observer, or its complement, the optimal accuracy $a_b$:

$$d'_b = -2 Z(e_b) = 2 Z(a_b),$$

where $Z$ is the inverse cumulative distribution function of the standard normal. The Bayes discriminability between univariate or multivariate normal distributions can be numerically computed (Matlab code), and may also be used as an approximation when the distributions are close to normal.

$d'_b$ is a positive-definite statistical distance measure that is free of assumptions about the distributions, like the Kullback-Leibler divergence $D_\text{KL}$. $D_\text{KL}(a,b)$ is asymmetric, whereas $d'_b(a,b)$ is symmetric for the two distributions. However, $d'_b$ does not satisfy the triangle inequality, so it is not a full metric.

In particular, for a yes/no task between two univariate normal distributions with means $\mu_a, \mu_b$ and variances $v_a > v_b$, the Bayes-optimal classification accuracies are:

$$p(A|a) = p\left(\chi'^2_{1,\, v_a \lambda} > v_b c\right), \qquad p(B|b) = p\left(\chi'^2_{1,\, v_b \lambda} < v_a c\right),$$

where $\chi'^2$ denotes the non-central chi-squared distribution, $\lambda = \left(\frac{\mu_a - \mu_b}{v_a - v_b}\right)^2$, and $c = \lambda + \frac{\ln v_a - \ln v_b}{v_a - v_b}$. The Bayes discriminability is $d'_b = 2 Z\left(\frac{p(A|a) + p(B|b)}{2}\right)$.

$d'_b$ can also be computed from the ROC curve of a yes/no task between two univariate normal distributions with a single shifting criterion. It can also be computed from the ROC curve of any two distributions (in any number of variables) with a shifting likelihood-ratio, by locating the point on the ROC curve that is farthest from the diagonal.
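For two univariate normals, the yes/no Bayes accuracies can be evaluated without a non-central chi-squared routine. The sketch below assumes equal priors and uses the fact that, in one dimension, the region where the narrower distribution is more likely is an interval, so the accuracies are differences of normal CDFs. The function name `bayes_dprime` and its argument order are hypothetical; this is not the Matlab code the article refers to.

```python
import math
from statistics import NormalDist


def bayes_dprime(mu_a: float, sd_a: float, mu_b: float, sd_b: float) -> float:
    """Bayes discriminability d'_b of two univariate normals (equal priors)."""
    if math.isclose(sd_a, sd_b):
        # Equal variances: a single criterion midway between the means is
        # optimal, and d'_b reduces to the ordinary d'.
        return abs(mu_a - mu_b) / sd_a
    if sd_a < sd_b:
        # Relabel so that 'a' is always the wider distribution (v_a > v_b).
        mu_a, sd_a, mu_b, sd_b = mu_b, sd_b, mu_a, sd_a
    v_a, v_b = sd_a ** 2, sd_b ** 2
    dv = v_a - v_b
    # The likelihoods are equal at x0 +/- half_width; 'b' is more likely
    # inside that interval and 'a' is more likely outside it.
    x0 = (mu_b * v_a - mu_a * v_b) / dv
    half_width = math.sqrt(
        (math.log(v_a / v_b) + (mu_a - mu_b) ** 2 / dv) * v_a * v_b / dv
    )
    lo, hi = x0 - half_width, x0 + half_width
    dist_a, dist_b = NormalDist(mu_a, sd_a), NormalDist(mu_b, sd_b)
    p_a_correct = 1.0 - (dist_a.cdf(hi) - dist_a.cdf(lo))  # p(A|a)
    p_b_correct = dist_b.cdf(hi) - dist_b.cdf(lo)          # p(B|b)
    accuracy = 0.5 * (p_a_correct + p_b_correct)
    return 2.0 * NormalDist().inv_cdf(accuracy)


# Hypothetical example: a wide distribution N(2, 3^2) against a narrow N(0, 1).
print(bayes_dprime(2.0, 3.0, 0.0, 1.0))
```

The relabelling step makes the result independent of argument order, which matches the symmetry of $d'_b$. For extremely well separated distributions the accuracy rounds to 1 in floating point and the inverse CDF raises an error, so this sketch is only suitable for moderate separations.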

For a two-interval task between these distributions (means $\mu_a, \mu_b$ and variances $v_a > v_b$), the optimal accuracy is $a_b = p\left(\tilde{\chi}^2_{\boldsymbol{w}, \boldsymbol{k}, \boldsymbol{\lambda}, 0, 0} > 0\right)$ ($\tilde{\chi}^2$ denotes the generalized chi-squared distribution), where the weights are $\boldsymbol{w} = [v_a, \; -v_b]$, the degrees of freedom are $\boldsymbol{k} = [1, \; 1]$, and the non-centralities are $\boldsymbol{\lambda} = \left(\frac{\mu_a - \mu_b}{v_a - v_b}\right)^2 [v_a, \; v_b]$. The Bayes discriminability is $d'_b = 2 Z(a_b)$.

RMS sd discriminability index

A common approximate (i.e. sub-optimal) discriminability index that has a closed form is to take the average of the variances, i.e. the rms of the two standard deviations: $d'_a = |\mu_a - \mu_b| / \sigma_\text{rms}$, with $\sigma_\text{rms} = \sqrt{(\sigma_a^2 + \sigma_b^2)/2}$ (also denoted by $d_a$). It is $\sqrt{2}$ times the $z$-score of the area under the receiver operating characteristic curve (AUC) of a single-criterion observer. This index is extended to general dimensions as the Mahalanobis distance using the pooled covariance, i.e. with $\mathbf{S}_\text{rms} = \left[(\boldsymbol{\Sigma}_a + \boldsymbol{\Sigma}_b)/2\right]^{1/2}$ as the common sd matrix.
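The relation between the rms index and the AUC can be checked numerically. The sketch below assumes two univariate normals with the signal mean above the noise mean. It traces the single-criterion ROC by sweeping one threshold, integrates it with the trapezoid rule, and compares $\sqrt{2}\,Z(\text{AUC})$ with the closed form. The function names, the integration range of 8 standard deviations, and the step count are illustrative choices, not part of the article.

```python
import math
from statistics import NormalDist


def dprime_rms(mu_a: float, sd_a: float, mu_b: float, sd_b: float) -> float:
    """d'_a: mean separation in units of the rms of the two sds."""
    sigma_rms = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2.0)
    return abs(mu_a - mu_b) / sigma_rms


def single_criterion_auc(mu_signal: float, sd_signal: float,
                         mu_noise: float, sd_noise: float,
                         steps: int = 4000) -> float:
    """Area under the ROC traced by one shifting criterion (trapezoid rule)."""
    signal = NormalDist(mu_signal, sd_signal)
    noise = NormalDist(mu_noise, sd_noise)
    lo = min(mu_signal - 8 * sd_signal, mu_noise - 8 * sd_noise)
    hi = max(mu_signal + 8 * sd_signal, mu_noise + 8 * sd_noise)
    # Sweep the criterion from high to low so both rates rise from 0 to 1.
    points = []
    for i in range(steps + 1):
        c = hi - (hi - lo) * i / steps
        false_alarm = 1.0 - noise.cdf(c)
        hit = 1.0 - signal.cdf(c)
        points.append((false_alarm, hit))
    auc = 0.0
    for (f0, h0), (f1, h1) in zip(points, points[1:]):
        auc += (f1 - f0) * (h0 + h1) / 2.0
    return auc


# Hypothetical example: signal ~ N(2, 3^2), noise ~ N(0, 1).
auc = single_criterion_auc(2.0, 3.0, 0.0, 1.0)
from_auc = math.sqrt(2.0) * NormalDist().inv_cdf(auc)
print(from_auc, dprime_rms(2.0, 3.0, 0.0, 1.0))
```

The two printed values should agree up to the integration error of the trapezoid rule.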

Average sd discriminability index

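Another index is $d'_e = |\mu_a - \mu_b| / \sigma_\text{avg}$, with $\sigma_\text{avg} = (\sigma_a + \sigma_b)/2$, extended to general dimensions using $\mathbf{S}_\text{avg} = (\mathbf{S}_a + \mathbf{S}_b)/2$ as the common sd matrix.

Comparison of the indices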
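As a concrete reference point, the two closed-form indices (rms sd and average sd) can be evaluated side by side for univariate distributions. The sketch below is a minimal illustration; the function name `closed_form_indices` and the example values are hypothetical. Because the rms of two positive numbers is never smaller than their arithmetic mean, the rms index can never exceed the average sd index.

```python
import math


def closed_form_indices(mu_a: float, sd_a: float,
                        mu_b: float, sd_b: float) -> tuple[float, float]:
    """Return (d'_a, d'_e) for two univariate distributions."""
    separation = abs(mu_a - mu_b)
    sigma_rms = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2.0)  # rms of the sds
    sigma_avg = (sd_a + sd_b) / 2.0                        # mean of the sds
    return separation / sigma_rms, separation / sigma_avg


# Hypothetical example: means 2 and 0, sds 3 and 1.
# sigma_rms = sqrt(5) and sigma_avg = 2, so d'_a = 2 / sqrt(5) and d'_e = 1.
d_a, d_e = closed_form_indices(2.0, 3.0, 0.0, 1.0)
print(d_a, d_e)
```

When the two sds are equal, both denominators coincide and both indices reduce to the ordinary $d'$.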

It has been shown that for two univariate normal distributions, $d'_a \le d'_e \le d'_b$, and for multivariate normal distributions, $d'_a \le d'_e$ still. Thus, $d'_a$ and $d'_e$ underestimate the maximum discriminability $d'_b$ of univariate normal distributions. $d'_a$ can underestimate $d'_b$ by a maximum of approximately 30%. At the limit of high discriminability for univariate normal distributions, $d'_e$ converges to $d'_b$. These results often hold true in higher dimensions, but not always. Simpson and Fitter promoted $d'_a$ as the best index, particularly for two-interval tasks, but Das and Geisler have shown that $d'_b$ is the optimal discriminability in all cases, and $d'_e$ is often a better closed-form approximation than $d'_a$, even for two-interval tasks. The approximate index $d'_{gm}$, which uses the geometric mean of the sd's, is less than $d'_b$ at small discriminability, but greater at large discriminability.

See also

* Receiver operating characteristic (ROC)

* Summary statistics

* Effect size

External links

* Interactive signal detection theory tutorial, including calculation of $d'$.