On Internet websites that invite users to post comments, content moderation is the process of detecting contributions that are irrelevant, obscene, illegal, harmful, or insulting, in contrast to useful or informative contributions. The purpose of content moderation is to remove problematic content, apply a warning label to it, or allow users to block and filter content themselves.

Various types of Internet sites permit user-generated content such as comments, including Internet forums, blogs, and news sites powered by scripts such as phpBB, a wiki, or PHP-Nuke. Depending on the site's content and intended audience, the site's administrators decide what kinds of user comments are appropriate, then delegate the responsibility of sifting through comments to lesser moderators. Most often, they will attempt to eliminate trolling, spamming, or flaming, although this varies widely from site to site.

Major platforms use a combination of algorithmic tools, user reporting and human review. Social media sites may also employ content moderators to manually inspect or remove content flagged for hate speech or other objectionable content. Other content issues include revenge porn, graphic content, child abuse material and propaganda. Some websites must also make their content hospitable to advertisements.

Facebook

Facebook has decided to create an oversight board that will decide what content remains and what content is removed. This idea was proposed in late 2018. The board, described as a "Supreme Court" at Facebook, is intended to replace ad hoc decision-making.

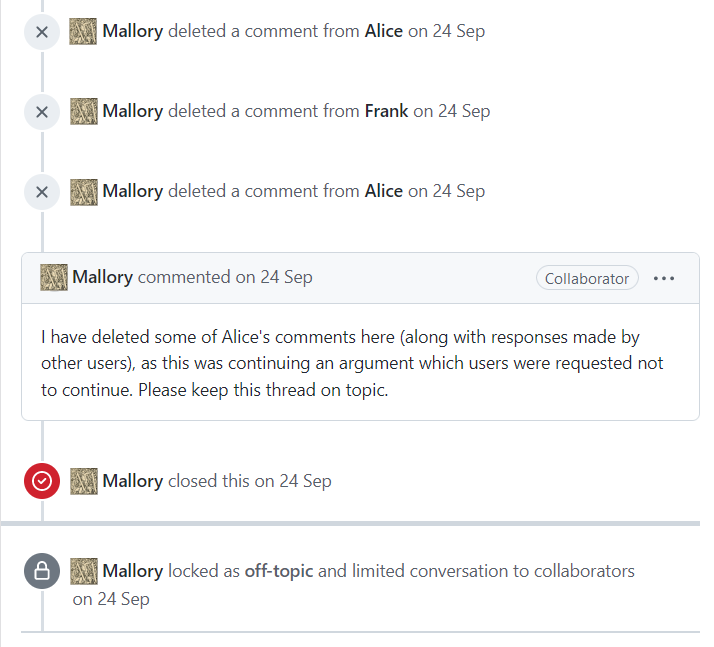

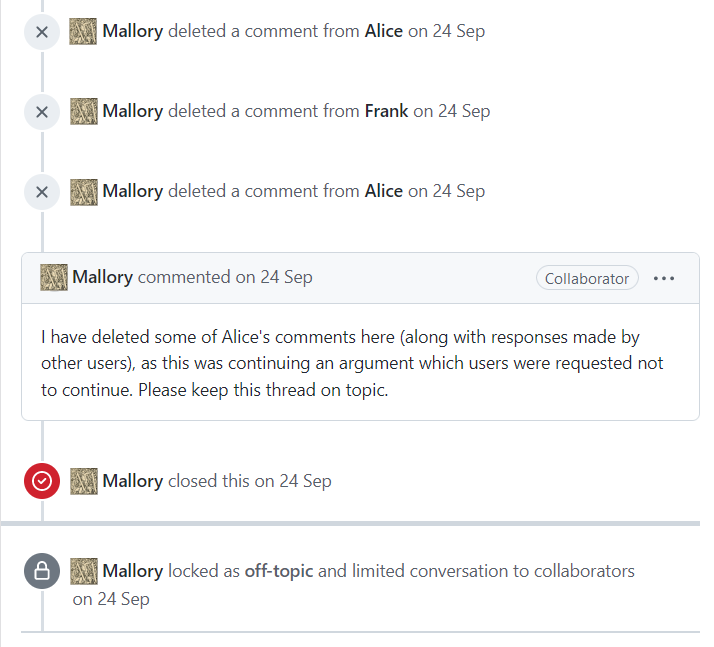

Supervisor moderation

Also known as unilateral moderation, this kind of moderation system is often seen on Internet forums. A group of people are chosen by the site's administrators (usually on a long-term basis) to act as delegates, enforcing the community rules on their behalf. These moderators are given special privileges to delete or edit others' contributions and/or exclude people based on their e-mail address or IP address, and generally attempt to remove negative contributions throughout the community. They act as an invisible backbone, underpinning the social web in a crucial but undervalued role.
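As a rough illustration of excluding people by e-mail address or IP address, the following Python sketch keeps a simple ban list. The `BanList` class and its method names are hypothetical and not taken from any particular forum software; real systems typically combine such checks with account-level controls.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class BanList:
    """Hypothetical ban list keyed on e-mail address and IP network."""
    emails: set = field(default_factory=set)
    networks: list = field(default_factory=list)

    def ban_email(self, email: str) -> None:
        # Normalise case and whitespace so trivial variations do not evade the ban.
        self.emails.add(email.strip().lower())

    def ban_ip(self, cidr: str) -> None:
        # Accepts a single address ("203.0.113.7") or a range ("203.0.113.0/24").
        self.networks.append(ipaddress.ip_network(cidr, strict=False))

    def is_banned(self, email: str, ip: str) -> bool:
        if email.strip().lower() in self.emails:
            return True
        addr = ipaddress.ip_address(ip)
        return any(addr in net for net in self.networks)


bans = BanList()
bans.ban_email("Spammer@Example.com")
bans.ban_ip("203.0.113.0/24")
print(bans.is_banned("spammer@example.com", "198.51.100.1"))
print(bans.is_banned("someone@example.org", "203.0.113.42"))
```

Banning a whole network rather than one address is a design choice with a cost: it can also exclude unrelated users who share that range.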

Facebook, for example, increased its number of content moderators from 4,500 to 7,500 in 2017 in response to legal and other controversies. In Germany, Facebook is responsible for removing hate speech within 24 hours of when it is posted.

Social media site Twitter has a suspension policy. Between August 2015 and December 2017 it suspended over 1.2 million accounts for terrorist content in an effort to reduce the number of followers and amount of content associated with the Islamic State.

Commercial content moderation (CCM)

Commercial Content Moderation is a term coined by Sarah T. Roberts to describe the practice of "monitoring and vetting user-generated content (UGC) for social media platforms of all types, in order to ensure that the content complies with legal and regulatory exigencies, site/community guidelines, user agreements, and that it falls within norms of taste and acceptability for that site and its cultural context." While at one time this work may have been done by volunteers within the online community, for commercial websites this is largely achieved through outsourcing the task to specialized companies, often in low-wage areas such as India and the Philippines. Outsourcing of content moderation jobs grew as a result of the social media boom. With the overwhelming growth of users and UGC, companies needed many more employees to moderate the content. In the late 1980s and early 1990s, tech companies began to outsource jobs to foreign countries that had an educated workforce but were willing to work for cheap.

Employees work by viewing, assessing and deleting disturbing content, and may suffer psychological damage. Secondary trauma may arise, with symptoms similar to PTSD. Some large companies such as Facebook offer psychological support and increasingly rely on artificial intelligence (AI) to sort out the most graphic and inappropriate content, but critics claim that this is insufficient.

Distributed moderation

Distributed moderation comes in two types: user moderation and spontaneous moderation.

User moderation

User moderation allows any user to moderate any other user's contributions. Billions of people are currently making decisions on what to share, forward or give visibility to on a daily basis. On a large site with a sufficiently large active population, this usually works well, since relatively small numbers of troublemakers are screened out by the votes of the rest of the community.

Strictly speaking, wikis such as Wikipedia are the ultimate in user moderation, but in the context of Internet forums, the definitive example of a user moderation system is Slashdot. There, each moderator is given a limited number of "mod points," each of which can be used to moderate an individual comment up or down by one point. Comments thus accumulate a score, which is additionally bounded to the range of -1 to 5 points. When viewing the site, a reader can choose a threshold from the same scale, and only posts meeting or exceeding that threshold will be displayed. This system is further refined by the concept of karma: the ratings assigned to a user's previous contributions can bias the initial rating of contributions he or she makes.

On sufficiently specialized websites, user moderation will often lead to groupthink, in which any opinion that is in disagreement with the website's established principles (no matter how sound or well-phrased) will very likely be "modded down" and censored, leading to the perpetuation of the groupthink mentality. This is often confused with trolling.

User moderation can also be characterized by reactive moderation. This type of moderation depends on users of a platform or site to report content that is inappropriate and breaches community standards. In this process, when users are faced with an image or video they deem unfit, they can click the report button. The complaint is filed and queued for moderators to look at. (Grimes-Viort, Blaise (December 7, 2010). "6 types of content moderation you need to know about". ''Social Media Today''.)
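The Slashdot-style scheme described in this section (a limited budget of mod points, a score bounded to -1 through 5, a reader-chosen threshold, and a karma bias on the starting score) can be sketched in Python as follows. This is a minimal illustration, not Slashdot's actual implementation: the default starting score of 1, the one-point karma bias in `initial_score`, and all class and function names are assumptions made for the example.

```python
from dataclasses import dataclass

MIN_SCORE, MAX_SCORE = -1, 5  # score range given in the text


@dataclass
class Comment:
    text: str
    score: int = 1  # assumed default starting score


@dataclass
class Moderator:
    name: str
    mod_points: int  # limited budget of moderation actions


def initial_score(author_karma: int) -> int:
    """Hypothetical karma rule: shift the starting score by one point."""
    if author_karma > 0:
        return 2
    if author_karma < 0:
        return 0
    return 1


def moderate(mod: Moderator, comment: Comment, delta: int) -> bool:
    """Spend one mod point to move a comment up or down by one point."""
    if delta not in (-1, 1):
        raise ValueError("a mod point moves a score by exactly one")
    if mod.mod_points <= 0:
        return False  # budget exhausted, nothing changes
    mod.mod_points -= 1
    # Clamp so the score never leaves the -1..5 range.
    comment.score = max(MIN_SCORE, min(MAX_SCORE, comment.score + delta))
    return True


def visible(comments: list, threshold: int) -> list:
    """Return only comments whose score meets or exceeds the threshold."""
    return [c for c in comments if c.score >= threshold]


alice = Moderator("alice", mod_points=2)
good = Comment("insightful reply", score=initial_score(author_karma=10))
spam = Comment("buy now", score=initial_score(author_karma=-3))
moderate(alice, good, +1)
moderate(alice, spam, -1)
print([c.text for c in visible([good, spam], threshold=1)])
```

In this sketch a mod point is spent even when the score is already at a bound; a real system might refuse the action instead.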

Spontaneous moderation

Spontaneous moderation is what occurs when no official moderation scheme exists. Without any ability to moderate comments, users will spontaneously moderate their peers through posting their own comments about others' comments. Because spontaneous moderation exists, no system that allows users to submit their own content can ever go completely without any kind of moderation.

See also

* Like button

* Meta-moderation system

* Recommender system

* Trust metric

* ''We Had to Remove This Post''

External links

* Slashdot – A definitive example of user moderation

* Fundamental Basics of Content Moderation

* Cliff Lampe and Paul Resnick. Slash (dot) and burn: distributed moderation in a large online conversation space. Proceedings of the SIGCHI conference on Human factors in computing systems, Vienna, Austria 2005, 543–550.

* Hamed Alhoori, Omar Alvarez, Richard Furuta, Miguel Muñiz, Eduardo Urbina. Supporting the Creation of Scholarly Bibliographies by Communities through Online Reputation Based Social Collaboration. ECDL 2009: 180–191.