Overview

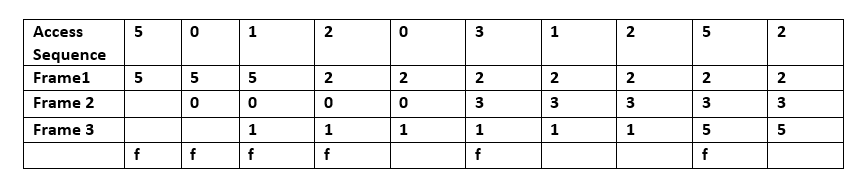

The average memory reference time is

$$T = m \times T_m + T_h + E$$

where $m$ is the miss ratio, equal to 1 − (hit ratio); $T_m$ is the time to make a main memory access when there is a miss (or, with a multi-level cache, the average memory reference time for the next-lower cache); $T_h$ is the latency, the time to reference the cache (which should be the same for hits and misses); and $E$ covers various secondary effects, such as queuing effects in multiprocessor systems.

There are two primary figures of merit of a cache: the latency and the hit ratio. There are also a number of secondary factors affecting cache performance (Alan Jay Smith. "Design of CPU Cache Memories". Proc. IEEE TENCON, 1987).

The "hit ratio" of a cache describes how often a searched-for item is actually found in the cache. More efficient replacement policies keep track of more usage information in order to improve the hit rate for a given cache size.

The "latency" of a cache describes how long after requesting a desired item the cache can return that item (when there is a hit). Faster replacement strategies typically keep track of less usage information (or, in the case of a direct-mapped cache, no information) to reduce the time required to update that information. Each replacement strategy is a compromise between hit rate and latency.

Hit rate measurements are typically performed on benchmark applications, and the actual hit ratio varies widely from one application to another. In particular, video and audio streaming applications often have a hit ratio close to zero, because each bit of data in the stream is read once for the first time (a compulsory miss), used, and then never read or written again. Even worse, many cache algorithms (in particular, LRU) allow this streaming data to fill the cache, pushing out information that will be used again soon.
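The formula can be evaluated directly. The sketch below is a minimal illustration, assuming all times are given in the same unit (cycles here); the function name and the cache figures are hypothetical. It also shows the multi-level case, where the next-lower cache's average reference time plays the role of $T_m$.

```python
def average_memory_reference_time(miss_ratio, miss_time, latency, secondary=0.0):
    """Return T = m * T_m + T_h + E; all times must share one unit (e.g. cycles)."""
    if not 0.0 <= miss_ratio <= 1.0:
        raise ValueError("miss_ratio must lie in [0, 1]")
    return miss_ratio * miss_time + latency + secondary


# Hypothetical two-level hierarchy: L2 misses go to main memory (200 cycles),
# and the L2 average reference time is then used as T_m for the L1 cache.
t_l2 = average_memory_reference_time(miss_ratio=0.2, miss_time=200, latency=10)
t_l1 = average_memory_reference_time(miss_ratio=0.05, miss_time=t_l2, latency=1)
print(t_l2, t_l1)
```

With these made-up numbers the L2 averages about 50 cycles and the L1 about 3.5 cycles, which shows how a low first-level miss ratio hides most of the lower levels' cost.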

Policies

Bélády's algorithm

The most efficient caching algorithm would always discard the information that will not be needed for the longest time in the future. This optimal result is referred to as Bélády's optimal algorithm, simply the optimal replacement policy, or the clairvoyant algorithm. Since it is generally impossible to predict how far in the future information will be needed, this is generally not implementable in practice. The practical minimum can be calculated only after experimentation, and it can then be used to judge the effectiveness of the cache algorithm actually chosen. At the moment when a …
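Because the optimal policy needs the whole future trace, it can only be computed offline, which is exactly how the practical minimum is obtained after experimentation. A minimal sketch, assuming the trace is a Python list (or string) and the cache holds at least one item; `belady_misses` is a hypothetical name:

```python
def belady_misses(accesses, capacity):
    """Count misses under Belady's optimal (clairvoyant) policy.

    Needs the whole future trace, so it only works offline; capacity >= 1.
    """
    cache = set()
    misses = 0
    for i, key in enumerate(accesses):
        if key in cache:
            continue
        misses += 1
        if len(cache) >= capacity:
            def next_use(k):
                # Index of the next reference to k after position i, or infinity.
                try:
                    return accesses.index(k, i + 1)
                except ValueError:
                    return float("inf")
            # Evict the line whose next use lies farthest in the future.
            cache.remove(max(cache, key=next_use))
        cache.add(key)
    return misses


trace = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print(belady_misses(trace, 3))  # 9
```

The linear scan for the next use keeps the sketch short. A simulator for long traces would precompute next-use positions instead.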

Random replacement (RR)

Randomly selects a candidate item and discards it to make space when necessary. This algorithm does not require keeping any information about the access history. Because of its simplicity, it has been used in …

Simple queue-based policies

First in first out (FIFO)

With this algorithm, the cache behaves in the same way as a FIFO queue: it evicts blocks in the order they were added, without any regard to how often or how many times they were accessed before.

Last in first out (LIFO) or First in last out (FILO)

With this algorithm, the cache behaves like a stack, the opposite of a FIFO queue: it evicts the most recently added block first, without any regard to how often or how many times it was accessed before.

Simple recency-based policies

Least recently used (LRU)

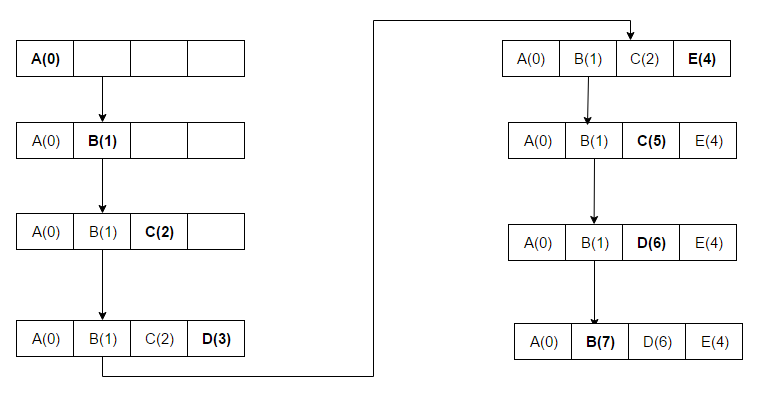

Discards the least recently used items first. This algorithm requires keeping track of what was used when, which is expensive if one wants to make sure the algorithm always discards the least recently used item. General implementations of this technique require keeping "age bits" for cache lines and tracking the least recently used cache line based on those age bits. In such an implementation, every time a cache line is used, the age of all other cache lines changes. LRU is actually a family of caching algorithms with members including 2Q by Theodore Johnson and Dennis Shasha, and LRU/K by Pat O'Neil, Betty O'Neil and Gerhard Weikum.

The access sequence for the example in the figure above is A B C D E D F. Once A, B, C and D have been installed in the blocks with sequence numbers (incremented by 1 for each new access), E is accessed; it is a miss and must be installed in one of the blocks. According to the LRU algorithm, since A has the lowest rank (A(0)), E replaces A.

In the second-to-last step, D is accessed and therefore its sequence number is updated.

Finally, F is accessed, taking the place of B, which had the lowest rank (B(1)) at that moment.
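The walkthrough can be replayed with a small LRU sketch. Instead of explicit sequence numbers, this assumes an `OrderedDict` whose order runs from least to most recently used. The order encodes the same ranking, so the entry at the front is the one with the lowest sequence number. `LRUCache` is a hypothetical name.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()  # key -> None, least recently used first

    def access(self, key):
        """Return True on a hit, False on a miss (which installs key)."""
        if key in self.lines:
            self.lines.move_to_end(key)  # now the most recently used
            return True
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used
        self.lines[key] = None
        return False


cache = LRUCache(4)
hits = [cache.access(k) for k in "ABCDEDF"]
print(hits)               # [False, False, False, False, False, True, False]
print(list(cache.lines))  # ['C', 'E', 'D', 'F']
```

As in the figure, E replaces A, the hit on D refreshes its position, and F replaces B.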

Time aware least recently used (TLRU)

The Time aware Least Recently Used (TLRU) algorithm is a variant of LRU designed for situations where the contents stored in the cache have a valid lifetime. The algorithm is suitable for network cache applications, such as Information-centric networking (ICN), …

Most recently used (MRU)

In contrast to Least Recently Used (LRU), MRU discards the most recently used items first. In findings presented at the 11th …

Here, A, B, C and D are placed in the cache because there is still space available. At the 5th access (E), the block that held D is replaced with E, since that block was used most recently. C is then accessed again, and at the next access, to D, C is replaced because it was the block accessed just before D, and so on.
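MRU only needs to remember which item was touched last. The following minimal sketch assumes a capacity of at least 1 and uses the access sequence A B C D E C D from the description. `MRUCache` is a hypothetical name.

```python
class MRUCache:
    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = set()
        self.last = None  # the most recently used key

    def access(self, key):
        """Return True on a hit, False on a miss (which installs key)."""
        hit = key in self.lines
        if not hit:
            if len(self.lines) >= self.capacity:
                self.lines.remove(self.last)  # evict the most recently used line
            self.lines.add(key)
        self.last = key
        return hit


cache = MRUCache(4)
for key in "ABCDECD":
    cache.access(key)
print(sorted(cache.lines))  # ['A', 'B', 'D', 'E']
```

E evicts D, the hit on C makes C the most recently used item, and the next miss (D) therefore evicts C.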

Segmented LRU (SLRU)

An SLRU cache is divided into two segments, a probationary segment and a protected segment. Lines in each segment are ordered from the most to the least recently accessed. Data from misses is added to the cache at the most recently accessed end of the probationary segment. Hits are removed from wherever they currently reside and added to the most recently accessed end of the protected segment. Lines in the protected segment have thus been accessed at least twice. The protected segment is finite, so migrating a line from the probationary segment to the protected segment may force the LRU line in the protected segment to migrate to the most recently used (MRU) end of the probationary segment, giving this line another chance to be accessed before being replaced. The size limit on the protected segment is an SLRU parameter that varies according to the I/O workload patterns. Whenever data must be discarded from the cache, lines are taken from the LRU end of the probationary segment.

LRU Approximations

LRU can be prohibitively expensive in caches with higher associativity. Practical hardware usually employs an approximation to achieve similar performance at a much lower hardware cost.

Pseudo-LRU (PLRU)

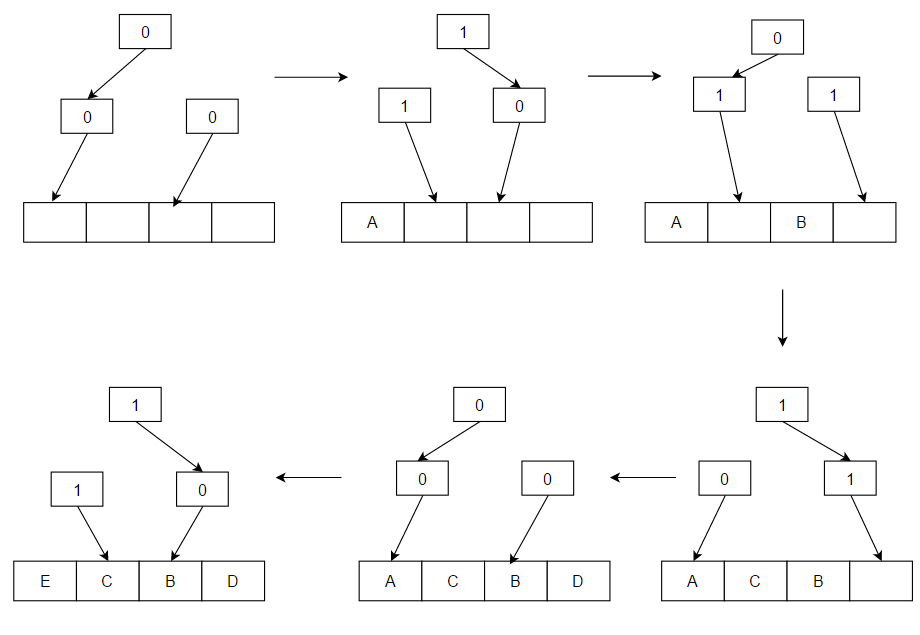

The principle is simple to understand if we look only at the arrow pointers. When there is an access to a value, say 'A', and it cannot be found in the cache, it is loaded from memory and placed in the block where the arrows currently point, following them from top to bottom. After that block has been placed, the same arrows are flipped so they point the opposite way. In the example above, 'A' is placed, followed by 'B', 'C' and 'D'. Then, as the cache became full, 'E' replaced 'A', because that was where the arrows were pointing at that time, and the arrows that led to 'A' were flipped to point in the opposite direction. The arrows then led to 'B', which will be the block replaced on the next cache miss.
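The arrow scheme can be modelled for a single 4-way set. This minimal sketch assumes a binary tree of three direction bits, where 0 means "points left". On every fill, the bits on the path are set to point away from the way just filled. The sketch applies the same update on hits, as tree-PLRU usually does, although the description above only covers misses. `TreePLRU4` and its methods are hypothetical names.

```python
class TreePLRU4:
    """One 4-way set managed by tree pseudo-LRU. bits[0] is the root arrow,
    bits[1] chooses between ways 0/1 and bits[2] between ways 2/3; 0 = left."""

    def __init__(self):
        self.bits = [0, 0, 0]
        self.ways = [None] * 4

    def victim(self):
        # Follow the arrows from the root down to a leaf (way).
        if self.bits[0] == 0:
            return 0 if self.bits[1] == 0 else 1
        return 2 if self.bits[2] == 0 else 3

    def touch(self, way):
        # Flip the arrows on the path to `way` so they point away from it.
        if way < 2:
            self.bits[0] = 1
            self.bits[1] = 1 if way == 0 else 0
        else:
            self.bits[0] = 0
            self.bits[2] = 1 if way == 2 else 0

    def access(self, key):
        """Return True on a hit; on a miss, fill the way the arrows point to."""
        if key in self.ways:
            self.touch(self.ways.index(key))
            return True
        way = self.victim()
        self.ways[way] = key
        self.touch(way)
        return False


s = TreePLRU4()
for key in "ABCDE":
    s.access(key)
print(s.ways, s.ways[s.victim()])  # ['E', 'C', 'B', 'D'] B
```

Replaying A to E matches the walkthrough: E takes A's block, and the arrows then lead to B. Only three bits are stored per set, compared with a full recency ordering for true LRU.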

CLOCK-Pro

The LRU algorithm cannot be directly implemented in the critical path of computer systems, such as …

Simple frequency-based policies

Least-frequently used (LFU)

Counts how often an item is needed; those used least often are discarded first. This works much like LRU, except that instead of storing how recently a block was accessed, the cache stores how many times it was accessed, and while running an access sequence it replaces the block that was used the fewest times. For example, if A was used (accessed) 5 times, B 3 times, and C and D 10 times each, B is replaced.

Least frequent recently used (LFRU)

The Least Frequent Recently Used (LFRU) cache replacement scheme combines the benefits of LFU and LRU schemes. LFRU is suitable for 'in network' cache applications, such as Information-centric networking (ICN), …

LFU with dynamic aging (LFUDA)

LFU with Dynamic Aging (LFUDA) is a variant that uses dynamic aging to accommodate shifts in the set of popular objects. It adds a cache age factor to the reference count when a new object is added to the cache or when an existing object is re-referenced. LFUDA increments the cache age when evicting blocks by setting it to the evicted object's key value, so the cache age is always less than or equal to the minimum key value in the cache. Without aging, an object that was frequently accessed in the past but has since become unpopular would remain in the cache for a long time, preventing new or less popular objects from replacing it. Dynamic aging brings down the effective count of such objects, making them eligible for replacement. The advantage of LFUDA is that it reduces the …

RRIP-style policies

RRIP-style policies form the basis for many other cache replacement policies, including Hawkeye, which won the CRC2 championship and was considered the most advanced cache replacement policy of its time.

Re-Reference Interval Prediction (RRIP)

RRIP is a very flexible policy proposed by …

Static RRIP (SRRIP)

SRRIP inserts lines with an RRPV value of maxRRIP. This means that a newly inserted line will be the most likely to be evicted on a cache miss.

Bimodal RRIP (BRRIP)

SRRIP performs well in the normal case, but suffers when the working set is much larger than the cache size and causes …

Dynamic RRIP (DRRIP)

SRRIP performs best when the working set is smaller than the cache size, while BRRIP performs best when the working set is larger than the cache size. DRRIP aims for the best of both worlds by using set dueling to choose between SRRIP and BRRIP. It dedicates a few sets (typically 32) to SRRIP only and another few to BRRIP only, and a policy counter monitors which group of sets performs better to determine the policy used by the rest of the cache.

Cache replacement policies approximating Bélády's algorithm

Bélády's algorithm is the optimal cache replacement policy, but it requires knowledge of the future to evict the lines that will be reused farthest in the future. Multiple replacement policies have been proposed that attempt to predict future reuse distances from past access patterns, allowing them to approximate the optimal replacement policy. Some of the best-performing cache replacement policies are ones that attempt to imitate Bélády's algorithm.

Hawkeye

Hawkeye attempts to emulate Bélády's algorithm by using the past accesses of a PC (program counter) to predict whether the accesses it produces are cache friendly (the data gets used later on) or cache averse (the data does not get used later on). It does this by sampling a number of cache sets (which are not aligned), keeping a history of length …, and emulating Bélády's algorithm on these accesses. This lets the policy work out which lines should have been cached and which should not, and hence predict whether an instruction is cache friendly or cache averse. This prediction is then fed into an RRIP backend: accesses from cache-friendly instructions get a lower RRPV value (likely to be evicted later), and accesses from cache-averse instructions get a higher RRPV value (likely to be evicted sooner). The RRIP backend makes the actual eviction decisions; the sampled cache and OPT generator are only used to set the initial RRPV value of inserted cache lines. Hawkeye won the CRC2 cache championship in 2017, beating all other cache replacement policies at the time. Harmony is an extension of Hawkeye that improves prefetching performance.

Mockingjay

Mockingjay tries to improve upon Hawkeye in multiple ways. First, it drops the binary prediction, allowing it to make more fine-grained decisions about which cache lines to evict. Second, it defers the decision about which cache line to evict until more information is available. It achieves this by keeping a sampled cache of unique accesses, the PCs that produced them and their timestamps. When a line in the sampled cache is accessed again, the time difference is sent to the Reuse Distance Predictor (RDP), which uses temporal difference learning: the new RDP value is incremented or decremented by a small number to compensate for outliers. The number is calculated as …, except when the value has not been initialized, in which case the observed reuse distance is inserted directly. If the sampled cache is full and a line must be thrown away, the RDP is trained that the PC that last accessed it produces streaming accesses. On an access or insertion, the Estimated Time of Reuse (ETR) for the line is updated to reflect the predicted reuse distance. Every few accesses to the set, all ETR counters of the set are decremented (they can become negative if a line is not accessed by its estimated time of reuse). On a cache miss, the line with the highest absolute ETR value is evicted: the line estimated to be reused farthest in the future, or the line whose estimated reuse lies farthest in the past without it having been reused. Mockingjay achieves results that are very close to the optimal Bélády's algorithm, typically within only a few percent performance difference.

Cache replacement policies using machine learning

Multiple cache replacement policies have attempted to use …

Other cache replacement policies

Low inter-reference recency set (LIRS)

LIRS is a page replacement algorithm with improved performance over LRU and many other newer replacement algorithms. It achieves this by using reuse distance as a metric for dynamically ranking accessed pages to make a replacement decision. LIRS effectively addresses the limits of LRU by using recency to evaluate Inter-Reference Recency (IRR) for making a replacement decision.

In the above figure, "x" represents that a block is accessed at time t. Suppose block A1 is accessed at time 1. Its recency becomes 0, since it is the first accessed block, and its IRR will be 1, since it is predicted that A1 will be accessed again at time 3. At time 2, A4 is accessed, so the recency becomes 0 for A4 and 1 for A1, because A4 is now the most recently accessed object, and the IRR becomes 4, and so on. At time 10, the LIRS algorithm will have two sets: LIR set = {…} and HIR set = {…}. If there is an access to A4 at time 10, a miss occurs, and the LIRS algorithm evicts A5 instead of A2 because A5 has the largest recency.
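The two quantities that LIRS ranks blocks by can be computed from a trace. This sketch assumes the definitions commonly used for LIRS. Recency is the number of other distinct blocks accessed since a block's last reference. IRR is the number of other distinct blocks accessed between its last two consecutive references. The counts are not meant to reproduce the figure's numbering. The functions and the trace are hypothetical. The sketch computes only the metrics and does not implement the LIR/HIR set management.

```python
def irr_per_access(trace):
    """IRR for each access: distinct other blocks seen since the previous access
    to the same block, or None on a block's first access (IRR undefined)."""
    last_seen = {}
    irrs = []
    for t, block in enumerate(trace):
        if block in last_seen:
            # The block itself cannot occur between two consecutive references.
            irrs.append(len(set(trace[last_seen[block] + 1:t])))
        else:
            irrs.append(None)
        last_seen[block] = t
    return irrs


def recency(trace, block):
    """Distinct other blocks accessed after the most recent reference to block."""
    last = len(trace) - 1 - trace[::-1].index(block)
    return len(set(trace[last + 1:]))


trace = ["A1", "A4", "A1", "A2", "A4"]
print(irr_per_access(trace))  # [None, None, 1, None, 2]
print(recency(trace, "A1"))   # 2
```

Counting distinct blocks instead of elapsed time is what lets LIRS tell a block that is reused regularly (small IRR) from one that was touched once in a long scan.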

Adaptive replacement cache (ARC)

Constantly balances between LRU and LFU to improve the combined result (ARC: A Self-Tuning, Low Overhead Replacement Cache. FAST, 2003). ARC improves on SLRU by using information about recently evicted cache items to dynamically adjust the sizes of the protected and probationary segments, making the best use of the available cache space. The adaptive replacement algorithm is explained with the example.
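A compact sketch of ARC follows, written from the case analysis in Megiddo and Modha's FAST 2003 paper as we understand it. T1 and T2 hold cached keys seen once and at least twice. B1 and B2 are "ghost" lists of keys recently evicted from them, and p is the adaptive target size of T1. Two details are choices made for this sketch rather than the paper's exact formulation: integer division when adapting p, and the defensive `not self.t2` guard in `_replace`. The class name is hypothetical.

```python
from collections import OrderedDict


class ARC:
    """T1/T2: cached keys (seen once / at least twice). B1/B2: ghost keys
    recently evicted from T1/T2. p: target size of T1. LRU entry first."""

    def __init__(self, c):
        self.c, self.p = c, 0
        self.t1, self.t2 = OrderedDict(), OrderedDict()
        self.b1, self.b2 = OrderedDict(), OrderedDict()

    def _replace(self, hit_in_b2):
        # Demote T1's LRU key to B1 if T1 is over its target; otherwise T2's to B2.
        # ("not self.t2" is a defensive guard added for this sketch.)
        if self.t1 and (len(self.t1) > self.p
                        or (hit_in_b2 and len(self.t1) == self.p)
                        or not self.t2):
            key, _ = self.t1.popitem(last=False)
            self.b1[key] = None
        else:
            key, _ = self.t2.popitem(last=False)
            self.b2[key] = None

    def access(self, x):
        """Return True on a cache hit, False otherwise."""
        if x in self.t1 or x in self.t2:      # hit: move to MRU end of T2
            self.t1.pop(x, None)
            self.t2.pop(x, None)
            self.t2[x] = None
            return True
        if x in self.b1:                      # ghost hit in B1: favour recency
            self.p = min(self.c, self.p + max(len(self.b2) // len(self.b1), 1))
            self._replace(False)
            del self.b1[x]
            self.t2[x] = None
            return False
        if x in self.b2:                      # ghost hit in B2: favour frequency
            self.p = max(0, self.p - max(len(self.b1) // len(self.b2), 1))
            self._replace(True)
            del self.b2[x]
            self.t2[x] = None
            return False
        # Complete miss: keep |T1|+|B1| <= c and the whole directory <= 2c.
        l1 = len(self.t1) + len(self.b1)
        total = l1 + len(self.t2) + len(self.b2)
        if l1 == self.c:
            if len(self.t1) < self.c:
                self.b1.popitem(last=False)
                self._replace(False)
            else:
                self.t1.popitem(last=False)
        elif total >= self.c:
            if total == 2 * self.c:
                self.b2.popitem(last=False)
            self._replace(False)
        self.t1[x] = None
        return False


arc = ARC(2)
print([arc.access(k) for k in "aab"])  # [False, True, False]
```

Ghost hits are the feedback signal here. A hit in B1 means T1 was too small, so p grows. A hit in B2 means T2 deserved more room, so p shrinks.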

AdaptiveClimb (AC)

Uses recent hits and misses to adjust the jump: in Climb, any hit moves the item one slot toward the top, while in LRU a hit moves the item straight to the top. AdaptiveClimb thus benefits from the optimality of Climb when the program stays within a fixed scope, and from LRU's rapid adaptation to a new scope. (Danny Berend, …)

Clock with adaptive replacement (CAR)

Combines the advantages of Adaptive Replacement Cache (ARC) and …

Multi queue (MQ)

The multi queue algorithm, or MQ, was developed to improve the performance of second-level buffer caches, e.g. a server buffer cache. It was introduced in a paper by Zhou, Philbin, and Li (Yuanyuan Zhou, James Philbin, and Kai Li. The Multi-Queue Replacement Algorithm for Second Level Buffer Caches. USENIX, 2002).

The MQ cache contains $m$ LRU queues: $Q_0, Q_1, \ldots, Q_{m-1}$. Here, the value of $m$ represents a hierarchy based on the lifetime of all blocks in that particular queue. For example, if $j > i$, blocks in $Q_j$ will have a longer lifetime than those in $Q_i$. In addition to these, there is a history buffer Qout, a queue that maintains a list of all the block identifiers along with their access frequencies. When Qout is full, the oldest identifier is evicted. Blocks stay in the LRU queues for a given lifetime, which the MQ algorithm defines dynamically as the maximum temporal distance between two accesses to the same file or the number of cache blocks, whichever is larger. If a block has not been referenced within its lifetime, it is demoted from $Q_i$ to $Q_{i-1}$, or evicted from the cache if it is in $Q_0$. Each queue also has a maximum access count: if a block in queue $Q_i$ is accessed more than $2^i$ times, it is promoted to $Q_{i+1}$ until it is accessed more than $2^{i+1}$ times or its lifetime expires. Within a given queue, blocks are ranked by recency of access, according to LRU (Eduardo Pinheiro, Ricardo Bianchini, Energy conservation techniques for disk array-based servers, Proceedings of the 18th annual international conference on Supercomputing, June 26-July 01, 2004, Saint-Malo, France).

We can see from Fig. how the $m$ LRU queues are placed in the cache, and how Qout stores the block identifiers and their corresponding access frequencies. 'a' was placed in $Q_0$ because it was accessed only once recently, and we can check in Qout how 'b' and 'c' were placed in $Q_1$ and $Q_2$ respectively, since their access frequencies are 2 and 4. The queue in which a block is placed depends on its access frequency $f$ as $\log_2(f)$. When the cache is full, the first block to be evicted will be the head of $Q_0$, in this case 'a'. If 'a' is accessed one more time, it will move to $Q_1$ below 'b'.
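The placement and eviction rules in this example can be sketched in a deliberately simplified form. A block with access count $f$ goes to queue $\lfloor \log_2 f \rfloor$, capped at $m - 1$. Evictions take the head of the lowest non-empty queue, and Qout remembers the counts of evicted blocks. Lifetime-based demotion is omitted, and Qout here holds only evicted identifiers. `SimpleMQ`, `queue_index`, `capacity`, `m` and `history` are hypothetical names, so this is not the full algorithm from the paper.

```python
from collections import OrderedDict


def queue_index(freq, m):
    """Queue for a block accessed freq times: floor(log2(freq)), capped at m - 1."""
    return min(freq.bit_length() - 1, m - 1)


class SimpleMQ:
    """Simplified MQ: m LRU queues plus a Qout history of evicted blocks'
    access counts. Lifetime-based demotion is deliberately left out."""

    def __init__(self, capacity, m=4, history=16):
        self.capacity, self.m, self.history = capacity, m, history
        self.queues = [OrderedDict() for _ in range(m)]  # key -> count, LRU first
        self.qout = OrderedDict()                        # key -> count, oldest first

    def _size(self):
        return sum(len(queue) for queue in self.queues)

    def _evict(self):
        # Victim: LRU end (head) of the lowest non-empty queue, i.e. Q0 first.
        for queue in self.queues:
            if queue:
                victim, count = queue.popitem(last=False)
                self.qout[victim] = count
                if len(self.qout) > self.history:
                    self.qout.popitem(last=False)  # drop the oldest identifier
                return

    def access(self, key):
        """Return True on a hit, False on a miss."""
        for queue in self.queues:
            if key in queue:
                count = queue.pop(key) + 1
                self.queues[queue_index(count, self.m)][key] = count
                return True
        count = self.qout.pop(key, 0) + 1  # resume the remembered count, if any
        if self._size() >= self.capacity:
            self._evict()
        self.queues[queue_index(count, self.m)][key] = count
        return False


mq = SimpleMQ(capacity=3)
for key in ["b", "b", "c", "c", "c", "c", "a"]:
    mq.access(key)
print([list(q) for q in mq.queues[:3]])  # [['a'], ['b'], ['c']]
mq.access("d")  # cache full: 'a', the head of Q0, is evicted into Qout
mq.access("a")  # 'a' returns with count 2 and joins Q1 behind 'b'
print(list(mq.queues[1]))  # ['b', 'a']
```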

Pannier: Container-based caching algorithm for compound objects

Pannier (Cheng Li, Philip Shilane, Fred Douglis and Grant Wallace. Pannier: A Container-based Flash Cache for Compound Objects. ACM/IFIP/USENIX Middleware, 2015) is a container-based flash caching mechanism that identifies divergent (heterogeneous) containers, in which the blocks held have highly varying access patterns. Pannier uses a priority-queue-based survival queue structure to rank the containers based on their survival time, which is proportional to the live data in the container. Pannier is built on Segmented LRU (S2LRU), which segregates hot and cold data. Pannier also uses a multi-step feedback controller to throttle flash writes to ensure flash lifespan.
