|

X87

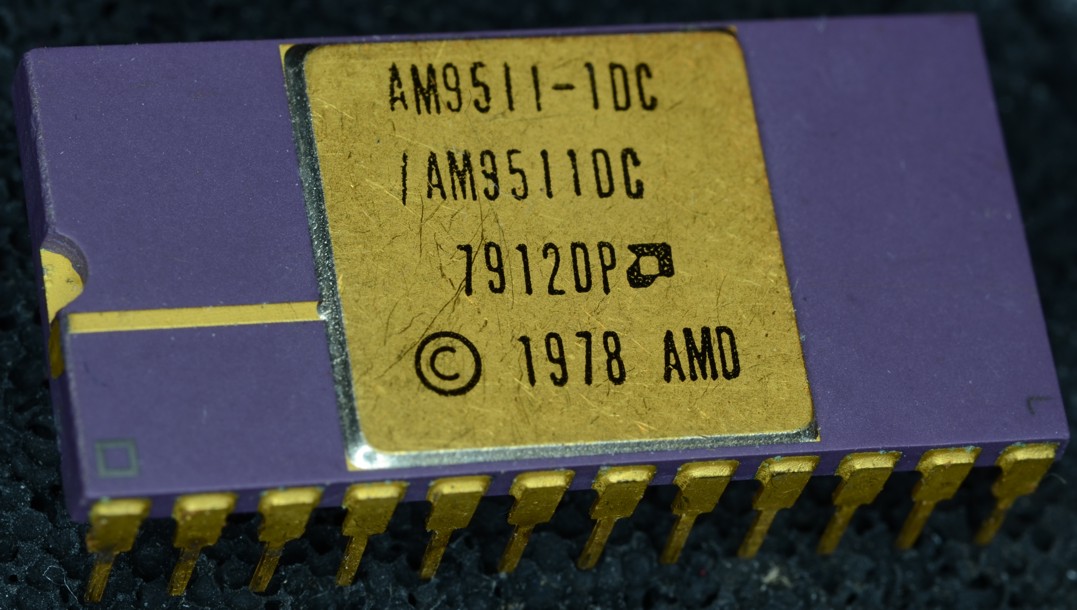

x87 is a floating-point-related subset of the x86 architecture instruction set. It originated as an extension of the 8086 instruction set in the form of optional floating-point coprocessors that worked in tandem with corresponding x86 CPUs. These microchips had names ending in "87". This was also known as the NPX (''Numeric Processor eXtension''). Like other extensions to the basic instruction set, x87 instructions are not strictly needed to construct working programs, but provide hardware and microcode implementations of common numerical tasks, allowing these tasks to be performed much faster than corresponding machine code routines can. The x87 instruction set includes instructions for basic floating-point operations such as addition, subtraction and comparison, but also for more complex numerical operations, such as the computation of the tangent function and its inverse, for example. Most x86 processors since the Intel 80486 have had these x87 instructions implemented in the m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

X86 Architecture

x86 (also known as 80x86 or the 8086 family) is a family of complex instruction set computer (CISC) instruction set architectures initially developed by Intel based on the Intel 8086 microprocessor and its 8088 variant. The 8086 was introduced in 1978 as a fully 16-bit extension of Intel's 8-bit 8080 microprocessor, with memory segmentation as a solution for addressing more memory than can be covered by a plain 16-bit address. The term "x86" came into being because the names of several successors to Intel's 8086 processor end in "86", including the 80186, 80286, 80386 and 80486 processors. The term is not synonymous with IBM PC compatibility, as this implies a multitude of other computer hardware. Embedded systems and general-purpose computers used x86 chips before the PC-compatible market started, some of them before the IBM PC (1981) debut. , most desktop and laptop computers sold are based on the x86 architecture family, while mobile categories such ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

SSE2

SSE2 (Streaming SIMD Extensions 2) is one of the Intel SIMD (Single Instruction, Multiple Data) processor supplementary instruction sets first introduced by Intel with the initial version of the Pentium 4 in 2000. It extends the earlier SSE instruction set, and is intended to fully replace MMX. Intel extended SSE2 to create SSE3 in 2004. SSE2 added 144 new instructions to SSE, which has 70 instructions. Competing chip-maker AMD added support for SSE2 with the introduction of their Opteron and Athlon 64 ranges of AMD64 64-bit CPUs in 2003. Features Most of the SSE2 instructions implement the integer vector operations also found in MMX. Instead of the MMX registers they use the XMM registers, which are wider and allow for significant performance improvements in specialized applications. Another advantage of replacing MMX with SSE2 is avoiding the mode switching penalty for issuing x87 instructions present in MMX because it is sharing register space with the x87 FPU. The SSE2 als ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Extended Precision

Extended precision refers to floating-point number formats that provide greater precision than the basic floating-point formats. Extended precision formats support a basic format by minimizing roundoff and overflow errors in intermediate values of expressions on the base format. In contrast to ''extended precision'', arbitrary-precision arithmetic refers to implementations of much larger numeric types (with a storage count that usually is not a power of two) using special software (or, rarely, hardware). Extended precision implementations There is a long history of extended floating-point formats reaching back nearly to the middle of the last century. Various manufacturers have used different formats for extended precision for different machines. In many cases the format of the extended precision is not quite the same as a scale-up of the ordinary single- and double-precision formats it is meant to extend. In a few cases the implementation was merely a software-based change in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Floating-point

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can be represented as a base-ten floating-point number: 12.345 = \underbrace_\text \times \underbrace_\text\!\!\!\!\!\!^ In practice, most floating-point systems use base two, though base ten ( decimal floating point) is also common. The term ''floating point'' refers to the fact that the number's radix point can "float" anywhere to the left, right, or between the significant digits of the number. This position is indicated by the exponent, so floating point can be considered a form of scientific notation. A floating-point system can be used to represent, with a fixed number of digits, numbers of very different orders of magnitude — such as the number of meters between galaxies or between protons in an atom. For this reason, floating-p ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Stack (data Structure)

In computer science, a stack is an abstract data type that serves as a collection of elements, with two main operations: * Push, which adds an element to the collection, and * Pop, which removes the most recently added element that was not yet removed. Additionally, a peek operation can, without modifying the stack, return the value of the last element added. Calling this structure a ''stack'' is by analogy to a set of physical items stacked one atop another, such as a stack of plates. The order in which an element added to or removed from a stack is described as last in, first out, referred to by the acronym LIFO. As with a stack of physical objects, this structure makes it easy to take an item off the top of the stack, but accessing a datum deeper in the stack may require taking off multiple other items first. Considered as a linear data structure, or more abstractly a sequential collection, the push and pop operations occur only at one end of the structure, referre ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

IEEE 754-1985

IEEE 754-1985 was an industry standard for representing floating-point numbers in computers, officially adopted in 1985 and superseded in 2008 by IEEE 754-2008, and then again in 2019 by minor revision IEEE 754-2019. During its 23 years, it was the most widely used format for floating-point computation. It was implemented in software, in the form of floating-point libraries, and in hardware, in the instructions of many CPUs and FPUs. The first integrated circuit to implement the draft of what was to become IEEE 754-1985 was the Intel 8087. IEEE 754-1985 represents numbers in binary, providing definitions for four levels of precision, of which the two most commonly used are: The standard also defines representations for positive and negative infinity, a " negative zero", five exceptions to handle invalid results like division by zero, special values called NaNs for representing those exceptions, denormal numbers to represent numbers smaller than shown above, and fou ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Floating Point

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can be represented as a base-ten floating-point number: 12.345 = \underbrace_\text \times \underbrace_\text\!\!\!\!\!\!^ In practice, most floating-point systems use base two, though base ten ( decimal floating point) is also common. The term ''floating point'' refers to the fact that the number's radix point can "float" anywhere to the left, right, or between the significant digits of the number. This position is indicated by the exponent, so floating point can be considered a form of scientific notation. A floating-point system can be used to represent, with a fixed number of digits, numbers of very different orders of magnitude — such as the number of meters between galaxies or between protons in an atom. For this reason, floating-poi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Intel P5 (microarchitecture)

The Pentium (also referred to as P5, its microarchitecture, or i586) is a fifth generation, 32-bit x86 microprocessor that was introduced by Intel on March 22, 1993, as the very first CPU in the Pentium brand. It was instruction set compatible with the 80486 but was a new and very different microarchitecture design from previous iterations. The P5 Pentium was the first superscalar x86 microarchitecture and the world's first superscalar microprocessor to be in mass productionmeaning it generally executes at least 2 instructions per clock mainly because of a design-first dual integer pipeline design previously thought impossible to implement on a CISC microarchitecture. Additional features include a faster floating-point unit, wider data bus, separate code and data caches, and many other techniques and features to enhance performance and support security, encryption, and multiprocessing, for workstations and servers when compared to the next best previous industry standard proces ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Coprocessor

A coprocessor is a computer processor used to supplement the functions of the primary processor (the CPU). Operations performed by the coprocessor may be floating-point arithmetic, graphics, signal processing, string processing, cryptography or I/O interfacing with peripheral devices. By offloading processor-intensive tasks from the main processor, coprocessors can accelerate system performance. Coprocessors allow a line of computers to be customized, so that customers who do not need the extra performance do not need to pay for it. Functionality Coprocessors vary in their degree of autonomy. Some (such as FPUs) rely on direct control via coprocessor instructions, embedded in the CPU's instruction stream. Others are independent processors in their own right, capable of working asynchronously; they are still not optimized for general-purpose code, or they are incapable of it due to a limited instruction set focused on accelerating specific tasks. It is common for these to be d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

80486

The Intel 486, officially named i486 and also known as 80486, is a microprocessor. It is a higher-performance follow-up to the Intel 386. The i486 was introduced in 1989. It represents the fourth generation of binary compatible CPUs following the 8086 of 1978, the Intel 80286 of 1982, and 1985's i386. It was the first tightly- pipelined x86 design as well as the first x86 chip to include more than one million transistors. It offered a large on-chip cache and an integrated floating-point unit. A typical 50 MHz i486 executes around 40 million instructions per second (MIPS), reaching 50 MIPS peak performance. It is approximately twice as fast as the i386 or i286 per clock cycle. The i486's improved performance is thanks to its five-stage pipeline with all stages bound to a single cycle. The enhanced FPU unit on the chip was significantly faster than the i387 FPU per cycle. The intel 80387 FPU ("i387") was a separate, optional math coprocessor that was installed in a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Intel 80486

The Intel 486, officially named i486 and also known as 80486, is a microprocessor. It is a higher-performance follow-up to the Intel 386. The i486 was introduced in 1989. It represents the fourth generation of binary compatible CPUs following the 8086 of 1978, the Intel 80286 of 1982, and 1985's i386. It was the first tightly- pipelined x86 design as well as the first x86 chip to include more than one million transistors. It offered a large on-chip cache and an integrated floating-point unit. A typical 50 MHz i486 executes around 40 million instructions per second (MIPS), reaching 50 MIPS peak performance. It is approximately twice as fast as the i386 or i286 per clock cycle. The i486's improved performance is thanks to its five-stage pipeline with all stages bound to a single cycle. The enhanced FPU unit on the chip was significantly faster than the i387 FPU per cycle. The intel 80387 FPU ("i387") was a separate, optional math coprocessor that was installed ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

64-bit

In computer architecture, 64-bit integers, memory addresses, or other data units are those that are 64 bits wide. Also, 64-bit CPUs and ALUs are those that are based on processor registers, address buses, or data buses of that size. A computer that uses such a processor is a 64-bit computer. From the software perspective, 64-bit computing means the use of machine code with 64-bit virtual memory addresses. However, not all 64-bit instruction sets support full 64-bit virtual memory addresses; x86-64 and ARMv8, for example, support only 48 bits of virtual address, with the remaining 16 bits of the virtual address required to be all 0's or all 1's, and several 64-bit instruction sets support fewer than 64 bits of physical memory address. The term ''64-bit'' also describes a generation of computers in which 64-bit processors are the norm. 64 bits is a word size that defines certain classes of computer architecture, buses, memory, and CPUs and, by extension, the software that ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |