
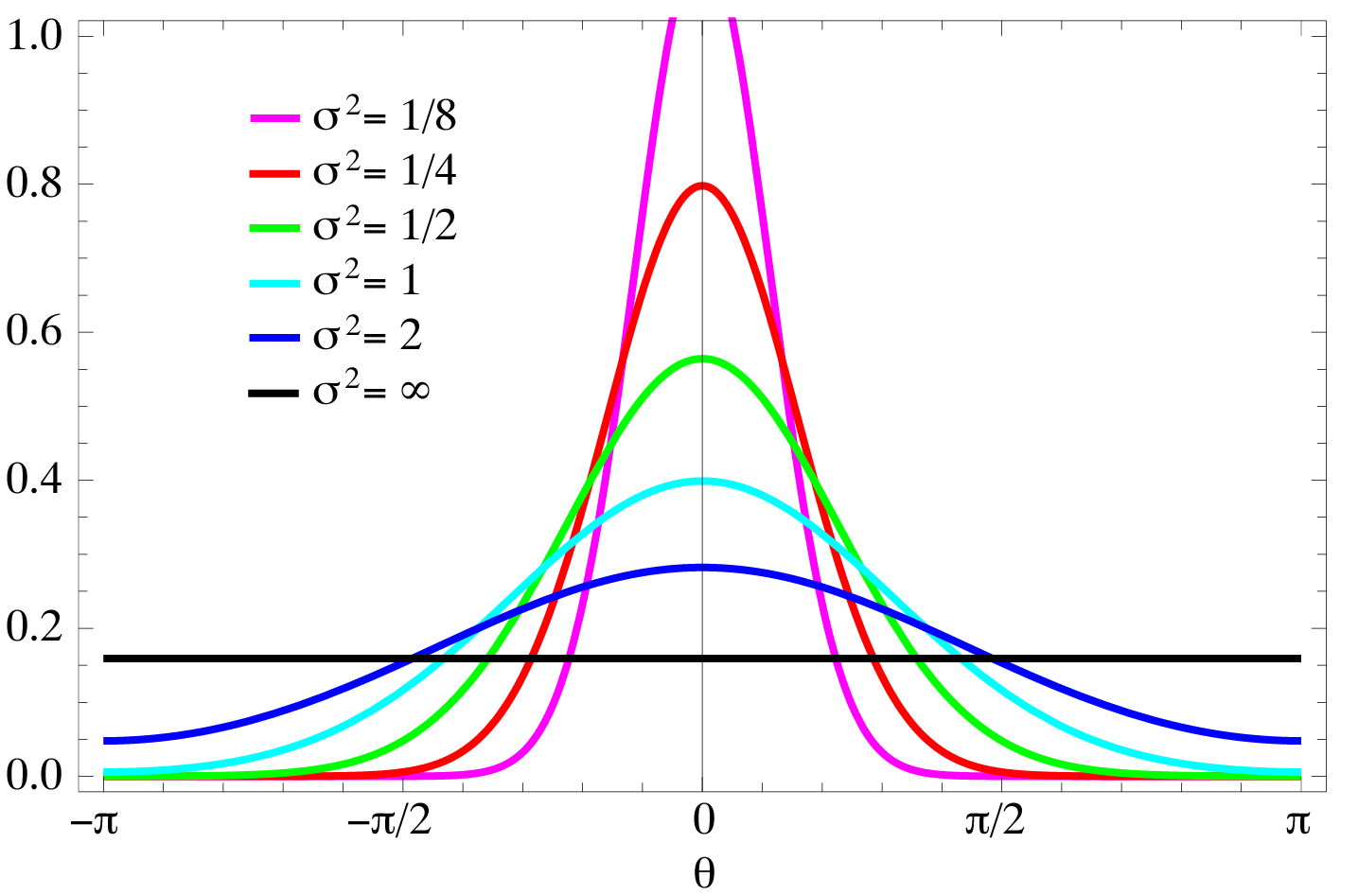

Wrapped Normal

In probability theory and directional statistics, a wrapped normal distribution is a wrapped probability distribution that results from the "wrapping" of the normal distribution around the unit circle. It finds application in the theory of Brownian motion and is a solution to the heat equation for periodic boundary conditions. It is closely approximated by the von Mises distribution, which, due to its mathematical simplicity and tractability, is the most commonly used distribution in directional statistics.

Definition

The probability density function of the wrapped normal distribution is

: f_{WN}(\theta;\mu,\sigma)=\frac{1}{\sigma\sqrt{2\pi}} \sum_{k=-\infty}^{\infty} \exp \left[\frac{-(\theta - \mu + 2\pi k)^2}{2 \sigma^2} \right]

where ''μ'' and ''σ'' are the mean and standard deviation of the unwrapped distribution, respectively. Expressing the above density function in terms of the characteristic function of the normal distribution yields:

: f_{WN}(\theta;\mu,\sigma)=\frac{1}{2\pi}\sum_{n=-\infty}^\infty e^{-\sigma^2 n^2/2+in(\theta-\mu)} =\frac{1}{2\pi}\vartheta\left(\frac{\theta-\mu}{2\pi},\frac{i\sigma^2}{2\pi}\right) ,

where \vartheta(\theta,\tau ...
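
As a rough illustration of the two forms of the wrapped normal density, the sketch below evaluates both the direct sum of shifted normal densities and the Fourier (characteristic-function) series, and compares them. The function names and truncation limits are illustrative assumptions, not part of the source. The Fourier form is written with cosines, which follows from pairing the terms for n and -n in the complex series.

```python
import math


def wrapped_normal_pdf_sum(theta, mu, sigma, terms=20):
    """Direct wrapping: sum normal densities shifted by 2*pi*k, k = -terms..terms."""
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return norm * sum(
        math.exp(-((theta - mu + 2.0 * math.pi * k) ** 2) / (2.0 * sigma ** 2))
        for k in range(-terms, terms + 1)
    )


def wrapped_normal_pdf_fourier(theta, mu, sigma, terms=50):
    """Fourier form: (1 + 2 * sum_{n>=1} exp(-sigma^2 n^2 / 2) cos(n (theta - mu))) / (2 pi)."""
    series = sum(
        math.exp(-0.5 * sigma ** 2 * n ** 2) * math.cos(n * (theta - mu))
        for n in range(1, terms + 1)
    )
    return (1.0 + 2.0 * series) / (2.0 * math.pi)


if __name__ == "__main__":
    # Both truncations should agree closely for a moderate sigma.
    for sigma in (0.8, 2.0):
        a = wrapped_normal_pdf_sum(0.3, 0.0, sigma)
        b = wrapped_normal_pdf_fourier(0.3, 0.0, sigma)
        print(sigma, a, b, abs(a - b))
```

The direct sum converges quickly when σ is small, while the Fourier series converges quickly when σ is large, so in practice one might pick the form according to σ. The default truncation limits here are only adequate for moderate σ.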

Characteristic Function (Probability Theory)

In probability theory and statistics, the characteristic function of any real-valued random variable completely defines its probability distribution. If a random variable admits a probability density function, then the characteristic function is the Fourier transform of the probability density function. Thus it provides an alternative route to analytical results compared with working directly with probability density functions or cumulative distribution functions. There are particularly simple results for the characteristic functions of distributions defined by the weighted sums of random variables. In addition to univariate distributions, characteristic functions can be defined for vector- or matrix-valued random variables, and can also be extended to more generic cases. The characteristic function always exists when treated as a function of a real-valued argument, unlike the moment-generating function. There are relations between the behavior of the characteristic function of a ...

Continuous Distributions

Continuity or continuous may refer to:

Mathematics

* Continuity (mathematics), the opposing concept to discreteness; common examples include
** Continuous probability distribution or random variable in probability and statistics
** Continuous game, a generalization of games used in game theory
** Law of Continuity, a heuristic principle of Gottfried Leibniz
* Continuous function, in particular:
** Continuity (topology), a generalization to functions between topological spaces
** Scott continuity, for functions between posets
** Continuity (set theory), for functions between ordinals
** Continuity (category theory), for functors
** Graph continuity, for payoff functions in game theory
* Continuity theorem may refer to one of two results:
** Lévy's continuity theorem, on random variables
** Kolmogorov continuity theorem, on stochastic processes
* In geometry:
** Parametric continuity, for parametrised curves
** Geometric continuity, a concept primarily applied to the conic secti ...

Von Mises Distribution

In probability theory and directional statistics, the von Mises distribution (also known as the circular normal distribution or Tikhonov distribution) is a continuous probability distribution on the circle. It is a close approximation to the wrapped normal distribution, which is the circular analogue of the normal distribution. A freely diffusing angle \theta on a circle is a wrapped normally distributed random variable with an unwrapped variance that grows linearly in time. On the other hand, the von Mises distribution is the stationary distribution of a drift and diffusion process on the circle in a harmonic potential, i.e. with a preferred orientation. The von Mises distribution is the maximum entropy distribution for circular data when the real and imaginary parts of the first circular moment are specified. The von Mises distribution is a special case of the von Mises–Fisher distribution on the ''N''-dimensional sphere. Definition The von Mises probability density funct ...

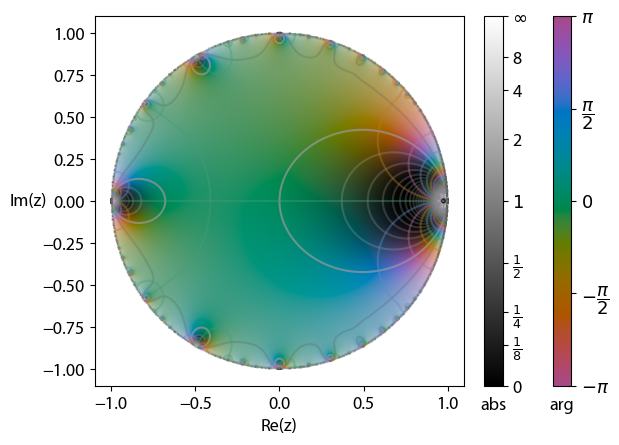

Wrapped Cauchy Distribution

In probability theory and directional statistics, a wrapped Cauchy distribution is a wrapped probability distribution that results from the "wrapping" of the Cauchy distribution around the unit circle. The Cauchy distribution is sometimes known as a Lorentzian distribution, and the wrapped Cauchy distribution may sometimes be referred to as a wrapped Lorentzian distribution. The wrapped Cauchy distribution is often found in the field of spectroscopy where it is used to analyze diffraction patterns (e.g. see Fabry–Pérot interferometer).

Description

The probability density function of the wrapped Cauchy distribution is:

: f_{WC}(\theta;\mu,\gamma)=\sum_{n=-\infty}^\infty \frac{\gamma}{\pi(\gamma^2+(\theta-\mu+2\pi n)^2)}\qquad -\pi<\theta<\pi

where \gamma is the scale factor and \mu is the peak position of the "unwrapped" distribution. Expressing the above pdf in terms of the ...

Dirac Comb

In mathematics, a Dirac comb (also known as shah function, impulse train or sampling function) is a periodic function with the formula

: \operatorname{Ш}_{T}(t) \ := \sum_{k=-\infty}^{\infty} \delta(t - k T)

for some given period T. Here ''t'' is a real variable and the sum extends over all integers ''k''. The Dirac delta function \delta and the Dirac comb are tempered distributions. The graph of the function resembles a comb (with the \delta's as the comb's ''teeth''), hence its name and the use of the comb-like Cyrillic letter sha (Ш) to denote the function. The symbol \operatorname{Ш}(t), where the period is omitted, represents a Dirac comb of unit period. This implies

: \operatorname{Ш}_{T}(t) \ = \frac{1}{T}\operatorname{Ш}\left(\frac{t}{T}\right).

Because the Dirac comb function is periodic, it can be represented as a Fourier series based on the Dirichlet kernel:

: \operatorname{Ш}_{T}(t) = \frac{1}{T}\sum_{n=-\infty}^{\infty} e^{i 2 \pi n \frac{t}{T}}.

The Dirac comb function allows one to represent both continuous and discrete phenomena, such as sampling and al ...

Fourier Series

A Fourier series is a summation of harmonically related sinusoidal functions, also known as components or harmonics. The result of the summation is a periodic function whose functional form is determined by the choices of cycle length (or ''period''), the number of components, and their amplitudes and phase parameters. With appropriate choices, one cycle (or ''period'') of the summation can be made to approximate an arbitrary function in that interval (or the entire function if it too is periodic). The number of components is theoretically infinite, in which case the other parameters can be chosen to cause the series to converge to almost any ''well behaved'' periodic function (see Pathological and Dirichlet–Jordan test). The components of a particular function are determined by ''analysis'' techniques described in this article. Sometimes the components are known first, and the unknown function is ''synthesized'' by a Fourier series. Such is the case of a discrete-ti ...

Euler Function

In mathematics, the Euler function is given by

: \phi(q)=\prod_{k=1}^\infty (1-q^k),\quad |q|<1.

... (OEIS A000203). On account of the identity \sum_{d|n} d = \sum_{d|n} \frac{n}{d}, this may also be written as

: \ln(\phi(q)) = -\sum_{n=1}^\infty \frac{q^n}{n} \sum_{d|n} d.

Also if a,b\in\mathbb{R}^+ and ab=\pi^2, then

: a^{1/4}e^{-a/12}\phi(e^{-2a})=b^{1/4}e^{-b/12}\phi(e^{-2b}).

Special values

The next identities come from Ramanujan's Notebooks:

: \phi(e^{-\pi})=\frac{e^{\pi/24}\Gamma\left(\frac14\right)}{2^{7/8}\pi^{3/4}}

: \phi(e^{-2\pi})=\frac{e^{\pi/12}\Gamma\left(\frac14\right)}{2\pi^{3/4}}

: \phi(e^{-4\pi})=\frac{e^{\pi/6}\Gamma\left(\frac14\right)}{2^{11/8}\pi^{3/4}}

: \phi(e^{-8\pi})=\frac{e^{\pi/3}\Gamma\left(\frac14\right)}{2^{29/16}\pi^{3/4}}(\sqrt{2}-1)^{1/4}

Using the pentagonal number theorem, exchanging sum and integral, and then invoking complex-analytic methods, one derives

: \int_0^1\phi(q)\,\mathrm{d}q = \frac{8 \sqrt{\frac{3}{23}} \pi \sinh \left(\frac{\sqrt{23}\pi}{6}\right)}{2 \cosh \left(\frac{\sqrt{23}\pi}{3}\right)-1}.
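
The product definition of the Euler function can be checked numerically against the pentagonal number theorem expansion, \phi(q) = \sum_{n=-\infty}^{\infty} (-1)^n q^{(3n^2-n)/2}. The following is a minimal sketch under stated assumptions: the function names and truncation limits are illustrative, and the closed form used for \phi(e^{-\pi}) is the standard Gamma-function expression e^{\pi/24}\Gamma(1/4)/(2^{7/8}\pi^{3/4}), which is supplied here rather than taken from a legible formula in the text.

```python
import math


def euler_phi_product(q, terms=200):
    """Truncated infinite product prod_{k=1}^{terms} (1 - q^k), for |q| < 1."""
    prod = 1.0
    for k in range(1, terms + 1):
        prod *= 1.0 - q ** k
    return prod


def euler_phi_pentagonal(q, terms=30):
    """Truncated pentagonal number series sum_n (-1)^n q^{(3n^2 - n)/2}."""
    total = 0.0
    for n in range(-terms, terms + 1):
        sign = 1.0 if n % 2 == 0 else -1.0
        exponent = (3 * n * n - n) // 2  # n(3n - 1) is always even
        total += sign * q ** exponent
    return total


if __name__ == "__main__":
    q = 0.5
    print(euler_phi_product(q), euler_phi_pentagonal(q))

    # Compare phi(e^{-pi}) with the assumed Gamma-function closed form.
    closed_form = (
        math.exp(math.pi / 24) * math.gamma(0.25)
        / (2 ** (7 / 8) * math.pi ** 0.75)
    )
    print(euler_phi_product(math.exp(-math.pi)), closed_form)
```

The series has far fewer non-negligible terms than the product because its exponents grow quadratically, which is one reason the pentagonal form is convenient for computation.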

Entropy (Information Theory)

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable X, which takes values in the alphabet \mathcal{X} and is distributed according to p: \mathcal{X}\to[0, 1]:

: \mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)],

where \Sigma denotes the sum over the variable's possible values. The choice of base for \log, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication", and is also referred to as Shannon entropy. Shannon's theory defi ...
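
The definition of entropy as -\sum p(x) \log p(x) translates directly into a few lines of code. The sketch below is illustrative only: the function name `shannon_entropy` and its `base` parameter are assumptions made for this example, and outcomes with zero probability are skipped, following the usual convention that 0 log 0 is taken as 0.

```python
import math


def shannon_entropy(probs, base=2.0):
    """Entropy of a discrete distribution given as a sequence of probabilities.

    base=2 gives bits, base=math.e gives nats, base=10 gives hartleys.
    """
    if any(p < 0 for p in probs) or abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probs must be non-negative and sum to 1")
    # Zero-probability outcomes contribute nothing (0 * log 0 := 0).
    return -sum(p * math.log(p, base) for p in probs if p > 0)


if __name__ == "__main__":
    print(shannon_entropy([0.5, 0.5]))              # fair coin, in bits
    print(shannon_entropy([0.25] * 4))              # uniform over 4 outcomes, in bits
    print(shannon_entropy([0.5, 0.5], base=math.e))  # fair coin, in nats
    print(shannon_entropy([0.9, 0.1]))              # biased coin, in bits
```

A fair coin carries one bit, and any bias lowers the entropy because the outcome becomes more predictable.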

A Course of Modern Analysis

''A Course of Modern Analysis: an introduction to the general theory of infinite processes and of analytic functions; with an account of the principal transcendental functions'' (colloquially known as Whittaker and Watson) is a landmark textbook on mathematical analysis written by Edmund T. Whittaker and George N. Watson, first published by Cambridge University Press in 1902. The first edition was Whittaker's alone, but later editions were co-authored with Watson. History The first, second, third, and fourth editions were published in 1902, 1915, 1920, and 1927, respectively. Since then, it has been reprinted continuously and is still in print today. A revised, expanded and digitally reset fifth edition, edited by Victor H. Moll, was published in 2021. The book is notable for being the standard reference and textbook for a generation of Cambridge mathematicians including Littlewood and Godfrey H. Hardy. Mary L. Cartwright studied it as preparation for her final hono ...

Jacobi Triple Product

In mathematics, the Jacobi triple product is the mathematical identity:

: \prod_{m=1}^\infty \left( 1 - x^{2m}\right) \left( 1 + x^{2m-1} y^2\right) \left( 1 +\frac{x^{2m-1}}{y^2}\right) = \sum_{n=-\infty}^\infty x^{n^2} y^{2n},

for complex numbers ''x'' and ''y'', with |''x''| < 1 and ''y'' ≠ 0. It was introduced by Jacobi in his work ''Fundamenta Nova Theoriae Functionum Ellipticarum''. The Jacobi triple product identity is the Macdonald identity for the affine root system of type ''A''1, and is the Weyl denominator formula for the corresponding affine Kac–Moody algebra.

Properties

The basis of Jacobi's proof relies on Euler's pentagonal number theorem, which is itself a specific case of the Jacobi triple product identity. Let x=q\sqrt q and y^2=-\sqrt{q}. Then we have

: \phi(q) = \prod_{m=1}^\infty \left(1-q^m \right) = \sum_{n=-\infty}^\infty (-1)^n q^{\frac{3n^2-n}{2}}.

The Jacobi triple product also allows the Jacobi theta function to be written as an infinite product as follows: Let x=e^{i\pi \tau} and y=e^{i\pi z}. Then the Jacobi theta function

: \vartheta(z; ...
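
The Jacobi triple product identity, \prod_{m\ge1}(1-x^{2m})(1+x^{2m-1}y^2)(1+x^{2m-1}/y^2) = \sum_n x^{n^2} y^{2n}, lends itself to a quick numerical sanity check. The sketch below truncates both sides for real x and y; the function names, the truncation limits, and the sample point are illustrative assumptions, and a numerical match at a few points is of course not a proof.

```python
def triple_product_lhs(x, y, terms=200):
    """Truncated product over m = 1..terms of the three factors."""
    prod = 1.0
    for m in range(1, terms + 1):
        odd_power = x ** (2 * m - 1)
        prod *= (1.0 - x ** (2 * m)) * (1.0 + odd_power * y ** 2) * (1.0 + odd_power / y ** 2)
    return prod


def triple_product_rhs(x, y, terms=40):
    """Truncated bilateral series sum_{n=-terms}^{terms} x^(n^2) y^(2n)."""
    return sum(x ** (n * n) * y ** (2 * n) for n in range(-terms, terms + 1))


if __name__ == "__main__":
    x, y = 0.3, 1.2  # requires |x| < 1 and y != 0
    lhs = triple_product_lhs(x, y)
    rhs = triple_product_rhs(x, y)
    print(lhs, rhs, abs(lhs - rhs))
```

The series side converges very quickly because the exponent n^2 grows quadratically, so a modest truncation already reproduces the product to near machine precision for moderate |x|.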

Wrapped Distribution

In probability theory and directional statistics, a wrapped probability distribution is a continuous probability distribution that describes data points that lie on a unit ''n''-sphere. In one dimension, a wrapped distribution consists of points on the unit circle. If \phi is a random variate in the interval (-\infty,\infty) with probability density function (PDF) p(\phi), then z = e^{i\phi} is a circular variable distributed according to the wrapped distribution p_{wz}(\theta) and \theta = \arg(z) is an angular variable in the interval (-\pi,\pi] distributed according to the wrapped distribution p_w(\theta). Any probability density function p(\phi) on the line can be "wrapped" around the circumference of a circle of unit radius. That is, the PDF of the wrapped variable

: \theta=\phi \mod 2\pi

in some interval of length 2\pi is

: p_w(\theta)=\sum_{k=-\infty}^\infty p(\theta+2\pi k),

which is a periodic sum of period 2\pi. The preferred interval is generally (-\pi<\theta\le\pi) for which ...
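
The wrapping construction can be sketched generically: reduce a real value to an angle in (-\pi, \pi], and build the wrapped density as a truncated periodic sum p_w(\theta) = \sum_k p(\theta + 2\pi k). Everything named below (`wrap_angle`, `wrap_pdf`, and the Laplace density used as a test case) is a hypothetical choice for illustration and does not come from the source.

```python
import math

TWO_PI = 2.0 * math.pi


def wrap_angle(phi):
    """Reduce a real value phi to an angle congruent to it modulo 2*pi in (-pi, pi]."""
    return phi - TWO_PI * math.ceil((phi - math.pi) / TWO_PI)


def wrap_pdf(pdf, theta, terms=10):
    """Truncated periodic sum of a line density: sum_{k=-terms}^{terms} pdf(theta + 2*pi*k)."""
    return sum(pdf(theta + TWO_PI * k) for k in range(-terms, terms + 1))


def laplace_pdf(x):
    """Example line density (assumed for illustration): standard Laplace, 0.5 * exp(-|x|)."""
    return 0.5 * math.exp(-abs(x))


if __name__ == "__main__":
    print(wrap_angle(TWO_PI + 0.5))  # close to 0.5
    print(wrap_angle(4.0))           # close to 4 - 2*pi

    # Midpoint-rule check that the wrapped density integrates to about 1 on (-pi, pi].
    n = 2000
    h = TWO_PI / n
    total = h * sum(wrap_pdf(laplace_pdf, -math.pi + (j + 0.5) * h) for j in range(n))
    print(total)
```

The truncation limit needed in `wrap_pdf` depends on how quickly the tails of the line density decay; heavy-tailed densities such as the Cauchy would need many more terms or a closed form. Inputs lying exactly on the boundary of the interval may land on either side of it because of floating-point rounding.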